HuggingFace has been on top of every NLP (Natural Language Processing) practitioner’s mind with its transformers and datasets libraries. In 2020, we saw some major upgrades to both of these libraries, along with the introduction of the model hub. For most people, “using BERT” is synonymous with using the version with weights available in HF’s transformers library.

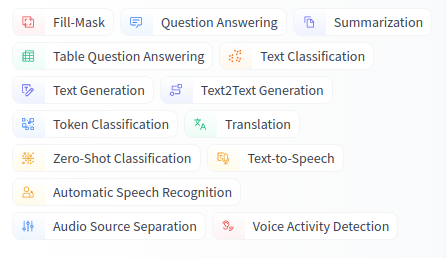

In this post, I’ll highlight some of the models and tasks that the HuggingFace ecosystem (transformers + datasets + model hub) now supports, but which are not as popular as BERT.

Blenderbot was launched in 2020 by Facebook AI as an open-source, state-of-the-art chatbot. Its claim is to blend good conversational skills, such as empathy, knowledge and personality, into a single system. Blending these skills is a difficult challenge because the system must be able to switch between different tasks when appropriate, for example adjusting its tone when a person changes from joking to serious. The instructions to load this model are available here.

LXMERT is a transformer framework for learning vision-and-language connections. It’s used for visual question answering, where answers have to be given based on an image. HuggingFace, however, only provides the model implementation; the image feature extraction has to be done separately. This demo notebook walks through an end-to-end usage example.

Given this image as input, the LXMERT model could be asked questions like “What is the shape of the monitor?” (Rectangle) or “Where is the cat?” (Desk).

Dense Passage Retrieval (DPR) is a set of tools and models for state-of-the-art open-domain Q&A research. It was open-sourced by Facebook, and the pretrained models available here are trained on Google’s Natural Questions dataset. These models retrieve the passages relevant to a question from a set of available passages, from which the answer can then be extracted. To perform generic Q&A over Wikipedia articles, a Wikipedia dataset with precomputed embeddings is available on HF. Retrieving from a custom dataset is also straightforward with the datasets library: it can be done by adding a FAISS index to the dataset.
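To make the retrieval step concrete, here is a toy, pure-Python sketch of the scoring DPR uses: the question and each passage are encoded as dense vectors, and passages are ranked by the inner (dot) product of the two. The three-dimensional vectors below are made up for illustration and stand in for the outputs of DPR’s question and passage encoders; no model is loaded.

```python
from typing import List, Tuple


def dot(u: List[float], v: List[float]) -> float:
    """Inner product: the relevance score DPR uses between question and passage."""
    return sum(a * b for a, b in zip(u, v))


def retrieve(question_vec: List[float],
             passage_vecs: List[List[float]],
             k: int = 2) -> List[Tuple[int, float]]:
    """Return (passage index, score) pairs for the k highest-scoring passages."""
    scored = [(i, dot(question_vec, p)) for i, p in enumerate(passage_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Made-up passages and embeddings (real DPR embeddings are 768-dimensional).
passages = [
    "Paris is the capital of France.",
    "The Nile is a river in Africa.",
    "France borders Spain and Italy.",
]
passage_vecs = [
    [0.9, 0.1, 0.0],
    [0.0, 0.2, 0.9],
    [0.6, 0.4, 0.1],
]
# Hypothetical embedding of "What is the capital of France?"
question_vec = [1.0, 0.2, 0.0]

for idx, score in retrieve(question_vec, passage_vecs, k=2):
    print(f"{score:.2f}  {passages[idx]}")
```

A FAISS index performs the same top-k inner-product search, but efficiently over millions of passages; in the datasets library this is exposed through methods such as `Dataset.add_faiss_index` and `Dataset.get_nearest_examples`.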

Retrieval-augmented generation (RAG) models by Facebook build on top of Dense Passage Retrieval (DPR) models by combining them with a seq2seq model. Roughly speaking, the retrieved passages are used to come up with a more human-readable, generated answer. HuggingFace has an interactive Streamlit-based demo to try the model out. The demo contains an example of question generation as well.
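How RAG combines retrieval with generation can be sketched numerically. In the RAG-Sequence variant described in the RAG paper, the probability of an answer y is marginalized over the top retrieved passages z: p(y|x) ≈ Σ_z p(z|x) · p(y|x, z), where p(z|x) is a softmax over the retriever’s scores. This is a simplified scoring sketch, not the actual decoding procedure, and the retrieval scores and generator probabilities are invented numbers; in transformers the real models are `RagSequenceForGeneration` and `RagTokenForGeneration`, which this code does not call.

```python
import math
from typing import Dict, List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: turns retriever scores into p(z|x)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rag_sequence_prob(retrieval_scores: List[float],
                      generator_probs: List[float]) -> float:
    """p(y|x) = sum over retrieved passages z of p(z|x) * p(y|x, z)."""
    doc_probs = softmax(retrieval_scores)
    return sum(pz * py for pz, py in zip(doc_probs, generator_probs))


# Invented retriever scores for two passages retrieved for one question.
retrieval_scores = [2.0, 0.0]
# Invented p(answer | question, passage) from the seq2seq generator,
# one value per retrieved passage.
candidates: Dict[str, List[float]] = {
    "Paris": [0.6, 0.1],
    "Lyon": [0.1, 0.5],
}

for answer, probs in candidates.items():
    print(answer, round(rag_sequence_prob(retrieval_scores, probs), 3))

best = max(candidates, key=lambda a: rag_sequence_prob(retrieval_scores, candidates[a]))
print("best answer:", best)
```

The point of the marginalization is that an answer supported by a highly ranked passage wins even if a lower-ranked passage would have favoured a different answer.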

TAPAS was introduced by Google as an architecture for finding answers in tabular data, where the input queries are given in plain text rather than in any particular structured format.

TAPAS models are fine-tuned on many different datasets, and many community-provided and Google-provided models are available on the HF model hub. Last year, we also saw this kind of magic performed by OpenAI’s GPT-3, where the model converted English queries into SQL queries.
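TAPAS models fine-tuned with aggregation (for example on WikiTableQuestions) predict two things: a probability for each cell of being part of the answer, and an aggregation operator (NONE, SUM, AVERAGE or COUNT) to apply to the selected cells. The sketch below shows only that final post-processing step in plain Python. The table, the cell probabilities, the chosen operator and the 0.5 selection threshold are assumptions made up for illustration; no model is run.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Aggregation operators TAPAS can predict; NONE returns the selected cells as-is.
AGGREGATIONS: Dict[str, Callable[[List[str]], object]] = {
    "NONE": lambda cells: cells,
    "SUM": lambda cells: sum(float(c) for c in cells),
    "AVERAGE": lambda cells: mean([float(c) for c in cells]),
    "COUNT": lambda cells: len(cells),
}


def answer_from_table(table: List[List[str]],
                      cell_probs: Dict[Tuple[int, int], float],
                      operator: str,
                      threshold: float = 0.5) -> object:
    """Select cells whose probability exceeds the threshold, then aggregate them."""
    selected = [table[row][col]
                for (row, col), p in cell_probs.items() if p > threshold]
    return AGGREGATIONS[operator](selected)


# Made-up table: each row is [fruit, count].
table = [
    ["apples", "10"],
    ["pears", "4"],
    ["plums", "6"],
]
# Pretend model output for "How many fruits are there in total?":
# high probability for the three count cells, low for everything else.
cell_probs = {(0, 1): 0.9, (1, 1): 0.8, (2, 1): 0.7, (0, 0): 0.1}

print(answer_from_table(table, cell_probs, "SUM"))
print(answer_from_table(table, cell_probs, "COUNT"))
```

In transformers, the `table-question-answering` pipeline wraps this step for you and reports the selected cells together with the predicted aggregator.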

Paraphrasing is a technique I personally use for data augmentation. Paraphrasing models convert a given input sentence into another sentence while preserving (or attempting to preserve) the meaning of the original. Below is a sample paraphrasing example, which can be tried here.

Zero-shot classification is a neat technique that allows a model to choose from any given set of candidate classes without being trained on those classes. This technique was made very popular by GPT-3 and has also been available in HuggingFace’s toolkit for a while now. While the predictions are not as accurate as those of a custom-trained model, it is super helpful when labelled training data isn’t available or is difficult to collect. Various pretrained models can be tried here.
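One common way to do zero-shot classification, and the one behind HuggingFace’s `zero-shot-classification` pipeline, reuses a model trained on natural language inference (NLI): each candidate label is turned into a hypothesis such as “This example is {label}.”, the model scores whether the input text entails it, and the entailment scores are normalized across labels. The sketch below shows only that bookkeeping; the entailment logits are made-up numbers standing in for an NLI model’s output.

```python
import math
from typing import Dict, List, Tuple


def build_hypotheses(labels: List[str],
                     template: str = "This example is {}.") -> List[str]:
    """Turn each candidate label into an NLI hypothesis sentence."""
    return [template.format(label) for label in labels]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def rank_labels(entailment_logits: Dict[str, float]) -> List[Tuple[str, float]]:
    """Softmax the entailment logits across labels and sort labels by probability."""
    labels = list(entailment_logits)
    probs = softmax([entailment_logits[label] for label in labels])
    return sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)


text = "I booked a flight and a hotel for my trip to Lisbon."
labels = ["travel", "cooking", "dancing"]

for hypothesis in build_hypotheses(labels):
    print(f"premise: {text!r}  hypothesis: {hypothesis!r}")

# Made-up entailment logits an NLI model might assign to each (text, hypothesis) pair.
fake_logits = {"travel": 3.0, "cooking": 0.5, "dancing": -1.0}
for label, prob in rank_labels(fake_logits):
    print(f"{label}: {prob:.3f}")
```

The softmax across labels is what makes the scores sum to one when exactly one label is expected; when several labels may apply at once, the pipeline’s `multi_label=True` option instead scores each label independently (entailment versus contradiction).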