GENIE🧞 is our new suite of human-in-the-loop leaderboards for generative tasks — learn more about the approach and give it a try for your research.

Progress in NLP is usually measured by automatically computed metrics on shared benchmarks, such as the F1 score on the popular Stanford Question Answering Dataset (SQuAD). Leaderboards offer a way to record these evaluations over time and to encourage further research by providing standardized comparisons of new methods against the previous state-of-the-art approaches.

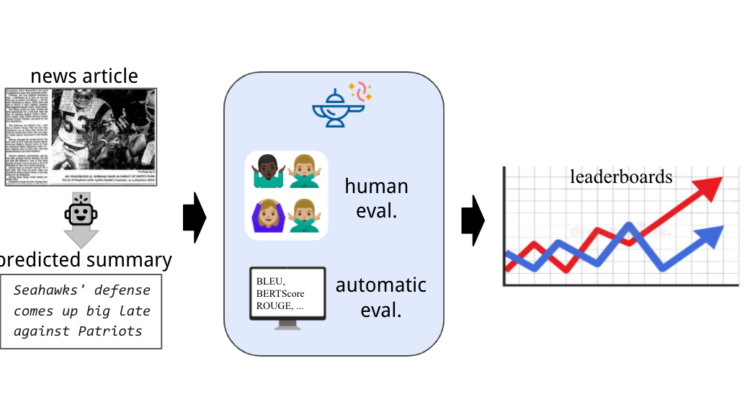

Researchers have created many successful leaderboards for NLP tasks that use evaluation metrics that closely correspond to what it actually means to do well at the task. For example, in classification or span-highlighting tasks, the evaluation can be as straightforward as matching the predicted annotations against reference annotations. Tasks that require text generation (e.g., summarization, translation, or abstractive QA), however, often lack such a gold standard for reliable, automated evaluation. Recent research found that popular metrics currently used for evaluating generative tasks such as BLEU and ROUGE tend to produce significantly different scores than would a human evaluating the same task. Text generation tasks are difficult to evaluate because the space of correct solutions is vast and difficult to quantify, so evaluating them successfully usually requires more complex, intelligent assessments like identifying paraphrases or entailments between the generated and reference text.

To address this difficult evaluation challenge we are releasing GENIE🧞, a new suite of human-in-the-loop leaderboards for generative tasks. When a researcher makes a leaderboard submission, GENIE automatically crowdsources human evaluations from Amazon Mechanical Turk. This novel evaluation approach standardizes human evaluations across datasets and facilitates controlled comparisons. Once the human evaluation is complete, GENIE ranks the models relative to previous submissions, just like other popular leaderboards.

The GENIE approach has several advantages:

- It standardizes high-quality human evaluation of generative tasks, which is currently done in a case-by-case manner with model developers using hard-to-compare approaches.

- It frees model developers from the burden of designing, building, and running crowdsourced human model evaluations. Making human evaluation quick and easy encourages more researchers to report this gold standard above and beyond imprecise automatic metrics such as BLEU and ROUGE.

- Genie provides researchers interested in either HCI for human evaluation or in automatic metric creation with a central, updating hub of model submissions and associated human-annotated evaluations.

Building a system to reliably run human evaluations over time and at a reasonable cost faces several hurdles. Efficient and reliable human feedback requires substantial effort in training annotators and designing crowdsourcing interfaces. Additionally, since human evaluations are inevitably noisy, it’s important to study the variability of evaluations elicited from humans. Our draft investigates these challenges and suggests approaches for dealing with them.

We hope you’ll find that GENIE is a useful tool for your own research. To benefit the community as much as possible, we’d like to expand GENIE beyond the initial set of 4 leaderboards. If you think GENIE can help your research, please try it out and let us know what you think!