Why Code, Trained Weights, & a Web GUI are the 3 necessary components of reproducible ML.

You’re probably aware that machine learning (ML) has a reproducibility problem. Hundreds of pre-prints and papers are published every week in the ML space, but too many can’t be replicated or validated [1]. As a result, they amount to little more than hype, and they undermine trust and sustainability in the field.

The reproducibility problem is not new, and efforts have been made to tackle it by enabling the sharing of code repositories and trained models. That said, anyone who has tried to replicate or validate an ML paper knows that it’s still surprisingly difficult and time-consuming. This is especially problematic when validation of a model requires reproducing it. How do you know if a model is even worth the effort required to replicate it, if you can’t quickly test it yourself?

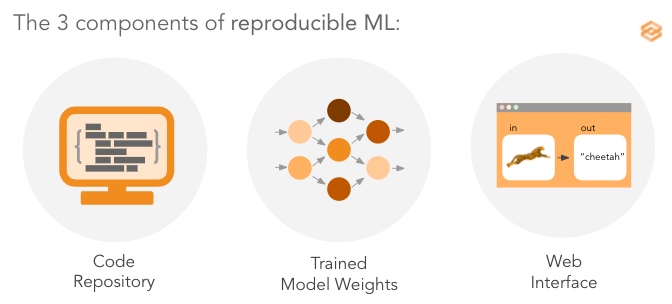

With this in mind, what can researchers do to meet the rising expectations of transparency and reproducibility? In this post, we’ll discuss why you should share (1) code, (2) pre-trained models, and (3) web interfaces, as well as the limitations of each.

The obvious first step to addressing the reproducibility problem is sharing the code that was used to train the model. In theory, this should allow other researchers to validate and expand on the published work, as it lays bare all the small but critical details of the training pipeline. However, using a repo to train a model is easier said than done, and often requires expensive engineering time and frustrating debugging.

It goes without saying that not all repositories are created equal. Organizations like Papers With Code have made it easy to link papers with the corresponding code, and have raised the standards of the linked repositories with resources like the ML Code Completeness Checklist. According to the Checklist, the standard adopted by the NeurIPS conference, repositories should specify dependencies, environment information, and training and evaluation scripts. This is lowering the barriers to publishing code, raising expectations for researchers, and improving transparency and reproducibility. Still, most papers don’t meet these expectations, which adds greatly to the legwork and guesswork required of replicators [2].
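As a rough illustration of what such a checklist asks for, the sketch below checks a repository's top-level files for the items named above. The file names in `CHECKS` are assumed conventions chosen for this example; they are not taken from the Checklist itself, and a real repository may organize things differently.

```python
from pathlib import Path

# Assumed, commonly used file names for each item (hypothetical, not official).
CHECKS = {
    "dependencies": ["requirements.txt", "environment.yml", "pyproject.toml"],
    "training script": ["train.py"],
    "evaluation script": ["eval.py", "evaluate.py"],
}


def audit_files(filenames):
    """Return {item: True/False} depending on whether any candidate file is present."""
    present = set(filenames)
    return {
        item: any(name in present for name in candidates)
        for item, candidates in CHECKS.items()
    }


def audit_repo(repo_dir="."):
    """Audit the files sitting directly in repo_dir (subdirectories are ignored)."""
    return audit_files(p.name for p in Path(repo_dir).iterdir() if p.is_file())
```

Running `audit_repo()` from the root of a cloned repository gives a quick, if crude, first impression of how much guesswork lies ahead.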

Even when a repository meets all of these requirements, replicators can be blocked by other obstacles. For example, many ML models are trained on restricted datasets, such as those containing sensitive patient information that can’t be made publicly available. Additionally, models produced by large organizations (like OpenAI’s GPT-3) can cost millions of dollars in computing power to train, an insurmountable hurdle for the average research lab [3].

So if sharing code isn’t enough, the reasonable next step would be to share the trained model itself. This eliminates the need to repeat training and only requires passing in an input, which at face value sounds straightforward. However, even with trained weights freely available, running inference is harder than it sounds. The difficulties extend beyond environment and versioning mismatches, which can be resolved by providing a containerized model (think Docker). There is still a lot of guesswork involved in figuring out how to preprocess data to feed into a model, and how to make sense of the output on the other side. For example, how should an input be preprocessed? What versions of processing libraries should be used? What class labels do the indices of an output array map to? These may sound like insignificant concerns, but these tiny details must be precisely replicated to get a model working. When it comes to validating a trained model, replicators face death by a thousand poorly defined cuts.
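One way to remove some of this guesswork is to ship the preprocessing constants and the label mapping alongside the weights. The sketch below is a minimal, hypothetical illustration: the metadata fields, the labels, and the `predict` wrapper are all assumptions made for this example, and `model` stands in for whatever callable actually runs the trained weights.

```python
# Hypothetical metadata that a model author could publish with the weights.
MODEL_CARD = {
    "pixel_scale": 255.0,      # raw inputs are expected in the range 0-255
    "mean": 0.5,               # normalization constants used during training
    "std": 0.25,
    "labels": ["cat", "dog"],  # output index -> class label
}


def preprocess(pixels, card=MODEL_CARD):
    """Scale raw values to [0, 1], then normalize with the training-time mean and std."""
    return [((p / card["pixel_scale"]) - card["mean"]) / card["std"] for p in pixels]


def postprocess(scores, card=MODEL_CARD):
    """Map the index of the highest score to its class label."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return card["labels"][best]


def predict(pixels, model, card=MODEL_CARD):
    """Run the full pipeline: preprocess, call the model, decode the output."""
    return postprocess(model(preprocess(pixels, card)), card)
```

With the constants and labels written down in one place, a replicator no longer has to infer them from the paper or reverse-engineer them from the training code.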

That said, sharing models (and specifying all the necessary documentation and code to run them) is still a good way to build trust. But there are many reasons why this is not always possible. The most obvious reason is financial: if an ML engineer is hoping to commercialize a model, it may not make sense to make it publicly available. Additionally, sharing a model exposes the creator to legal liability and loss of control; for example, the creator of a clinical diagnostics algorithm could be subject to regulation if the model ended up in the wrong hands and was used to treat patients [4]. Once a model is released into the world, its creator no longer controls what is done with it. Finally, there’s evidence to suggest that information about training data can be derived from model weights, compromising dataset privacy [5]. This is a problem when the dataset contains sensitive information, like genetic information or medical history, that must be protected.

Sharing a web interface to an internally hosted model can sidestep all of the above problems, allowing others to test a model without needing to access datasets, reproduce training, replicate preprocessing steps, or debug versioning and environment mismatches. Replicators can’t recreate or build on the algorithm with an interface alone, but this is an advantage if the goal is to protect IP.

Sharing a web interface has been made much easier by tools like Gradio, a Python library that automatically generates an interface for any function with just a few additional lines of code. The generated interfaces can be shared and used instantly. This allows researchers to verify for themselves that the results of a paper are representative, rather than just cherry-picked examples.
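To give a sense of how little code this takes, here is a minimal sketch that wraps a prediction function in a Gradio interface. The `classify` function is a toy stand-in invented for this example, not a real trained model, and `build_demo` is a hypothetical helper name; only `gr.Interface` and its `fn`, `inputs`, and `outputs` arguments come from Gradio.

```python
def classify(text):
    """Toy stand-in for a trained model: returns a label -> confidence mapping."""
    positive_words = {"good", "great", "excellent"}
    words = text.lower().split()
    hits = sum(word in positive_words for word in words)
    score = hits / len(words) if words else 0.0
    return {"positive": score, "negative": 1.0 - score}


def build_demo():
    """Wrap classify in a web interface with a text box in and a label display out."""
    # Imported here so that classify can be used and tested without Gradio installed.
    import gradio as gr

    return gr.Interface(fn=classify, inputs="text", outputs="label")
```

Calling `build_demo().launch(share=True)` starts a local server and asks Gradio for a temporary public link, so reviewers can try the model from a browser while the model itself stays on the author's machine.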

Web GUIs also enable validation by non-programmer domain experts, such as scientists and clinicians, since no coding is required to run the model. Easy-to-use interfaces allow for crowd-testing, incentivizing the development of models that work on real data, not just artificially curated datasets. This wider testing also catches biases, errors, and unpredictable results that may otherwise remain hidden. A hosted interface also addresses the liability problem: an algorithm can be shared publicly or privately without the model itself ever leaving the servers, and access can be restricted or taken down at the developer’s discretion.

To resolve the reproducibility problem, the culture of ML needs to adapt to rising expectations of transparency. In this post, we’ve discussed the advantages and limitations of sharing code, trained weights, and web GUIs. None of these components alone is enough, but together they enable public confidence and trust in ML.

[1] Z. Lipton and J. Steinhardt, Troubling Trends in Machine Learning Scholarship (2018), arXiv

[2] R. Stojnic, ML Code Completeness Checklist (2020), Papers With Code on Medium

[3] B. Dickson, The untold story of GPT-3 is the transformation of OpenAI (2020), Tech Talks

[4] S. McKinney, A. Karthikesalingam, D. Tse, C. J. Kelly, Y. Liu, G. S. Corrado and S. Shetty, Reply to: Transparency and reproducibility in artificial intelligence (2020), Nature

[5] K. Wiggers, AI has a privacy problem, but these techniques could fix it (2019), VentureBeat