Recently, a neighbor from my building confused me with my sister for the 5th time. She says that I and my sister look very much alike for her to the point of confusing us. A week ago I finally submitted a project for an AI subject I’m taking while doing Erasmus in Budapest.

In this project, now article, I create a facial recognition system from scratch with a Convolutional Neural Network(CNN) structure using Keras, to recognize me and my sister.

Later on, in this project and Notebook, I build another model using the Transfer Learning technique, to reach higher validation accuracy scores and when testing with new and different photos, as well as when using the web camera with different lighting.

In this tutorial I create two models: One from scratch and another one with a pre-trained model

All along, for both of the models, applying Data Augmentation as well. With this technique, I was able to augment data up to 2794 photos, by randomly adding or removing brightness, saturation, and more angles of the faces by flipping them and rotating them. It’s because of this Data Augmentation that I could create a more robust model.

From the augmented resulting images, 1871 were used to training the model and 923 for the test validation set.

The model is tested in different types of photos taken from different cameras/phones, different times, and different looks/make-up. With a confusion matrix, it’s possible to see how the models can have more precision when predicting one of the classes (in this case either for my sister’s face or mine).

The models are saved as “.h5” files for easy portability, as well as the complete dataset of images created are compressed as “.npz” files for the same reason.

At the end of this project, I was able to make the model recognize me even when using a face mask. Very interesting for me.

You can find the full code in my Github link at the end of the article.

If you are interested in Computer vision, you can learn the hottest and most useful techniques in Image processing that you can apply across almost all industries, including some techniques used in this project in my course “Image Processing with Python” at Datacamp.

The good thing about taking selfies is that for this case, of building a facial recognition system, it’s faster to do if you already count with a relatively good amount of photos. In my case and of my sister we do count with photos of ourselves, although some of them have filters applied.

Some photos selected for the dataset were a bit of full-body type, so a face detection system was required as well, this is going to be more explicitly detailed later on in this document and the project.

After picking several photos of me and my sister, I realized even the face detector could fail in some of the photos, leaving the dataset to be a lot smaller than was planned. I came across an online tool from Google “Teachable Machine”, that allows people to train and test a model locally in the navigator and to generate photo samples from video, that way I could generate hundreds of photos to complement the dataset.

In the case of my face, 166 samples were extracted from my photos, while I was able to generate another 541 face samples from photos taken with the online tool. While for my sister, 105 face photos were extracted from her photos, and 585 faces from the photos taken with the tool.

The complete dataset of faces was saved as a compressed “.npz” file, for portability.

For each of the images collected or to test with, face detection it’s required for later on passing the face in specif shape and size to the model.

You can learn how to detect faces more in detail in my course “Image Processing with Python” at Datacamp.

I knew about the Scikit-image library, also from the course in where we learned to use it for Image processing: Template match, convert images to grayscale, etc. I used the Cascade classifier model that’s provided as a Class by Scikit-Image. By training it with a dataset of front faces also provided by the module as an XML file, the detector was ready to be configured and then used.

I created functions to extract the faces from the photos once these were detected and also showed by red boxes in the original photos.

It’s very comfortable since it’s a package we have already used, and that Google Colab already has installed and imported. It’s possible to create another detector for profile faces, then for me to extract faces in photos, two detectors were needed for each photo.

The detector was only able to detect front faces, but as soon as the faces were taken in different angles, like ¾ or profile angles, it didn’t detect faces so I was unable to extract them with the function I created for that.

Since the Scikit-Image face detection technique is really good for front images, we also want the model to recognize faces in profile and other angles from the photos.

For that, I tried and compared MTCNN. A Keras implementation for face detection. This is capable with only one detector, find and later on extract faces from more angles: from profile angles, ¾, front, etc.

It’s comfortable to work with once it’s installed in the Colab/notebook environment. Really good when detecting faces and it’s possible to select a defined image size to be the same that the model will have as input: (224,224), 224×224 pixels with RGB channels. The model will have as input the shape of (224,224,3).

It is required to be installed beforehand.

An important effort was made to join all the detected faces coming from different sources, and organized them in folders with the corresponding label, to then prepare them as NumPy arrays for the model.

Multiple steps were taken for optimally managing the data. The resulting full dataset with all the faces was saved as a compressed “.npz” file for portability.

Data augmentation in data analysis is about techniques and strategies used to significantly increase the diversity of data available for training models, by adding slightly modified copies of already existing data or newly created synthetic data from existing data. It acts as a regularizer and helps reduce overfitting when training a machine or deep learning model.

Data augmentation was applied to expand the original dataset up to 2794 photos from which around 1800 were for training and 900 for validation testing.

The techniques applied to photos were scaling, rotation, brightness, and flipping or shifting. The first two were fully controlled without random selection values, while for brightness and flipping a random factor was added. For example, by having a random selection on a range of values for either decreasing the brightness of the image or by increasing it and therefore also adding exposure and saturation. These image transformations were learned from the course, using the OpenCV library.

You can learn about these techniques in my course “Image Processing with Python” at Datacamp.

This way the model will focus more on the facial features rather than the colors or other picture factors.

The resulting augmented dataset is then ready to be prepared for the input model, in this case, the original labels that are originally our names as strings will be converted into numerical labels: 0 and 1. For then be converted to one-hot encoding. The resulting dataset is saved as a compressed file for easy use later.

Using the augmented data, now preprocessed to meet the input requirements of the model; I use callback from Keras to get more efficient training.

The input of the model will be images of the shape (224,224,3) representing the 224 by 224 pixels images with 3 RGB channels for the color encoding.

A convolutional base is created using a common pattern: a stack of 2-dimensional convolutional layers “Conv2D” and Max Pooling layers “MaxPooling2D” layers.

To complete the model, I will feed the last output tensor from the convolutional base (of shape (28, 28, 64)) from the last Max Pooling, into two Dense layers to perform classification. Dense layers take vectors as input (which are 1D), while the current output is a 3D tensor. Because of that, I will flatten (or unroll) the 3D output to 1D, then add Dense layers on top, with a drop_put of 0.2 between them. The final Dense layer will have 2 outputs.

Model summary:

After a lot of models tried, I realized that for a model in many cases, including this, it’s not necessarily required to have a big amount of neurons for each layer or convolutional layer. On the other hand, having more neurons would allow the model to see and cover more details.

What is Max pooling?

Max pooling is a pooling operation that selects the maximum element from the region of the feature map covered by the filter. Thus, the output after the max–pooling layer would be a feature map containing the most prominent features of the previous feature map.

Why use Pooling Layers?

- Pooling layers are used to reduce the dimensions of the feature maps. Thus, it reduces the number of parameters to learn and the amount of computation performed in the network.

- The pooling layer summarises the features present in a region of the feature map generated by a convolution layer. So, further operations are performed on summarised features instead of precisely positioned features generated by the convolution layer. This makes the model more robust to variations in the position of the features in the input image.

What are Dropouts?

Dropout is a regularization method that approximates training a large number of neural networks with different architectures in parallel.

During training, some number of layer outputs are randomly ignored or “dropped out.” This has the effect of making the layer look-like and be treated-like a layer with a different number of nodes and connectivity to the prior layer. In effect, each update to a layer during training is performed with a different “view” of the configured layer.

Batch normalization and dropout layers can prevent the model to get overfitted.

The model compiles with Adam optimizer, Categorical_crossentropy, and the metrics to be about accuracy. With the callbacks, it’s possible to avoid overfitting with an “EarlyStopping” function to watch when the validation loss is showing signs of overfitting. and then by the ModelCheckpoint, to automatically save the best model from the training based on the validation accuracy.

Adam is a replacement optimization algorithm for stochastic gradient descent for training deep learning models. Adam combines the best properties of the AdaGrad and RMSProp algorithms to provide an optimization algorithm that can handle sparse gradients on noisy problems.

According to different sources, It seems the Adaptive Moment Estimation (Adam) optimizer nearly always works better (faster and more reliably reaching a global minimum) when minimizing the cost function in training neural nets.

In mathematical optimization and decision theory, a loss function or cost function is a function that maps an event or values of one or more variables onto a real number intuitively representing some “cost” associated with the event. An optimization problem seeks to minimize a loss function.

Categorical cross-entropy is a loss function that is used in multi-class classification tasks. These are tasks where an example can only belong to one out of many possible categories, and the model must decide which one. Formally, it is designed to quantify the difference between two probability distributions.

With EarlyStopping, I avoid overfitting, it will stop the training when there is no improvement. I defined what to monitor while saving the model checkpoints. I will monitor validation loss for stopping the model training.

With ModelCheckPoint, I’m saving the best model from the training as “facial_recognition_daniela_rebeca_scratch.h5” to, later on, compare it.

The model accomplishes 97% of accuracy. We see that the validation loss continues to decrease with some small pick-ups. The model stops itself to avoid overfitting when validation loss isn’t decreasing anymore and it’s actually increasing at some pace.

The Validation accuracy and the training accuracy, both, increase, meaning that the model is learning.

We accomplish a score of 97% And if we want to, we can also load the best model from the training since we specified in the callbacks. Although in this case, it will be the same as the latest model.

A confusion matrix is a performance measurement for a machine learning classification problem where output can be two or more classes. It is a table with different combinations of predicted and actual values. It’s often used to describe the performance of a classification model (or “classifier”) on a set of test data for which the true values are known.

Generate a confusion matrix to see the confidence of the predictions and where the model is confused. Here we can see that it’s better at recognizing me than my sister(Daniela).

At another time, I re-created, trained, and ran the model once again, and see that the result this time was now perfectly balanced with 97% of precision for both of us. So here we can see proof of the small random factor when training the models.

Before working with this model, I created others with a lot more neurons per layer, up to 512 for the majority. Also, trained them with smaller pieces of the dataset. I realized at that moment that when the model is fed with less amount of data, then it’s better to add more neurons since they have to look better into the image matrix to see the difference in each epoch.

When I trained the model with only 800 photos, the model with a very similar architecture described above would reach only up to 87% of accuracy.

I will test the model with different new photos. Using functions to detect the face, extract it, pass it to the model and, with a boolean as a parameter, see the prediction confidence for both classes.

That photo above was actually me (Rebeca) in Bokodi! Although the difference in confidence between the two classes is little: By only 14%.

This is the most recent photo of me, taken only 2 days ago:

The model is really sure it’s me, even though I’m wearing makeup.

The model performs well even from different angles. This photo’s 2 years old, I got from her Facebook.

That last one was Daniela, not me. This time the difference is by 20% of confidence.

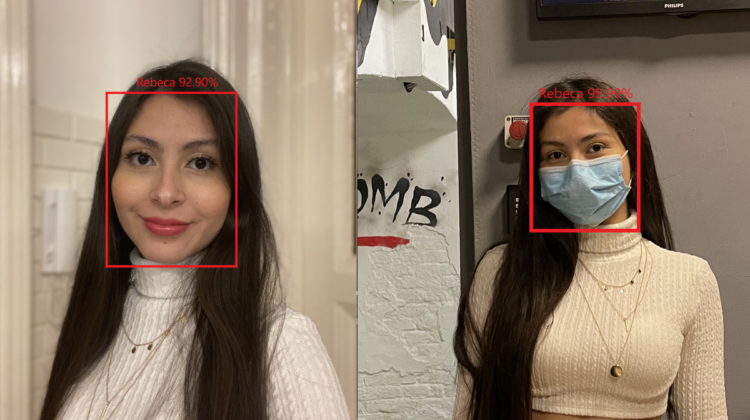

Although the confidence level is not very high for the recognition decision, it’s able to recognize me and my sister from the web camera. These were taken from the Google Colab Notebook.

In this case, the model has a very high confidence level when recognizing me. with 93% confidence.

Even if I cover part of my face, it still recognizes me with 73% confidence:

I thought about trying and see if the model was able to recognize me even with the mask. And incredibly enough, it did!!

83% of confidence

And 97% in this one.

Still, once I change the angle of my face to be looking to one side, it’s then when start to get confused. Lowering the confidence levels when recognizing to fully mistake when doing it.

The model has 99.99% of confidence that is me! Very well done.

I decided to build a model using the Transfer Learning technique, and so I can also compare the performance between these two models. I will use the VGG16 pre-trained model.

What’s Transfer Learning?

Transfer learning is a machine learning method where a model developed for a task is reused as the starting point for a model on a second task.

It is a popular approach in deep learning where pre-trained models are used as the starting point on computer vision and natural language processing tasks given the vast compute and time resources required to develop neural network models on these problems and from the huge jumps in the skill that they provide on related problems.

Creating the model with Transfer Learning

The pre-trained model of choice is the Convolutional Neuronal Network VGG16 since it’s also the one I have used the most recently.

The model is imported from Keras, this way a new input shape for the model can be given as a parameter. I use the concatenation technique, so the layers can be connected to one another. In this case, the new layer is being connected to the output of the VGG16 model.

A new Dense layer of 256 neurons is added, with a “Relu” activation function. A robust drop out of a layer of 0.5 is applied afterward, so it discards the “doubts” during the process in a way. It randomly selecting nodes to be dropped-out with a given probability, each weight update cycle. Dropout is only used during the training of a model and is not used when evaluating the skill of the model. This regularization helps prevent overfitting.

Batch Normalization is also added to the model. This is a technique for training very deep neural networks that standardizes the inputs to a layer for each mini-batch. This has the effect of stabilizing the learning process and dramatically reducing the number of training epochs required to train deep networks.

In the end, all the layers from the base VGG16 model are frozen or set to not trainable. That way the total number of parameters will decrease considerably, to be only the ones we are adding to the model.

The resulting model is considerably big compared to the one I did before.

This model is compiled the same way the first model is, with the same optimizer, loss function, and callbacks, except that the best model will be saved with a different name.

When training it, the model can accomplish 98.27% of validation accuracy and 99.30% in training accuracy, all while avoiding overfitting during the training with callbacks.

When loading the bets model from the training that was automatically saved by the callback, it’s possible to see that it’s slightly better than the latest one, with 98.37%

I generate a confusion matrix to see the confidence of the predictions and where the model is confused. Here we can see that it’s slightly better at recognizing my sister (Daniela) than me.

I will test the model with different new photos. Using functions to detect the face, extract it, pass it to the model and, with a boolean as a parameter, see the prediction confidence for both classes.

We reach 99% this time, with this model, when predicting on the most recent photo:

Trying in photos where both of us are and see the results.

By taking new photos from the Google Colab notebook, using the web camera, the model performs very well, all of the time classifying correctly and with high confidence levels.

99% sure it’s me.

Very good confidence levels for my sister as well, with 95% and 99% respectively.

98% and 80% were confident it’s me.

Both of the models do a good job when recognizing our faces while wearing face masks.

The first model had a confidence score of 99.99% when labeling me while in this latest model we get 99.14%. Still really good.

You can find the whole code summarized in a Jupyter Notebook here.

I believe that anyone can create and contribute to incredible projects related to Computer Vision. And even if you are a beginner, you can easily get going with platforms like Datacamp. In where you can find almost any course to get you where you want to be in the Data Science world! 🙌