2. Libraries:

We will use the Pandas, NumPy, and Scikit-learn libraries. From Scikit-learn we will import the DecisionTreeRegressor class to train our model.

# importing the libraries

import pandas as pd

import numpy as np

# Reading the dataset using the pandas library

dataset = pd.read_csv('name_of_dataset.csv')
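Since 'name_of_dataset.csv' is only a placeholder, here is a minimal sketch of the same read_csv call on an in-memory CSV. The column names and values are made up for illustration and are not taken from the actual dataset.

```python
import io

import pandas as pd

# Hypothetical CSV content standing in for 'name_of_dataset.csv'
csv_text = """experience,age,salary
1.0,22,30000
3.5,27,45000
6.0,31,62000
"""

# read_csv accepts a file path or any file-like object
demo = pd.read_csv(io.StringIO(csv_text))

# Quick look at the first rows to confirm the file was parsed as expected
print(demo.head())
```

Looking at the first few rows right after loading is a cheap way to catch a wrong separator or a missing header row.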

3. Exploratory Data Analysis (EDA):

Now we will explore our dataset: check whether it has any missing values, see what types of data it contains, encode any categorical data, and so on.

- Shape and type of dataset:

# shape of the dataset

print(dataset.shape)

# data type of each column

print(dataset.dtypes)

- Checking for null values:

# number of null values in each column

print(dataset.isna().sum())

# total number of null values in the dataset

print(dataset.isna().sum().sum())
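To show what these two counts look like when values are actually missing, here is a small sketch on a made-up frame. The median fill at the end is only one possible strategy and is an assumption on our part, not a step from this walkthrough.

```python
import numpy as np
import pandas as pd

# Hypothetical frame with two missing entries
demo = pd.DataFrame({
    "experience": [1.0, np.nan, 6.0, 8.0],
    "salary": [30000.0, 45000.0, np.nan, 80000.0],
})

per_column = demo.isna().sum()   # missing values per column
total = int(per_column.sum())    # missing values in the whole frame

# One simple option (an assumption, not part of the original workflow):
# replace each missing value with the median of its column
filled = demo.fillna(demo.median())

print(per_column)
print(total)
print(filled)
```

If the total is zero, as it may well be for your dataset, no filling is needed and this step can be skipped.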

- Encoding the categorical data:

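The dtypes attribute shows that our dataset contains only float and integer values, so no encoding is needed here. The get_dummies call below is included for completeness: it leaves numeric columns unchanged and one-hot encodes any categorical (object or category) columns.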

# encoding the categorical data

dataset = pd.get_dummies(dataset)
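To see what get_dummies does when a categorical column is present, here is a short sketch. The frame, its column names, and its values are hypothetical.

```python
import pandas as pd

# Hypothetical frame with one categorical column
demo = pd.DataFrame({
    "city": ["Pune", "Delhi", "Pune"],
    "rooms": [2, 3, 4],
    "price": [50.0, 80.0, 95.0],
})

encoded = pd.get_dummies(demo)

# Numeric columns are kept; 'city' becomes one indicator column per category
print(list(encoded.columns))

# A purely numeric frame keeps exactly the same columns
numeric_only = demo[["rooms", "price"]]
unchanged = pd.get_dummies(numeric_only)
print(list(unchanged.columns))
```

Note that the indicator columns are appended after the numeric ones, so a target that used to be the last column may no longer be last after encoding. In that case it is safer to select the target by name than by position.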

4. Dependent and Independent variables (matrix):

To train our model we need to specify the dependent and independent variables. The dependent variable is our output column (here, the last column of the dataset), and the independent variables are all the remaining columns.

# independent variables

X = dataset.iloc[:, :-1].values

# dependent variable (1-D array, the shape scikit-learn expects for a single target)

y = dataset.iloc[:, -1].values
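To make the slicing concrete, this small sketch on a made-up frame prints the shapes that the iloc selections produce. It also shows the difference between slicing with -1: and indexing with -1.

```python
import pandas as pd

# Hypothetical frame: two feature columns and one target column
demo = pd.DataFrame({
    "experience": [1.0, 3.5, 6.0],
    "age": [22, 27, 31],
    "salary": [30000, 45000, 62000],
})

X_demo = demo.iloc[:, :-1].values   # every column except the last
y_2d = demo.iloc[:, -1:].values     # the slice -1: keeps a column axis
y_1d = demo.iloc[:, -1].values      # the index -1 gives a flat array

print(X_demo.shape, y_2d.shape, y_1d.shape)
```

Both target shapes work with DecisionTreeRegressor, but the flat form is the usual convention for a single output column.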

5. Splitting the dataset:

We will split the dataset into training and testing sets. The training set gets most of the data because the model is fitted on it; the testing set holds back a smaller portion (20% here, via test_size=0.2) that is used only to evaluate the model.

# splitting the dataset

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
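The effect of test_size and random_state is easy to check on toy arrays. The sketch below uses made-up data, with ten rows chosen so that the 80/20 split is exact.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: 10 samples, 2 features; the target is half of the first feature
X_demo = np.arange(20).reshape(10, 2)
y_demo = np.arange(10)

X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=0
)
print(X_tr.shape, X_te.shape)

# Repeating the call with the same random_state reproduces the same split
_, X_te_again, _, _ = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=0
)
print(np.array_equal(X_te, X_te_again))
```

Fixing random_state makes the split repeatable, which helps when comparing models across runs.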

6. Feature Scaling:

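Feature scaling standardizes the independent variables so that each has zero mean and unit variance. The scaler is fitted on the training set only, and the same transformation is applied to the test set later at prediction time. Decision trees split on thresholds and do not strictly require scaling, but it does no harm here.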

# using feature scaling

from sklearn.preprocessing import StandardScaler

X_sc = StandardScaler()

X_train = X_sc.fit_transform(X_train)
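As a quick check of what StandardScaler does, the following sketch fits it on a tiny made-up training array and then reuses the fitted statistics on a test row. The array values are hypothetical.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Two features on very different scales
X_train_demo = np.array([[1.0, 100.0], [2.0, 200.0], [3.0, 300.0]])
X_test_demo = np.array([[2.0, 400.0]])

scaler = StandardScaler()

# fit_transform learns the mean and standard deviation from the training data
X_train_scaled = scaler.fit_transform(X_train_demo)

# transform reuses those training statistics; it does not refit on test data
X_test_scaled = scaler.transform(X_test_demo)

print(X_train_scaled.mean(axis=0))  # approximately 0 for each column
print(X_train_scaled.std(axis=0))   # approximately 1 for each column
print(X_test_scaled)
```

Fitting only on the training data keeps information from the test set out of the preprocessing step.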

7. Training the dataset:

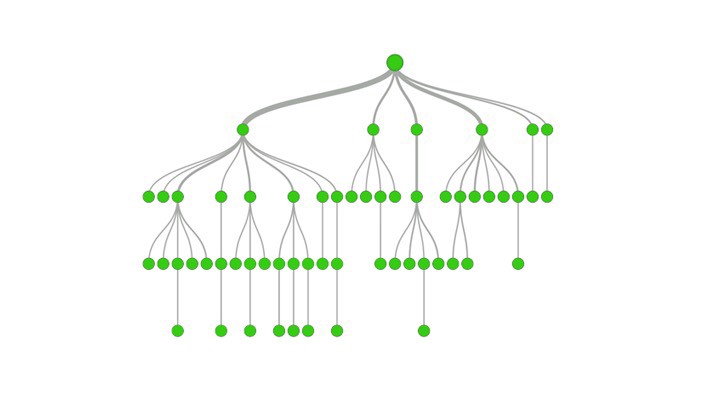

It’s time to train our model. We will use the DecisionTreeRegressor class from the tree module.

# training the model

from sklearn.tree import DecisionTreeRegressor

regressor = DecisionTreeRegressor(random_state=0)

regressor.fit(X_train, y_train)

8. Predicting the result:

After training the model, we will predict the result using the test data. The test features are passed through the same scaler that was fitted on the training set.

# predicting the result

pred = regressor.predict(X_sc.transform(X_test))
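The training and prediction steps can be tried end to end without a CSV file. The sketch below uses synthetic data (a noisy sine curve, our own choice for illustration). The max_depth value is an illustrative assumption, not a tuned setting and not something the walkthrough above uses.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeRegressor

# Synthetic data: one feature, target is a noisy sine of that feature
rng = np.random.default_rng(0)
X_demo = rng.uniform(0, 10, size=(200, 1))
y_demo = np.sin(X_demo[:, 0]) + rng.normal(0, 0.1, size=200)

X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=0
)

# Fit the scaler on the training features only
scaler = StandardScaler()
X_tr_scaled = scaler.fit_transform(X_tr)

# max_depth=4 is an illustrative choice to limit overfitting, not a tuned value
tree = DecisionTreeRegressor(max_depth=4, random_state=0)
tree.fit(X_tr_scaled, y_tr)

# Test features go through the same fitted scaler before prediction
pred_demo = tree.predict(scaler.transform(X_te))

print(pred_demo[:5])
print(tree.get_depth(), tree.get_n_leaves())
```

Without a depth limit, a decision tree keeps splitting until it fits the training data almost perfectly, so inspecting get_depth() and get_n_leaves() is a simple way to see how complex the fitted tree has become.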

9. Accuracy:

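Now we will see how well our model performs. Note that accuracy_score is a classification metric: on continuous targets it either raises an error or only counts exact matches, so for regression we use the R² score instead (1.0 is a perfect fit).

# R² score of the model

from sklearn.metrics import r2_score

print(r2_score(y_test, pred))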
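For a regression model, error-based metrics are the usual way to judge predictions. The sketch below computes three common ones from sklearn.metrics on hand-made values, so the numbers can be checked by hand. These are illustrative values, not results from the dataset.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Hand-made true values and predictions
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_hat = np.array([2.5, 5.0, 7.5, 9.0])

# R²: 1 - (sum of squared residuals) / (total sum of squares) = 1 - 0.5 / 20
r2 = r2_score(y_true, y_hat)

# Mean squared error: (0.25 + 0 + 0.25 + 0) / 4
mse = mean_squared_error(y_true, y_hat)

# Mean absolute error: (0.5 + 0 + 0.5 + 0) / 4
mae = mean_absolute_error(y_true, y_hat)

print(r2, mse, mae)  # 0.975 0.125 0.25
```

R² is unitless and easy to compare across datasets, while the mean absolute error is expressed in the same units as the target.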

Kaggle Notebook link:

Happy Coding!!!