All machine learning models learn how to map inputs to outputs. In deep learning, the ‘learning’ is done via a series of transformations or layers, and each transformation is defined by the weights of that layer. The diagram below illustrates how a simple network works.

Initially, the weights are implemented randomly and propagated through the hidden layers of the network (forward-propagation) to the final layer, the output layer, or the prediction. However, the transformations at this point are meaningless and the result is likely far off. The network implements a loss function that measures how far off the output is from the ground truth, and feeds this value back to the layers to adjust the value of the weights, so to reduce the loss. At every iteration, the model learns more and gets closer to the optimal values. This process is called back-propagation and is implemented by the optimizer.

Of course, the above diagram is extremely simplified, and real-world applications can get pretty complex. Nonetheless, the building blocks are the same for all of them.

Logistic Regression from scratch

Logistic Regression is a shallow Neural Network. It only has one input layer and one output layer and no hidden layers. It is, however, a very good place to start to understand the inner workings of a Neural Network and fairly easy to implement from scratch.

First, let’s create some artificial data for the binary classifier.

X has a shape (100,2) and y has a shape (100,1). Data is shown in the figure below.

Let’s implement now the building blocks of our network: Forward Propagation and Back Propagation.

Forward Propagation

Let’s randomly initialize the weights matrix W for forward propagation. The output layer needs to have the same dimensions as y, (100,1) in this example. Since there are no hidden layers, the output layer is simply the dot product of X and W. According the rules of matrix product, the weights matrix W needs to have dimensions (1,2) so that (100,2) x (2,1) = (100,1). For the sake of calculation, however, we define W with shape (1,2) and take the transposed.

The output of the classifier needs to be bounded between 0 and 1, therefore we also need an activation function to apply to the output layer. For a Logistic Regression, the activation function is simply the logistic (or sigmoid) function. The output activation is our prediction.

Let’s visualize the decision boundary. The background color refers to the classification decision of the untrained classifier. Since the weights are still random and we haven’t optimized them yet, we expect the classifier to be pretty bad at this point. In fact, it is not able to properly separate the positive class from the negative.

We now need to optimize the weights. Before we go into the back-propagation bit, let’s talk about the optimizer we’ll use, Gradient Descent.

Gradient Descent

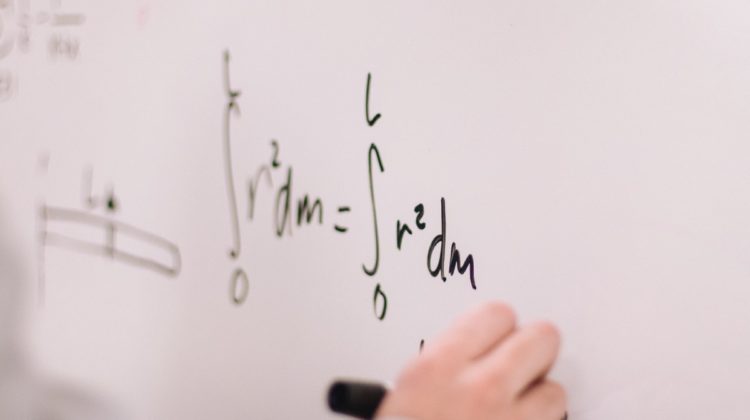

GD is based on the concept of a gradient, which can be seen as a generalization of derivatives to multi-dimensional inputs. If a function is differentiable, i.e. its derivative exists, then it’s possible to find its minimum, which is the point where the derivative is equal to zero.

The derivative of a function gives us its slope at any given point. If the loss decreases when the weights move into a certain direction (increase or decrease), the derivative will return a negative value. The gradient descent algorithm updates the parameters in the direction of the negative gradient (down along the loss function). This process is then repeated for a certain number of iterations or until convergence.

In mathematical terms, this means solving a polynomial equation of N parameters — where N is the total number of weights in the network — to find the combination of parameters that minimizes the loss.

Note that, in real case scenarios, N can easily be in the millions, and solving such equations would be impossible. That’s why we generally implement Batch Gradient Descent, i.e randomly draw a batch of the training data and compute the gradient for the batch only.

Luckily, we don’t have to do that in our simple Logistic Regression so, for simplicity, we’ll implement regular Gradient Descent, not the Batch one.

Back Propagation

The main steps we’ll implement in Back Propagation are the following:

1. Calculate the loss between the activation layer (prediction) and the ground truth

2. Compute the gradient

3. Update the weights.

In order to calculate the loss, we first need to define it. In binary classification problems, it is common to use binary cross-entropy (or log-loss) so we will use that.

The gradient is the derivative of the loss function with respect to each of the weights (partial derivatives). It can be demonstrated that the derivative of cross-entropy function w.r.t. W is (y_hat−y)X where y_hat=sigmoid(z). We are not going to demonstrate it in this article but there’s an excellent explanation here.

Once we have the gradient, we can update the weights. For this step, we need to define the step or learning rate. It determines how fast the model will learn and tunes the size of the step GD takes.

We also need to define a certain number of iterations for the algorithm to converge. In our case, 15 iterations are enough for the algorithm to converge. As we get closer to the optimal values, the loss will decrease and the steps of the gradient become smaller.

Piecing everything together

We can now put all the pieces together and run the model.

We can visualize what the gradient descent is doing at each iteration. From the figure below, we can see how the model takes smaller and smaller steps as it approaches the minimum loss.

Let’s look at the decision boundary again. Now the weights have been optimized and the classifier performs a lot better. There still are some misclassified points but, overall, the classifier does a good job in separating the two classes.

The complete code is available here.