It is widely believed in the deep learning community that growing training sets and model size should improve performance. It is therefore beneficial to develop a deeper understanding of the relationships between training set size, computational scale and model accuracy improvements to advance the state-of-the-art.

Scaling questions are critical because the test loss of neural networks scales as a power law in both model size and dataset size. Why these power laws emerge, and which features of the data and models determine the values of the power-law exponents, are questions of considerable theoretical and practical importance, as the exponents determine how quickly performance improves with more data and larger models. Several intuitive rules of thumb have been obtained from empirical studies, but as yet there are few rigorous results.

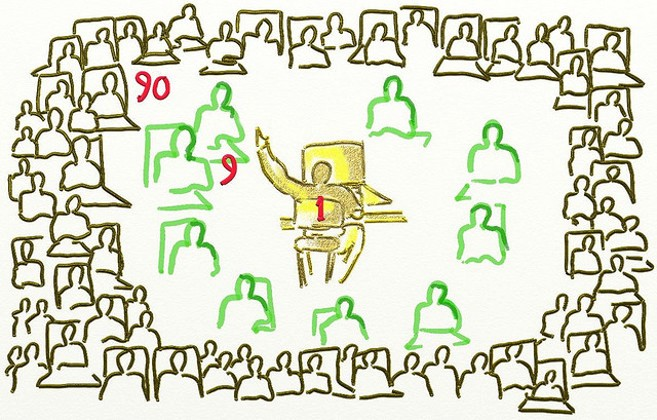

In an effort to provide such a rigorous theory to explain and connect scaling laws, new research from a Google and Johns Hopkins University team identifies variance-limited and resolution-limited scaling behaviours for both dataset and model size, giving four related scaling regimes in total.

The researchers summarize their work’s main contributions as:

- Identify four scaling regions of neural networks and provide empirical support for all four regions for deep models on standard datasets.

- Present simple yet general theoretical assumptions under which we can derive this scaling behaviour.

- Present a concrete solvable example where all four scaling behaviours can be observed and understood: linear, random-feature teacher-student models.

- Empirically investigate the dependence of the scaling exponent on changes in architecture and data.

In essence, the loss in the variance-limited regime can be series-expanded in either inverse width or inverse dataset size. Specifically, some aspects of neural network training simplify in the limit of infinite data or an arbitrarily wide model. For example, if either the number of model parameters or the dataset size is held fixed and we study scaling with respect to the other quantity x as it becomes arbitrarily large, then the loss approaches its limiting value as $1/x$. For this behaviour, it is sufficient that the infinite-data or infinite-width limit exists and is smooth.
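The series expansion can be written out explicitly. This is a sketch only: the symbols $L_\infty$ (the limiting loss) and the coefficients $a_1, a_2$ are introduced here for illustration and are not taken from the article. If the loss $L(x)$ is a smooth function of $1/x$ near the limit, a Taylor expansion in $1/x$ gives

$$
L(x) = L_\infty + \frac{a_1}{x} + \frac{a_2}{x^2} + \cdots
\qquad\Longrightarrow\qquad
L(x) - L_\infty \approx \frac{a_1}{x} \quad \text{for large } x .
$$

The leading correction is therefore proportional to $1/x$ whenever $a_1 \neq 0$, which is why smoothness of the limit is enough to produce this scaling.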

In the resolution-limited regime, either the number of model parameters or the dataset size is effectively infinite, and we study scaling as the other quantity increases. Here the observed power-law scaling is $1/x^{\alpha}$, typically with $0 < \alpha < 1$. In this case, if we assume that trained models map the data onto a d-dimensional data manifold, the model can make independent predictions in each component of the data manifold in order to optimize the training loss.
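To make the exponent concrete, the sketch below shows one common way to estimate α from measured losses: a straight-line fit in log-log space. It is not the researchers' procedure. The function name `fit_power_law` and the synthetic, noise-free data are assumptions made for illustration, and the fit assumes a pure power law with no constant offset.

```python
import numpy as np

def fit_power_law(x, loss):
    """Fit loss ~ c * x**(-alpha) by least squares in log-log space.

    Assumes a pure power law with no additive floor (an illustrative
    simplification). Returns (alpha, c).
    """
    slope, intercept = np.polyfit(np.log(x), np.log(loss), deg=1)
    return -slope, np.exp(intercept)

# Synthetic measurements (illustrative values, not results from the paper).
sizes = np.array([1e3, 2e3, 4e3, 8e3, 1.6e4])
true_alpha, true_c = 0.35, 5.0
losses = true_c * sizes ** (-true_alpha)

alpha, c = fit_power_law(sizes, losses)
print(f"estimated alpha = {alpha:.3f}, c = {c:.3f}")
```

On real measurements the points are noisy and may flatten at large x, so the fit range would need to be chosen with care.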

The researchers tested the theory in a teacher-student framework. By scanning the dimensions of inputs to the teacher, they were able to generate unlimited training samples and controllably tune the dimensions of the data manifold. They also explored resolution-limited scaling behaviour in Wide ResNet (WRN) architectures trained on standard classification datasets.
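The following sketch shows how a teacher can act as an unlimited data source. It is a toy illustration, not the paper's setup: the one-hidden-layer ReLU teacher, the Gaussian inputs, and the names `make_teacher` and `sample_dataset` are all assumptions made here.

```python
import numpy as np

def make_teacher(input_dim, hidden_dim=64, seed=0):
    """Return a fixed random one-hidden-layer ReLU network (hypothetical teacher)."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(size=(input_dim, hidden_dim)) / np.sqrt(input_dim)
    w2 = rng.normal(size=(hidden_dim, 1)) / np.sqrt(hidden_dim)

    def teacher(x):
        # ReLU hidden layer followed by a linear readout.
        return np.maximum(x @ w1, 0.0) @ w2

    return teacher

def sample_dataset(teacher, input_dim, n_samples, seed=1):
    """Draw Gaussian inputs and label them with the teacher."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(n_samples, input_dim))
    return x, teacher(x)

# input_dim plays the role of the tunable data dimension in this toy setup.
teacher = make_teacher(input_dim=4)
x_train, y_train = sample_dataset(teacher, input_dim=4, n_samples=1000)
print(x_train.shape, y_train.shape)
```

Because the teacher is a fixed function, `n_samples` can be made as large as needed, and changing `input_dim` changes the dimension of the inputs the student has to resolve.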

To further investigate data distribution effects on the resolution-limited exponent, the researchers used CIFAR-100-derived tasks that group classes into broader semantic categories, tuning the number of target classes and input noise.

The results show that although performance depends on the number of categories, the resolution-limited exponent is insensitive to this number.

Overall, the study validates the premise that models with larger exponents tend to gain more from increased dataset size and model parameters, and enables the estimation of expected gains from scaling up datasets and models. On a broader level, the work provides a paradigm for defining and measuring progress in deep learning models.
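The estimate of expected gains can be illustrated with a little arithmetic. The sketch assumes, as a simplification not stated in the article, that the loss follows a pure power law c · x^(-α) with no irreducible floor. The helper names `loss_ratio` and `scale_needed` are hypothetical.

```python
def loss_ratio(scale_factor: float, alpha: float) -> float:
    """Factor by which the loss changes when x is multiplied by scale_factor.

    Assumes loss = c * x**(-alpha) (pure power law, an illustrative assumption).
    """
    return scale_factor ** (-alpha)

def scale_needed(target_ratio: float, alpha: float) -> float:
    """Multiplier on x needed to shrink the loss to target_ratio of its value."""
    return target_ratio ** (-1.0 / alpha)

ratio = loss_ratio(4.0, 0.5)     # effect of 4x more data or parameters
factor = scale_needed(0.5, 0.5)  # scale-up required to halve the loss
print(ratio, factor)
```

Under this assumption, halving the loss with α = 0.5 takes a 4x increase in x, whereas α = 0.25 takes a 16x increase, which is why the value of the exponent matters so much in practice.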

The paper Explaining Neural Scaling Laws is on arXiv.