The rise of Artificial Intelligence (AI) has allowed us to create amazing programs that can outperform humans at specific tasks. Some of the coolest AI tools in daily use are Siri, the pseudo-intelligent digital personal assistant; Alexa, the boss of smart homes; and Tesla's emerging self-driving cars. It all started from something we are all familiar with: Neural Networks (also known as Artificial Neural Networks [ANN]), the first chapter of AI.

Before getting into the complexities, I would like to give you a glimpse of how the idea of neural networks came about and its connection with biological neurons.

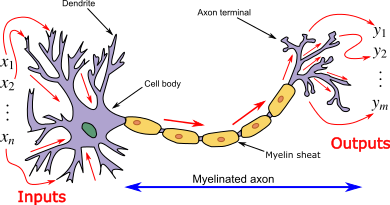

Biological neurons (also called nerve cells), or simply neurons, are the fundamental units of the brain and nervous system. They are the cells responsible for receiving sensory input from the external world via dendrites, processing it, and sending the output through axons.

As we can see from fig1, these components define some of the important working steps of neurons in the human body:

Dendrites: Each neuron has fine, hair-like tubular structures (extensions) around it. They branch out like a tree around the cell body, and their function is to accept incoming signals.

Axon: A long, thin, tubular structure that works like a transmission line.

Synapse: Neurons are connected to one another in a complex spatial arrangement. At the end of the axon are highly complex and specialized structures called synapses, which connect two neurons.

Dendrites receive input through the synapses of other neurons, and the output of this particular neuron is sent to other neurons through the axon and the synapses. This is the general idea of how the brain processes signals through a chain of neurons working in the background, which lets us learn, adapt, and remember different things.

Inspired by the way biological neurons work, we applied the architecture of our brains to build artificial neurons that process information in a similar way, which often gives more accurate results on pattern-heavy tasks. An artificial neural network takes a large set of data, processes it (draws out the patterns in the data), and outputs what it is.

ANNs are composed of a large number of highly interconnected processing elements (neurons) working in unison to solve specific problems. Like people, and especially like children, ANNs learn by example. Each one is configured through a learning process for a specific application, such as pattern recognition, data classification, image recognition, or voice recognition.

Don't be anxious, we are coming to the part about what those circles and arrows are and how they work in the background.

There are 3 components of a Neural Network:

Input Layer [yellow circles]: The input layer is the very beginning of the workflow for the artificial neural network. Each circle represents 1 feature (a piece of information). This can be anything: a pixel value of an image, the passenger fare from the Titanic dataset, or the square footage of your house for house price prediction, literally anything! The function of this layer is to take the input values and pass them on to the next layer for further processing. There can be as many input neurons as you want; it depends entirely on the number of features in your dataset.

Hidden Layer [blue and green circles]: These layers do all the processing for neural networks. You can have as many of these as you want. Each neuron in a hidden layer applies weights to its inputs and passes the result through an activation function to produce its output, so we can say that these layers perform nonlinear transformations of the inputs entered into the network.

Output Layer [red circles]: The output layer is responsible for producing the final result. It is the last layer, and it brings together the information from the last hidden layer of the network to output the prediction you need from the program.

Now that we have the general architecture of neural networks, we can take a deep dive into the operation performed in each neuron.

Each neuron receives input from other nodes or from an external source and computes an output. Each input has an associated weight (w), which is assigned on the basis of its importance relative to the other inputs. The node applies a function f (known as the activation function) to the weighted sum of its inputs, as in the figure below.

The above network takes numerical inputs X1 and X2 and has weights w1 and w2 associated with those inputs. Each connection between neurons has its own weight (initialized randomly at first), and those are the only values that will be modified during the learning process.

Additionally, there is another input X0=1 with weight W0 (called the Bias) associated with it. The main function of the bias is to provide every node with a trainable constant value (in addition to the normal inputs that the node receives).
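To make the bias idea concrete, here is a minimal Python sketch of the weighted sum for one neuron. The function name `weighted_sum` and all the numbers are made up for illustration; they are not taken from any figure in this article.

```python
# Minimal sketch: the bias treated as an extra input x0 = 1 with its own weight w0.
# All values below are made-up illustration numbers.

def weighted_sum(inputs, weights, bias_weight):
    """Return w0*1 + sum(x_i * w_i) for a single neuron."""
    total = bias_weight * 1.0  # x0 is fixed at 1, so this term is simply w0
    for x, w in zip(inputs, weights):
        total += x * w
    return total

x = [2.0, 3.0]    # inputs X1, X2
w = [0.5, -1.0]   # weights w1, w2
w0 = 0.25         # bias weight W0

z = weighted_sum(x, w, w0)
print(z)  # 0.25 + 2*0.5 + 3*(-1.0) = -1.75
```

Notice that even if every input is zero, the neuron still gets the value w0, which is exactly the "trainable constant" role described above.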

The function f is non-linear and is called the Activation Function (it is applied to the summation). The purpose of the activation function is to introduce non-linearity into the output of a neuron. This is important because most real-world data is non-linear, and we want neurons to learn these non-linear representations. Every activation function (or non-linearity) takes a single number and performs a certain fixed mathematical operation on it. There are several activation functions you may encounter in practice (we will discuss those AFs in our next article).
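Just to give a feel for "takes a single number and performs a fixed operation on it", here is a small sketch of three widely used activation functions. Picking these three is my assumption for illustration; the article itself does not commit to any particular one.

```python
import math

def sigmoid(z):
    """Squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squashes any number into the range (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Keeps positive numbers as they are and turns negatives into 0."""
    return max(0.0, z)

# Each function takes one number in and gives one number out.
for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 3), round(tanh(z), 3), relu(z))
```

None of these is a straight line, which is what lets a network built from them model non-linear data.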

In the simplest case, the products of each input and its weight are summed, the sum is fed to a function (the activation function) to generate a result, and this result is sent as the output.

Mathematically, x₀·w₀ (bias) + x₁·w₁ + x₂·w₂ + x₃·w₃ + … + xₙ·wₙ = ∑ xᵢ·wᵢ.

Now the activation function (the function f from earlier, written here as 𝜙) is applied, 𝜙(∑ xᵢ·wᵢ), and that's all a neuron does! It takes all the values from the connected neurons, multiplies each by its respective weight, adds them up, and applies an activation function. Then the neuron is ready to send its new value to other neurons. Once every neuron in a layer (a column in the figure) has done this, the neural network moves on to the next layer. At the end, the last values obtained in the output layer give our predicted value (ŷ) [see fig2].

This whole process is called Forward Propagation in NNs.
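Here is a rough sketch of forward propagation in plain Python. The 2-3-1 layout, the weights, and the choice of sigmoid as the activation are all assumptions I made up for the example; the helper names `layer_forward` and `forward` are hypothetical too.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """One layer: every neuron sums its weighted inputs plus bias, then applies sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = bias + sum(x * w for x, w in zip(inputs, neuron_weights))
        outputs.append(sigmoid(z))
    return outputs

def forward(inputs, network):
    """Pass the values through each layer in order, column by column."""
    values = inputs
    for weights, biases in network:
        values = layer_forward(values, weights, biases)
    return values

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
network = [
    ([[0.1, 0.4], [-0.3, 0.2], [0.5, -0.6]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.2, 0.3]], [0.05]),                                 # output layer
]

y_hat = forward([1.0, 2.0], network)
print(y_hat)  # a list holding one predicted value between 0 and 1
```

The output of one layer simply becomes the input of the next, which is all "forward" really means here.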

Neural networks often start off with random weights and biases, then train over and over again until they reach their best performance. An analogy may be useful in understanding the mechanisms of a neural network. Learning in a neural network is closely related to how we learn in our regular lives and activities: we perform an action and are either accepted or corrected by a teacher or coach, so that we understand how to get better at a certain task. Similarly, neural networks require a trainer to describe what should have been produced as a response to the input. Based on the difference between the actual value and the predicted value, an error value (computed by what is called the Loss Function) is calculated and sent back through the system.

For each layer of the network, the loss is analyzed and used to adjust the thresholds and weights for the next input. Our aim is to minimize the loss function. The lower the loss, the closer the predicted value is to the actual value. This process of minimizing the loss is called Optimization, and optimizers change the attributes of the neural network, such as the weights and the learning rate, to reduce the loss.
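As one possible example of a loss function, here is mean squared error in a few lines of Python. The article does not say which loss it has in mind, so treat the choice of MSE and the sample numbers as my assumptions.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    return sum((t - y) ** 2 for t, y in zip(actual, predicted)) / len(actual)

actual = [1.0, 0.0, 1.0]
close_guess = [0.9, 0.1, 0.8]  # predictions near the actual values
far_guess = [0.2, 0.9, 0.3]    # predictions far from the actual values

print(mean_squared_error(actual, close_guess))  # small loss
print(mean_squared_error(actual, far_guess))    # noticeably larger loss
```

The closer the predictions get to the actual values, the smaller this number becomes, and it reaches 0 only when they match exactly.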

We compare the output 𝑦𝑗 of each output neuron to the observation target 𝑡𝑗. We use this difference to update the weight 𝑤𝑖𝑗, based on the input 𝑥𝑖 and a chosen learning rate 𝜂. (Having a learning rate less than one results in a more stable learning process, though this stability is traded off against having to expose the network to the data multiple times. Typical learning rates for this rule are in the 0.1–0.4 range.) This results in a weight update formula:

𝑤𝑖𝑗←𝑤𝑖𝑗+𝜂(𝑡𝑗−𝑦𝑗)𝑥𝑖
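The formula translates almost word for word into Python. This is only a sketch for a single neuron; the function name `update_weights` and the numbers are made up for illustration.

```python
def update_weights(weights, inputs, target, output, learning_rate):
    """Apply w_i <- w_i + eta * (t - y) * x_i to every weight of one neuron."""
    error = target - output  # (t - y): positive means the output was too low
    return [w + learning_rate * error * x for w, x in zip(weights, inputs)]

weights = [0.5, -0.5]
inputs = [1.0, 2.0]

# The neuron said 0.0 but the target was 1.0, so the weights get nudged upward.
new_weights = update_weights(weights, inputs, target=1.0, output=0.0, learning_rate=0.1)
print(new_weights)  # roughly [0.6, -0.3]
```

Two things are worth noticing: a bigger input gets a bigger correction, and when the output already equals the target the weights do not move at all.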

As long as there is a disparity between the actual value and the predicted value, we need to adjust those weights. Once we tweak them a little and run the neural network again, a new loss value will be produced, hopefully smaller than the last.

We need to repeat this process until we scrub the loss down to as small a value as possible, and then we will be able to say that our network has learned.

The above process is known as Back Propagation in NNs.
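To see the "predict, compare, adjust, repeat" loop in action, here is a small sketch that trains a single neuron on the OR gate using the update formula 𝑤𝑖𝑗←𝑤𝑖𝑗+𝜂(𝑡𝑗−𝑦𝑗)𝑥𝑖. To be clear about the assumptions: this is the single-neuron version of the rule, not full backpropagation through hidden layers; the step activation, the OR data, and starting from zero weights (instead of random ones, to keep the run repeatable) are my choices for the example.

```python
def predict(weights, inputs):
    """Single neuron with a step activation; inputs[0] is the constant bias input x0 = 1."""
    z = sum(w * x for w, x in zip(weights, inputs))
    return 1.0 if z > 0 else 0.0

def train(samples, learning_rate=0.1, max_epochs=100):
    """Repeat the update rule over the data until every prediction is correct."""
    weights = [0.0, 0.0, 0.0]  # zeros instead of random values, for a repeatable run
    for _ in range(max_epochs):
        total_error = 0.0
        for inputs, target in samples:
            error = target - predict(weights, inputs)
            total_error += abs(error)
            # w_i <- w_i + eta * (t - y) * x_i
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
        if total_error == 0:  # nothing left to correct, so stop
            break
    return weights

# OR gate: each sample is ([x0 = 1, x1, x2], target)
samples = [
    ([1.0, 0.0, 0.0], 0.0),
    ([1.0, 0.0, 1.0], 1.0),
    ([1.0, 1.0, 0.0], 1.0),
    ([1.0, 1.0, 1.0], 1.0),
]

weights = train(samples)
print([predict(weights, x) for x, _ in samples])  # [0.0, 1.0, 1.0, 1.0]
```

In a real multi-layer network the same loop applies, but the error has to be passed backward through every hidden layer to work out how much each weight should change.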

Now I hope you can connect some of the things going on inside neural networks, and you may have started to ponder how futuristic they are. However, this article only covered the basic story of neural networks; there are a lot of advanced concepts that go into actually performing all these complicated processes.
