This article will help you understand another unsupervised ML algorithm to solve clustering problems. Let’s begin.

k-means is one of the mildest unsupervised learning algorithms used to solve the well-known clustering problem. It is an iterative algorithm that tries to partition the dataset into a ‘k’ number of pre-defined distinct non-overlapping subgroups (clusters). The main idea is to define k centers, one for each cluster. These centers should be placed in a crafty way because of different location causes the different result. So, the better choice is to place them as much as possible far away from each other. The next step is to take each point belonging to a given data set and associate it to the nearest center.

When no point is pending, the first step is completed and an early group age is done. At this point, we need to re-calculate k new centroids as the barycenter of the clusters resulting from the previous step.

After we have these k new centroids, a new binding has to be done between the same data set points and the nearest new center. A loop has been generated. As a result of this loop, we may notice that the k centers change their location step by step until no more changes are done or in other words, centers do not move anymore. Finally, this algorithm aims at minimizing an objective function known as the squared error function given by:

- ‘||xi — vj||’ is the Euclidean distance between xi and vj.

- ‘ci’ is the number of data points in the ith cluster.

- ‘c’ is the number of cluster centers.

Let,

X = [x1,x2,x3,……..,xn] be the set of data points and

V = [v1,v2,…….,vc] be the set of centers.

- Randomly select ‘c’ cluster centers.

- Calculate the distance between each data point and cluster centers.

- Assign the data point to the cluster center whose distance from the cluster center is the minimum of all the cluster centers.

- Recalculate the new cluster center using the below formula:

where ‘ci’ represents the number of data points in the ith cluster.

5. Recalculate the distance between each data point and new obtained cluster centers.

6. If no data point was reassigned then stop, otherwise repeat step 3.

We can also use the ‘elbow method’ to find the no. of clusters formed. (even if we already know it), this is just to validate it visually. Below is an example.

Implementing k-means using in GoogleColab (python) using sklearn.

- k-means algorithm is fast, robust, and easier to understand compared to several other clustering algorithms.

- It is relatively efficient: O(tknd), where n is no. of objects, k is no. of clusters, d is no. of dimensions of each object, and t is no of iterations. Normally, k, t, d << n.

- Gives the best result when data sets are distinct or well separated from each other.

- Euclidean distance measures can unequally weight underlying factors.

- The learning algorithm provides the local optima of the squared error function.

- Randomly choosing the cluster center cannot lead us to a fruitful result.

- Applicable, only when mean, is defined i.e. fails for categorical data.

- Unable to handle noisy data and outliers.

- The algorithm fails for a non-linear data set.

- The learning algorithm requires apriori specification of the number of cluster centers

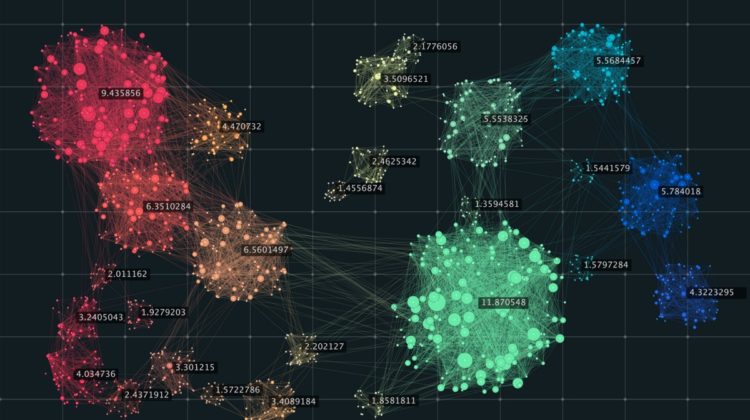

K-means algorithms can be used in a variety of applications such as market segmentation, document clustering, image segmentation, and etc.

The goal usually when we undergo a cluster analysis is either:

- Get a meaningful intuition of the structure of the data we’re dealing with.

- Cluster-then-predict where different models will be built for different subgroups if we believe there is a wide variation in the behaviors of different subgroups.

Kmeans clustering is one of the most popular clustering algorithms and usually, the first thing practitioners apply when solving clustering tasks to get an idea of the structure of the dataset. The goal of means is to group data points into distinct non-overlapping subgroups. However, it suffers as the geometric shapes of clusters deviate from shapes. Moreover, it also doesn’t learn the number of clusters from the data and requires it to be pre-defined.