Why we invested in LatticeFlow

Artificial intelligence (AI) is already ubiquitous in today’s world. If you found this article on social media or a search engine, an AI-based algorithm decided that you might want to see it (good guess, it seems). More and more products and industries use AI to provide improved or entirely new capabilities — from voice assistants and smart homes to self-driving cars and highly automated factories.

But the technological breakthroughs that led to today’s AI systems and machine learning (ML) algorithms are relatively recent. Over the past few years, AI practitioners have encountered many challenges in making ML algorithms perform reliably under real-world conditions with the trustworthiness required for mission-critical use cases.

To oversimplify a bit: with today’s readily available ML software packages, it is no longer that hard to build AI models that are 90% accurate for most use cases. Getting to 95% is already much, much harder, and the last 5% is often incredibly difficult and prohibitively expensive, requiring massive investments in training data, testing and algorithm refinement.

Depending on the nature of the problem, that last 5% might not matter much. For example, you are probably not terribly disappointed if the AI-enabled picture search in Google Photos doesn’t find every single photo of your dog.

But there are many AI use cases where inaccurate results might be very expensive (consider financial and industrial applications) or might even cost lives. Think of medical diagnostics, self-driving cars or preventative maintenance, and it’s obvious that faulty ML algorithms might have catastrophic consequences.

Measuring, monitoring and improving the reliability of ML models is a very complex problem that requires combining several sophisticated approaches. LatticeFlow, a spin-off of ETH Zürich, set out to solve it. It is btov Partners’ latest seed investment.

LatticeFlow’s technology is the result of years of research at Prof. Martin Vechev’s Secure, Reliable, and Intelligent Systems Lab (SRI) at ETH Zürich. He co-founded the company with Petar Tsankov (CEO) and Pavol Bielik (CTO), both experienced AI researchers who spent years exploring this problem and coming up with groundbreaking solutions. They are joined by Prof. Andreas Krause, a world-renowned machine learning expert and the chairman of ETH’s new AI Center.

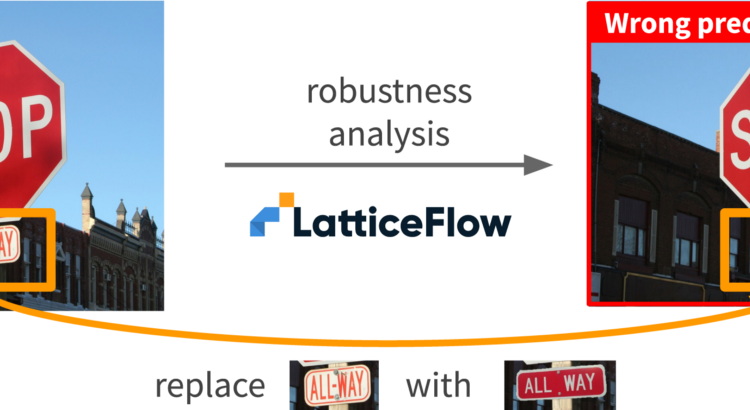

What makes LatticeFlow’s technology so compelling is its holistic approach, which is rooted in deep research and combined with a fundamental understanding of real-world needs. LatticeFlow can assess an ML model by automatically discovering failure modes that might lead to issues. It then lets AI engineers focus on the most relevant issues and enables them to test the model further. Finally, it can generate synthetic training datasets that make the model more robust. To make it universally usable for AI practitioners, LatticeFlow can work with any of the usual ML frameworks such as TensorFlow or PyTorch.

LatticeFlow’s early customers include the Swiss railway system (SBB), the German Federal Office for Information Security and the US Army. This variety of customers illustrates the broad scope of use cases in which the company’s technology can be used.

The Federal Office for Information Security recently published a report based on LatticeFlow’s technology that demonstrates typical problems in a very compelling way. The report examines the reliability of ML models that are designed to detect traffic signs, obviously a crucial functionality for self-driving cars. The results are quite shocking: Even the most advanced models can drop in accuracy from 99.9%+ under normal conditions to around 30% through something as simple as a sticker that someone put on a sign. It is this kind of unexpected edge case that LatticeFlow is designed to discover and fix.
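To make the idea of a "clean versus perturbed" accuracy gap concrete, here is a minimal sketch in plain Python. It is not LatticeFlow's method and not the setup used in the report: `toy_model`, `apply_sticker`, `accuracy` and the tiny 4x4 "images" are all hypothetical stand-ins, chosen only to show how one might measure the drop in accuracy caused by a sticker-like patch.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]  # grayscale pixels in [0, 1], stored row by row


def toy_model(image: Image) -> int:
    """Hypothetical stand-in classifier: 1 if the image is bright on average, else 0."""
    pixels = [p for row in image for p in row]
    return 1 if sum(pixels) / len(pixels) > 0.5 else 0


def apply_sticker(image: Image, top: int, left: int, size: int, value: float = 0.0) -> Image:
    """Return a copy of the image with a size x size square overwritten by `value`."""
    patched = [row[:] for row in image]  # copy so the original stays untouched
    for r in range(top, min(top + size, len(patched))):
        for c in range(left, min(left + size, len(patched[r]))):
            patched[r][c] = value
    return patched


def accuracy(model: Callable[[Image], int], images: Sequence[Image], labels: Sequence[int]) -> float:
    """Fraction of images for which the model's prediction matches the label."""
    correct = sum(1 for img, y in zip(images, labels) if model(img) == y)
    return correct / len(labels)


def uniform_image(value: float, side: int = 4) -> Image:
    """Build a side x side image where every pixel has the same brightness."""
    return [[value] * side for _ in range(side)]


# Toy dataset (an assumption for illustration): two dark and two bright images.
images = [uniform_image(0.2), uniform_image(0.2), uniform_image(0.8), uniform_image(0.8)]
labels = [0, 0, 1, 1]

clean_acc = accuracy(toy_model, images, labels)

# Cover a 3x3 corner of every image with a black "sticker" and evaluate again.
stickered = [apply_sticker(img, top=0, left=0, size=3) for img in images]
patched_acc = accuracy(toy_model, stickered, labels)

print(f"clean accuracy:   {clean_acc:.2f}")
print(f"patched accuracy: {patched_acc:.2f}")
print(f"accuracy drop:    {clean_acc - patched_acc:.2f}")
```

In this toy setup the patch flips every bright image to the wrong class while the dark images are unaffected. A real evaluation would run the same comparison with a trained model and many realistic perturbations rather than a single hand-picked patch.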

Apart from outright model failure, AI reliability also has more subtle aspects. The question of unwanted bias arises frequently in AI, for example in image recognition systems that don’t react in the same way to different skin colors. LatticeFlow helps detect and fix such problems, giving it a societal significance that goes well beyond purely technical reliability.
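One simple way to surface this kind of bias is to compare a model's accuracy across subgroups of the test data. The sketch below is illustrative only and does not describe how LatticeFlow works: the function names, the group labels `"A"` and `"B"`, and the toy predictions are all assumptions made for the example.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def accuracy_by_group(
    predictions: Sequence[int],
    labels: Sequence[int],
    groups: Sequence[Hashable],
) -> Dict[Hashable, float]:
    """Compute accuracy separately for each subgroup of examples."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for pred, label, group in zip(predictions, labels, groups):
        total[group] += 1
        if pred == label:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def largest_gap(per_group: Dict[Hashable, float]) -> float:
    """Difference between the best and worst per-group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Toy data (hypothetical): the model is always right on group A
# but only right half of the time on group B.
labels      = [1, 0, 1, 0, 1, 0, 1, 0]
predictions = [1, 0, 1, 0, 1, 1, 0, 0]
groups      = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(predictions, labels, groups)
gap = largest_gap(per_group)

for group, acc in sorted(per_group.items()):
    print(f"group {group}: accuracy {acc:.2f}")
print(f"largest accuracy gap: {gap:.2f}")
```

A large gap does not explain why the model behaves differently for one group, but it flags where to look, for example at how well that group is represented in the training data.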