(Originally published on LinkedIn, December 2020)

I had two meetings this week with fellow risk professionals: one with an audience from my home country (Indonesia) and the other with a more diverse group (from the US and HK). The subject was more or less the same: technological improvements in the financial sector, especially those related to AI/ML. In both meetings, my gut feeling was that the main message both audiences believed in is that technological improvement is a necessary means of survival for any financial sector company right now, an idea I also support. Innovation is necessary. However, I kept voicing one concern in both meetings: how good is our understanding of our own models, really, while we are busy promoting AI/ML implementation?

Several years ago, when I started Colossus CWS together with two other co-founders, we were just playing around with the idea of “How cool would it be if we let computers work for us, especially in trading!”. We were using TradingView back then and had already coded several pieces when we learnt that its backtesting platform had some limitations, mostly related to difficulties importing and exporting data. We then started using a demo account at a prominent broker to test strategies, and things started to move. We began getting great backtesting results by combining our knowledge of trading, quantitative finance, and machine learning.

In our first year, the trading algo appeared to run well for the first several months, then it started making losses. Fortunately, it was all done in paper trading. This experience taught us how dangerous it can be to rely solely on backtesting performance. From that moment, we began to read more and more scientific material that could support our work, from machine learning and optimization down to various trading strategy possibilities (e.g. relative value trading). By the end of 2019, we had 3 working trading algos with satisfying and positive results, until Coronavirus struck in Q1 2020.

We decided to shut down all 3 trading algos that we report to potential customers and use the time to analyze how our trading algos behave without any manual intervention from us. That unattended run has started to show stable and promising results in recent weeks. As an illustration of my confidence, our flagship Gen3 trading algo still ended up flat YtD after we shut it down in Q1 2020, and the “no-intervention” run survived, with its demo account balance currently at around 72.5k against a starting balance of around 100k at the beginning of this year. This removed the doubt about a completely wiped-out account scenario, even when running without supervision, with no stop-loss, with leverage up to 12x, and in the midst of a “once a century” kind of event. I am now very confident that the Colossus team will thrive in the upcoming years thanks to this “lessons learned” moment.

So, what is there to share from this experience? Both the trading algo experience and the two meetings I had this week reminded me of a famous quote from George Box, which led me to write this article:

“All models are wrong, some are useful”

In fact, I wanted to put this quote as the header of this article, but I found another quote in quite a similar spirit, by Pablo Picasso. When I replaced the word “art” with “model” and the word “artist” with “modeller”, it became:

We all know that model is not truth. Model is a lie that makes us realize truth, at least the truth that is given us to understand. The modeller must know the manner whereby to convince others of the truthfulness of his lies.

Though it has quite a similar spirit to the famous Box quote that all modellers normally know, this second quote, once reworded to our context, gave me something of a chill, specifically at the part “must know the manner whereby to convince others of the truthfulness of his lies”. This part reminds me of the weaknesses in economic/finance models, even in very traditional assumptions like R.E.M. (Rational Economic Man, not the band), where some of these weaknesses have even led us into recessions (the Tech Bubble in 2000, MBS in 2008). This, I believe, is something all modellers should know, including those currently active in the AI/ML field.

If you have watched the documentary AlphaGo, you may recall a statement made by David Silver, AlphaGo’s lead researcher, when analyzing the 2016 match between AlphaGo and Lee Sedol, Go World Champion:

For Move 37, the probability was one in ten thousand. In other words, AlphaGo knew this was not a move that a professional Go player would make

On the one hand, this statement expresses how powerful AlphaGo’s analytical capability is, enough for it to win against Lee Sedol. On the other hand, it also reveals that the system can be so unpredictable that humans may not be able to know how such an option/decision was chosen. Lee, at one point, even explained in this match that he could not believe he was playing against a machine, as that move felt so “human”…

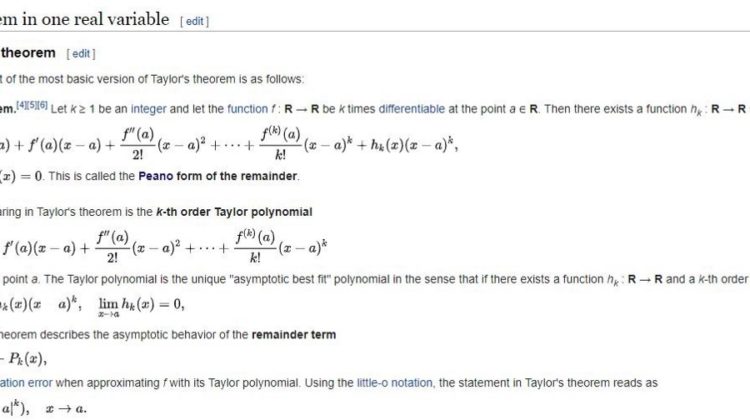

Now, back to my discussions this week with my fellow risk professionals. I can confirm that AI/ML is indeed a game changer in this industry: it has improved (and will further improve) lots of things. However, just like having an Excel template to automate manual tasks, there is always a moment when someone asks “Do you know what this line is for? It doesn’t look important to me…”, and the most senior person in the team answers “Don’t change anything! If it works, then IT WORKS!”. If such a moment starts to feel familiar in your data science team, risk modelling team, or whatever team deals with data or models in your company, you need to remind yourself that even Taylor’s polynomial expansion formula comes with an error term (courtesy of Wikipedia). So, just to remind you: if you have ever dealt with such a situation, I am afraid your team is becoming a cult, no matter how sophisticated the model is.
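
For reference, here is one standard way to write Taylor's expansion with its error term made explicit. This is a sketch in my own notation (the symbols $f$, $a$, $n$, $\xi$ and $R_n$ are assumptions for illustration, not taken from the original figure), and it assumes $f$ is $n+1$ times differentiable between $a$ and $x$:

$$
f(x) = \sum_{k=0}^{n} \frac{f^{(k)}(a)}{k!}\,(x-a)^{k} + R_n(x),
\qquad
R_n(x) = \frac{f^{(n+1)}(\xi)}{(n+1)!}\,(x-a)^{n+1}
\quad \text{for some } \xi \text{ between } a \text{ and } x.
$$

The polynomial part is the "model"; $R_n(x)$ is what the model leaves out. It is generally not zero, and it tends to grow as $x$ moves away from the point $a$ where the approximation was built.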

For more information about me and my professional work, please visit www.finrisk.eu