Multi-label classification sounds similar to multi-class classification but is completely different. It is one of those terms that makes complete sense when you read about it, but the moment you try to implement it your brain goes, multi… what? What was that? Well, I can assure you that feeling confused is quite normal: there are a lot of fancy terms in machine learning, and the purpose of this blog is to clarify one of them.

Classification is the process of identifying an object from a set of objects. For example, when a baby stops crying after being picked up by its mother, its brain has identified its mother among a set of other people. That’s it. That is classification. Why does ML need to hype everything up? In our daily lives, we classify thousands of objects. We tell ripe bananas from unripe ones. We tell a sports car from an average sedan. These things come naturally to us; our brain has evolved over the years to be extremely proficient at them. Classification is the most primitive behaviour of any living being, including us: we need to classify things in our surroundings to survive. I hope I have addressed the elephant in the room, and now whenever you see the word classification in a research paper, post, etc., you won’t freak out.

Now you’ll say, “I get that it’s not a big deal for human beings, but what about machines?” To that my answer’s gonna be: it ain’t that much different or convoluted. Machines follow the same principle. You teach the machine from scratch that this object is a banana and that object is an apple, just like you were taught when you were young. Your brain is powerful enough to learn from demonstration without any programming language or framework 😀, and yet some people say that machines are gonna take over the world. It ain’t gonna happen any time soon. We are too intelligent to get replaced. Coming back to the topic, to teach the so-called “machines” how to classify objects, we need to give them data.

Let’s take the following example:

We can see that the first two files have a single label each, but the third file has two labels. Is that even possible? Yes, it is, and the following image shows why.

In this image, you can clearly see that the labels are correct: there is indeed a person and a horse. You’ll say, “That’s alright, Gautam, but how are you gonna train it?”

Training it is quite easy, actually! First, you need to make sense of the labels. For a moment, forget that we have multiple labels. Let’s assume we have three images:

- One of a cat

- One of a dog

- One of a human

Now suppose I have prepared a machine learning model. It takes in an image and spits out three probabilities, [p1, p2, p3]:

p1: probability that the image is a cat

p2: probability that the image is a dog

p3: probability that the image is a human
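The post doesn’t say how the model turns an image into p1, p2 and p3. A common choice for this single-label setup, assumed here, is a softmax over the model’s raw scores (logits), which makes the three probabilities sum to 1. The scores below are made up, and the `CLASSES` ordering is hypothetical.

```python
import math

# Hypothetical class order matching the outputs [p1, p2, p3].
CLASSES = ["cat", "dog", "human"]

def softmax(scores):
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    # Subtracting the max score avoids overflow in exp(); the result is unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up raw scores standing in for a real model's output.
probs = softmax([2.0, 0.5, -1.0])

# The prediction is the class with the highest probability.
predicted = CLASSES[probs.index(max(probs))]
print(predicted)  # cat
```

For the multi-label case, models typically apply a separate sigmoid to each score instead, so the probabilities no longer have to sum to 1.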

Now comes the interesting part. How are you gonna compare these values with your labels in order to do backpropagation? Mind you, your labels are strings like “Dog”, “Cat”, etc. How are you gonna do backpropagation on them?

The answer is that you need to convert these labels to something that the machine understands.
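Once each label is a number, comparing it with the model’s output becomes plain arithmetic. A minimal sketch, assuming the outputs are ordered cat, dog, human and that the loss is cross-entropy (a standard choice the post hasn’t named): the string label is mapped to an index, and the loss is the negative log of the probability the model gave to that index. The loss is small when the model is confident in the right class, and it is differentiable with respect to the probabilities, which is what backpropagation needs.

```python
import math

# Hypothetical fixed ordering: index 0 -> p1, 1 -> p2, 2 -> p3.
CLASSES = ["cat", "dog", "human"]
LABEL_TO_INDEX = {name: i for i, name in enumerate(CLASSES)}

def cross_entropy(probs, label):
    """Loss for one example: -log of the probability given to the true class."""
    idx = LABEL_TO_INDEX[label.lower()]  # e.g. "Dog" -> 1
    return -math.log(probs[idx])

probs = [0.1, 0.7, 0.2]  # made-up model output [p1, p2, p3]
print(cross_entropy(probs, "Dog"))  # -log(0.7), about 0.357
print(cross_entropy(probs, "Cat"))  # -log(0.1), about 2.303: a worse guess costs more
```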

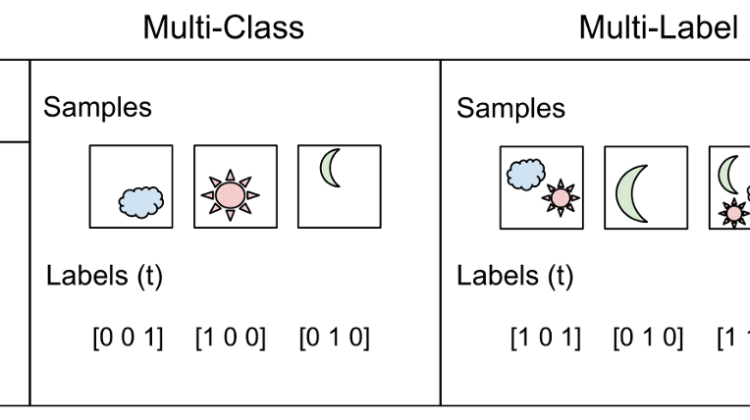

One-hot encoding is the process by which you convert these labels into something the machine understands, i.e. numbers. The transformation will be as follows:
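Here is a minimal plain-Python sketch of that transformation, assuming the same hypothetical class order (cat, dog, human). Each single label becomes a vector with one 1 and zeros elsewhere. For the multi-label case from earlier, where one image carries two labels, the same idea extends naturally to a vector with a 1 at every label present, often called multi-hot encoding.

```python
# Hypothetical class order; position i in each vector stands for CLASSES[i].
CLASSES = ["cat", "dog", "human"]

def one_hot(label):
    """Single-label case: a 1 at the label's position, 0 everywhere else."""
    vec = [0] * len(CLASSES)
    vec[CLASSES.index(label.lower())] = 1
    return vec

def multi_hot(labels):
    """Multi-label case: a 1 at every position whose class is present."""
    vec = [0] * len(CLASSES)
    for label in labels:
        vec[CLASSES.index(label.lower())] = 1
    return vec

print(one_hot("Dog"))               # [0, 1, 0]
print(multi_hot(["Cat", "Human"]))  # [1, 0, 1]
```

The vector lines up position by position with the model’s output [p1, p2, p3], which is what makes a numeric comparison possible.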