WHAT IS A NEURAL NETWORK?

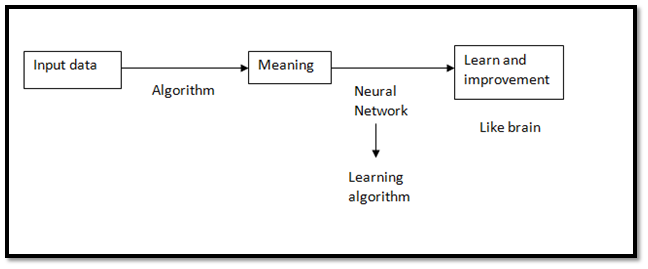

A neural network is a system, implemented in software or hardware, that works similarly to the neurons of the human brain. Neural networks are closely tied to machine learning and deep learning, both of which are branches of AI.

WHAT ARE ARTIFICIAL NEURAL NETWORKS (ANN)?

The inventor of the first neurocomputer, Dr. Robert Hecht-Nielsen, defines a neural network as:

“…a computing system made up of a number of simple, highly interconnected processing elements, which process information by their dynamic state response to external inputs.”

An artificial neural network (ANN) is the piece of a computing system designed to simulate the way the human brain analyzes and processes information. It is the foundation of artificial intelligence (AI) and solves problems that would prove impossible or difficult by human or statistical standards. ANNs have self-learning capabilities that enable them to produce better results as more data becomes available.

HISTORY

As computers advanced into their infancy in the 1950s, it became possible to begin modeling the rudiments of these theories about human thought. Nathaniel Rochester from the IBM research laboratories led the first effort to simulate a neural network. That first attempt failed, but later attempts were successful. In 1959, Bernard Widrow and Marcian Hoff of Stanford developed models they called ADALINE and MADALINE. These models were named for their use of Multiple ADAptive LINear Elements. MADALINE was the first neural network applied to a real-world problem: it is an adaptive filter that eliminates echoes on phone lines. This neural network is still in commercial use.

A SINGLE NEURON

The basic unit of computation in a neural network is the neuron, often called a node or unit. It receives input from other nodes or from an external source and computes an output. Each input has an associated weight (w), which is assigned on the basis of its relative importance compared with the other inputs.
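
The weighted combination of inputs described above can be sketched in a few lines. This is a minimal illustration, not a definitive implementation: the function name `weighted_sum` and the bias term `bias` are assumptions (the text mentions only inputs and weights, but most neuron formulations add a bias).

```python
def weighted_sum(inputs, weights, bias=0.0):
    """Multiply each input by its weight, add the results, then add a bias.

    The bias term is an assumption; the article itself only mentions weights.
    """
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Two inputs; the second weight is three times larger, so that input
# has more influence on the result.
z = weighted_sum([1.0, 2.0], [0.5, 1.5], bias=-1.0)
print(z)  # 1*0.5 + 2*1.5 - 1 = 2.5
```

Larger weights let an input pull the sum further, which is how "relative importance" is expressed numerically.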

ACTIVATION FUNCTIONS

The output Y from the neuron is computed as shown in the diagram of a single neuron. The function f is non-linear and is called the activation function. Its purpose is to introduce non-linearity into the output of a neuron. This is important because most real-world data is non-linear, and we want neurons to learn these non-linear representations. Every activation function (or non-linearity) takes a single number and performs a fixed mathematical operation on it. Here are some important activation functions:

· Sigmoid: takes a real-valued input and squashes it to the range between 0 and 1, using σ(x) = 1 / (1 + e^(−x))

· Tanh: takes a real-valued input and squashes it to the range [-1, 1], using tanh(x) = 2σ(2x) − 1

· ReLU: ReLU stands for Rectified Linear Unit. It takes a real-valued input and thresholds it at zero (replaces negative values with zero), i.e. f(x) = max(0, x)
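
The three activation functions above, and the neuron output Y = f(weighted sum), can be sketched with the standard library alone. The function names and the `neuron_output` helper are illustrative assumptions, not part of any particular library.

```python
import math


def sigmoid(x):
    # Squashes any real input into (0, 1). Split into two branches so
    # math.exp never receives a large positive argument and overflows.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x):
    # Squashes any real input into (-1, 1).
    return math.tanh(x)


def relu(x):
    # Thresholds at zero: negative inputs become 0, positive ones pass through.
    return max(0.0, x)


def neuron_output(inputs, weights, bias, activation):
    # Y = f(w1*x1 + w2*x2 + ... + b), with f chosen by the caller.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


for f in (sigmoid, tanh, relu):
    print(f.__name__, [round(f(v), 3) for v in (-2.0, 0.0, 2.0)])
# sigmoid [0.119, 0.5, 0.881]
# tanh [-0.964, 0.0, 0.964]
# relu [0.0, 0.0, 2.0]
```

Swapping the `activation` argument is all it takes to change the neuron's non-linearity; the weighted sum stays the same.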

BASIC STRUCTURE OF ANNS

ANNs are based on the assumption that the working of the human brain can be imitated by making the right connections, using silicon and wires in place of living neurons and dendrites.

The human brain consists of about 86 billion nerve cells called neurons. Each is connected to thousands of other cells by axons. Dendrites accept stimuli from the external environment or inputs from sensory organs; these inputs create electric impulses, which travel quickly through the neural network. A neuron can then either pass the message on to other neurons to handle the issue or not send it forward.

ANNs are composed of multiple nodes, which imitate the biological neurons of the human brain. The nodes are connected by links, and they interact with one another. Each node can take input data and perform simple operations on it. The results of these operations are passed to other neurons. The output of each node is called its activation or node value.

A weight is associated with each link. ANNs learn by altering these weight values.

ARCHITECTURE OF ARTIFICIAL NEURAL NETWORKS:

To understand the architecture of an artificial neural network, we first need to know what a typical neural network contains. A typical neural network contains a large number of artificial neurons (hence the name artificial neural network), called units, arranged in a series of layers. Let us look at the different kinds of layers in an artificial neural network:

Input layer:

The input layer contains the artificial neurons (units) that receive input from the outside world. This is the data the network will learn from, recognize, or otherwise process.

Output layer:

The output layer contains units that respond to the information fed into the system and show whether the network has learned a task.

Hidden layer:

The hidden layers sit between the input layer and the output layer. The job of a hidden layer is to transform the input into something meaningful that the output layer can use.

Most artificial neural networks are fully interconnected: each hidden layer is connected to the neurons in the layer before it and to the neurons in the layer after it, leaving nothing unconnected. This makes a complete learning process possible, and learning takes place as the weights inside the network are updated after each iteration.
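
A fully connected forward pass through input, hidden, and output layers can be sketched as below. The layer sizes, weights, and biases are hypothetical values chosen only for illustration, and the choice of ReLU for the hidden layer and an identity output is an assumption, not something the text prescribes.

```python
def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer.

    weights[j][i] is the weight on the link from input i to unit j, so
    every unit in this layer receives every value from the previous layer.
    """
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def relu(x):
    return max(0.0, x)


def identity(x):
    return x


# Hypothetical network: 3 input units -> 2 hidden units -> 1 output unit.
hidden_w = [[0.2, -0.4, 0.1],
            [0.5, 0.3, -0.2]]
hidden_b = [0.0, 0.1]
output_w = [[1.0, -1.0]]
output_b = [0.05]

x = [1.0, 2.0, 3.0]                               # input layer: raw data
h = dense_layer(x, hidden_w, hidden_b, relu)      # hidden layer
y = dense_layer(h, output_w, output_b, identity)  # output layer
print(h, y)
```

Training, in this picture, means changing the numbers in `hidden_w`, `hidden_b`, `output_w`, and `output_b` after each iteration.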

There are two artificial neural network topologies:

· Feedforward: the information flow is unidirectional. A unit sends information to other units from which it does not receive any information. There are no feedback loops. Feedforward networks are used in pattern generation, recognition, and classification. They have fixed inputs and outputs.

· Feedback: feedback loops are allowed. Feedback networks are used in content-addressable memories.
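
The difference between the two topologies can be sketched with a single unit. This is a generic recurrent loop, not a content-addressable memory such as a Hopfield network; the weights and the initial fed-back value of 0 are illustrative assumptions.

```python
import math


def feedforward_step(x, w_in, b):
    # Output depends only on the current input: nothing loops back.
    return math.tanh(w_in * x + b)


def feedback_run(xs, w_in, w_back, b):
    """Run one unit whose previous output is fed back as an extra input.

    w_back is the weight on the feedback loop. With w_back = 0 the unit
    behaves exactly like feedforward_step applied to each input separately.
    """
    state = 0.0  # assumed initial value of the fed-back signal
    outputs = []
    for x in xs:
        state = math.tanh(w_in * x + w_back * state + b)
        outputs.append(state)
    return outputs


seq = [1.0, 1.0, 1.0]
print([round(feedforward_step(x, 0.8, 0.0), 3) for x in seq])
print([round(y, 3) for y in feedback_run(seq, 0.8, 0.5, 0.0)])
```

The feedforward unit gives the same answer for the same input every time, while the feedback unit's answer also depends on what it produced before.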

In the topology diagrams shown, each arrow represents a connection between two neurons and indicates the pathway for the flow of data. Each connection has a weight, a real number that scales the signal passed between the two neurons.

If the network generates a “good or desired” output, there is no need to adjust the weights. However, if the network generates a “poor or undesired” output, that is, an error, the system alters the weights to improve subsequent results.
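
The text does not name a specific update rule. A minimal sketch of the idea, assuming the classic perceptron learning rule (one choice among many; modern networks usually use gradient descent with backpropagation), trained on the logical AND function as a toy data set:

```python
def step(z):
    # Threshold activation: fire (1) if the weighted sum is non-negative.
    return 1 if z >= 0 else 0


def train_perceptron(samples, lr=1.0, epochs=10):
    """Perceptron rule on 2-input samples of ((x1, x2), target)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), target in samples:
            y = step(w[0] * x1 + w[1] * x2 + b)
            error = target - y
            if error == 0:
                continue  # "good" output: leave the weights alone
            # "poor" output: move each weight in the direction that reduces the error
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
            mistakes += 1
        if mistakes == 0:
            break  # a full pass with no errors: training has converged
    return w, b


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print([step(w[0] * x1 + w[1] * x2 + b) for (x1, x2), _ in AND])  # [0, 0, 0, 1]
```

With integer inputs and a learning rate of 1.0, every weight stays a whole number, so the threshold comparison is exact and the result does not depend on floating-point rounding.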

Artificial neural networks are capable of learning, but they must be trained. There are several learning strategies:

· Supervised Learning: It involves a teacher who already knows the correct answers. For example, a teacher gives examples while teaching so that students understand a lesson better. In the same way, an ANN makes guesses while recognizing patterns, and the labeled training data provides the ANN with the correct answers so that it can correct itself.

· Unsupervised Learning: It is used when there is no sample data set with known answers. Searching for a hidden pattern is one example. Clustering, which divides elements into groups according to an unknown pattern, is carried out using only the existing data set.

· Reinforcement Learning: It is a strategy built on observation. The ANN makes a decision by observing its environment. If the observation is negative, the network adjusts its weights so that it makes a different decision next time.
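
The clustering idea under unsupervised learning can be illustrated with winner-take-all competitive learning, one neural approach to clustering among several. This is a minimal 1-D sketch: the data points, starting weights, and learning rate are made-up values for illustration only.

```python
def competitive_learning(points, prototypes, lr=0.5, epochs=10):
    """Winner-take-all competitive learning on 1-D data.

    Each prototype is the weight of one output unit. For every point, only
    the unit whose weight is closest "wins" and moves toward that point.
    No target labels are used anywhere.
    """
    w = list(prototypes)
    for _ in range(epochs):
        for x in points:
            winner = min(range(len(w)), key=lambda j: abs(x - w[j]))
            w[winner] += lr * (x - w[winner])
    return w


# Unlabeled numbers that happen to form two groups, around 1 and around 9.
data = [0.8, 1.1, 1.3, 8.7, 9.2, 9.0]
centers = competitive_learning(data, prototypes=[0.0, 5.0])
print([round(c, 2) for c in centers])
```

Each unit's weight ends up inside one group of points, so the units have discovered the two clusters without ever being told they exist.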

ADVANTAGES OF NEURAL NETWORKS

Some of the advantages of neural networks are listed below:

· Self-Organization: An ANN creates its own representation of the information it receives during learning.

· Real Time Operation: ANN computations can be carried out in parallel, and special hardware devices are manufactured to take advantage of this capability.

· Adaptive Learning: The ability to learn how to perform tasks based on the data given for training.

· Fault Tolerance via Redundant Information Coding: Partial destruction of a network leads to a corresponding degradation of performance. However, some networks can retain their capabilities even after major network damage.

From a technical point of view, one of the biggest challenges is the amount of time it takes to train networks, which often require a considerable amount of computing power for complex tasks. The second issue is that neural networks are black boxes: the user feeds in data and receives answers. Users can fine-tune the answers, but they have no access to the exact decision-making process. Researchers are actively working on this problem; even so, artificial neural networks already play a very big role in changing day-to-day life.

Artificial neural networks are paving the way for life-changing applications in all sectors of the economy. Artificial intelligence platforms built on ANNs are disrupting traditional ways of doing things. From translating web pages into other languages, to having a virtual assistant order groceries online, to conversing with chatbots to solve problems, AI platforms are simplifying transactions and making services accessible to all at low cost.

Image recognition was one of the first areas where neural networks were successfully applied, but the technology has expanded to many other areas, such as:

· Natural language processing, translation and language generation.

· Drug discovery and development.

· Stock market prediction.

· Delivery driver route planning and optimization.

· Chatbots.