Let's start with an example. Suppose we train a classifier on an image dataset of cats and dogs and find that it distinguishes a cat from a dog with an accuracy of 92%. Now suppose we apply this classifier to real-life images. Not every image we encounter will show a cat or a dog; some may show a horse or a cow. Yet when we feed such an image to the classifier, it will still label the horse as either a cat or a dog. It cannot say that the image is neither a dog nor a cat.

Such problems are very common, and we will almost certainly encounter them whenever a classifier is used on real-world data.

So what is the solution to these problems? It is Open World Classification.

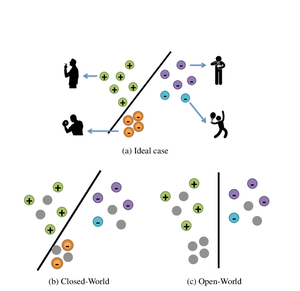

In simple words, Open World Classification means that the classifier not only classifies examples from the classes it has seen, i.e., the classes present in the training dataset, but can also reject examples from unseen classes, such as the horse in the example above.

Discovering the hidden classes of rejected data is difficult without prior knowledge. However, we do have the data from the seen classes, which can tell us what kind of similarity/difference is expected for examples from the same class or from different classes. It is reasonable to assume that this knowledge can be transferred to the rejected examples and used to discover the hidden unseen classes in them.

So our main objective is to build a model that can either assign each example to a seen class or reject it as unseen. To solve this problem, we first propose a neural network model, the Open Classification Network (OCN), for seen-class classification and unseen-class rejection.

Open Classification Network (OCN)

We will use the DOC algorithm for our OCN. Although DOC was designed for open text classification, it also performs well on images and is reported to be significantly better than the OpenMax method for open image classification [1]. OCN contains a CNN representation learning part that is shared with the other networks, followed by a fully-connected layer and a 1-vs-rest layer of sigmoid functions. It does not use the usual softmax as the output layer. Softmax produces a normalized probability distribution over the seen classes, so some seen class always receives a high share of the probability. It therefore has no built-in way to reject an example and is more suitable for closed-world classification.
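To make the difference between the two output layers concrete, here is a minimal sketch in plain Python. The logits are made-up numbers for a hypothetical horse image scored by a cat/dog model; they are assumptions for illustration, not outputs of a real network.

```python
import math

def softmax(logits):
    """Normalized distribution over the seen classes (always sums to 1)."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    """Independent 1-vs-rest probability for a single class."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical logits for the seen classes [cat, dog] on a horse image:
# the network finds little evidence for either class.
horse_logits = [-4.0, -3.0]

softmax_probs = softmax(horse_logits)
sigmoid_probs = [sigmoid(z) for z in horse_logits]

# Softmax is forced to spread all probability over cat and dog,
# so one of them still looks like a confident prediction.
print("softmax:", softmax_probs)

# The sigmoid outputs are independent, so both can be low at the same time,
# which leaves room to say "neither".
print("sigmoid:", sigmoid_probs)
```

With softmax, the larger of two weak logits still wins most of the probability mass. With independent sigmoids, both class probabilities stay below 0.5, which is the signal a 1-vs-rest layer can use for rejection.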

The 1-vs-rest layer can naturally reject examples from unseen classes: if a test example cannot be assigned to any seen class under a 0.5 probability threshold, it is rejected. However, DOC does not use 0.5 as the threshold for every seen class. It uses a much larger probability threshold for each class, computed using the idea of outlier detection under a Gaussian distribution.
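The thresholding idea can be sketched as follows. This is one reading of the DOC approach and not the authors' code. It assumes that, for each seen class, the sigmoid outputs on that class's own training examples are treated as one half of a Gaussian centered at 1, that the standard deviation is estimated after mirroring those points around 1, and that the threshold is `max(0.5, 1 - alpha * sigma)` with `alpha = 3`. The function names, the `alpha` default, and the sample probabilities are all hypothetical.

```python
import math

def doc_threshold(probs_for_class, alpha=3.0):
    """Per-class rejection threshold (assumed form: max(0.5, 1 - alpha * sigma)).

    probs_for_class: sigmoid outputs of class i on training examples of class i.
    """
    # Mirror every point around 1 so the data looks like a full Gaussian
    # with mean 1, then estimate the standard deviation around that mean.
    mirrored = [2.0 - p for p in probs_for_class]
    points = list(probs_for_class) + mirrored
    variance = sum((x - 1.0) ** 2 for x in points) / len(points)
    sigma = math.sqrt(variance)
    # Never go below the plain 0.5 threshold.
    return max(0.5, 1.0 - alpha * sigma)

def classify_or_reject(probs, thresholds, labels):
    """Reject if every class falls below its threshold, else pick the top class."""
    if all(p < t for p, t in zip(probs, thresholds)):
        return "rejected"
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best]

# Made-up sigmoid outputs on training examples of each seen class.
cat_train_probs = [0.99, 0.97, 0.98, 0.95]  # tight cluster -> strict threshold
dog_train_probs = [0.90, 0.60, 0.70, 0.80]  # wide spread -> falls back to 0.5

labels = ["cat", "dog"]
thresholds = [doc_threshold(cat_train_probs), doc_threshold(dog_train_probs)]

horse_result = classify_or_reject([0.62, 0.10], thresholds, labels)
cat_result = classify_or_reject([0.97, 0.05], thresholds, labels)
print(thresholds, horse_result, cat_result)
```

In this sketch, a horse image that scores 0.62 for "cat" would pass a plain 0.5 threshold, but it is rejected because the cat class has a tight training distribution and therefore a threshold close to 1.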

Open-world learning therefore requires a model that can not only classify examples from the seen classes but also reject examples from unseen classes. It is also important to identify the hidden unseen classes among the rejected examples, which enables the system to learn these new classes automatically rather than requiring manual labeling.

References

[1] Lei Shu, Hu Xu, Bing Liu. Unseen Class Discovery in Open-World Classification. Department of Computer Science, University of Illinois at Chicago. {lshu3, hxu48, liub}@uic.ed