Tackling class imbalance

We dealt with problems where we had a similar number of data points in all our classes. In the real world, we might not be able to get data in such an orderly fashion. Sometimes, the number of data points in one class is a lot more than the number of data points in other classes. If this happens, then the classifier tends to get biased. The boundary won’t reflect the true nature of your data, just because there is a big difference in the number of data points between the two classes. Therefore, it is important to account for this discrepancy and neutralize it so that our classifier remains impartial.

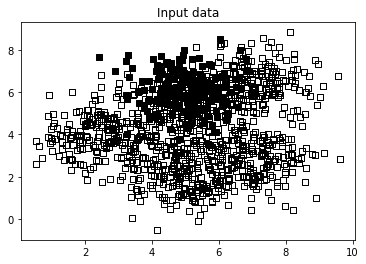

we will use a dataset, named data_multivar_imbalance.txt , in

which there are three values for each line; the first two represent the coordinates of the point, the third, the class to which the point belongs. Our aim is, once again, to build a classifier, but this time, we will have to face a data-balancing problem.

The data file is available here: https://github.com/appyavi/Dataset

Let’s see how to tackle class imbalance:

- Let’s import the libraries:

import numpy as np

import matplotlib.pyplot as plt

from sklearn.svm import SVC

import utilities

2. Let’s load the data ( data_multivar_imbalance.txt ):

input_file = 'data_multivar_imbalance.txt'

X, y = utilities.load_data(input_file)

3. Let’s visualize the data

# Separate the data into classes based on 'y'

class_0 = np.array([X[i] for i in range(len(X)) if y[i]==0])

class_1 = np.array([X[i] for i in range(len(X)) if y[i]==1])

# Plot the input data

plt.figure()plt.scatter(class_0[:,0], class_0[:,1], facecolors='black',

edgecolors='black', marker='s')

plt.scatter(class_1[:,0], class_1[:,1], facecolors='None',

edgecolors='black', marker='s')

plt.title('Input data')

plt.show()

4. Let’s build an SVM with a linear kernel.

from sklearn import model_selection

X_train, X_test, y_train, y_test = model_selection.train_test_split(X, y, test_size=0.25, random_state=5)

params = {'kernel': 'linear'}

classifier = SVC(**params, gamma='auto')

classifier.fit(X_train, y_train)

utilities.plot_classifier(classifier, X_train, y_train, 'Training dataset')

plt.show()

5. Let’s print a classification report:

# Evaluate classifier performancefrom sklearn.metrics import classification_reporttarget_names = ['Class-' + str(int(i)) for i in set(y)]

print("n" + "#"*30)

print("nClassifier performance on training datasetn")

print(classification_report(y_train, classifier.predict(X_train), target_names=target_names))

print("#"*30 + "n")

You might wonder why there’s no boundary here! Well, this is because the

classifier is unable to separate the two classes at all, resulting in 0% accuracy for Class-0. You will also see a classification report.

7. As we expected, Class-0 has 0% precision, so let’s go ahead and fix this! replace params = {‘kernel’: ‘linear’} by params = {‘kernel’: ‘linear’, ‘class_weight’: ‘balanced’}

from sklearn import model_selection

X_train, X_test, y_train, y_test = model_selection.train_test_split(X, y, test_size=0.25, random_state=5)

params = {'kernel': 'linear', 'class_weight': 'balanced'}

classifier = SVC(**params, gamma='auto')

classifier.fit(X_train, y_train)

utilities.plot_classifier(classifier, X_train, y_train, 'Training dataset')

plt.show()

8. The class_weight parameter will count the number of data points in each class to adjust the weights so that the imbalance doesn’t adversely affect the

performance.

# Evaluate classifier performancefrom sklearn.metrics import classification_reporttarget_names = ['Class-' + str(int(i)) for i in set(y)]

print("n" + "#"*30)

print("nClassifier performance on training datasetn")

print(classification_report(y_train, classifier.predict(X_train), target_names=target_names))

print("#"*30 + "n")

9. The classification report:

Class-0 is now detected with nonzero percentage accuracy.

- We have used an SVM classifier to find the best separating boundary between a dataset of points.

- To address a data-balancing problem, we once again used the linear

Kernel method, but we implemented a class_weight keyword in the fit method. - The class_weight variable is a dictionary in the form {class_label: value}, where value is a floating-point number greater than 0 that modifies the C parameter of the class (class_label), setting it with a new value, obtained by multiplying the old C value with that specified in the value attribute (C * value).

C is a hyperparameter that determines the penalty for the incorrect classification of an observation. So, we used weight for the classes to manage unbalanced classes. In this way, we will assign a new value of C to the classes, defined as follows:

Ci = C * wi

Where C is the penalty, wi is a weight inversely proportional to class i’s frequency, and Ci is the C value for class i. This method suggests increasing the penalty to classify the less represented classes so as to prevent them from being outclassed by the most represented class.

In the scikit-learn library, when using SVC, we can set the values for Ci automatically by setting class_weight=’balanced’.