Attention Branch for Various Kernel Sizes, Outperforms SENet

In this story, Selective Kernel Networks (SKNet), by Nanjing University of Science and Technology, Momenta, Nanjing University, and Tsinghua University, is reviewed.

In the visual cortex, the receptive field (RF) sizes of neurons in the same area (e.g., V1 region) are different, which enables the neurons to collect multi-scale spatial information in the same processing stage.

The RF sizes of neurons are not fixed but are modulated by the stimulus.

In this paper:

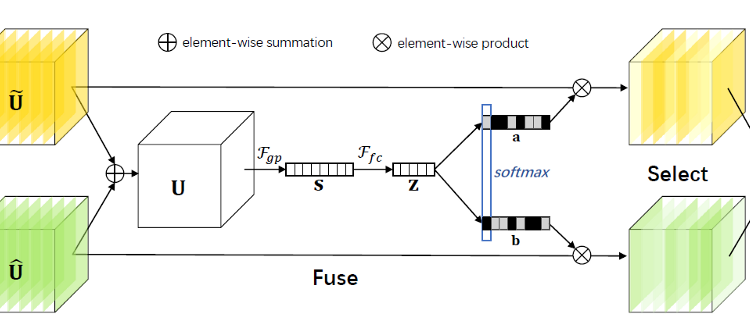

- A building block called the Selective Kernel (SK) unit is designed, in which multiple branches with different kernel sizes are fused using softmax attention that is guided by the information in these branches.

- Different attentions on these branches yield different sizes of the effective receptive fields of neurons in the fusion layer.

- Multiple SK units are stacked into a deep network termed Selective Kernel Networks (SKNets).
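
To make the fusion step in the first bullet more concrete, here is a minimal pure-Python sketch of softmax attention across branches. It is only an illustration under stated assumptions, not the authors' implementation: the names `sk_fuse` and `branch_weights` are hypothetical, feature maps are plain nested lists instead of tensors, the branch outputs are assumed to be already computed by convolutions with different kernel sizes, and the learned layers that produce the attention logits are collapsed into one hand-set matrix per branch.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sk_fuse(branches, branch_weights):
    """Fuse branch outputs with per-channel softmax attention.

    branches:       list of feature maps, each shaped [channels][positions].
    branch_weights: one [channels][channels] matrix per branch (hypothetical
                    stand-in for the learned layers) mapping the pooled
                    descriptor to that branch's attention logits.
    """
    num_branches = len(branches)
    num_channels = len(branches[0])
    num_positions = len(branches[0][0])

    # Gather information from all branches: sum them element-wise,
    # then global-average-pool each channel into a single number.
    descriptor = []
    for c in range(num_channels):
        summed = [
            sum(branches[b][c][i] for b in range(num_branches))
            for i in range(num_positions)
        ]
        descriptor.append(sum(summed) / num_positions)

    # One logit per (branch, channel), computed from the shared descriptor.
    logits = [
        [sum(w * s for w, s in zip(row, descriptor)) for row in weights]
        for weights in branch_weights
    ]

    # Softmax across branches, separately for every channel.
    attention = [
        softmax([logits[b][c] for b in range(num_branches)])
        for c in range(num_channels)
    ]

    # Output is the attention-weighted sum of the branch feature maps.
    fused = [
        [
            sum(attention[c][b] * branches[b][c][i] for b in range(num_branches))
            for i in range(num_positions)
        ]
        for c in range(num_channels)
    ]
    return fused, attention


# Toy input: one channel, two spatial positions, two branches
# (standing in for a small-kernel and a large-kernel convolution output).
small_kernel_out = [[1.0, 3.0]]
large_kernel_out = [[3.0, 5.0]]
zero_weights = [[[0.0]], [[0.0]]]  # all logits are 0, so attention is uniform
fused, attention = sk_fuse([small_kernel_out, large_kernel_out], zero_weights)
print(attention)  # [[0.5, 0.5]]
print(fused)      # [[2.0, 4.0]]
```

With uniform attention the output is simply the average of the two branches. When the logits favor one branch, the output leans toward that branch's kernel size, which is how different attentions give different effective receptive field sizes.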

This is a 2019 CVPR paper with over 200 citations. (Sik-Ho Tsang @ Medium)