Weka makes learning applied machine learning easy and efficient. It is a GUI tool that allows you to load datasets, run algorithms, and design and run experiments whose results are statistically robust enough to publish.

Visit the Weka Download page and locate a version of Weka suitable for your computer (Windows, Mac, or Linux).

Weka requires Java. You may already have Java installed; if not, the download page lists versions of Weka (for Windows) that include Java and will install it for you.

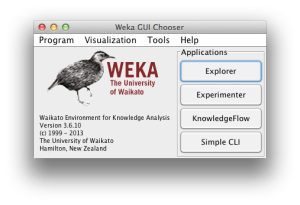

Start Weka. This may involve finding it in your program launcher or double-clicking the weka.jar file. Either way, this will start the Weka GUI Chooser.

The Weka GUI Chooser lets you choose one of the Explorer, the Experimenter, the KnowledgeFlow, or the Simple CLI (command line interface).

Click the “Explorer” button to launch the Weka Explorer.

This GUI lets you load datasets and run classification algorithms. It also provides other features, like data filtering, clustering, association rule extraction, and visualization, but we will not be using these features right now.

Click the “Open file…” button to open a dataset, then double-click the “data” directory.

Weka provides a number of small common machine learning datasets that you can use to practice on.

Select the “iris.arff” file to load the Iris dataset.

The Iris dataset is a famous dataset from statistics and is heavily borrowed by researchers in machine learning. It contains 150 instances (rows) and 4 attributes (columns) and a class attribute for the species of iris flower (one of setosa, versicolor, and virginica).

Now that you have loaded the dataset, it's time to choose a machine learning algorithm to model the problem and make predictions.

Click the “Classify” tab. This is the area for running algorithms against a loaded dataset in Weka.

You will note that the “ZeroR” algorithm is selected by default.

Click the “Start” button to run this algorithm.

The ZeroR algorithm selects the majority class in the dataset and uses it to make all predictions. All three species of iris are equally present in the data, so it picks the first one: setosa. This is the baseline for the dataset and the measure against which all other algorithms can be compared. The result is 33%, as expected: with 3 equally represented classes, predicting the same class for every instance is correct one time in three.
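The baseline arithmetic can be reproduced outside Weka. The following is a minimal sketch in plain Python, not Weka's own implementation: the `zero_r` helper and its first-seen tie-breaking rule are assumptions made for illustration, and the label list only mimics the shape of the Iris class column (50 instances per species).

```python
from collections import Counter


def zero_r(labels):
    """Return the most frequent label; ties go to the label seen first (assumed rule)."""
    counts = Counter(labels)
    # max() keeps the first maximal key, and Counter preserves insertion order.
    return max(counts, key=counts.get)


# Class column shaped like Iris: 50 instances of each species.
labels = ["setosa"] * 50 + ["versicolor"] * 50 + ["virginica"] * 50

prediction = zero_r(labels)
correct = sum(1 for actual in labels if actual == prediction)
accuracy = correct / len(labels)

print(prediction, f"{accuracy:.1%}")  # setosa 33.3%
```

Any classifier worth using on this dataset should beat this one-in-three figure.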

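You will also note that the test options select cross-validation by default, with 10 folds. This means that the dataset is split into 10 parts (folds). The algorithm is trained on 9 of them and assessed on the remaining one, and this is repeated 10 times so that each fold is used exactly once for assessment. The reported result summarizes all 10 runs.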
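To make the fold rotation concrete, here is a small sketch in plain Python. It is an illustration under stated assumptions, not how Weka builds its folds: the `k_fold_indices` helper is hypothetical, and it assigns instances to folds by simple interleaving without any shuffling.

```python
def k_fold_indices(n_instances, k=10):
    """Yield (train, test) index lists; each instance lands in exactly one test fold."""
    indices = list(range(n_instances))
    for fold in range(k):
        test = indices[fold::k]  # every k-th instance, offset by the fold number
        held_out = set(test)
        train = [i for i in indices if i not in held_out]
        yield train, test


folds = list(k_fold_indices(150, k=10))

for train, test in folds:
    # With 150 instances and 10 folds: 135 for training, 15 for assessment.
    assert len(train) == 135 and len(test) == 15

# Across all folds, every instance is used for assessment exactly once.
tested = sorted(i for _, test in folds for i in test)
print(len(folds), tested == list(range(150)))  # 10 True
```

Because every instance is held out once, the final accuracy is computed over all 150 instances rather than over a single 15-instance test set.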

The ZeroR algorithm is important, but not very interesting.

Click the “Choose” button in the “Classifier” section, click on “trees”, and then click on the “J48” algorithm.

This is a Java implementation of the C4.8 algorithm (“J” for Java, 48 for C4.8, hence the J48 name), which is a minor extension of the famous C4.5 algorithm. You can read more about the C4.5 algorithm here.

Click the “Start” button to run the algorithm.

After running the J48 algorithm, you can note the results in the “Classifier output” section.

The algorithm was run with 10-fold cross-validation: this means it was given an opportunity to make a prediction for each instance of the dataset (with different training folds) and the presented result is a summary of those predictions:

Firstly, note the classification accuracy. You can see that the model classified 144 of 150 instances correctly, or 96%, which is far better than the baseline of 33%.

Secondly, look at the confusion matrix. You can see a table of actual classes compared to predicted classes and you can see that there are a total of 6 errors. This table can help to explain the accuracy achieved by the algorithm.
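The link between the confusion matrix and the accuracy figure can be checked by hand. The sketch below uses a hypothetical matrix, not the one Weka prints: its cell values are invented so that the totals match the figures above (150 instances, 6 errors), and the row and column order is an assumption. Rows are actual classes, columns are predicted classes.

```python
classes = ["setosa", "versicolor", "virginica"]

# Hypothetical confusion matrix: matrix[actual][predicted].
matrix = [
    [50, 0, 0],
    [0, 47, 3],
    [0, 3, 47],
]

total = sum(sum(row) for row in matrix)
correct = sum(matrix[i][i] for i in range(len(classes)))  # the diagonal
errors = total - correct
accuracy = correct / total

print(f"{correct}/{total} correct, {errors} errors, {accuracy:.0%}")
# 144/150 correct, 6 errors, 96%

# Off-diagonal cells show which classes are confused with each other.
for i, actual in enumerate(classes):
    for j, predicted in enumerate(classes):
        if i != j and matrix[i][j] > 0:
            print(f"{matrix[i][j]} {actual} instances predicted as {predicted}")
```

Correct predictions sit on the diagonal, so accuracy is the diagonal sum divided by the total, and every off-diagonal cell pinpoints a specific kind of mistake.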