The 2021 McGill AI Learnathon was held virtually on 6th February and brought together over 140 high school, undergraduate, and graduate students passionate about AI. We had a blast organizing the event, but we know that not everyone could attend every presentation, so we’ve summarized each talk below and uploaded the recordings to YouTube. We hope these resources are helpful to those who couldn’t make the event live, and we look forward to seeing you at future events!

The McGill AI Learnathon kicked off with a brilliant keynote talk on Machine Learning and Creativity by Pablo Samuel Castro, a McGill alumnus who currently works at Google Brain. Whilst Dr. Castro’s academic background is in fundamental reinforcement learning (RL), he is also a passionate musician.

Dr. Castro presented music as an inherently structured art form, citing the circle of fifths, tones, and sonatas as examples of this structure. This structure, he suggested, is ripe for extraction by machine learning (ML) models. He played an example based on Bach’s counterpoint: given a melody, the rules of counterpoint supply the harmonisation. These ideas come together in Magenta, a Google Brain project that works at the intersection of art and music, ML, and human creativity and feedback.

Dr. Castro’s initial experiments with music and ML began by applying Transformers to music, producing models capable of two things:

– Conditional Generation: you feed a snippet of music into the model, and it continues it into a longer piece.

– Unconditional Generation: the model produces an original piece of music without being given any input. (He presented some very cool visualisations of models learning from music at this point.)
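To make the distinction concrete, here is a toy Python sketch. It swaps the Transformer for a much simpler first-order Markov chain, a bigram model over note names. The melodies, note names, and helper functions (`train_bigram`, `generate`) are hypothetical, and none of this is Magenta code. The only difference between the two modes is whether generation starts from a user-supplied primer or from a sampled first note.

```python
import random
from collections import defaultdict

def train_bigram(melodies):
    """Record which note follows which, plus the notes melodies start on.

    Hypothetical toy stand-in for a trained sequence model.
    """
    transitions = defaultdict(list)
    starts = []
    for melody in melodies:
        starts.append(melody[0])
        for a, b in zip(melody, melody[1:]):
            transitions[a].append(b)
    return transitions, starts

def generate(transitions, starts, length, primer=None, rng=random):
    """Sample a melody of `length` notes, one note at a time.

    Conditional generation: continue from `primer`.
    Unconditional generation: sample the first note from `starts`.
    """
    seq = list(primer) if primer else [rng.choice(starts)]
    # Every note seen in training; used when the current note has no recorded successor.
    vocab = sorted(set(starts) | {n for nxt in transitions.values() for n in nxt})
    while len(seq) < length:
        options = transitions.get(seq[-1]) or vocab
        seq.append(rng.choice(options))
    return seq

# Hypothetical training melodies.
melodies = [["C", "D", "E", "C"], ["E", "F", "G"], ["G", "A", "G", "F", "E", "C"]]
transitions, starts = train_bigram(melodies)
rng = random.Random(0)

conditional = generate(transitions, starts, 8, primer=["E", "F"], rng=rng)
unconditional = generate(transitions, starts, 8, rng=rng)
print(conditional)    # begins with the primer E, F
print(unconditional)  # begins with a sampled start note
```

A real music model conditions on far more context than the single previous note. However, the interface is the same: a primer is optional, and without one the model has to invent the opening itself.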

From here, LyricAI was created. Used in collaboration with David Usher, a British-Canadian musician, the model helped rewrite a version of the song ‘Sparkle and Shine’. Castro’s ultimate goal is to build machine learning tools that support artists, so that artists and ML work together.

Castro stresses that musicians should always be the ones in charge: machine learning models should only act as tools that support the artist. In this way, he hopes to make music more accessible to people from all sorts of backgrounds.

David Rolnick is an Assistant Professor in the School of Computer Science at McGill and at Mila. In his talk, he discusses how technology and machine learning can be used to tackle the problem of climate change, whose effects include flooding, extreme heat, fires, and droughts, to name a few.

He defines two key terms in the field:

1. Mitigation: Reducing greenhouse gas emissions

2. Adaptation: Resilience to consequences of climate change

Rolnick highlights that although technology is less important than many other levers, such as policy, it provides tools that support policy and other necessary climate action. Technology’s role can be broken down into five overarching themes:

1. Distilling observed raw data into usable information

2. Improving efficiency using ML

3. Forecasting — proactive planning and scheduling

4. Accelerated simulations — fast physical models

5. Accelerated experimentation — faster creation of next-gen climate tech

In each of these cases, machine learning is only one part of the strategy: many tools are needed to fight climate change, and many areas don’t need ML at all. Sometimes simple methods work best (impactful applications are often unglamorous). Perhaps the most important thing for computer scientists and machine learning engineers to keep in mind is this: work with domain experts from the start of a project. Collaboration with people in the relevant domains is vital, and you must consider the different stakeholders and interests involved in your work.

Read more about Tackling Climate Change with Machine Learning and check out climatechange.ai to get involved!

Sandi Mak is a mathematics teacher at Vanier College. Her talk covers the mathematics and algorithms behind machine learning, with a specific focus on the Transformer, a neural network architecture widely used in natural language processing. She gives a detailed, step-by-step explanation of how the Transformer works on tasks such as dialogue completion. She walks through its encoder-decoder architecture and deconstructs the embedding and self-attention layers it contains in an intuitive and accessible way. Finally, she shows how the Transformer can be applied to movie dialogue to obtain interesting results.
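For readers who want to see the core computation, here is a minimal NumPy sketch of single-head scaled dot-product self-attention, softmax(Q K^T / sqrt(d_k)) V. This is the operation inside the Transformer’s self-attention layers. It is not code from the talk. The token embeddings and projection matrices are random placeholders rather than trained weights, and multi-head attention, masking, and positional encodings are left out.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (seq_len, d_model) token embeddings.
    W_q, W_k, W_v: (d_model, d_k) projection matrices.
    Returns (output, weights) with shapes (seq_len, d_k) and (seq_len, seq_len).
    """
    Q = X @ W_q  # queries: what each token is looking for
    K = X @ W_k  # keys: what each token offers
    V = X @ W_v  # values: the content that gets mixed
    d_k = Q.shape[-1]
    # Row i scores how strongly token i attends to every token in the sequence.
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    # Each output row is a weighted average of the value vectors.
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))  # placeholder for 4 embedded tokens
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape)             # (4, 8)
print(weights.sum(axis=1))   # every row of attention weights sums to (approximately) 1
```

Dividing by sqrt(d_k) keeps the dot products from growing with the vector dimension. Without that scaling, the softmax would saturate and give vanishingly small gradients.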

Professor AJung Moon researches human-robot interaction, roboethics, and AI ethics at McGill. Her talk is about building a resilient technological society with interactive robots.

Professor Moon began with a discussion of how technology disrupts our society. While engineers believe in the power of technology to improve the world, reports such as the 2020 Edelman Trust Barometer indicate that the majority of people are unconvinced of the positive impact of AI. In Canada, for example, 29% of those surveyed think the effect of AI will be mainly negative, while 27% think the effect will be a mix of negative and positive.

Given these statistics, we as engineers need to keep in mind that our markets might not trust the technology we’re building. Moreover, these concerns might be valid: we have already seen many ways in which technologies conflict with, or disrupt, our moral values.

Professor Moon breaks down the types of technological ethical issues into four categories:

1. Investment & Application: Where should we deploy the machines? Do we want robots in the military?

2. Design & Deployment: Once we’ve decided to build machines for X, what decisions should we make?

3. Governance: How should machines be governed in our society?

4. Philosophy & Sci-Fi: Robot rights? The singularity? AI safety?

In the rest of her talk, Professor Moon focuses on the second category, Design & Deployment, and outlines some of her research into how we might design and deploy robots that create more resilient technological societies.

KC Tsiolis is a third-year Mathematics and Computer Science undergraduate student at McGill. He is an Academic Lecturer at the McGill AI Society, where he co-teaches the semesterly machine learning bootcamp. He also has research experience in natural language processing (NLP), the topic of his workshop. He gives an overview of the field, covering its key concepts, applications, open research questions, and how NLP systems are implemented. The second half of the workshop is a live coding session in which a simple NLP model for text classification is implemented using the scikit-learn Python library.
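The workshop’s live-coding session isn’t reproduced here. As a rough guide, a minimal scikit-learn text classifier often looks something like the sketch below. A `TfidfVectorizer` turns each document into weighted word-count features, and a `LogisticRegression` model learns a linear classifier over them. The six-sentence sentiment dataset is made up purely for illustration. Pairing TF-IDF with logistic regression is an assumption here, not necessarily the model built in the workshop.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Tiny hypothetical dataset: 1 = positive review, 0 = negative review.
texts = [
    "a wonderful, moving film",
    "great acting and a great story",
    "i loved every minute",
    "a dull and boring mess",
    "terrible plot and awful acting",
    "i hated every minute",
]
labels = [1, 1, 1, 0, 0, 0]

# TF-IDF converts each document into a sparse vector of weighted word counts;
# logistic regression then learns one weight per word plus an intercept.
model = make_pipeline(TfidfVectorizer(), LogisticRegression())
model.fit(texts, labels)

# Classify unseen sentences (words never seen in training are simply ignored).
preds = model.predict(["what a great film", "boring and awful"])
print(preds)
```

Wrapping both steps in a pipeline means the vectorizer is fitted only on training text and is reused unchanged at prediction time. This avoids a common source of train/test leakage.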

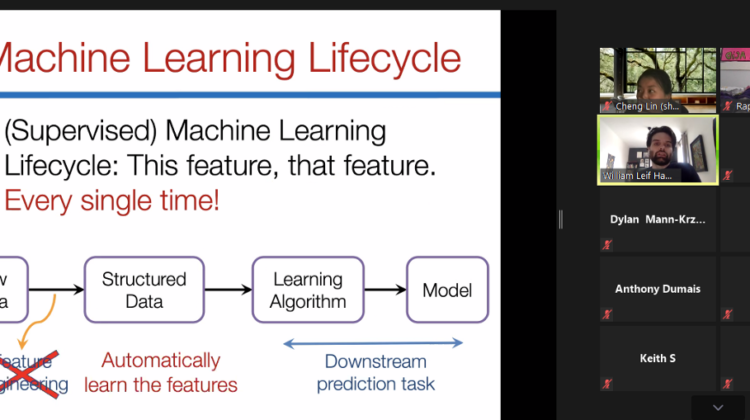

William Hamilton is a professor at McGill whose research currently focuses on graph neural networks. In his talk, Professor Hamilton provides an introduction to graph representation learning, explaining the field’s motivation, why the problem is difficult, and some basic graph representation learning algorithms. Specifically, he walks us through “shallow” node embeddings built on different node similarity metrics, including random-walk embeddings and node2vec.
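As a rough illustration of the random-walk idea, here is a Python sketch of node2vec-style biased walk sampling on a small hypothetical graph: two triangles joined by a single edge. At each step, the return parameter `p` and in-out parameter `q` reweight candidate nodes according to their distance from the previous node. A full node2vec pipeline would then train a skip-gram (word2vec) model on these walks to learn embeddings. Here that step is replaced by counting which nodes co-occur within a small window, which is the signal skip-gram learns from. The helper names and parameter values are illustrative assumptions, not code from the talk.

```python
import random
from collections import Counter

def node2vec_walk(adj, start, length, p=1.0, q=1.0, rng=random):
    """Sample one node2vec-style biased random walk on an unweighted graph.

    adj: dict mapping each node to a set of neighbours.
    p: return parameter (small p makes stepping back to the previous node likelier).
    q: in-out parameter (small q favours moving outward, DFS-like).
    """
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        neighbours = sorted(adj[cur])
        if not neighbours:
            break
        if len(walk) == 1:
            # The first step has no previous node, so it is uniform.
            walk.append(rng.choice(neighbours))
            continue
        prev = walk[-2]
        weights = []
        for x in neighbours:
            if x == prev:
                weights.append(1.0 / p)  # distance 0 from prev: return
            elif x in adj[prev]:
                weights.append(1.0)      # distance 1 from prev: stay local
            else:
                weights.append(1.0 / q)  # distance 2 from prev: move outward
        walk.append(rng.choices(neighbours, weights=weights, k=1)[0])
    return walk

def cooccurrence(walks, window=2):
    """Count ordered node pairs appearing within `window` steps of each other."""
    counts = Counter()
    for walk in walks:
        for i, u in enumerate(walk):
            for j in range(max(0, i - window), min(len(walk), i + window + 1)):
                if j != i:
                    counts[(u, walk[j])] += 1
    return counts

# Hypothetical graph: triangle {0, 1, 2} and triangle {3, 4, 5}, bridged by edge 2-3.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2, 4, 5}, 4: {3, 5}, 5: {3, 4}}

rng = random.Random(42)
walks = [node2vec_walk(adj, n, length=10, p=1.0, q=0.5, rng=rng) for n in adj for _ in range(20)]
counts = cooccurrence(walks)
# Nodes 0 and 5 are three hops apart, so with window=2 they never co-occur.
print(counts[(0, 1)], counts[(0, 5)])
```

Lowering `q` pushes walks outward into more distant parts of the graph, while raising it keeps them near their starting neighbourhood (more BFS-like). This is how node2vec trades off the two notions of node similarity.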

The AI Experiences Panel brought together three current McGill undergraduates and one recent McGill graduate to discuss their journeys through AI research and industry. Topics ranged from how to enter the field of AI to what research is like and strategies for applying to positions. While no recording is available, here are some of our panelists’ recommendations:

Learning about AI

- Online resources like Coursera and Udemy are great! Textbooks are also fantastic.

- Once you have foundational knowledge, you can begin reading and reimplementing state-of-the-art papers.

- If you’re just getting started with coding an ML project, working from a well-maintained open-source library or project (Keras, TensorFlow, Kaggle notebooks) might be more digestible than reimplementing a paper from scratch.

Securing Research

- Find professors who do work you are really interested in and reach out with an email. All panelists agreed you shouldn’t be shy!

- Even if you haven’t done research before, you can demonstrate relevant experience through projects and coursework.

Securing Internships

- Apply to as many internships as possible.

- If you don’t have work experience, list relevant side projects.

- When pursuing side projects, dig into all the nitty-gritty details of your code and implementation. In interviews, you want to show that you didn’t just copy-paste the code from a Medium article, but that you really understand all of the engineering you had to do.

Make sure to check out https://mcgillai.com for more information about our upcoming events!

Writing credits for this piece also go to KC Tsiolis and Cheng Lin.