Andrew Ng, a machine learning educator and researcher, said that artificial intelligence (AI) is the new electricity. Like the dot-com stock market crash of 2000, there will probably be an AI stock market crash in the near future, as companies are slapping the “AI” tag on their product lines. We could say companies are “AI”-ing their way out of products. Samsung, in its 30-minute CES 2021 keynote, used the terms AI, machine learning and deep learning at least 25 times. Nevertheless, these technologies are advancing rapidly, so we need to keep abreast of this field and be aware of the threats it poses.

Before the AI revolution, computers were explicitly told what to do by software programs, which limited their functionality. AI helped computers to “think” for themselves. Here we feed real-world data into the system along with target labels, and then let the model establish a relation between the input data and the target label. The model is called a neural network. Just as neurons in our brain communicate with each other in a hierarchical order, neural networks learn from the data through layers of neurons stacked upon each other. So a good model needs pertinent data, in both quality and quantity.
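To make "learning a relation from labeled data" a bit more concrete, here is a minimal sketch in Python. It is an illustration under my own assumptions, not how real systems are built: it trains a single artificial neuron (a perceptron) rather than a full layered network, and the toy data set (the logical AND of two inputs) and the names `predict` and `train` are made up for this example.

```python
# Labeled examples: each input pair comes with its target label.
# Here the (assumed) task is logical AND: the label is 1 only for (1, 1).
data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def predict(weights, bias, x):
    """A single neuron: weighted sum of the inputs, then a threshold."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0

def train(data, epochs=20, lr=1):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

weights, bias = train(data)
predictions = [predict(weights, bias, x) for x, _ in data]
print(predictions)  # the model's answer for each of the four inputs
```

Nobody wrote an "if both inputs are 1" rule here. The neuron arrives at its weights only by being corrected against the target labels, which is the point of the paragraph above. Real neural networks stack many such neurons in layers and use gradient-based training instead of this simple rule.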

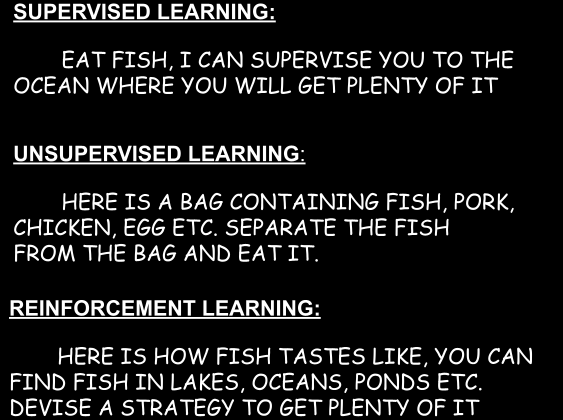

In AI lingo this is called supervised learning: the target label acts as a supervisor (teacher) that guides our model (student) to learn and reach its target. There are other types of learning, such as unsupervised learning, where the model has no target label and instead tries to group similar input data. So here the teacher doesn’t help, like in online classes. Another type is reinforcement learning, where the model is motivated by rewards and its primary purpose is to come up with strategies that maximize its reward.
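The "no teacher" idea behind unsupervised learning can be sketched in a few lines of Python as well. This is a rough illustration under my own assumptions: the numbers are invented, the helper name `two_means` is hypothetical, and the method is a bare-bones one-dimensional version of k-means clustering with two groups.

```python
def two_means(points, iterations=10):
    """Split 1-D points into two groups without using any labels."""
    # Assumption: start the two group centers at the smallest and largest value.
    centers = [min(points), max(points)]
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        for p in points:
            # Assign each point to whichever center is closer.
            nearest = 0 if abs(p - centers[0]) <= abs(p - centers[1]) else 1
            groups[nearest].append(p)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return groups

# Made-up measurements with no target labels attached.
values = [1.0, 1.2, 0.8, 8.0, 8.3, 7.9]
groups = two_means(values)
print(groups)
```

The model is never told which value belongs where. It only notices that some values sit close together and groups them, which is what "tries to group similar input data" means in practice.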

Note: I use AI, neural networks and sometimes computers interchangeably. They are not the same thing, but writing AI all the time felt repetitive.

Now computers are on par with human accuracy on some tasks, and in some cases even exceed the average human. So, WHAT IS YOUR BIGGEST FEAR WITH AI? Off the top of your head, it would be job losses or the Terminator movies becoming real. Let’s discuss these two common fears.

As computers become better than humans at more things, corporations, which are profit-maximizing entities, will find AI more lucrative, and people could lose their jobs. Corporations need not pay health benefits for an AI, and it can work at a larger scale without tiring and without washroom breaks. Some would argue that we can offload all the mundane tasks to AI so that humans can work on the big-picture stuff, like travelling to Mars or starting a business. But that is certainly not for everyone, and we will have job losses in the future. At a personal level, the answer is to keep learning new and relevant skills instead of stagnating. Governments are also looking at alternatives like UBI (universal basic income), where every citizen would be given a fixed amount at the end of each month to help pay the bills, learn new skills or start a business. It would be direct financial help from the government to cope with the changes and job losses ahead. Job losses are coming, but they are not a primary concern for now. We are at least 10–15 years away from this becoming real, and we will still need people to work on these systems for maintenance and improvements. So it will be a gradual process, and there will probably be government interventions to save jobs and maintain a quota of human workers in companies, since people have the power to vote and AI does not (I’m not so sure about this anymore, they can manipulate results).

The other fear is that AI will achieve general intelligence and dominate the planet as the superior being. In 2005, researchers concluded that we share about 99% of our DNA with chimpanzees. Just a 1% difference puts a cage between us and them, and makes us the entitled, superior beings on this planet. So if AI achieves general intelligence, we may get a Terminator-style dystopian universe. But today’s AI is far from human-level intelligence. No doubt a self-driving car could be a better driver than a human, since humans drive with impatience, intuition and insurance (the 3 I’s of driving). But computers are getting good at specific tasks only, for now. General intelligence is technologically out of reach, and we are at least 56 years away from it (you were cyberpunked!!).

So these common fears are far-fetched for now, and neither is an imminent threat. What if I told you that the real threat of AI has already started and is slowly getting out of our hands? Let me elucidate in the second part of this blog.