- Create an empty directory named SpeechTextApp, then open a terminal in the project root and initialize a Node project with the command below.

**Note:** Node.js must be installed on your system.

npm init

- Fill out the information requested to initialize the project.

- Create a file named index.js. This file will hold the main code for our application.

- After initializing the Node app, install the packages required for this project:

npm install microsoft-cognitiveservices-speech-sdk@1.14.0 --save

npm install express@4.17.1 --save

npm install request@2.88.2 --save

npm install ejs@3.1.5 --save

npm install consolidate@0.16.0 --save

npm install https-proxy-agent@3.0.0 --save

npm install multer@1.4.2 --save

npm install mustache@4.0.1 --save

npm install readline@1.3.0 --save

npm install wavefile@11.0.0 --save

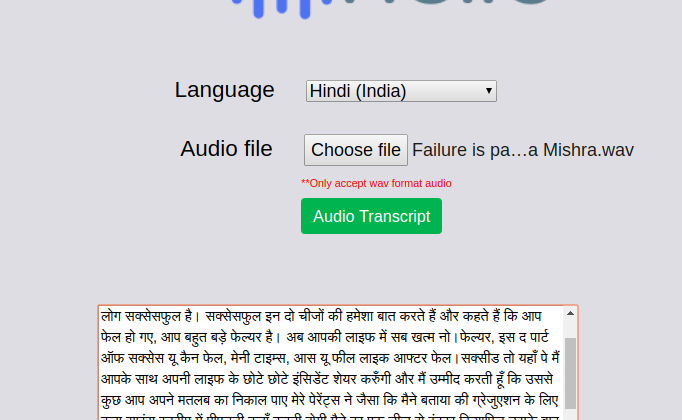

We start with the HTML views for a single page. Create a new folder named "views" in the root of the project directory, then create the "index.html" and "404.html" files in it and copy in the following:

Next, add a "public" folder in the root directory. Put the app stylesheet, named "project_styles.css", in it and download the Bootstrap file from GitHub.

Then add "js" and "uploads" folders inside the public directory, and place the minified jQuery file inside the js folder.
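The folder layout described above can also be created with a short Node script rather than by hand. This is only a sketch: the helper name scaffoldProject is hypothetical, and the folder names are the ones used in the steps above.

```javascript
const fs = require("fs");
const path = require("path");

// Folder names from the steps above, relative to the project root.
const PROJECT_DIRS = ["views", "public", "public/js", "public/uploads"];

// Hypothetical helper: creates each folder under rootDir and returns the full paths.
function scaffoldProject(rootDir) {
  const created = [];
  for (const dir of PROJECT_DIRS) {
    const fullPath = path.join(rootDir, dir);
    // recursive: true creates missing parents and does not fail if the folder exists
    fs.mkdirSync(fullPath, { recursive: true });
    created.push(fullPath);
  }
  return created;
}

// Example call from a script placed in the project root:
// scaffoldProject(__dirname);
```

Because the call is safe to repeat, the script can be re-run after cloning the project without touching files that already exist.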

Go to the root directory of the project, open the "index.js" file (create it if you have not done so already), and follow the steps below. This file is the heart and soul of our web app.

Import the required modules:-

var express = require("express");

var bodyParser = require('body-parser');

var multer = require('multer');

var path = require('path');

var fs = require("fs");

var engine = require('consolidate');

const WaveFile = require('wavefile').WaveFile;

var sdk = require("microsoft-cognitiveservices-speech-sdk");

const cons = require("consolidate");

Define the App Variables & Configuration:-

var app = express();

var router = express.Router();

const port = process.env.PORT || 8081;

app.use(bodyParser.json());

app.use(express.static('public'));

app.engine('html', require('ejs').renderFile);

app.set('view engine', 'html');

app.set('views', __dirname + '/views');

var path1 = __dirname + '/views/';

router.use(function (req, res, next) {

next();

});

app.use("/", router);

Add the Cognitive Service Key and Variable:-

Add the Cognitive Services "subscriptionKey" and "serviceRegion" values that you saved in the initial step.

var subscriptionKey = "*******************************";

var serviceRegion = "*******";

var selectedlang ;
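Hard-coding the key is fine for a first run, but it is easy to leak when the file is shared. One possible alternative, sketched below, reads both values from environment variables. The variable names SPEECH_KEY and SPEECH_REGION and the helper loadSpeechSettings are assumptions made for this example, not something the original setup defines.

```javascript
// Hypothetical helper: reads the Speech settings from an environment-like object.
// SPEECH_KEY and SPEECH_REGION are assumed names; use whatever your deployment defines.
function loadSpeechSettings(env) {
  const subscriptionKey = (env.SPEECH_KEY || "").trim();
  const serviceRegion = (env.SPEECH_REGION || "").trim();
  if (!subscriptionKey || !serviceRegion) {
    // Fail at startup instead of on the first upload
    throw new Error("Set SPEECH_KEY and SPEECH_REGION before starting the app.");
  }
  return { subscriptionKey, serviceRegion };
}

// Possible use in index.js, replacing the two hard-coded strings:
// var settings = loadSpeechSettings(process.env);
// var subscriptionKey = settings.subscriptionKey;
// var serviceRegion = settings.serviceRegion;
```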

Declare the storage path:-

var storage = multer.diskStorage({

destination: function(req, file, callback) {

callback(null, './public/uploads');

},

filename: function(req, file, callback) {

callback(null,"audio.wav");

}});

var upload = multer({storage: storage});
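The storage above saves whatever is uploaded as audio.wav, whether or not it is a WAV file. multer also accepts a fileFilter option, so one way to guard the upload is a small filter like the sketch below. The function name wavOnlyFilter is hypothetical, and checking the file extension is a simplifying assumption; it does not inspect the file contents.

```javascript
const path = require("path");

// Hypothetical filter: accept only files whose original name ends in .wav.
// multer calls it as (req, file, callback); callback(null, false) skips the file.
function wavOnlyFilter(req, file, callback) {
  const extension = path.extname(file.originalname || "").toLowerCase();
  callback(null, extension === ".wav");
}

// Assumed wiring in index.js:
// var upload = multer({ storage: storage, fileFilter: wavOnlyFilter });
```

When a file is skipped, multer leaves req.file undefined, so the POST route should check req.file before it reads audio.wav from disk.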

Define the Routes:-

//// Get Method

app.get("/", function (req, res) {

res.sendFile(path1 + "index.html");

});

//// Post method

app.post('/',upload.single('userFile'), function(req, res) {

var htmlBody = req.body;

selectedlang =htmlBody.langsel;

let wav = new WaveFile(fs.readFileSync("./public/uploads/audio.wav"));

wav.toSampleRate(16000); // resample the audio to 16000 Hz

fs.writeFileSync("./public/uploads/16000Hz-file.wav", wav.toBuffer());

var filename ="./public/uploads/16000Hz-file.wav"; // 16000 Hz, Mono

var pushStream = sdk.AudioInputStream.createPushStream();

fs.createReadStream(filename).on('data', function(arrayBuffer) {

pushStream.write(arrayBuffer.slice());

}).on('end', function() {

pushStream.close();

});

var audioConfig = sdk.AudioConfig.fromStreamInput(pushStream);

var speechConfig = sdk.SpeechConfig.fromSubscription(subscriptionKey, serviceRegion);

speechConfig.enableDictation();

speechConfig.speechRecognitionLanguage = selectedlang;

var recognizer = new sdk.SpeechRecognizer(speechConfig, audioConfig);

var processmsg = ".";

recognizer.recognizing = (s, e) => {

processmsg = processmsg + ".";

console.log("processing ."+processmsg);

};

var outputmsg="" ;

recognizer.recognized = (s, e) => {

if (e.result.reason == sdk.ResultReason.RecognizedSpeech) {

// append only recognized text, so NoMatch results add nothing to the output

outputmsg = outputmsg + e.result.text;

console.log(`RECOGNIZED: Text=${e.result.text}`);

}

else if (e.result.reason == sdk.ResultReason.NoMatch) {

console.log("NOMATCH: Speech could not be recognized.");

}

};

recognizer.sessionStopped = (s, e) => {

console.log("\n Session stopped event.");

console.log("Final Output : ",outputmsg);

fs.writeFileSync("./public/uploads/output.docx", outputmsg);

console.log("write file");

res.render(__dirname + "/views/index.html", {data: {status:"completed", contents: outputmsg}});

console.log("Send status into html");

recognizer.stopContinuousRecognitionAsync();

};

recognizer.startContinuousRecognitionAsync();

});
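The route above responds only when sessionStopped fires. If recognition is canceled with an error (for example, a wrong key or region), it helps to report that explicitly and to make sure the response is sent only once. The sketch below gathers the event wiring into one helper that reports through a single callback. The name collectTranscript and the shape of the reasons argument are assumptions for this example; the events and methods it touches (recognized, canceled, sessionStopped, startContinuousRecognitionAsync, stopContinuousRecognitionAsync) are the SDK's own.

```javascript
// Hypothetical helper. recognizer is an sdk.SpeechRecognizer (or an object shaped like one).
// reasons is expected to be:
//   { recognizedSpeech: sdk.ResultReason.RecognizedSpeech,
//     cancelError: sdk.CancellationReason.Error }
// onDone(error, text) is called exactly once.
function collectTranscript(recognizer, reasons, onDone) {
  const parts = [];
  let finished = false;

  function finish(error) {
    if (finished) return; // sessionStopped can follow canceled; report only once
    finished = true;
    recognizer.stopContinuousRecognitionAsync();
    onDone(error, parts.join(" "));
  }

  recognizer.recognized = function (sender, event) {
    // Keep only results that actually carry recognized text
    if (event.result.reason === reasons.recognizedSpeech && event.result.text) {
      parts.push(event.result.text);
    }
  };

  recognizer.canceled = function (sender, event) {
    // Other cancellations (such as end of stream) are left to sessionStopped
    if (event.reason === reasons.cancelError) {
      finish(new Error(event.errorDetails || "Recognition was canceled."));
    }
  };

  recognizer.sessionStopped = function () {
    finish(null);
  };

  recognizer.startContinuousRecognitionAsync();
}

// Possible use inside the POST route:
// collectTranscript(recognizer,
//   { recognizedSpeech: sdk.ResultReason.RecognizedSpeech,
//     cancelError: sdk.CancellationReason.Error },
//   function (error, text) {
//     res.render(__dirname + "/views/index.html",
//       { data: { status: error ? "failed" : "completed", contents: text } });
//   });
```

Unlike the route above, this sketch joins the recognized phrases with a space, which keeps sentences from running together in the output.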

For more detail about the Speech to Text SDK, follow the links below:

Server Activation:-

Finally, we register the 404 fallback and start the server:

app.use("*", function (req, res) {

res.sendFile(path1 + "404.html");

});

app.listen(port, function () {

console.log(`Example app listening on http://localhost:${port}`);

});

The full code of "index.js" is as follows:

The app is now ready to run.

Open a terminal in the root of the project directory and run the command below:

node index.js