The same word can have different meanings in different contexts. For example:

I arrived at the bank after crossing the _____

We cannot know whether “bank” here refers to a riverbank or a financial institution without knowing the last word of the sentence.

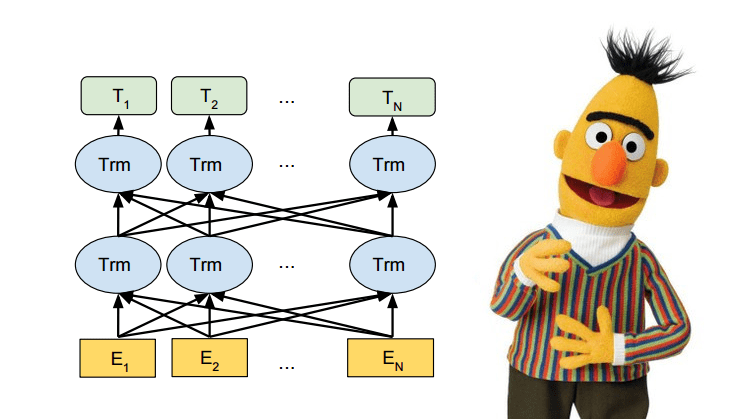

BERT learns a word’s context from all of its surrounding words, on both sides, just like humans! It does this using self-attention. Let us understand it with an example.

Consider a sentence such as “The animal didn’t cross the street because it was too tired.” What does “it” in this sentence refer to: the street or the animal? It’s a simple question for a human, but not as simple for an algorithm.

Self-attention solves this problem. It allows the model to look at the other words in the sentence to get a better understanding of “it”.

Relating each word in a sentence to every other word in the same sentence is called self-attention. Let’s see how self-attention is calculated with an example.

Consider this phrase — “Action gets results”.

- For each word in the phrase, we create three vectors: a query vector, a key vector, and a value vector. They are computed by multiplying the word’s embedding by three weight matrices, and it is these matrices that are learned and updated during training. Their roles will become clearer by the end of this section.

- The scores for the first word, “Action”, are calculated by taking the dot product of its query vector (q1) with the key vectors (k1, k2, k3) of all the words, including its own.

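- Then, these scores are divided by 8, the square root of the key-vector dimension (64 in the original Transformer and in each attention head of BERT-Base). This keeps the dot products from growing too large, which would push the softmax into regions with very small gradients.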

- Next, the scaled scores are normalized with the softmax function, so that they are all positive and sum to 1.

- Finally, each value vector (v1, v2, v3) is multiplied by its normalized score, and the weighted vectors are summed to produce the output vector z1. This vector is then passed on as input to the feed-forward network.
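The steps for “Action” can be condensed into a single formula. Here d_k is the key-vector dimension (64, so √d_k = 8), and the symbols s_{1j} (scaled score) and α_{1j} (softmax weight) are labels introduced only for this summary:

$$
s_{1j} = \frac{q_1 \cdot k_j}{\sqrt{d_k}}, \qquad
\alpha_{1j} = \frac{\exp(s_{1j})}{\sum_{m=1}^{3} \exp(s_{1m})}, \qquad
z_1 = \sum_{j=1}^{3} \alpha_{1j}\, v_j
$$

Because the weights α_{11}, α_{12}, α_{13} are positive and sum to 1, z1 is a weighted average of v1, v2 and v3.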

So, z1 is the self-attention vector for the first word of the input sequence “Action gets results”.
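The calculation of z1 can be sketched in NumPy, following the steps one at a time. This is a minimal illustration, not BERT’s actual code: the embeddings `X` and the projection matrices `W_q`, `W_k`, `W_v` are hypothetical random stand-ins for trained values, and the sizes (768-dimensional embeddings, 64-dimensional keys) are assumed to match one attention head of BERT-Base so that √d_k = 8 as in the text.

```python
import numpy as np

# Assumed sizes: 768-d embeddings, 64-d query/key/value vectors (sqrt(64) = 8).
d_model, d_k = 768, 64
words = ["Action", "gets", "results"]

rng = np.random.default_rng(0)
# Hypothetical stand-ins for the word embeddings and the learned weight matrices.
X = rng.normal(size=(len(words), d_model))
W_q = rng.normal(size=(d_model, d_k)) / np.sqrt(d_model)
W_k = rng.normal(size=(d_model, d_k)) / np.sqrt(d_model)
W_v = rng.normal(size=(d_model, d_k)) / np.sqrt(d_model)

# One query, key and value vector per word (rows q1..q3, k1..k3, v1..v3).
q = X @ W_q
k = X @ W_k
v = X @ W_v

# Scores for "Action": dot product of q1 with every key, including k1.
scores = np.array([q[0] @ k[j] for j in range(len(words))])

# Divide by sqrt(d_k) = 8.
scaled = scores / np.sqrt(d_k)

# Softmax; subtracting the max first avoids overflow in exp.
exp = np.exp(scaled - scaled.max())
weights = exp / exp.sum()

# Multiply each value vector by its weight and sum the results.
z1 = sum(weights[j] * v[j] for j in range(len(words)))

print("attention weights for 'Action':", np.round(weights, 3))
print("z1 has", z1.shape[0], "dimensions")
```

With these random inputs the raw dot products have a spread of roughly 8, and dividing by √d_k brings them back to a spread of about 1, which is exactly the situation the scaling step is meant to handle.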

That’s it: attention is just a fancy name for a weighted average of the value vectors!
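The weighted-average view extends to every word at once. Stacking the query, key and value vectors as rows of matrices Q, K and V gives Z = softmax(QKᵀ / √d_k) V, where the softmax is applied row by row and row i of Z is z_i. The sketch below assumes NumPy; the function name `self_attention` and the random inputs are hypothetical, used only for illustration.

```python
import numpy as np


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention; returns (Z, weights)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    # scores[i, j] = q_i . k_j / sqrt(d_k): every word against every word.
    scores = Q @ K.T / np.sqrt(d_k)
    # Row-wise softmax; subtracting each row's max avoids overflow in exp.
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    # Row i of Z is z_i, the weighted average of the value vectors.
    return weights @ V, weights


# Hypothetical stand-ins for three 768-d embeddings ("Action", "gets", "results")
# and for the learned 768 x 64 projection matrices.
rng = np.random.default_rng(0)
X = rng.normal(size=(3, 768))
W_q, W_k, W_v = (rng.normal(size=(768, 64)) / np.sqrt(768) for _ in range(3))

Z, weights = self_attention(X, W_q, W_k, W_v)
V = X @ W_v

print("row sums of the weights:", weights.sum(axis=1))
# Non-negative weights summing to 1 keep every z_i inside the range spanned by
# the value vectors, coordinate by coordinate.
inside = np.all((Z >= V.min(axis=0) - 1e-9) & (Z <= V.max(axis=0) + 1e-9))
print("each z_i lies within the value vectors' range:", inside)
```

Row 0 of `Z` corresponds to z1 for “Action”; the matrix form simply performs the same calculation for all three words in one pass.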