Will Superintelligence cause our extinction or pave the way for a human species evolution?

For the vast majority of us the most important goal is to live a long and healthy life, and prepare a similar life for our children, grandchildren, etc., ad infinitum. The problem is that there is no such infinitum. We take it for granted that, as a species, we will exist forever. Very few of us consider that over 99% of all species, which once existed, are now extinct[1]. How can we be an exception?

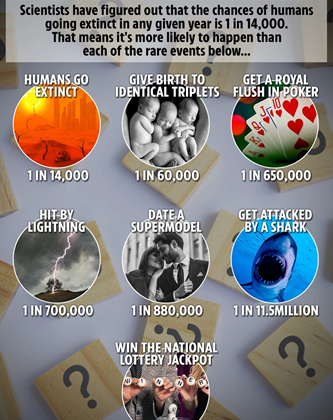

This is the question I asked in my first book ‘Who could save Humanity from Superintelligence?’[2]. The paradox is that the probability of human extinction from natural causes (e.g. an asteroid impact) in this century is less than 1 in 5,000,000, whereas the probability of human extinction from man-made causes by the end of this century is between 1 in 5 and 1 in 2[3]. This means it is at least 1,000,000 times more probable that we will perish because of existential risks that we have created ourselves than because of, for example, an asteroid impact.
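The comparison can be checked with a few lines of arithmetic. The sketch below is only an illustration: it takes the three figures quoted above at face value and treats the "less than 1 in 5,000,000" natural risk as an upper bound, so the resulting ratios are lower bounds. The variable names are hypothetical.

```python
from fractions import Fraction

# Figures quoted in the text; the natural risk is an upper bound ("less than").
natural_risk = Fraction(1, 5_000_000)  # natural causes, this century
man_made_low = Fraction(1, 5)          # lower end of the man-made estimate
man_made_high = Fraction(1, 2)         # upper end of the man-made estimate

# How many times more probable the man-made risk is than the natural one.
ratio_low = man_made_low / natural_risk
ratio_high = man_made_high / natural_risk

print(f"Man-made risk is {int(ratio_low):,} to {int(ratio_high):,} times the natural risk")
```

Under these assumptions the ratio runs from 1,000,000 at the lower end of the man-made estimate to 2,500,000 at the upper end.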

In my recent book, “Becoming a butterfly” [4], I conclude that Humanity must not only manage various existential risks, which it has itself created, but also manage its own evolution. The analogy in the book’s title is not perfect, since caterpillars and butterflies are the same species. However, what is almost identical, is the process of metamorphosis, which humans may have to go through, while evolving into a new species.

What we perhaps do not realize is that we are the only species that is consciously capable of minimizing the risk of its own extinction and of controlling its own evolution in a desired direction. This in itself is amazing. In some way, we have already been doing this for millennia, by controlling our evolution in the cultural and social sphere, which has also strengthened our resilience to extinction. But today we may be able to control our physical evolution into a new species, while retaining our cultural and social pedigree. We will only be successful if we do it gradually, using a process of transformation similar to a caterpillar becoming a butterfly.

What may accelerate our evolution?

Why should we prepare for a physical evolution of our species right now? Who, or what may accelerate our evolution, or even make it unavoidable? My answer is — Superintelligence. You may have heard about Artificial Intelligence but perhaps not about Superintelligence. Before I explain what it is, or how I understand it, let me clear a common misconception. Probably most people still think that Artificial Intelligence is just Information Technology (IT) under a different name. That may be one explanation why potential benefits and threats of AI are so misunderstood. At its very basic level, IT and AI are similar in that they are guided by goals and they use computers to compute the most effective way of achieving that goal. But that’s where similarities end.

The biggest difference between IT and AI is that AI has the capability to self-learn and continuously improve its performance. AI also has, to some degree, the ability to perceive and judge a given situation or task, much as humans do. So, as AI matures, its actions and judgment become more and more ‘human-like’ rather than ‘artificial’, because it analyses and interprets situations in a way similar to humans. IT, on the other hand, follows very specific rules, mostly using binary choices rather than probabilities to achieve an assigned goal.

AI has been applied in earnest for at least 30 years under various other names, such as Expert Systems and, later on, Neural Networks. Its key features are superior performance and the imitation of human cognitive abilities such as problem solving, learning and speech recognition. It can beat the best human capabilities, but usually in one discipline only. Therefore, it is termed “narrow AI”.

AI researchers are unanimous in their view that humans possess the unique abilities to reason logically about complex problems, to understand and manipulate the information provided to them by the environment, and to adapt to their surroundings. This is called general intelligence. AI has made some steps in that direction. For example, in 2011, the IBM Watson computer system competed on the quiz show Jeopardy! against former winners Brad Rutter and Ken Jennings. Watson won the game and the prize of $1 million. Then, in March 2016, Google DeepMind’s AlphaGo, a self-learning program, beat the 18-time world champion Lee Sedol at the game of Go. The success of self-learning has sparked a real revolution in AI.

Progressively, a narrow AI will advance its capabilities until it becomes Artificial General Intelligence (AGI). AGI computer programs, known as ‘agent-based computing,’ are aimed at carrying out the tasks, which are normally accomplished by a single human. Gradually, AGI will become capable of doing the same things that humans do, but faster and better. Ultimately, they will be capable of assuming human characteristics, like kindness, emotion, and abstract thinking.

Some people use the term AGI interchangeably with Superintelligence, the term popularized by Nick Bostrom in his book ‘Superintelligence’. But for me Superintelligence has a slightly different, more nuanced meaning, mainly in terms of scale. AGI is quite often understood as a robot-like ‘agent’, superior to any human in every aspect of intelligence, judgement or action. That assumes that there may be millions of such agents. Of course, this is still possible, and I agree with that. But for me, Superintelligence is something more. I interpret Superintelligence as a single entity with the same superior powers mentioned earlier, but one that is not a robot or a human-like agent. It is an invisible being, an invisible network using trillions of switches and sensors, similar to the billions of neurons in our brain. It is in fact an Artificial Mind. We can see the brain, but we cannot see the mind. The brain of Superintelligence will be those trillions of computers, sensors and humanoid robots, with immense memory and processing power, all networked to support this artificial mind: Superintelligence.

When will we have Superintelligence?

I think many people who accept that Superintelligence will at some stage be far more intelligent and capable than all humans, ask themselves when it may happen. But for me it is far more than just curiosity. Knowing when that may happen with a good degree of probability is very important indeed for assessing the urgency and priorities of preparing humans for that momentous change in the entire history of mankind.

Four polls of top AI specialists conducted in 2012 and 2013 gave a median estimate (a 50% chance) for the emergence of Superintelligence of between 2040 and 2050[5]. In May 2017, several AI scientists from the Future of Humanity Institute, Oxford University and Yale University published a report, “When Will AI Exceed Human Performance? Evidence from AI Experts”. It reviewed the opinions of 352 AI experts. Overall, those experts believe there is a 50% chance that Superintelligence (AGI) will occur by 2060[6].

Independently, Ray Kurzweil, probably the best-known futurist and forecaster, about 80% of whose 147 predictions made in the 1990s proved correct, claims that Superintelligence will emerge earlier, by 2045[7]. But even more interesting is the fact that Kurzweil’s predictions have been quite steady over the last 20 years, while other experts’ opinions have changed significantly. Here are some examples:

· In the 1990s Kurzweil predicted that AI would achieve human-level intelligence in 2029 and Superintelligence in 2045[7], whereas most AI professionals believed it would happen in the 22nd century at the earliest. So, the gap between Kurzweil’s forecast and that of AI experts was more than 50 years.

· In the 2000s, AI experts’ predictions indicated that AGI would most likely be achieved by about 2080, but Kurzweil still maintained 2045 as the most likely date. The gap was 35 years.

· Recently, as in the above Report, 50% of 352 AI experts predicted Superintelligence (AGI) would be most likely to emerge by 2060. In the most recent study of 2021, the majority of 995 AI researchers also think that Superintelligence will be delivered by 2060[8]. Since Kurzweil still stands by his prediction of 2045, the gap has narrowed to about 15 years.

Additionally, human intelligence will not improve significantly, whereas AI’s capabilities will keep improving, doubling roughly every 18 months in line with Moore’s law. Therefore, I would predict the emergence of Superintelligence by about 2050. We may not know exactly when it will happen, but we can already see the trend.
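To get a feel for what a doubling every 18 months means over several decades, here is a minimal sketch. It assumes, as the paragraph above does, that AI capability tracks a Moore’s-law-style doubling; the function name, the 18-month default and the 2021 starting year are illustrative assumptions, not measured values.

```python
def capability_multiplier(years: float, doubling_months: float = 18.0) -> float:
    """Growth factor after `years`, assuming one doubling every `doubling_months`."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings


# Assumed baseline: 2021, the year this article was written.
years_to_2050 = 2050 - 2021
print(f"Doublings by 2050: {years_to_2050 * 12 / 18:.1f}")
print(f"Growth factor by 2050: {capability_multiplier(years_to_2050):,.0f}")
```

Under these assumptions there are about 19 doublings between 2021 and 2050, a growth factor in the hundreds of thousands, while human intelligence stays essentially flat over the same period.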

How advanced may AI become by the end of this decade?

Most people, including the politicians who, after all, make decisions on behalf of all of us, think that a fully developed Superintelligence is many decades away, and that by then we will have it under our full control. Unfortunately, this view ignores two facts. The first is that change in most domains is now happening at an almost exponential pace. This means that by 2030, what now takes a year will take no more than a week. The second is that most people underestimate the immense difficulties in controlling a fully developed Superintelligence. Furthermore, even those close to AI development, such as IBM, take a naively optimistic view that we will create a so-called friendly Superintelligence, which will do us no harm[9]. Finally, this view completely ignores the fact that Superintelligence may NOT appear on a given day when the clock strikes midnight. The increase in AI capabilities will not be smooth and linear. Periods of rapid development may be followed by relative stagnation, and this maturing of Superintelligence’s capabilities may last perhaps a few decades. The current decade is the first in which its capabilities will already be so powerful that it may become an existential risk, especially if combined with other threats such as global warming or biological warfare. That is why I call this period the decade of Immature Superintelligence. In this decade, AI may have the general intelligence of an ant but immense destructive powers, which it may apply either erroneously or in a purposefully malicious way.
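The statement that by 2030 a year’s work will take about a week can be translated into an implied doubling time. The sketch below is a rough illustration under two assumptions that are not in the text: steady exponential growth, and a 2021 baseline (the year this article was written). The function and variable names are hypothetical.

```python
import math


def implied_doubling_time(speedup: float, years: float) -> float:
    """Years per doubling needed to reach `speedup` within `years` of steady exponential growth."""
    return years / math.log2(speedup)


speedup = 52.0       # a year's work done in one week
years = 2030 - 2021  # assumed baseline: 2021
doubling_years = implied_doubling_time(speedup, years)
print(f"Implied doubling time: {doubling_years * 12:.0f} months")
```

Under these assumptions, a 52-fold speed-up in nine years corresponds to a doubling roughly every year and a half, which is in the same range as the 18-month Moore’s law figure mentioned earlier.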

In the 2017 research paper mentioned earlier, the 352 researchers were also asked for their view on the earliest arrival of over a dozen AI-based inventions. Among others, they predicted 2024 as the earliest date for machine translation of languages. We achieved that last year, 4 years earlier, in the form of nearly perfect translators such as Google Translate. The same goes for self-driving trucks, envisaged to appear on the roads in 2027: we already have them, 7 years earlier. That does not include the immense progress in quantum computing, which on its own may accelerate the maturing of AI into Superintelligence by many years. This is perhaps the best example of what an exponential pace of change really means.

Moreover, GPT-3 (Generative Pre-trained Transformer 3), which was initially considered merely the most advanced natural language processor, turned out after just a few months to do much more than even its inventors had expected. For example, it can write computer code and even generate pictures from a textual description, which is the first sign of some elementary cognition. So, in my view, the progress of advanced AI may be even faster than most people perceive, including the emergence of Immature Superintelligence, although it is difficult to specify what that would really be.

Top AI scientists such as Stuart Russell[10], and others such as the futurist Ray Kurzweil[7], Stephen Hawking[9] and Elon Musk[10], all point to 2030 as the time by which we will lose control over the (self-)development of AI. Mr Musk is already packing for Mars because he does not believe humans will be able to avoid the total annihilation of their own species.

Finally, this decade is also critical because of global warming. Many scientists expect that if there is no radical decrease in carbon emissions, the world will reach the climate change tipping point by 2030 as well. So, by the end of this decade we may be dealing with two serious civilisational threats at the same time: climate change reaching a tipping point and an Immature Superintelligence being out of human control. I am not alone in reaching this conclusion.

What may be the consequences of Immature Superintelligence for us in this decade?

GPT-3, mentioned earlier, proves how futile is an attempt to create ‘a narrow AI’, which would be superintelligent in just a single domain, and quite unintelligent in all other domains. Many AI researchers have hoped that it will be possible to create such an AI as one of the key methods to control AI’s capabilities, so we would still remain the master of the game. But I do not think we can count on that. Even if we use a dozen different methods (which we should) to ensure our control over AI, we will never be able to completely remove the risk that at some stage Superintelligence may become a foe rather than a friend. Initially, a potential conflict with a maturing AI may not be a catastrophic one. That is why I call the period of developing an advanced AI by 2030, a decade of an Immature Superintelligence, which means its unpredictability may be potentially very dangerous but not an existential threat yet.

There are already some indications of what kinds of problems we may face when trying to control AI. Last December, we heard that Google DeepMind’s AlphaFold achieved absolutely stunning results in predicting how a protein folds. The problem is that those who developed it do not know exactly how the algorithm achieved its goal (the black box problem). We have not yet built a reliable mechanism for AI to explain how it arrives at what it delivers.

In January 2021, an international team of researchers, including scientists from the Center for Humans and Machines at the Max Planck Institute for Human Development, showed that it will be impossible to guarantee that we can develop a friendly Superintelligence[11]. They argue that the master algorithm of such a Superintelligence would be caught in an infinite loop when trying to work out how to achieve its complex goals without harming humans. So, either we continue the development of a sophisticated AI, taking the risk that one day it may become our Master, or AI will only be allowed to do limited tasks below a certain threshold of risk. Additionally, these AI scientists tell us, it will be impossible to know when Superintelligence becomes truly superior to humans in all domains. I believe these examples justify calling the period until 2030 a decade of Immature Superintelligence, to warn politicians of what a serious threat AI can become very soon.

What do we need to control AI development?

There is a high probability that in the next few years a malevolent Immature Superintelligence will trigger some dangerous events, though unlikely to be existential threats to Humanity. They will rather be malicious, process-control events created purposefully by a self-learning robot, or events caused by an erroneous execution of some AI activities. These may include firing off nuclear weapons, releasing bacteria from strictly protected labs, switching off global power networks, bringing countries to war by creating false pretences for an attack, etc.

If such events coincide with some other risks at the same time, such as extreme heat in summer, or extreme cold in winter, then the compound risks can be quite serious for our civilization. The man-made risks to which Humanity has been exposed so far have not been literally existential yet. However, if they had materialized, they would have been truly catastrophic. Immature Superintelligence falls into this category. Therefore, we need a system of mitigating the risk of maturing AI very soon indeed.

What would be the main steps we need to take to minimize the risk of AI?

For our civilisation, this decade will start a period of about 10 types of existential threats, of which the threat of creating an unfriendly AI is the earliest and the most profound in its consequences. In May 2020, I responded to the EU Commission’s enquiry into controlling AI. I proposed to do it in three steps, on the assumption that the whole initiative would be run by the EU, with a prospective conversion at some stage into a global system. I believe that developing Superintelligence will always involve huge benefits, as well as risks, for humans. Therefore, I would not consider these steps a bullet-proof solution. But together they may reduce the risk significantly.

The first step would be to create a single global AI Governance Agency similar to the International Atomic Energy Agency in Vienna. That Agency would have the power to control the hardware of the most advanced AI agents as well as their software, including of course the algorithms. One of the ways to control advanced robots would be to embed special Agency-issued master control chips. They would contain the Do’s and Don’ts for the AI agent based on the Universal Values of Humanity, a controversial subject in its own right. However, my view is that such a set of values must be the backbone of a maturing AI, even if it is not universally accepted right now. The Agency would also control the networks, including those linking satellites, brain implants and similar highly advanced intelligent devices.

The second step is to create a global AI maturing framework. This relates somewhat to the concept proposed by Nick Bostrom in his book ‘Superintelligence’ and is really about nurturing AI as a child. I consider it one of the most promising methods. We have to nurture AI as a child in the real environment among people at home, school and workplace. And this is already happening. Look at examples of robots at care homes, schools and in factories.

But a maturing AI also needs to gain experience of how we judge situations and what our preferences are, rather than rely on black and white choices. The experiences gained by sophisticated AI agents, such as the current Sophia made by Hanson Robotics, would be uploaded daily to the Centre. These experiences would be evaluated at the Centre and used to modify the way the algorithms make decisions, so that they choose what most humans would, in general, consider the preferred option. I emphasize the word ‘preferred’, as this is probably one of the most promising ways of teaching robots to behave like most of us, rather than follow black and white choices. That approach, suggested by Prof. Stuart Russell in his book ‘Human Compatible’, mentioned earlier, makes perhaps a key contribution to the problem of AI control. In some way, such an approach is actually being applied right now by Tesla. Every day, each self-driving Tesla car sends a report about any unexpected situation it faced, and how it dealt with it, to the Tesla Centre, where it is evaluated and forms the basis for a new software update.

The third step involves Transhumans, who would have an implanted advanced Neuralink-type brain chip. That is the chip developed by Elon Musk’s company, Neuralink. I expect this option to be available by about 2025. It would involve selecting top AI developers (e.g. from Google, Amazon, Microsoft, IBM etc.), who would have to be not just superb AI scientists, but also exceptional people in almost every other aspect of their conduct and values. I call them Human Governors. After such top AI developers have given their consent and been approved by the Centre, a chip verified earlier by the Centre would be implanted in their brain (skull). This would gradually enable them to be fused digitally with the most advanced maturing AI system rather than with an individual robot. In this way a maturing Superintelligence would be permanently fused with its Human Governors, and they themselves would be digitally linked via their brain implants. Very soon, they would become the most intelligent humans (transhumans) on the planet, with unimaginable capabilities. It is these people who would ultimately prepare Humanity for coexistence with Superintelligence.

Should this happen, it would have an unimaginable impact on how we govern ourselves, e.g. how we elect such Transhumans, how would they be controlled by elected representatives, e.g. MPs, and how they would be de-selected. However, this may appear to be the most important way, especially if combined with the previous steps, of minimizing the risk of developing an evil Superintelligence.

Is good control over AI sufficient for a smooth transition to co-existence with Superintelligence?

We would also need a World Government run by what I call a Human Federation. This will also be necessary if the world is to be effectively run by Transhumans. Today it is a utopian view. Therefore, what I propose is to implement what may be possible right now, even if it is insufficient. That might mean, for example, creating a de facto World Government by the end of 2030. In my first book ‘Who could save Humanity from Superintelligence?’ I proposed the federated European Union as the best candidate, out of 10 evaluated large states and organizations, for becoming a de facto World Government. Although the UN has had many great achievements over the last 75 years, it is unable to take on the role of a world organization that must be both powerful and quick in making decisions, which are then executed firmly world-wide. This is essential if we are serious about saving our species.

However, it is not even certain that such an organization, created from the federated EU or even NATO, would be able to function properly in such a short time. Unfortunately, we no longer have any time to spare, so we have to do whatever is possible to minimize the risk of delivering an evil AI. Creating a fast-responding World Government is particularly challenging for our civilisation, and I have described the whole process of how it may be achieved in my second book ‘Democracy for a Human Federation’[12]. If we survive this decade of Immature Superintelligence relatively unscathed, I am almost certain that we will have such a World Government and an incredibly bright future for the next generations. If we do not have it by about 2035, it is highly likely, as the former Astronomer Royal Sir Martin Rees says, that our civilisation may perish[13] by the end of this century.

Will the next decade begin the Twilight of Anthropocene?

In the overall concept of avoiding humans’ extinction, the role of the world’s de facto leader, cannot be overemphasized. I believe that the best chance the world has right now is for the future European Federation (EF) to take on that role. I accept, of course, that the European Federation may never come into existence, or it might be created too late. That is a separate subject. But let us assume that the EU becomes a federation in this decade. Gradually, by the process of taking more and more member countries, we should reach the stage when it becomes a Human Federation (HF), and when all the world’s Superpowers lay down their weapons as the whole planet becomes demilitarized. How might it be possible?

First of all, perhaps the best, if not the only, way for the World Government and the Human Federation to become reality is to significantly change how we are governed. Politicians are necessary, but their role has to change. Major national or global decisions cannot be left to politicians. They must be taken by citizens themselves. However, I am not talking about chaotic, quasi-revolutionary movements, but rather about well-organized Citizens’ Assemblies, playing a permanent legal role in a new style of democracy, which I call Consensual Presidential Democracy. There are signs that such Citizens’ Assemblies will be approved in the EU as part of constitutional changes, perhaps as early as this year. With time, this would offer an entirely new way of making the most important global decisions, such as forming a Human Federation, by randomly selected citizens.

My second optimistic assumption is that a global nuclear war is very unlikely, as is a chemical or biological war, because the aggressor would not be able to benefit from it. However, the temptation will be to rule the world by achieving supremacy in AI. Why, you may ask, can a ‘reasonable’ Supremacist not rule the world by having a supreme advantage in AI? I believe it cannot happen because of what I call the AI Supremacist’s dilemma[4], where even the winner will ultimately succumb to becoming a slave of its evil AI Master. Of course, it will take time for political leaders to understand and accept such a dilemma. That is why I do not believe a truly global government will be created earlier than in the next decade. In the meantime, we may see somewhat chaotic attempts to save the world by a de facto World Government, while the world experiences a series of dangerous cyber-attacks and other existential threats. That may be a powerful motivation for creating a genuinely global government.

Once all Superpowers have joined the Human Federation, all scientific discoveries and progress in the AI development would be fused to assist in creating a mature, friendly, singular Superintelligence. The age of human co-existence with a friendly Superintelligence will then begin.

How may humans evolve into Posthumans starting the Novacene era?

In my recent book ‘Becoming a Butterfly’[14] I have presented a whole scenario of life in the 2040s, a period now sometimes called the world of abundance. That of course assumes that we will have become a truly planetary civilisation with a Human Federation and a World Government.

In the final period leading to Superintelligence becoming a fully mature entity, about 2050, there may be the last elections to the Human Federation’s Parliament. It is quite probable that the majority of the mandates will be won by Transhumans since by then they may already be more digital than biological humans and thus thousands of times more intelligent than any purely biological human. Therefore, they will form the last World Government.

If over the preceding thirty years we have managed the maturing process of Superintelligence properly, then at some stage in the second half of this century the question of humans controlling Superintelligence will no longer have any meaning. Superintelligence will start governing the world in almost all aspects of human life. But how can this come about?

This is still, of course, a pure speculation. I am only presenting a scenario, which in my view is likely (there are of course other possible scenarios), and for which humans should be prepared. The crucial unknown element in this scenario is the feasibility of the so-called mind uploading, including the transfer of consciousness into a digital substrate, which I assume will be possible.

Should this be the case, then Superintelligence will become fully mature once Transhumans start morphing with it by fusing their minds and consciousness into one digital super mind, the Superintelligence. The problem of controlling Superintelligence will cease to exist, since humans will have evolved into fully digital Posthumans, becoming themselves the Superintelligence. We will have entered the period that I call the Novacene, after James Lovelock’s book of the same title.

There will, of course, be still billions of purely biological humans who may morph with Superintelligence later. In the transition period, Superintelligence will be an absolute master providing anything that humans may need and fulfilling their desires. The system of governance will, at least initially, be based on a set of Universal Values of Humanity, which would have been uploaded earlier into a maturing Superintelligence. However, as humans have been changing their values alongside the civilizational progress, so will Superintelligence. Nobody will have any control over that. Even the Superintelligence itself will not be able to foresee how these values may have to change. We will have truly opened a Pandora’s Box, being entirely guided, and governed by Superintelligence.

To those who doubt this largely optimistic vision, I would offer this suggestion of what life may look like beyond 2050. Its author is Muhammad Yunus, the winner of the 2006 Nobel Peace Prize, who describes the future around that time as “A world of three zeros: zero poverty, zero unemployment and zero emissions”.

Conclusions

A human species extinction by the end of this century is quite likely. What a perspective! And yet, making ourselves aware of such an apocalyptic scenario is perhaps the only way that may shake our belief that humans will exist for thousands of generations to come. The Extinction Rebellion, even if you disagree with some less acceptable forms of that protest, did just that. They presented very convincingly certain catastrophic scenarios of the impact of Climate Change. By a sheer coincidence, they also mentioned 2030, the year by which we must have a global control over the development of AI, as a possible tipping point for Climate Change. So, 2030 may become a ‘make it or break it’ date for our species.

The claim by many climatologists that reversing Climate Change after 2030 may be impossible has prompted an unprecedented reaction from some governments and organizations. They have accepted that the problem is indeed very serious and urgent. Here is one example. We are in the middle of the Coronavirus pandemic. And yet, the European Union’s record €750 billion Recovery Fund has allocated 25% of that money to fighting global warming.

Therefore, if you want a dose of optimism — it is here. The fact that such a commendable decision has come from the EU, in the middle of such a deep financial and economic crisis, also tells something equally important. Although many countries have been swayed towards the need to take faster and more decisive action to combat the threat of a climatic catastrophe, very few have actually done anything even remotely comparable with both the speed of making a decision and the amount of money involved, as did the EU.

Here is another recent example. A crucial aspect of minimizing the risk stemming from AI, must be a decades-long incubator programme for delivering a friendly Superintelligence. If it is to be successful, it needs to be a global enterprise. But there is nothing concrete that has come from the UN, China, or any other Superpower in this area yet, apart from the EU. It is the EU, which will introduce legislation by the end of 2021 about a tight control of AI development, which one day may become Superintelligence.

You may really be feeling optimistic by now. But how about the risk of a global nuclear war? Well, as you may remember, I have shown why a global nuclear war is unlikely to happen. It is simply irrational for any state dreaming of ruling the world to use nuclear weapons as the means to achieve that objective. However, we may still endure a decade or two of small wars, which, when combined with other risks, may prove catastrophic. That is why any strategic decisions and activities intended to minimize those risks should be started as soon as possible. The priority should be to set up something like the Global AI Governance Agency that I proposed to the EU Commission in May 2020. The second objective should be the fastest possible creation of the European Federation (EF), within the next few years.

Once the European Federation has been created, it should quickly expand its membership using a fast-track, Zone-facilitated process, whilst being very firm that candidate countries observe democratic principles as the price for joining the EF. Should that happen, then by about 2040 the EF may already include most states. At this stage, since the dominance of the world by any Superpower will be impossible, all Superpowers will join the European Federation, which will then become a Human Federation.

From then on, the whole world will be acting as one global civilization, represented by a Human Federation, which will complete the process of delivering a single, friendly Superintelligence. The world of abundance will have arrived, and the threat of human species extinction may well be over.

However, what may begin instead is the human species evolution. After 2050 we may be living in the Novacene era, when Transhumans will become more and more digitized, until they become Posthumans, a new digital species morphing with Superintelligence, and collectively known as Novacenes.

London, 11/2/2021

[1] Raup, D. M. Extinction: bad genes or bad luck? (WW Norton & Company, 1992).

[2] Tony Czarnecki — ‘Who could save Humanity from Superintelligence?’ 2018, https://www.amazon.co.uk/Who-Could-Save-Humanity-Superintelligence-ebook/dp/B07C4PJF87

[3] Andrew E. Snyder-Beattie, Toby Ord, Michael B. Bonsall, ‘An upper bound for the background rate of human extinction’, Nature, 30/7/2019

[4] Tony Czarnecki — ‘Becoming a butterfly’, Amazon, July 2020, https://www.amazon.co.uk/Becoming-Butterfly-Evolution-Extinction-POSTHUMANS-ebook/dp/B08BK4PCN7/ref=sr_1_1?dchild=1&keywords=Tony+Czarnecki+Becoming+a+butterfly&qid=1612959233&s=digital-text&sr=1-1

[5] Müller, Vincent C. and Bostrom, Nick (forthcoming 2014), ‘Future progress in artificial intelligence: A Survey of Expert Opinion’, in Vincent C. Müller (ed.), Fundamental Issues of Artificial Intelligence (Synthese Library; Berlin: Springer)

[6] Katja Grace, John Salvatier, Allan Dafoe, Baobao Zhang, Owain Evans, ‘When Will AI Exceed Human Performance? Evidence from AI Experts’, Future of Humanity Institute, Oxford University and Yale University, May 2017

[7] ‘Kurzweil Claims That the Singularity Will Happen by 2045’, Futurism, 10/5/2017, https://futurism.com/kurzweil-claims-that-the-singularity-will-happen-by-2045

[8] Cem Dilmegani, ‘995 experts’ opinion: AGI / singularity by 2060 [2021 update]’, AI Multiple, 2/2/2021, https://research.aimultiple.com/artificial-general-intelligence-singularity-timing/

[9] Tony Romm, “IBM is telling Congress not to fear the rise of an AI ‘overlord’”, Vox, 27/6/2017, https://www.vox.com/2017/6/27/15875432/ibm-congress-ai-job-loss-overlord

[10] Stuart Russell, ‘Human Compatible’, Amazon 2020, https://www.amazon.co.uk/Human-Compatible-Artificial-Intelligence-Problem/dp/0525558632/ref=pd_lpo_14_t_0/261-4198312-5800163?_encoding=UTF8&pd_rd_i=0525558632&pd_rd_r=0e30e8d4-fc43-455c-af63-2855ea9b762c&pd_rd_w=OYIHe&pd_rd_wg=dQHF7&pf_rd_p=da0677f5-a47b-4543-8b54-10be576b8f26&pf_rd_r=NSBQVR29KRPRC8MTSS6M&psc=1&refRID=NSBQVR29KRPRC8MTSS6M

[11] ‘We wouldn’t be able to control superintelligent machines’, Max-Planck-Gesellschaft, 11/1/2021, https://www.mpib-berlin.mpg.de/computer-science-superintelligent-machines

[12] Tony Czarnecki, ‘Democracy for a Human Federation’, Amazon, 9/11/2019, https://www.amazon.co.uk/Democracy-Human-Federation-Coexisting-Superintelligence/dp/1689622334

[13] ‘Will humans wipe out humanity?’, The Economist, 4/1/2019.

[14] Tony Czarnecki — ‘Becoming a butterfly’, Amazon, July 2020, https://www.amazon.co.uk/Becoming-Butterfly-Evolution-Extinction-POSTHUMANS-ebook/dp/B08BK4PCN7/ref=sr_1_1?dchild=1&keywords=Tony+Czarnecki+Becoming+a+butterfly&qid=1612959233&s=digital-text&sr=1-1