One of the biggest challenges I have had during my self-study of machine learning is trying to run before I could walk. Towards the end of 2019 I read the book AI Superpowers: China, Silicon Valley, and the New World Order by Kai-Fu Lee, which catapulted me into the field of data science. With much enthusiasm, I started a hands-on machine learning course from Udemy. After about one month's time, I was equipped with knowledge of many modern machine learning models, and eagerly wanted to start numerous personal side projects with this exciting technology. Unbeknownst to me, there was still much to learn.

Before diving into the relevance of algorithms and data structures in data science, it is important to understand their importance in computer science. If I were to describe their importance with a single word, it would be efficiency.

Computers are fast, very fast. Much faster than humans in many tasks. So fast that many people wrongly assume that they are infinitely fast. I know I wrongly reached that conclusion as a freshman in university. Thinking back to my first programming class, the subject of “inefficient code” came up, but I never fully grasped the concept until learning about algorithms and data structures. In that class, we used C++ to write simple programs. On occasion, a TA would inform me that a certain piece of code was inefficient, but I would immediately question this statement, thinking “but it runs in milliseconds, how is this inefficient?” Here is the catch: if you are writing 10 lines of code to parse a 50-character string once, then you will not be able to appreciate algorithms and data structures. In contrast, if you are working with a text file with millions (or more!) of lines, and you do not know how to use algorithms and data structures properly, then your program will either take significantly longer to complete, or perhaps not even complete in your lifetime.

This pitfall became very apparent to me when I was attempting to preprocess a dataset I had found online. It was a CSV file with over 20 years of FOREX data for the USDCAD pair. Each line represented a one-minute window of exchange prices. Doing some quick maths, one can determine that 20 years of FOREX data with a data point at every minute roughly equals 10,512,000 data points. That is, by all measures, an appreciable number of data points. It is not even close to being considered big data, but it is enough data to quickly bog down a processor during preprocessing if inefficient algorithms and data structures are used.
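The figure above can be reproduced with a few lines of arithmetic. This is only a rough sketch: it assumes a 365-day year and one row for every minute of every day, which ignores leap days and any hours when the market is closed.

```python
# Rough row-count estimate for 20 years of one-minute data.
# Assumption: 365-day years, one row per minute, no market closures.
MINUTES_PER_HOUR = 60
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365
YEARS = 20

rows = YEARS * DAYS_PER_YEAR * HOURS_PER_DAY * MINUTES_PER_HOUR
print(f"{rows:,}")  # 10,512,000
```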

When reviewing my data, I noticed that many data points were missing. I thought to myself “this is normal, and I can handle this no problem!”. The first statement is very true: when practicing data science, one of the most time-consuming tasks is data preprocessing. The second statement could not have been further from the truth.

In my novice ignorance, I opened up the Spyder IDE, imported pandas, and loaded my entire CSV file into a pandas DataFrame. Then, I wrote a few lines of code, involving nested for loops, which would fill in each missing data point with the value immediately preceding it as a best approximation. I reviewed my code to ensure proper algorithmic logic, and when satisfied that it would run without errors, I started the script. Seven hours later I stopped the script, unsure why it had not completed on its own. I reviewed the IDE environment, and realized that I had not even gotten through 10% of the DataFrame. There was no way that I could afford to take in excess of 70 hours to clean just a single dataset, but I did not even know what I was doing wrong! Something fundamental was missing from my skill set, and it was keeping me from completing this crucial step of data preprocessing.
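For comparison, here is a minimal sketch of how this kind of gap filling is commonly written in pandas without explicit loops. It is not the original script, which is not shown here. The sketch assumes the rows are indexed by timestamp, and the column name `close` and the sample prices are hypothetical.

```python
import pandas as pd

# Hypothetical toy data: one-minute rows with 00:02 and 00:03 missing.
prices = pd.DataFrame(
    {"close": [1.3001, 1.3004, 1.3002]},
    index=pd.to_datetime(
        ["2020-01-01 00:00", "2020-01-01 00:01", "2020-01-01 00:04"]
    ),
)

# Build the complete minute-by-minute index spanning the data.
full_index = pd.date_range(prices.index.min(), prices.index.max(), freq="min")

# reindex adds NaN rows for the missing minutes;
# ffill then copies the most recent earlier value into each gap.
filled = prices.reindex(full_index).ffill()
print(filled)
```

Both `reindex` and `ffill` operate on whole columns at once, so the work is a single pass over the data rather than a repeated insertion into the middle of a DataFrame.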

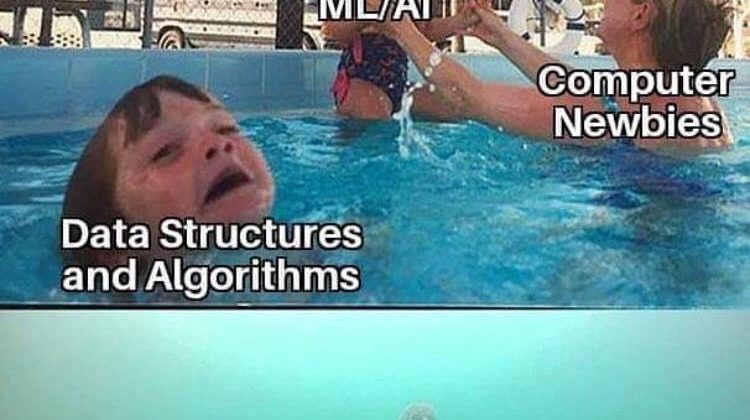

Sometime mid summer 2020, I was browsing Reddit and came across the following meme.

Ironically, this was not the last time that this meme would haunt me. About a week later, my girlfriend sent me the exact same meme. She is a computer engineering graduate from the University of Toronto and works as a software developer for a major telecommunications company in Canada. Despite the obvious humor of the meme, I was starting to realize that algorithms and data structures were a missing piece of the puzzle in my data science journey.

In my quest to improve my data science and machine learning skills, I reached out to Graham Taylor, an associate professor at the University of Guelph who had studied under the renowned Geoffrey Hinton at the University of Toronto. I told Dr. Taylor my story and asked for guidance on my journey. Dr. Taylor told me that he is often asked about machine learning skills and resources, and that of course I would want to make sure that I had the necessary prerequisites: specifically, linear algebra, calculus, probability and statistics, and algorithms and data structures (wow, exactly like the meme).

At the first opportunity I enrolled in an online course to conquer the study of algorithms and data structures. I registered in the Stanford Online Algorithms Specialization on Coursera, taught by Tim Roughgarden, an associate professor of computer science at Stanford University. The specialization consisted of four individual courses, covering a range of subjects in algorithms and data structures in both breadth and depth. Not to be a Coursera shill, but if you are in the same boat I was, I highly recommend this specialization. Be warned though: to succeed you need a strong background in both mathematics and programming, and you will be lost without it.

Dr. Roughgarden did an excellent job teaching, and the unifying theme of the specialization is the guiding question “can we do better?”. This principle is applicable whenever one is writing code, and data science is no exception.

To best illustrate the importance of algorithms and data structures, I have included the video below. This video demonstrates 24 different sorting algorithms, all running at the same rate. Notice how dramatically the completion times vary.
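The same effect can be seen in code by counting comparisons instead of watching a clock. The sketch below is illustrative only: the 24 algorithms in the video are not listed here, so bubble sort and merge sort are chosen as assumed examples of a slow and a fast algorithm, and the input is 2,000 random numbers.

```python
import random


def bubble_sort(values):
    """Return (sorted copy, number of comparisons) using bubble sort."""
    items = list(values)
    comparisons = 0
    for end in range(len(items) - 1, 0, -1):
        for i in range(end):
            comparisons += 1
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
    return items, comparisons


def merge_sort(values):
    """Return (sorted copy, number of comparisons) using merge sort."""
    items = list(values)
    if len(items) <= 1:
        return items, 0
    mid = len(items) // 2
    left, left_count = merge_sort(items[:mid])
    right, right_count = merge_sort(items[mid:])
    merged = []
    comparisons = left_count + right_count
    i = j = 0
    # Repeatedly take the smaller front element of the two sorted halves.
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


random.seed(0)  # fixed seed so repeated runs use the same input
data = [random.random() for _ in range(2000)]

bubble_sorted, bubble_count = bubble_sort(data)
merge_sorted, merge_count = merge_sort(data)

print(f"bubble sort: {bubble_count:,} comparisons")
print(f"merge sort:  {merge_count:,} comparisons")
```

This version of bubble sort always performs n(n-1)/2 comparisons, which is 1,999,000 for 2,000 items, while merge sort needs on the order of n log n. The gap widens quickly as the input grows, which is exactly the “can we do better?” question in action.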