Artificial Neural Network

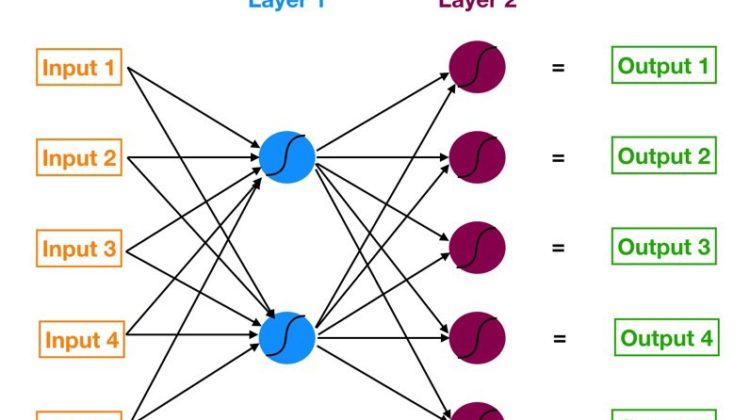

Artificial neural networks, usually simply called neural networks, are computing systems vaguely inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain.

Neural networks are sets of algorithms designed to recognize patterns and interpret data through clustering or labeling. A training algorithm is the procedure that carries out the network's learning process by adjusting its weights.

ANNs are composed of artificial neurons, which are conceptually derived from biological neurons. Each artificial neuron has one or more inputs and produces a single output, which can be sent to multiple other neurons. The inputs can be the feature values of a sample of external data, such as images or documents, or they can be the outputs of other neurons.
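A single neuron of this kind can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the weighted sum followed by a sigmoid is one common choice of neuron model, and the feature values, weights, and the names `neuron_output` and `downstream` are made up for the example.

```python
import math

def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Return the single output of one artificial neuron."""
    # Weighted sum of all inputs plus a bias term.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function turns the sum into the neuron's output.
    return sigmoid(z)

# Hypothetical feature values and weights, chosen only for illustration.
features = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
out = neuron_output(features, weights, bias=0.1)

# The same output value can be sent to several downstream neurons.
downstream = [neuron_output([out], [w], 0.0) for w in (1.0, -1.0)]
print(out, downstream)
```

The neuron takes three inputs but produces one number, and that number then serves as an input to two other neurons.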

Google makes heavy use of neural networks, deep learning and AI. It has launched multiple AI chatbots that answer messages for you (like a more sophisticated auto-response email) in a range of contexts, including Skype, Slack and Twitter direct messages.

But Google’s strongest point in this area is probably the range of cloud-based services it offers developers, including the Google Cloud AI machine learning tools.

ANNs can be used to solve problems involving:

- Tabular data

- Image data

- Text data

An artificial neural network can approximate a very wide class of nonlinear functions, which is why these networks are popularly known as universal function approximators. Given enough hidden units, an ANN can learn weights that map inputs to outputs to any desired accuracy for continuous functions on a bounded domain.

One of the main reasons behind universal approximation is the activation function. Activation functions introduce nonlinearity into the network, which lets it learn complex relationships between input and output.
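As a small illustration of what a nonlinear activation buys, the sketch below builds a tiny network that computes XOR, a function no purely linear model can represent. The weights are hand-picked rather than learned, ReLU is assumed as the activation, and the name `xor_net` is hypothetical.

```python
def relu(z):
    """Rectified linear unit: pass positive values, clamp negatives to 0."""
    return max(0.0, float(z))

def xor_net(x1, x2):
    """Two hidden ReLU units followed by a linear output unit."""
    # Hidden layer with hand-picked (not learned) weights and biases.
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1.0)
    # Output layer: a plain linear combination of the hidden units.
    return h1 - 2.0 * h2

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_net(a, b))  # outputs 0.0, 1.0, 1.0, 0.0 in turn
```

Without the ReLU calls, the whole expression would reduce to a linear function of `x1 + x2`, which cannot be 0 at both (0, 0) and (1, 1) while being 1 in between.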

Perceptron

In a perceptron, the output of each neuron is the activation applied to a weighted sum of its inputs. What happens if there is no activation function? Every layer is then a linear map, and a stack of linear maps is itself linear, so the network can only learn a linear function and can never capture complex relationships.
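The effect of removing the activation can be checked numerically. In the sketch below, two layers without activation give exactly the same result as one layer whose weight matrix is the product of the two. The matrices `W1` and `W2` and the input `x` are arbitrary values chosen for illustration, and biases are left out for brevity.

```python
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply matrix a by matrix b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

# Arbitrary weights for two layers with no activation function.
W1 = [[1.0, 2.0], [0.0, -1.0]]
W2 = [[3.0, 1.0], [-2.0, 0.5]]
x = [0.5, -1.5]

# Passing x through both layers in sequence...
two_layers = matvec(W2, matvec(W1, x))
# ...matches a single layer whose weights are the product W2 * W1.
one_layer = matvec(matmul(W2, W1), x)

print(two_layers, one_layer)
```

However many such layers are stacked, they collapse into one linear map, so the extra depth adds no expressive power until a nonlinearity is placed between the layers.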

One common problem in all these neural networks is the vanishing and exploding gradient. This problem is associated with the backpropagation algorithm, which updates the weights of a neural network by computing the gradient of the loss with respect to each weight.

Backward Propagation
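To make the weight update concrete, here is a deliberately tiny sketch: one weight, one training example, and a squared-error loss. It shows only the gradient step that backpropagation feeds, not a full multi-layer implementation. The values of `x`, `target`, and `learning_rate` are assumptions picked for the example.

```python
def loss(w, x, target):
    """Squared error of the prediction w * x."""
    return (w * x - target) ** 2

def grad(w, x, target):
    """Derivative of the loss with respect to w, via the chain rule."""
    return 2.0 * (w * x - target) * x

w = 0.0                  # initial weight
x, target = 2.0, 4.0     # one hypothetical training example
learning_rate = 0.1      # assumed step size

history = [loss(w, x, target)]
for _ in range(20):
    # Move the weight a small step against the gradient.
    w -= learning_rate * grad(w, x, target)
    history.append(loss(w, x, target))

print(w, history[0], history[-1])
```

The weight moves toward 2.0, the value for which `w * x` equals the target, and the loss falls at every step. In a real network the same update is applied to every weight, with backpropagation supplying each gradient.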

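So, in a very deep neural network (one with a large number of hidden layers), the gradient is multiplied by one factor per layer as it propagates backward. If those factors are mostly smaller than 1, the gradient shrinks toward zero (the vanishing gradient); if they are mostly larger than 1, it grows without bound (the exploding gradient).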
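The following sketch makes this visible on a toy chain of one-unit layers. It multiplies together the per-layer factors that backpropagation would accumulate between the output and the input. The depth of 30, the weights, and the function name `chain_gradient` are assumptions for illustration; real networks have many units per layer, but the multiplicative effect is the same.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # never larger than 0.25

def chain_gradient(weight, depth, act, act_prime, x=0.5):
    """d(output)/d(input) for `depth` stacked one-unit layers."""
    a, gradient = x, 1.0
    for _ in range(depth):
        z = weight * a
        # Chain rule: each layer contributes a factor w * act'(z).
        gradient *= weight * act_prime(z)
        a = act(z)
    return gradient

# Sigmoid layers with weight 1.0: every factor is at most 0.25.
vanishing = chain_gradient(1.0, 30, sigmoid, sigmoid_prime)

# Linear layers with weight 1.5: every factor is exactly 1.5.
exploding = chain_gradient(1.5, 30, lambda z: z, lambda z: 1.0)

print(vanishing, exploding)
```

After 30 layers the first gradient is far too small to move the early weights, while the second has grown to roughly 1.5 to the power 30, large enough to destabilise training.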