Lianmin Zheng (who worked on autoscheduling as an intern here at OctoML) just published a blog post on the new autoscheduling capabilities, which are now upstreamed and available in TVM.

Autoscheduling enables higher-performance end-to-end model optimization from TVM, while also making it even easier than before for users to write custom operators.

Be sure to check out the full post above for the details, but I wanted to give a quick summary here for those of you in a rush.

What is autoscheduling?

First off, if you’re just using TVM to optimize your end-to-end model performance, then just follow the tutorials below to enjoy the increased performance. But if you want to write custom operators, or understand what TVM is doing under the hood to compile your model, then read on.

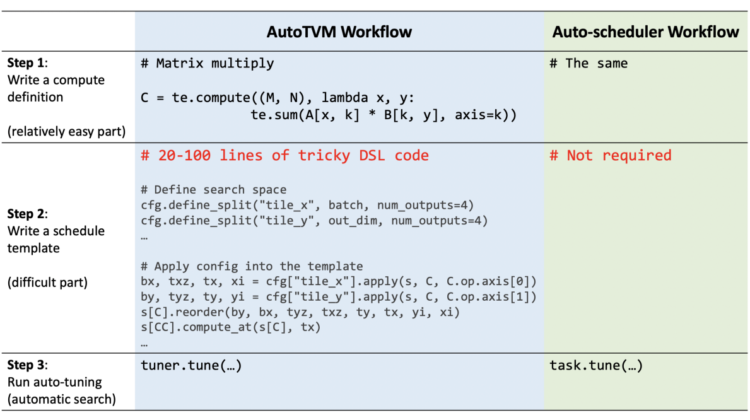

Today, as you can see from the figure, AutoTVM makes it easy to describe a compute definition and then provide the structure (but not the specific parameters) for how to lower that computation onto a class of hardware (e.g., GPUs). AutoTVM tuning can then be applied to find specific instances of that schedule that give high-performance execution for a particular operator (with instantiated shapes and parameters) on particular hardware.
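To make the template idea concrete, here is a minimal sketch in plain Python. It is a toy, not TVM code: `matmul_reference`, `matmul_tiled`, and `tune` are hypothetical names made up for illustration. The reference function plays the role of the compute definition, the tiled loop nest plays the role of a hand-written schedule template with two open knobs, and the tuner simply times every knob setting and keeps the fastest.

```python
import itertools
import time


def matmul_reference(a, b, n):
    """The 'compute definition': states what to compute, not how to loop."""
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def matmul_tiled(a, b, n, tile_i, tile_j):
    """The 'schedule template': a fixed tiled loop structure, written by hand,
    with the two tile sizes left open as tunable knobs."""
    c = [[0] * n for _ in range(n)]
    for i0 in range(0, n, tile_i):
        for j0 in range(0, n, tile_j):
            for i in range(i0, min(i0 + tile_i, n)):
                for j in range(j0, min(j0 + tile_j, n)):
                    acc = 0
                    for k in range(n):
                        acc += a[i][k] * b[k][j]
                    c[i][j] = acc
    return c


def tune(a, b, n, knob_values):
    """Toy tuner: try every knob setting, measure it, keep the fastest."""
    best = None
    for tile_i, tile_j in itertools.product(knob_values, repeat=2):
        start = time.perf_counter()
        matmul_tiled(a, b, n, tile_i, tile_j)
        elapsed = time.perf_counter() - start
        if best is None or elapsed < best[1]:
            best = ((tile_i, tile_j), elapsed)
    return best


N = 16
A = [[i + j for j in range(N)] for i in range(N)]
B = [[i - j for j in range(N)] for i in range(N)]
KNOBS = [1, 2, 4, 8]
best_config, best_time = tune(A, B, N, KNOBS)
```

On inputs this small the timings are noisy, so the winning configuration will vary from run to run. The point is only the division of labor: a person writes the loop structure, and the tuner fills in the numbers for a given shape and machine.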

Autoscheduling in TVM makes this even easier by removing the need to describe even the high-level schedule template, going straight from a high-level compute definition to a high-performing binary output.
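A rough way to picture the difference, again as a plain-Python toy rather than TVM's actual algorithm: below, nobody writes a loop structure by hand. The candidate schedules (loop order plus a tile size per axis) are derived from the axis extents alone, and a search picks the fastest one. All names here (`generate_schedules`, `run`, `matmul_with`, `auto_schedule`) are hypothetical, and the exhaustive timing loop is an assumption made for simplicity; TVM's real search is far more sophisticated.

```python
import itertools
import time


def divisors(n):
    return [d for d in range(1, n + 1) if n % d == 0]


def generate_schedules(extents):
    """Derive candidate schedules from the axis extents alone:
    every loop order, combined with every evenly dividing tile size."""
    for order in itertools.permutations(extents):
        tile_choices = [divisors(extents[ax]) for ax in order]
        for tiles in itertools.product(*tile_choices):
            yield order, dict(zip(order, tiles))


def run(schedule, extents, body):
    """Execute `body` once per point, visiting tiles in the scheduled order."""
    order, tiles = schedule
    outer = [range(0, extents[ax], tiles[ax]) for ax in order]
    for starts in itertools.product(*outer):
        inner = [range(s, s + tiles[ax]) for s, ax in zip(starts, order)]
        for point in itertools.product(*inner):
            body(**dict(zip(order, point)))


def matmul_with(schedule, a, b, n):
    """The compute definition is just the loop body; the schedule comes from outside."""
    c = [[0] * n for _ in range(n)]

    def body(i, j, k):
        c[i][j] += a[i][k] * b[k][j]

    run(schedule, {"i": n, "j": n, "k": n}, body)
    return c


def auto_schedule(a, b, n):
    """Toy search: time every generated schedule and keep the fastest."""
    best = None
    for schedule in generate_schedules({"i": n, "j": n, "k": n}):
        start = time.perf_counter()
        matmul_with(schedule, a, b, n)
        elapsed = time.perf_counter() - start
        if best is None or elapsed < best[1]:
            best = (schedule, elapsed)
    return best


N = 4
A = [[i + j for j in range(N)] for i in range(N)]
B = [[i - j for j in range(N)] for i in range(N)]
best_schedule, best_time = auto_schedule(A, B, N)
```

The user-facing input shrinks to the body of `matmul_with`. Everything about loop order and tiling is generated and searched automatically, which is the workflow change described above.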

Not only does this make TVM easier to use and more powerful, it also improves performance by up to 9x on some workloads.