Machine learning models produce numerical predictions, so there is not much to show apart from the confusion matrices. The best way to evaluate our models’ performance is to look at different accuracy scores.

Classification Accuracy is what we usually mean when we use the term accuracy. It is probably the most straightforward and intuitive metric for classifier performance: the ratio of the number of correct predictions to the total number of input samples. It works well only if there is a roughly equal number of samples belonging to each class.

F1 Score is the Harmonic Mean of precision and recall. The range for F1 Score is [0, 1]. It tells you how precise your classifier is (how many of its positive predictions are correct), as well as how robust it is (it does not miss a significant number of positive instances).

Area Under Curve (AUC) is one of the most widely used metrics for evaluation. It is used for binary classification problems. The AUC of a classifier is equal to the probability that the classifier will rank a randomly chosen positive example higher than a randomly chosen negative example.
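The ranking interpretation of AUC can be checked directly by counting pairs. The sketch below is only an illustration: the labels and scores are hypothetical, not taken from my survey, and `auc_by_ranking` is a helper name I introduce here.

```python
from itertools import product

def auc_by_ranking(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p, n in product(pos, neg)
    )
    return wins / (len(pos) * len(neg))

# Hypothetical labels (1 = highly engaged) and classifier scores.
labels = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]

auc = auc_by_ranking(labels, scores)
print(f"AUC = {auc:.3f}")
```

Here 8 of the 9 positive/negative pairs are ordered correctly, so the AUC is 8/9.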

Recall is the number of correct positive results divided by the number of all relevant samples (all samples that should have been identified as positive), which means the percent of truly positive instances that were classified as such.

Precision is the number of correct positive results divided by the number of positive results predicted by the classifier, which means the percentage of positive classifications that are truly positive.
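Accuracy, precision, recall and F1 can all be derived from the four cells of a confusion matrix. The following is a minimal sketch with hypothetical counts (they are not my model's results), chosen to show how accuracy can look excellent on imbalanced classes while recall stays modest.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts for an imbalanced problem: 5 positives among 100 samples.
metrics = classification_metrics(tp=3, fp=1, fn=2, tn=94)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts the accuracy is 0.97 although the classifier misses 2 of the 5 positive cases (recall 0.6), which is why accuracy alone is not enough when one class is rare.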

Mean Absolute Error (MAE) is the average of the absolute difference between the Original Values and the Predicted Values. It gives us the measure of how far the predictions were from the actual output.

Mean Squared Error (MSE) is quite similar to Mean Absolute Error, the only difference being that MSE takes the average of the square of the difference between the original values and the predicted values. The advantage of MSE is that its gradient is easier to compute.

Root Mean Squared Error (RMSE) is the square root of the MSE. It is more appropriate than the MAE to represent model performance when the error distribution is expected to be Gaussian. It avoids the use of the absolute value, which is highly undesirable in many mathematical computations.

R-squared (R²) is the percentage of the response variable variation that is explained by a linear model. The maximum value of R² is 1, but it may take a negative value when the model fits worse than simply predicting the mean.
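The four error measures above are closely related, which a few lines of code make visible. This is a minimal sketch on hypothetical values, not on my dataset; `regression_metrics` is an illustrative helper.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return (MAE, MSE, RMSE, R²) for paired true and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                  # unexplained variation
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total variation
    r2 = 1 - ss_res / ss_tot
    return mae, mse, rmse, r2

# Hypothetical original and predicted values.
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.5, 5.0, 3.0, 6.5]

mae, mse, rmse, r2 = regression_metrics(y_true, y_pred)
print(f"MAE={mae:.3f}  MSE={mse:.3f}  RMSE={rmse:.3f}  R2={r2:.3f}")
```

Because the errors are squared before averaging, the single error of 1.0 weighs more in the MSE and RMSE than in the MAE.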

Variance inflation factor (VIF) is a measure of the amount of multicollinearity in a set of multiple regression variables. It is calculated for each independent variable. A high VIF indicates that the associated independent variable is highly collinear with the other variables in the model.
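For intuition, the VIF of a variable is 1 / (1 - R²), where R² comes from regressing that variable on the other independent variables. The sketch below covers only the simplest case of two predictors, where that R² is the squared Pearson correlation between them; the data are hypothetical and the helper names are mine.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def vif_two_predictors(x1, x2):
    """VIF shared by both variables when the model has exactly two predictors."""
    r_squared = pearson_r(x1, x2) ** 2
    if r_squared >= 1.0:
        return math.inf  # perfectly collinear predictors
    return 1.0 / (1.0 - r_squared)

# Hypothetical predictor values, for illustration only.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 5]

vif = vif_two_predictors(x1, x2)
print(f"VIF = {vif:.2f}")
```

With more than two predictors, each VIF requires a full regression of one variable on all the others, which this sketch does not attempt.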

All in all, it looks like the Artificial Neural Network model Multi-Layer Perceptron reached the best results in all accuracy scores, and it has the lowest error rates (MAE, MSE). However, the VIF for this model is above 5, which means that my model also has the highest multicollinearity level.

I will keep working with this one, trying to find a way to make its predictive work even better.

Machine learning works well. But after going through this workflow, and even though the model results look sensible, I have the feeling that something is missing to answer my research question. As you might have noticed, one severe shortcoming is that it does not account for the uncertainty of the model or the confidence in its output.

Inferential statistics use the data to learn about the population that the sample of data is thought to represent, in my case the highly engaged citizens of a Smart-City. With statistical inferences, it is possible to reach conclusions that extend beyond the actual dataset.

While machine learning models are used to make predictions inside the dataset, probabilistic models are used to make predictions beyond it, using the probability that an event occurs and statistical hypothesis testing.

In this third part of my study, I’ll use probabilistic models and the analysis of variance (ANOVA) in order to frame my predictions on highly engaged citizens in the most accurate way. Whatever level of assumption is made, a correctly calibrated inference generally requires some assumptions to be correct. I will present them first, then I will be able to compare my actual results with the predicted ones. If both are very close, my inferences would be right and I would be able to validate my ranking model at a wider scale.

Estimating probabilities with a probabilistic model means formulating my problem as follows: I am investigating the citizens of a city, looking for highly engaged citizens. I know that the overall population of a city can be divided into highly engaged citizens and non-highly engaged citizens, but I don’t know how many of each there are. By conducting a survey on a random sample of the population, I found that highly engaged citizens make up approximately 4.4% of my whole dataset (16 out of 366 respondents). Assuming that each citizen of this class had an equal chance to appear in my sample, I want to estimate their prevalence in the whole population of citizens in Smart-Cities.

At least two questions come to mind:

– How can I be sure that my sample is representative of the whole population? I need to include uncertainty in my estimation, considering the limited data.

– How can I incorporate prior beliefs about highly engaged citizens into this estimation?

The inferential statistics method called Bayesian inference makes it possible to express uncertainty and prior beliefs.

To solve this problem with a Bayesian model, I will need to assume that:

– The chances of reaching a highly engaged citizen are independent of each other. (I am not spreading my survey in some niche of engaged citizens)

– Any citizen can potentially be highly engaged and match with my definition. (There is no bias that would reserve this class to a niche population)

Since my population can be divided into two classes of citizens, the highly engaged and those who are not, the number of highly engaged citizens in a sample follows a binomial distribution. In probability theory, the binomial distribution with parameters n and p is the discrete probability distribution of the number of successes in a sequence of n independent experiments, each asking a yes-no question, and each with its own boolean-valued outcome: success (with probability p) or failure (with probability q = 1-p). In my problem, p is the ultimate objective: I want to figure out, from the observed sample data, the probability of meeting a highly engaged citizen among all Smart-City citizens. In statistics, a single success/failure experiment is a Bernoulli trial, and the binomial distribution models the number of successes in a sample of size n drawn with replacement from a population of size N.
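One common way to combine a prior belief with a binomial sample is the Beta-Binomial model: a Beta(a, b) prior on p becomes a Beta(a + successes, b + failures) posterior. The sketch below is not the model I fitted; it assumes a uniform Beta(1, 1) prior (an assumption of this example) and approximates a 95% credible interval by simulation with the standard library.

```python
import random

successes, n = 16, 366          # highly engaged respondents out of all respondents
failures = n - successes

# ASSUMPTION: a uniform Beta(1, 1) prior, i.e. no prior preference for any value of p.
prior_a, prior_b = 1.0, 1.0

# Conjugate update: the posterior is again a Beta distribution.
post_a = prior_a + successes
post_b = prior_b + failures
posterior_mean = post_a / (post_a + post_b)

# Approximate 95% credible interval from posterior draws (fixed seed for repeatability).
rng = random.Random(0)
draws = sorted(rng.betavariate(post_a, post_b) for _ in range(20000))
lower = draws[int(0.025 * len(draws))]
upper = draws[int(0.975 * len(draws)) - 1]

print(f"posterior mean = {posterior_mean:.4f}")
print(f"95% credible interval = ({lower:.4f}, {upper:.4f})")
```

A more informative prior would be encoded by choosing other values for `prior_a` and `prior_b`; the update rule stays the same.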

My sampling distribution helps to estimate the population statistic. The overall system of interest, where a population of citizens can be divided into 2 discrete classes (highly engaged and non-highly engaged citizens) and 366 independent respondents are drawn, follows a binomial distribution, whose Probability Mass Function is P(X = k) = C(n, k) p^k (1-p)^(n-k).
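The Probability Mass Function of this binomial system can be tabulated in a few lines. This is a minimal sketch that plugs in the observed proportion 16/366 as p, which is an assumption since the true p is exactly what I am trying to estimate.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials with success probability p."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)

n = 366
p = 16 / 366  # ASSUMPTION: the observed sample proportion stands in for the unknown p

pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]
mode = max(range(n + 1), key=pmf.__getitem__)

# Print the part of the distribution that carries most of the probability.
for k in range(8, 25):
    print(f"P(X = {k:2d}) = {pmf[k]:.4f}")
print(f"most likely count: {mode}")
```

The probabilities sum to 1 over all possible counts, and the most likely count coincides with the 16 highly engaged respondents I observed.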

The Central Limit Theorem states that, no matter the shape of the population distribution, the sampling distribution of the mean approaches a normal distribution as the sample size grows. This gives us a mathematical advantage to estimate the population statistic. The sample size has to be sufficient (generally more than 50) for the sampling distribution to satisfactorily approach a normal curve. Care also has to be taken to keep the sample size fixed across samples, since the spread of the sampling distribution depends on it. As we increase the sample size, the sampling distribution squeezes from both sides, giving us a better estimate of the population statistic, since it generally lies somewhere in the middle of the sampling distribution.

As for my study, the proportion of 4.4% of highly engaged citizens in a random sample of a city population is already a mean generated out of my 3 case studies. The Central Limit Theorem allows me to assume that if I investigated 100 Smart-Cities of the world with the same data collection protocol, the final mean of the proportions of highly engaged citizens would stay close to 4.4%.
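This thought experiment can be simulated. The sketch below is purely illustrative: it assumes that the true proportion equals my observed 16/366 and that each of the 100 hypothetical cities yields 122 respondents (my reading of the per-city percentages, not a figure stated above).

```python
import random
import statistics
from math import sqrt

rng = random.Random(42)   # fixed seed so the simulation is repeatable

p_true = 16 / 366         # ASSUMPTION: observed proportion used as the true one
n_respondents = 122       # ASSUMPTION: respondents surveyed in each city
n_cities = 100            # hypothetical number of Smart-Cities investigated

def survey_proportion(n, p):
    """Simulate one city survey and return the share of highly engaged respondents."""
    return sum(rng.random() < p for _ in range(n)) / n

proportions = [survey_proportion(n_respondents, p_true) for _ in range(n_cities)]

mean_of_proportions = statistics.mean(proportions)
spread = statistics.stdev(proportions)
theoretical_se = sqrt(p_true * (1 - p_true) / n_respondents)

print(f"mean of city proportions: {mean_of_proportions:.4f} (true p = {p_true:.4f})")
print(f"observed spread: {spread:.4f}, theoretical standard error: {theoretical_se:.4f}")
```

Individual cities still vary noticeably around the mean, which is worth keeping in mind when comparing only three of them.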

Being approximately normally distributed, the mean proportions of highly engaged citizens in Smart-City populations fall inside a confidence interval to be determined by our hypothesis testing.
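As a rough illustration of such an interval, the normal approximation gives a 95% confidence interval of the form p ± z·sqrt(p(1-p)/n). The sketch below applies it to the pooled sample; it is an approximation that can be loose for rare classes, and not necessarily the interval I would finally report.

```python
from math import sqrt
from statistics import NormalDist

successes, n = 16, 366
p_hat = successes / n

# z-value for a two-sided 95% interval (about 1.96).
z = NormalDist().inv_cdf(0.975)

standard_error = sqrt(p_hat * (1 - p_hat) / n)
ci_low = p_hat - z * standard_error
ci_high = p_hat + z * standard_error

print(f"p_hat = {p_hat:.4f}")
print(f"95% confidence interval = ({ci_low:.4f}, {ci_high:.4f})")
```

Under this approximation the interval runs from roughly 2.3% to 6.5%.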

Hypothesis testing is all about the validity of making claims from a sample. With the advent of data-driven decision making in business, science, technology, social, and political undertakings, the concept of hypothesis testing has become critically important to understand and apply. This method allows a sample statistic to be checked against a population statistic.

Hypothesis testing is defined in two terms: a Null Hypothesis and an Alternate Hypothesis. The Null Hypothesis usually claims that the inference is wrong; the Alternate Hypothesis says the exact contrary. In my case:

– Null Hypothesis: I can’t use the highly engaged variable to rank citizen engagement in Smart-Cities because randomly selected citizens in a Smart-City do not have a 4.4% chance of being highly engaged.

– Alternate Hypothesis: I can use the highly engaged variable to rank citizen engagement in Smart-Cities because randomly selected citizens in a Smart-City do have a 4.4% chance of being highly engaged.

The Null Hypothesis is assumed to be true, and statistical evidence is required to reject it in favour of the Alternative Hypothesis. Really, what I am asking is: how confident am I in the claim that my ranking model is correct?

In other words, I want to answer the question: “What is the chance for any random sample of inhabitants in any Smart-City worldwide to reach 4.4% of highly engaged citizens?”

This chance is assessed through the p-value (or probability value), the probability of observing results at least as extreme as mine if the Null Hypothesis were true, and this is what I want to evaluate. If this p-value is less than a pre-determined significance level, usually α = 0.05, I have evidence against the Null Hypothesis; I can then reject it in favour of the Alternative Hypothesis.

My issue is that I don’t know the real proportion of highly engaged citizens in the whole population of citizens in Smart-Cities worldwide, and so I can’t compare my prediction with the reality.

When the population parameters (mean and standard deviation) are not known, the best I can do is to estimate them from my samples. Is there a significant difference in the proportion of highly engaged citizens between cities?

Composition of Data:

– Overall proportion of highly engaged citizens: 4.37%

– Taipei: 6.55% of highly engaged citizens

– Tallinn: 4.92% of highly engaged citizens

– Tel Aviv: 1.64% of highly engaged citizens

Statistical Test :

The Analysis of variance or ANOVA test is a way to find out if survey or experiment results are significant when we want to compare more than two groups at the same time. In other words, the ANOVA test helps you to figure out if you need to reject the null hypothesis or accept the alternate hypothesis, based on the results coming out of different independent samples. Basically, it tells whether two or more groups are similar or different based on their means and the F-statistic.

Performing an F-test is quite straightforward: you simply divide one variance by the other and compare your answer with a critical value obtained from a table. When two sample variances are compared, the larger value goes on top of the division so that the ratio is at least one; in a One-Way ANOVA, the ratio is the variance between groups divided by the variance within groups.

INTERPRETATION :

The purpose of this statistic is to test for a difference in the proportion of highly engaged citizens between cities. Conducting a One-Way ANOVA using the SciPy library, I found an F-statistic of 1.83 and a p-value of 0.16. Considering the usual significance level of 0.05, I can’t find enough evidence to reject the Null Hypothesis. There is no statistically significant difference between my samples.
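The F-statistic can also be reproduced by hand, which shows what the test actually computes. The sketch below is a reconstruction, not my original script: it assumes 122 respondents per city with 8, 6 and 2 highly engaged citizens (counts inferred from the percentages above), and it uses a closed-form p-value that is only valid when exactly three groups are compared. Passing the same three groups to `scipy.stats.f_oneway` should give the same statistic.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on lists of values."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

def f_survival_two_df(f_stat, df_within):
    """P(F > f_stat) when the numerator has exactly 2 degrees of freedom."""
    return (1 + 2 * f_stat / df_within) ** (-df_within / 2)

def city(engaged, respondents=122):
    """Binary sample: 1 for a highly engaged respondent, 0 otherwise."""
    return [1] * engaged + [0] * (respondents - engaged)

# ASSUMPTION: counts inferred from 6.55%, 4.92% and 1.64% of 122 respondents each.
groups = [city(8), city(6), city(2)]  # Taipei, Tallinn, Tel Aviv

f_stat, df_between, df_within = one_way_anova(groups)
assert df_between == 2  # the closed-form p-value below needs three groups
p_value = f_survival_two_df(f_stat, df_within)

print(f"F = {f_stat:.2f}, p-value = {p_value:.2f}")
```

Under these assumptions the sketch recovers the reported F of about 1.83 and p-value of about 0.16.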

However, these statistically non-significant results are not the end of my study. We can think of an irregularity in the data collection process which led to an artificial imbalance in the target group. I am notably thinking of the fact that I collected my survey answers in Tel Aviv during the first wave of the Covid-19 pandemic. This unprecedented situation affected a lot of people, especially in a Mediterranean city where the way of life is largely about enjoying outdoor activities, social contact and night life. The lockdown measures could have significantly affected the way people perceived their feeling of engagement, and other correlated variables like the total number of meeting places, or the ease of sharing one’s opinion in public. This could certainly affect the final score of highly engaged citizens.

Besides, there is still some room for manoeuvre to end up rejecting a Null Hypothesis of this kind. The first way I am thinking about is to reconsider my definition of a highly engaged citizen, in order to reduce the imbalance between my three case studies. Other leads could be to collect more case studies in other cities, or more respondents in my current case studies to vary the sample size. I could also reformulate my Null Hypothesis, and maybe think about a different statistical test to conduct on my hypothesis.

Models are approximations of the complex dynamics that drive the observable phenomena in the world around us. They provide the setting in which we can formalise learning and decision making and hence are a foundational aspect to any rigorous analysis.

In this study, I have used 366 answers of inhabitants of 3 different Smart-Cities of the world : Taipei (Taiwan), Tel Aviv (Israel) and Tallinn (Estonia).

First I had to clean and transform the data in order to make them easily interpretable and to conduct different calculations on their potential relationships. I have been able to identify many correlations between the variables of my hypothesis, like the relationship between the engagement feeling and the wish for and frequency of engagement. I also showed a link between the ability to share one’s opinion in public and the ability to change one’s opinion through contact with others, both related to the level of engagement feeling, leading to the conclusion that the more people feel engaged, the more they engage in their city by getting in touch with more peer citizens. I also broke some preconceived opinions, for instance on age, showing that young citizens feel more engaged than older ones, and that age has no influence on the openness to voting apps as a channel for citizen engagement. Even more surprising for me: the engagement feeling has no relationship with the ability to meet peers in the neighbourhood, which says a lot about most participative methods tending to promote in-person meeting groups rather than online ones.

After revealing the evidence of correlated variables, I created a combined variable matching a definition of a highly engaged citizen in a Smart-City. The Recursive Feature Elimination technique allowed me to select the best features to represent my variables in a model while avoiding redundancy between related variables. I found out that Engagement Feeling, Total Sources of information to shape an opinion, and Total Messaging Apps used to communicate with peers are the three most important variables to best represent the population of highly engaged citizens. This information has been the input for four Machine Learning classification models: Logistic Regression, K-Nearest Neighbours, Multi-Layer Perceptron and Support Vector Machines. These four models have been used to predict the results of a random 2/3 sample of my data, after training on the remaining 1/3. Three of the four predictive models reach a satisfactory accuracy score, but the best of them is the Multi-Layer Perceptron, an Artificial Neural Network used to classify data points.

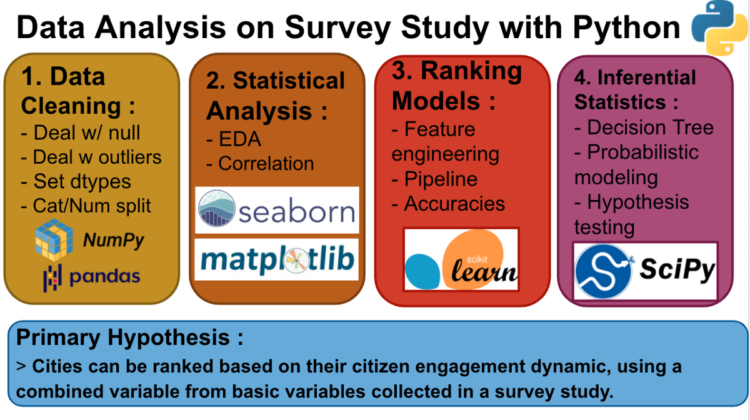

However, these great results in automatically ranking my citizen population into highly engaged citizens and the rest still needed to be evaluated at a wider scale. In a third part, I used inferential statistics methods to test the generalisability of my assumption: my definition of Highly Engaged citizens allows me to classify the whole population of Smart-City citizens worldwide. To test this last hypothesis, I conducted an analysis of variance (ANOVA) on my three case studies. It finally appears that the imbalance in the spread of highly engaged citizens between my three case study cities does not allow me to statistically validate the ranking model. I will have to conduct some more analysis to prove my inference.

Julien Carbonnell: After some years as a Real Estate Developer in Perpignan, South of France, I founded my first startup, dedicated to gathering neighbors in decision-making on local urban planning. I then engaged in a PhD thesis on “Stakeholder Engagement in Smart-Cities” to get a wider look at this global market. My approach consists in comparing the Smart-City models and the Civic-Technology strategies for participatory decision-making in three culturally distant cities in the world: Taipei, Taiwan (Asia), Tel-Aviv, Israel (Middle-East) and Tallinn, Estonia (North-Eastern Europe). My academic research laboratory of reference is based at the University of Cergy-Pontoise, Paris area, France.