For those of you who don’t know me — Hi! My name is Matt, I am a senior at Columbia University and a die hard S.T.E.M guy.

This semester I enrolled in Professor Washburne’s Salsa, Soca, & Reggae class which marked my first soiree into the realm of humanities and, unknowingly, the start of my own musical journey. During this class, I developed a specific skill set that allowed me to explore my culture and roots through music — a practice that I came to know as Sonic Archaeology.

Through this skill, I was able to realize that the connections between music equates to connections between people and that the metamorphosis of song genres hides within it the story, the struggle and the growth of those who came before us. As I continued through the course, I also began to notice that many of the histories, and consequently the songs, had similar motifs and components — which is obvious given the interdependent history that these genres all share.

However, this hindsight only came to me after many hours of diligent listening to a variety of genres and musical styles and even more hours reflecting upon what I heard. As an avid programmer however, I began to wonder — we know that it is possible for anyone to develop these skills and train your ears sharp enough in order to obtain these insights, but would it be possible to automate this? Instead of taking weeks and hours, is it possible to achieve the same skill in minutes with a machine instead? Or does the practice of Sonic Archaeology have an unstated axiom that precludes a programmatic translation? Thus arose the motivation for my field work project — is it possible to codify characteristics of the various genres I came to learn throughout the semester and create an algorithm that is able to distinguish these characteristics as accurately as I could?

The first challenge in this endeavor was creating a usable data set that was large enough to represent the subset of music that was focused on in class. In order to do so, I decided to look into the various characteristics of the genres and see if there were ways to create analogous digital forms. But where to start? To further break this problem down — I decided to start with a particular songs and began to scan for repeatable elements that mapped to certain sound wave fragments that can be extracted and fed into the algorithm.

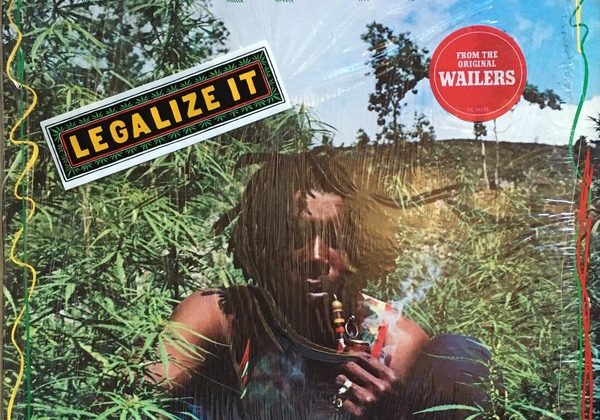

In the case of the codification of reggae, I chose to analyze Peter Tosh’s “Legalize It”. I searched for elements of the song that remained consistent throughout. The first element that fit the bill was the ska stroke that was repeated for the entirety of the song ( and is also present in a wide selection of other reggae songs). The use of the “one drop” drum rhythm was also of interest since it is one of the other core elements that give reggae songs their defining feature. However, I soon ran into another wall. Although I had found these two elements, there were usually many more that were not necessarily consistent throughout. Furthermore, I noticed that although the vocals for followed a similar cadence in this song, there were still some irregularities due to the structure of the song. Choruses and call and responses are often sprinkled throughout resulting in further distortion of the consistent elements.

What I then decided to turn to was the instrumentation analysis instead — search for particular instruments within certain genres in order to classify them.

This time around I decided to approach Salsa and chose to try to break apart Machito’s “Yo Soy La Rumba”. In similitude to the Reggae approach, I tried to find defining elements but rather than finding consistent musical techniques I decided to hone in on particular instruments. So in the case of salsa, consistent elements that I had found were the guiro and the cowbell. As I had learned in class, these two elements are usually the time keepers for the entire band which would leave me to believe that their presence would help define pattern in the sound wave — turns out this wasn’t the case either. In many of the modern New Yorican salsa songs that have trumpet ( or piano ) based solos interrupt the time keeping of the guiro. In these songs, certain instruments act as cues for other instruments to start, revealing a temporal dependence that makes this method of codifying non-feasible. What did remain constant (the majority of the time) throughout these solos however, were the base instruments. This led me to another idea — extract the base lines for each genres by passing the songs through a low pass filter ( a mechanism that only allows low-frequency sounds ). However, the filtering resulted in non-ideal samples that still contained other instruments such as congas, drums, and guitars.

Having been seemingly stumped, I decided to look up other methods of analysis when I stumbled upon this paper. To give a brief summary instead of breaking down the music via instrumentation or finding a particular pattern, the researchers decided to instead sample thirty second segments from the songs from different sections of the song and used different classifying algorithms in order to best determine the musical genre of the sample fed in. A graphical summary is seen below

After incorporating my own processed data into the already existing Latin Music Database referenced in the paper and removing the genres that fall outside the scope of the class — namely Axé, Forró, Sertaneja, Gaúcha or Pagode.

Machine learning has many sub branches. The algorithms I ran during the fieldwork project fall under the supervised learning branch. Supervised learning originates from the idea that training this type of algorithm is like having a teacher supervise the whole process. The data I am feeding to my algorithms are songs paired with the correct genre classification . I first partitioned the processed data into two sets: a training set and test set. During the training portion, the algorithms will search for patterns in the training data set that correlate with the desired output. Afterwards, the learning algorithm will take in unseen inputs in the testing set and will determine which label the new inputs will be classified as based on the patterns it learning on the prior training data.

I implemented three Machine Learning Models : KNN, Decision Trees, and Naive Bayes and subsequently fed in sets composed of segments taken from the beginning, middle, and end of the song. A portion of the results as a percentage of confidence are shown below.

I tested the algorithms on a set of a hundred songs but decided to include the first five songs fed into the algorithm and their respective results.

Starting with the first song, Elegba rezo, we see that the confidence percentages are overall in the lower side when compared with the other four entries. This lower percentage could also be attributed by the fact that the training data had a low percentage of Haitian Folk music labeled pairs/songs. However a particularly interesting detail is that the predicted genre was Rumba. Although this is not the correct genre, I found it interesting that the algorithm was able to assign a genre that I would consider closer to the actual genre of Haitian Folk music. This is probably because of similar percussive patterns at a quicker tempo found in rumba and abakua. Further more, the vocals shared between the two music genres bear some similitude. Cadence, high amplitude -energy filled shouts with subsequent responses can be found more common in rumba/abakua than for example reggae. The heavy use of percussion instruments also probably distinguished it from other Afro-Cuban genres like son or salsa — since those have wind instrument dominant instrumentation (i.e the farting trumpets present in the aforementioned genres).

The subsequent three results appear to be relatively confident classification with most of the hovering around 90% confidence. This high confidence is again probably because of the high percentage of salsa and reggae songs included in the set.

The last result is one that really left me confused . With relatively high confidence, it assigned the genre of Chan Chan by the Buena Vista Social club to salsa. Although the vocal cadence bear some resemblance to those of the salsa genre, the song itself is much lower energy. Further more the song’s dominant instrument are the congas as well as the Cuban Lute which strums consistently throughout the background of the song. What may have confused the algorithms was the large portions of the songs that have solos. Both the Cuban lute as well as the trumpet take the “auditory stage” for around twenty to thirty second. A possible explanation for this erroneous assignment may be the method of sampling the segments of the songs. In the case of Chan Chan a trumpet solo occurs around the three minute ten second marks which is nearing the end thus causing the majority of the sample to be dominated by the trumpet.

If we include the rest of the hundred songs, my algorithms were able correctly classify the song genres approximately 68% percent of the time.

As you can see, my attempts at algorithmically classifying music genres was, if we’re being generous, average at best. Perhaps my accuracy may have improved if the dateset I acquired was perhaps a little bit larger, but I think even if I did succeed I wouldn’t be doing any of these genres justice. Sure a machine can sort out the music but what it overlooks is the rich history that underlies the notes of what we listen. My algorithm, nor any algorithm in the world for that matter, is capable of appreciating all the connections that exists between the genres and the stories that they tell. Thank you to all who have made this musical journey truly an unforgettable one.

Acknowledgments and references:

Silla, Carlos & Kaestner, Celso & Koerich, Alessandro. (2006). Automatic Genre Classification of Latin Music Using Ensemble of Classifiers.

Harrison, Onel. “Machine Learning Basics with the K-Nearest Neighbors Algorithm.” Medium, Towards Data Science, 14 July 2019, towardsdatascience.com/machine-learning-basics-with-the-k-nearest-neighbors-algorithm-6a6e71d01761.

“The Latin Music Database (LMD) — Carlos N. Silla Jr. (Carlos Silla) Homepage.” Google Sites, sites.google.com/site/carlossillajr/resources/the-latin-music-database-lmd.

Wilson, Aidan. “A Brief Introduction to Supervised Learning.” Medium, Towards Data Science, 1 Oct. 2019, towardsdatascience.com/a-brief-introduction-to-supervised-learning-54a3e3932590.