The technique takes a novel approach to hyperparameter optimization.

Training and optimizing deep learning models are among the most challenging aspects of any modern machine intelligence (MI) solution. In many scenarios, data scientists rapidly arrive at the correct set of algorithms for a specific problem, only to spend months trying to find the optimal version of the model. A few years ago, DeepMind published a research paper that proposes a new approach to training and optimizing deep learning models, known as population based training.

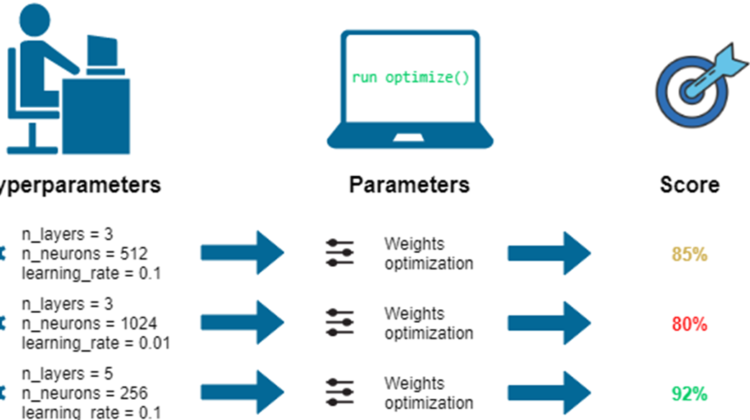

The optimization of traditional deep learning models focuses on minimizing their test error without drastically changing the core components of the model. One of the most important approaches to deep learning optimization centers on tuning elements that are orthogonal to the model itself, which are known as hyperparameters. Common hyperparameters include the number of hidden units and the learning rate, both of which can be tuned to improve the performance of a specific model.
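To make the distinction between parameters and hyperparameters concrete, here is a minimal sketch on a hypothetical one-weight toy model. Gradient descent fits the weight `w`, while the learning rate `lr` and the number of `steps` are hyperparameters set from outside the model. The toy loss `(w - 3)**2` and the function name `train` are illustrative assumptions, not anything taken from the paper.

```python
def train(lr: float, steps: int = 20, w0: float = 0.0) -> float:
    """Hypothetical toy model: gradient descent on the loss (w - 3)**2.

    w is the model parameter learned during training; lr and steps are
    hyperparameters chosen before training starts.
    """
    w = w0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)  # derivative of (w - 3)**2 with respect to w
        w -= lr * grad
    return (w - 3.0) ** 2  # final loss, a stand-in for test error


# Same model, same training code; only the hyperparameter changes.
for lr in (0.01, 0.1, 0.5, 1.1):
    print(f"lr={lr}: loss={train(lr):.6g}")
```

Sweeping `lr` this way shows that identical model and training code can converge quickly, crawl, or diverge depending only on the hyperparameter.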

Optimizing hyperparameters is a game of finding the right balance between the performance of a deep learning model and its computational cost. Algorithms such as stochastic gradient descent (SGD) and its variants sit at the center of deep learning optimization, but they remain sensitive to hyperparameters such as the learning rate, which becomes a major challenge in large-scale scenarios. There are two common approaches to hyperparameter optimization: random search and hand-tuning. In random search, a population of models is trained independently in parallel, and at the end of training the highest-performing model is selected. This typically means that only a small fraction of the population is trained with good hyperparameters while the rest is trained with bad ones, wasting computational resources.
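The random-search procedure described above can be sketched as follows, assuming a hypothetical one-weight toy model and a log-uniform prior over the learning rate (both illustrative choices). Each member is trained independently from start to finish, and only at the end is the best one kept.

```python
import random


def train(lr: float, steps: int = 20) -> float:
    """Hypothetical toy training run: gradient descent on (w - 3)**2 from w = 0.
    Returns the final loss as a stand-in for validation error."""
    w = 0.0
    for _ in range(steps):
        w -= lr * 2.0 * (w - 3.0)
    return (w - 3.0) ** 2


def random_search(population_size: int = 10, seed: int = 0):
    rng = random.Random(seed)
    # Sample every member's hyperparameters independently (log-uniform lr).
    configs = [{"lr": 10 ** rng.uniform(-3, 0)} for _ in range(population_size)]
    # Runs share nothing, so they could execute in parallel; a member that
    # drew a poor learning rate keeps training with it until the end.
    results = [(train(c["lr"]), c) for c in configs]
    # Only after every run has finished is the best-performing member kept.
    best_loss, best_config = min(results, key=lambda r: r[0])
    return best_loss, best_config, results


best_loss, best_config, results = random_search()
print(f"best lr={best_config['lr']:.4f}, loss={best_loss:.2e}")
```

Every member consumes a full training budget regardless of how early its poor hyperparameters could have been detected, which is the waste the paragraph above describes.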

The hand-tuning approach is based on sequential optimization. Sequential optimization requires one or more training runs to be completed (potentially with early stopping), after which new hyperparameters are selected and the model is retrained from scratch with them. This process is inherently sequential and leads to long hyperparameter optimization times, though it uses minimal computational resources.
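A sequential search can be sketched in the same hypothetical toy setting. The doubling rule below is an illustrative stand-in for the human judgment (or model-based optimizer) that picks the next hyperparameters; the point is that every trial waits for the previous one to finish and restarts training from scratch.

```python
def train(lr: float, steps: int = 20) -> float:
    """Hypothetical toy run, always from scratch: gradient descent on (w - 3)**2."""
    w = 0.0
    for _ in range(steps):
        w -= lr * 2.0 * (w - 3.0)
    return (w - 3.0) ** 2


def sequential_search(lr: float = 0.001, max_trials: int = 20):
    """Run one trial at a time and pick the next lr from the last result.

    The 'double while it helps' rule is a crude, assumed stand-in for a
    human tuner; only one training run is ever active at a time.
    """
    history = []
    best_lr, best_loss = None, float("inf")
    for _ in range(max_trials):
        loss = train(lr)  # a full training run, restarted from scratch
        history.append((lr, loss))
        if loss < best_loss:
            best_lr, best_loss = lr, loss
            lr *= 2.0  # it helped: try a more aggressive learning rate next
        else:
            break  # no improvement: stop tuning
    return best_lr, best_loss, history


best_lr, best_loss, history = sequential_search()
print(f"{len(history)} sequential trials, best lr={best_lr}, loss={best_loss:.2e}")
```

Only one run is in flight at any moment, so compute usage is minimal, but the total wall-clock time grows with the number of trials.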

As you can see, both random search and hand-tuning have advantages and limitations. In its paper, the DeepMind team advocates for a new optimization technique that tries to combine the best of both approaches.

Introducing Population Based Training

Population based training (PBT) uses an approach similar to random search, randomly sampling hyperparameters and weight initializations. Unlike random search, however, PBT runs each training process asynchronously and evaluates its performance periodically. If a model in the population is under-performing, it exploits the rest of the population by replacing itself with a better-performing model, copying that model's weights and hyperparameters. At the same time, PBT explores new hyperparameters by modifying the better model's hyperparameters before training continues.
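The exploit and explore steps can be sketched with a small synchronous simulation. This is an illustration under stated assumptions, not DeepMind's implementation: the toy objective, the 25% truncation (each bottom member copies a random top member), the 0.8/1.2 perturbation factors, and all function names are illustrative choices, and members are trained in lockstep rounds instead of asynchronously.

```python
import random
from dataclasses import dataclass


@dataclass
class Member:
    w: float                    # model weight (what training learns)
    lr: float                   # hyperparameter tuned online by PBT
    loss: float = float("inf")  # most recent evaluation


def loss_fn(w: float) -> float:
    # Hypothetical toy objective standing in for validation error.
    return (w - 3.0) ** 2


def train_steps(m: Member, steps: int) -> None:
    # Continue from the member's current weight; nothing restarts from scratch.
    for _ in range(steps):
        m.w -= m.lr * 2.0 * (m.w - 3.0)
    m.loss = loss_fn(m.w)


def exploit_and_explore(population, rng, frac=0.25):
    ranked = sorted(population, key=lambda m: m.loss)
    n = max(1, int(len(ranked) * frac))
    top, bottom = ranked[:n], ranked[-n:]
    for m in bottom:
        donor = rng.choice(top)
        # Exploit: copy the better member's weight and hyperparameter.
        m.w, m.lr = donor.w, donor.lr
        # Explore: randomly perturb the inherited hyperparameter (assumed factors).
        m.lr *= rng.choice((0.8, 1.2))
        m.loss = loss_fn(m.w)


def pbt(pop_size=8, rounds=10, steps_per_round=5, seed=0):
    rng = random.Random(seed)
    # Random initial weights and hyperparameters, as in random search.
    population = [Member(w=rng.uniform(-1.0, 1.0), lr=10 ** rng.uniform(-3, -1))
                  for _ in range(pop_size)]
    history = []  # best member's lr after each round
    for _ in range(rounds):
        for m in population:
            train_steps(m, steps_per_round)  # independent; could run in parallel
        history.append(min(population, key=lambda m: m.loss).lr)
        exploit_and_explore(population, rng)
    return min(population, key=lambda m: m.loss), history


best, history = pbt()
print(f"best loss={best.loss:.2e}, lr schedule of best member: {history}")
```

Because members keep training from inherited weights instead of restarting, the learning rate effectively follows a schedule discovered during training; `history` records the best member's learning rate after each round.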

The PBT process allows hyperparameters to be optimized online and focuses computational resources on the regions of hyperparameter and weight space that have the best chance of producing good results. The result is a hyperparameter tuning method that, while simple, leads to faster learning, lower computational cost, and often better solutions.

In the research paper, the DeepMind team applies PBT to different scenarios, such as deep reinforcement learning and machine translation. The initial results were encouraging, with PBT improving on the traditional tuning techniques.

Alongside the research paper, DeepMind open sourced an initial implementation on GitHub. Since then, PBT has been adopted by many hyperparameter optimization toolkits, such as Ray Tune.