Get your ML app up and running in a matter of minutes

Streamlit has proved to be an outstanding way for developers to share their machine learning models instantly without having to worry too much about the underlying infrastructure.

In the following paragraphs, I’ll walk through the code I built on top of a TensorFlow model, the changes you have to make, and how I deployed my neural style transfer application with Streamlit Sharing.

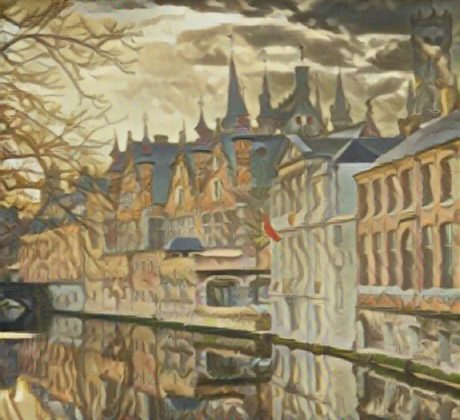

And yes, this is Angelina Jolie as if painted by Gustav Klimt.

I know you’ve been dying to see the end result and without further ado:

Go ahead and try it out for yourself 🙂

Like any machine learning developer, I started by writing the code. Here’s how I envisioned my website from the beginning: my TensorFlow model sits in the background, ready to make predictions, and the user enters two image URLs, one for the content image and one for the style image. The new style-transferred image is then displayed.

All the code is available on GitHub. The load_image function is especially important because it receives the URL and returns the image as a tensor.
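Here is a minimal sketch of what such a loader can look like. It is not the exact code from the repo: the helper name bytes_to_tensor, the max_dim parameter, the rescaling step and the added batch dimension are my assumptions, modelled on the usual TensorFlow image-loading pattern.

```python
import urllib.request

import tensorflow as tf


def bytes_to_tensor(raw_bytes, max_dim=512):
    """Decode encoded image bytes into a float32 tensor of shape (1, H, W, 3)."""
    # dtype=tf.float32 scales pixel values into [0, 1];
    # expand_animations=False keeps the result 3-D even for GIFs.
    img = tf.io.decode_image(
        raw_bytes, channels=3, dtype=tf.float32, expand_animations=False
    )
    # Rescale so the longest side equals max_dim (assumed limit, to save memory).
    shape = tf.cast(tf.shape(img)[:-1], tf.float32)
    scale = max_dim / tf.reduce_max(shape)
    new_shape = tf.cast(shape * scale, tf.int32)
    img = tf.image.resize(img, new_shape)
    # Add a leading batch dimension, which most models expect.
    return img[tf.newaxis, ...]


def load_image(url, max_dim=512):
    """Download the image behind `url` and return it as a tensor."""
    with urllib.request.urlopen(url) as response:
        raw_bytes = response.read()
    return bytes_to_tensor(raw_bytes, max_dim)
```

Splitting the download from the decoding keeps the decoding step easy to try out on local bytes without a network connection.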

As I was deploying my app, I realised that it was running out of resources. The reason was that I was keeping memory busy all the time. The solution? Caching!

If you’re unfamiliar with the funny syntax at the top, feel free to dive into Python decorators.

Streamlit provides a caching mechanism that allows your app to stay performant even when loading data from the web, manipulating large datasets, or performing expensive computations. This is done with the @st.cache decorator.
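To make the idea less mysterious, here is a hand-rolled caching decorator in plain Python. It only illustrates the concept and is not how Streamlit implements its cache; the names simple_cache and load_model, and the "my-style-model" handle, are hypothetical.

```python
import functools


def simple_cache(func):
    """Illustrative memoizing decorator (not Streamlit's implementation)."""
    store = {}  # maps argument tuples to previously computed results

    @functools.wraps(func)
    def wrapper(*args):
        if args not in store:
            # First call with these arguments: do the expensive work once.
            store[args] = func(*args)
        return store[args]

    return wrapper


load_count = 0  # counts how often the expensive body actually runs


@simple_cache
def load_model(handle):
    """Stand-in for an expensive model load."""
    global load_count
    load_count += 1
    return {"handle": handle}


load_model("my-style-model")
load_model("my-style-model")  # served from the cache, body is not re-run
print(load_count)  # prints 1
```

In the app itself, the same effect comes from placing @st.cache above the function that loads the model, so that reruns of the script reuse the loaded model instead of loading it again.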

The text_input prompts collect the user’s input.

And this is all it takes to display an image in Streamlit. Our model does all the work in the background, and when the user presses the button, they get a brand-new image.

As simple as it gets. Here’s a quick walkthrough:

- Create a GitHub repo and give Streamlit access to it.

- Have a requirements.txt. Why?

Because it tells Streamlit which packages, along with their versions, your app runs on, and it provides stability. Don’t make the same mistake I did: I used pip freeze and copied every package installed with pip into my requirements.txt. There is no need to do that, and it will overwhelm Streamlit. List only the packages your app actually imports.

In my case, it’s as simple as the above gist suggests.
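If you have already run pip freeze, a small script can trim its output down to the packages you import directly. This is only a sketch: trim_requirements is a hypothetical helper, and the version numbers in the sample are placeholders, not the ones I used.

```python
def trim_requirements(freeze_output, direct_deps):
    """Keep only the pinned lines for packages the app imports directly."""
    wanted = {name.lower() for name in direct_deps}
    kept = []
    for line in freeze_output.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # "package==1.2.3" -> "package"
        name = line.split("==")[0].strip().lower()
        if name in wanted:
            kept.append(line)
    return "\n".join(kept)


# Placeholder pip freeze output; the versions are made up for illustration.
frozen = "absl-py==1.2.3\nstreamlit==1.2.3\ntensorflow==1.2.3\nwheel==1.2.3"
print(trim_requirements(frozen, ["streamlit", "tensorflow"]))
```

The result is what goes into requirements.txt; pip resolves the transitive dependencies on its own.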

Also, I got a warning that matplotlib was not supported. You can resolve this by checking versions, installing matplotlib using conda, creating a packages.txt file that lists the system-level packages the backend needs, or, as in my case, never using matplotlib in Streamlit applications at all. Streamlit offers its own plotting functions for most of what matplotlib provides.

Don’t reinvent the wheel! Remember, your goal is to run efficient code and deploy ML models to the world.

- Limit yourself to as few packages and as little CPU and memory consumption as you can.

- Don’t hold massive datasets or TensorFlow Hub modules in memory.

Once your request is granted, you are one button away from deploying the app. I think it’s pretty straightforward, right?

Note: Each app has 1 CPU, 800 MB of RAM, and 800 MB of storage.

By definition, Neural Style Transfer algorithms are characterized by their use of deep neural networks for the sake of image transformation. This is implemented by optimizing the output image to match the content statistics of the content image and the style statistics of the style reference image. These statistics are extracted from the images using a convolutional network.

I’m here to propose two versions so you can get a more intuitive grip on the method. Okay, here goes the simpler version:

- Neural style transfer is an optimization technique used to take two images — a content image and a style reference image (such as an artwork by a famous painter) — and blend them together so the output image looks like the content image, but “painted” in the style of the style reference image.

And a slightly more complex version goes like this:

- Neural Style Transfer employs a pretrained convolutional neural network (CNN) to transfer styles from a given image to another. This is done by defining a loss function that tries to minimise the differences between a content image, a style image and a generated image.
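For reference, that loss is usually written along the following lines, following the formulation of Gatys et al. The notation is mine, and the TensorFlow model used in the app may weight or normalise the terms differently.

$$
\mathcal{L}_{total} = \alpha \, \mathcal{L}_{content} + \beta \, \mathcal{L}_{style}
$$

$$
\mathcal{L}_{content} = \frac{1}{2} \sum_{i,j} \left( F^{l}_{ij} - P^{l}_{ij} \right)^{2}
$$

$$
G^{l}_{ij} = \sum_{k} F^{l}_{ik} F^{l}_{jk}, \qquad
\mathcal{L}_{style} = \sum_{l} w_{l} \, \frac{1}{4 N_{l}^{2} M_{l}^{2}} \sum_{i,j} \left( G^{l}_{ij} - A^{l}_{ij} \right)^{2}
$$

Here $F^{l}$ and $P^{l}$ are the CNN feature maps of the generated image and the content image at layer $l$, $G^{l}$ and $A^{l}$ are the Gram matrices of the generated image and the style image, $N_{l}$ is the number of filters in layer $l$, $M_{l}$ is the size of each feature map, and $\alpha$, $\beta$ and $w_{l}$ are weights you choose.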

For those of you whose curiosity is still not satisfied, I encourage you to read about it in the following pages:

As machine learning engineers, our goal is satisfied the day the fine-tuned model is deployed to the public. Streamlit makes that super-easy and I encourage you to take advantage of this great package. The feature is still in beta, so if you want to try it out, get in touch with the developers on the Streamlit website.

Happy coding and I wish to see your apps deployed as soon as possible!!!

Feel free to connect with me or leave a comment. All suggestions, ideas and criticism are welcome.

LinkedIn, Medium, GitHub, Gmail
