Follow these steps to extract important information from a driver’s license.

I’ve collected the data from open online sources; in our case, the target was the US driver’s license. I’ve compiled all the images into one folder and got them ready for the data preprocessing step.

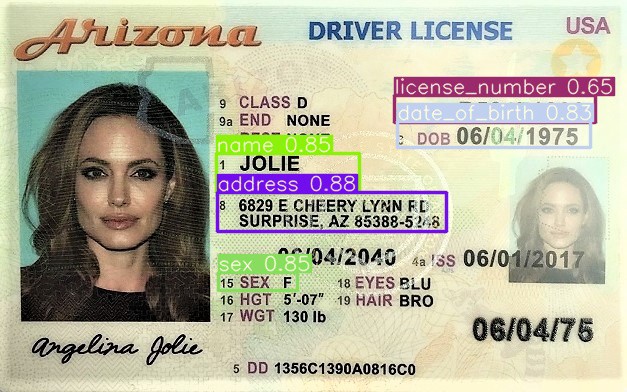

The collected data must be annotated (labeled) with bounding boxes around every region we want to detect on the driver’s license, e.g. name, address, DL number, gender, and date of birth. We therefore label every image with these regions of interest. Each distinct label type is one class, so in this case there are 5 classes.
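Whichever tool you label with, a YOLO-format export stores one .txt file per image with one line per box: class_id x_center y_center width height, where the four coordinates are normalized by the image width and height. The sketch below converts a pixel-space box into such a line. The class order in CLASS_NAMES is an assumption (the names come from the detection log at the end of this post; use the order from your own export), and the example box is made up.

```python
# Hypothetical class order; check the names list in your own data YAML.
CLASS_NAMES = ["address", "date_of_birth", "license_number", "name", "sex"]


def to_yolo_line(class_name, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    YOLO labels store: class_id x_center y_center width height,
    with the four coordinates normalized to the range [0, 1].
    """
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} lies outside a {img_w}x{img_h} image")
    class_id = CLASS_NAMES.index(class_name)
    x_c = (x_min + x_max) / 2 / img_w  # box center, as a fraction of width
    y_c = (y_min + y_max) / 2 / img_h  # box center, as a fraction of height
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # A hypothetical "name" box on a 640x400 license scan.
    print(to_yolo_line("name", (200, 100, 440, 140), 640, 400))
    # → 3 0.500000 0.300000 0.375000 0.100000
```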

I’ve used Roboflow for labeling; LabelImg can be used for the same purpose.

My dataset is small: it contains one driver’s license from each of the 50 states. It is small because getting real licenses wasn’t practical.
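With only 50 images, it is worth holding some back so that validation scores mean something. Roboflow can split the data for you at export time; if you label with LabelImg instead, a deterministic split like the sketch below is one option. The 70/20/10 ratios, the seed, and the file names are assumptions for illustration, not what was used for this post.

```python
import random


def split_dataset(image_names, train=0.7, valid=0.2, seed=0):
    """Deterministically split image file names into train/valid/test lists.

    Whatever remains after the train and valid fractions goes to test.
    """
    if not 0 < train + valid <= 1:
        raise ValueError("train + valid must be in (0, 1]")
    names = sorted(image_names)         # sort first so input order doesn't matter
    random.Random(seed).shuffle(names)  # seeded RNG gives the same split every run
    n_train = round(len(names) * train)
    n_valid = round(len(names) * valid)
    return (
        names[:n_train],
        names[n_train:n_train + n_valid],
        names[n_train + n_valid:],
    )


if __name__ == "__main__":
    # Hypothetical file names: one license image per state.
    files = [f"state_{i:02d}.jpg" for i in range(50)]
    tr, va, te = split_dataset(files)
    print(len(tr), len(va), len(te))  # → 35 10 5
```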

State-of-the-art models are available for all sorts of deep learning tasks; which one to use comes down to personal preference and the use case. You can check the models offered by PyTorch here: https://pytorch.org/vision/0.8/models.html

I personally like YOLO for object detection problems. With the evolution of the YOLO architecture, the possibilities are endless.

Pretrained checkpoints are used as the starting point for training the architecture on the custom dataset. This is called transfer learning: a machine learning approach that stores knowledge gained while solving one problem and applies it to a different but related problem. For example, knowledge gained while learning to recognize cars could apply when trying to recognize trucks.
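In practice, transfer means copying every pretrained tensor whose name and shape still fit the new network, and leaving the rest freshly initialized. The part that cannot be copied is the final detection layer: in YOLOv5 each output convolution predicts, per anchor, 4 box values, 1 objectness score, and one score per class, so with 3 anchors per scale it has 3 × (80 + 5) = 255 output channels for COCO but 3 × (5 + 5) = 30 for our five fields. The sketch below illustrates this with plain shape tuples instead of real tensors; the layer names and shapes are hypothetical. YOLOv5’s train.py performs a comparable name-and-shape filtering step before loading a checkpoint, but this is not its code.

```python
def detect_out_channels(num_classes, anchors_per_scale=3):
    """Channels of one YOLOv5 output conv: per anchor, 4 box values + 1 objectness + class scores."""
    return anchors_per_scale * (num_classes + 5)


def transferable(pretrained, target):
    """Names of parameters that can be copied from `pretrained` into `target`.

    Both dicts map a parameter name to its tensor shape; a parameter transfers
    only if it exists in both models with exactly the same shape.
    """
    return sorted(name for name, shape in target.items() if pretrained.get(name) == shape)


if __name__ == "__main__":
    # Hypothetical layer names and shapes (out_channels, in_channels, kernel, kernel).
    coco_model = {
        "backbone.conv1.weight": (64, 3, 3, 3),
        "head.detect.weight": (detect_out_channels(80), 256, 1, 1),
    }
    dl_model = {
        "backbone.conv1.weight": (64, 3, 3, 3),
        "head.detect.weight": (detect_out_channels(5), 256, 1, 1),
    }
    print(detect_out_channels(80), detect_out_channels(5))  # → 255 30
    print(transferable(coco_model, dl_model))  # → ['backbone.conv1.weight']
```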

Select a pretrained model (weights) to start training from. Here we select YOLOv5l, one of the larger models, to get better precision scores at the cost of slower training and inference.

You can simply clone the yolov5 project:

    $ git clone https://github.com/ultralytics/yolov5  # clone repo
    $ cd yolov5
    $ pip install -r requirements.txt  # install dependencies

Note: If you have a GPU, first follow PyTorch’s installation steps to set up a CUDA-enabled build, then follow the steps above.
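Before training, it is worth confirming that PyTorch actually sees the GPU. A minimal check is sketched below. It assumes nothing beyond PyTorch itself, which may or may not be installed yet. The inference log later in this post shows YOLOv5 reporting the same kind of information (CUDA:0 with the GPU name).

```python
def describe_device():
    """Return a short description of the device PyTorch would train on."""
    try:
        import torch
    except ImportError:
        return "cpu (PyTorch is not installed)"
    if torch.cuda.is_available():
        # Index 0 is the first visible GPU, the one YOLOv5 uses with --device 0.
        return f"cuda:0 ({torch.cuda.get_device_name(0)}), torch {torch.__version__}"
    return f"cpu (torch {torch.__version__}: no CUDA build or no visible GPU)"


if __name__ == "__main__":
    print(describe_device())
```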

After downloading the weights, we have to edit the number of classes (nc) in the model’s .YAML configuration file for the same weights. For example, since we downloaded the yolov5l weights, we edit nc in yolov5l.yaml from the default (80) to our use case (5): name, address, DL number, gender, and date of birth.
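The edit itself is a one-line change to the top-level nc key. If you prefer to script it, for example to leave the stock models/yolov5l.yaml untouched and write a modified copy, a standard-library sketch could look like this. The output file name yolov5l_dl.yaml is a hypothetical choice; if you use a copy, pass that file to --cfg instead.

```python
import re
from pathlib import Path


def set_num_classes(yaml_text, nc):
    """Replace the top-level `nc:` value in a YOLOv5 model YAML, keeping any trailing comment."""
    new_text, count = re.subn(r"(?m)^nc:[ \t]*\d+", f"nc: {nc}", yaml_text, count=1)
    if count == 0:
        raise ValueError("no top-level 'nc:' key found")
    return new_text


def write_custom_cfg(src="models/yolov5l.yaml", dst="models/yolov5l_dl.yaml", nc=5):
    """Write a copy of the model config with `nc` changed (dst is a hypothetical name)."""
    text = Path(src).read_text()
    Path(dst).write_text(set_num_classes(text, nc))
    return dst


if __name__ == "__main__":
    print(set_num_classes("nc: 80  # number of classes\n", 5), end="")
    # → nc: 5  # number of classes
```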

When we download our dataset from Roboflow and extract it into the yolov5 folder, we get another .YAML file that contains the locations of the train and test data along with the class names we have given them.
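For reference, a YOLOv5 dataset YAML needs little more than the image folders (train, val), the class count (nc), and the class names (names). The sketch below builds one by hand. The train/images and valid/images paths and the class order are assumptions; prefer the file Roboflow generates, and keep its nc consistent with the model YAML.

```python
def data_yaml(train_dir, val_dir, names):
    """Build the text of a YOLOv5 dataset YAML: train/val paths, class count, class names."""
    quoted = ", ".join(f"'{n}'" for n in names)
    return (
        f"train: {train_dir}\n"
        f"val: {val_dir}\n"
        f"\n"
        f"nc: {len(names)}\n"
        f"names: [{quoted}]\n"
    )


if __name__ == "__main__":
    # Hypothetical folder layout and class order.
    names = ["address", "date_of_birth", "license_number", "name", "sex"]
    print(data_yaml("train/images", "valid/images", names))
```

Saving the printed text as US_DL.yaml would match the --data argument used in the training command below.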

The folder structure should look like this:

Note: Don’t forget to export the dataset in YOLO format from the Roboflow web app.
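YOLOv5 finds each image’s label file by path convention rather than by a manifest: it swaps the last images directory in the path for labels and the file extension for .txt. A small sketch of that mapping, useful for spotting images whose labels went missing during export, is below. The function names are illustrative and not part of YOLOv5.

```python
import os


def label_path(image_path):
    """Map .../images/foo.jpg to .../labels/foo.txt (the last 'images' dir is swapped)."""
    sa = os.sep + "images" + os.sep
    sb = os.sep + "labels" + os.sep
    head, sep, tail = image_path.rpartition(sa)
    if not sep:
        raise ValueError(f"no {sa!r} directory in {image_path!r}")
    return head + sb + os.path.splitext(tail)[0] + ".txt"


def missing_labels(image_dir):
    """List images in `image_dir` that have no matching label file."""
    exts = {".jpg", ".jpeg", ".png", ".bmp"}
    image_dir = os.path.abspath(image_dir)  # absolute path guarantees a leading separator
    missing = []
    for name in sorted(os.listdir(image_dir)):
        path = os.path.join(image_dir, name)
        if os.path.splitext(name)[1].lower() in exts and not os.path.isfile(label_path(path)):
            missing.append(path)
    return missing


if __name__ == "__main__":
    for split in ("train/images", "valid/images"):  # hypothetical split folders
        if os.path.isdir(split):
            print(split, missing_labels(split))
```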

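Train a YOLOv5l model on our dataset by specifying the dataset, batch size, image size, and pretrained weights with --weights yolov5l.pt (recommended); training from randomly initialized weights with --weights '' is also possible but not recommended. Pretrained weights are auto-downloaded from the latest YOLOv5 release.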


    # Train YOLOv5l on DL for 300 epochs
    $ python train.py --img 640 --batch 4 --epochs 300 --data US_DL.yaml --cfg yolov5l.yaml --weights yolov5l.pt
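If you plan to repeat runs (say, to compare batch or image sizes), it can help to build the command in a script. The sketch below only reproduces the flags used above and launches train.py through subprocess. The helper names are illustrative, and the sketch assumes it is run from inside the yolov5 folder.

```python
import subprocess
import sys


def build_train_cmd(data="US_DL.yaml", cfg="yolov5l.yaml", weights="yolov5l.pt",
                    img=640, batch=4, epochs=300):
    """Return the argv list for the train.py call shown above."""
    return [
        sys.executable, "train.py",
        "--img", str(img),
        "--batch", str(batch),
        "--epochs", str(epochs),
        "--data", data,
        "--cfg", cfg,
        "--weights", weights,
    ]


def run_training(**kwargs):
    """Launch training; raises CalledProcessError if train.py exits with an error."""
    subprocess.run(build_train_cmd(**kwargs), check=True)


if __name__ == "__main__":
    print(" ".join(build_train_cmd()[1:]))
    # → train.py --img 640 --batch 4 --epochs 300 --data US_DL.yaml --cfg yolov5l.yaml --weights yolov5l.pt
```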

To get better insights and live feedback on training over the web, you can install Weights & Biases (wandb.ai) with this command:

    $ pip install wandb

The complete W&B logs for this run can be found here: https://wandb.ai/thinkersloop/YOLOv5/runs/2ahw81wk/overview?workspace=user-thinkersloop

Results:

The best-trained model is saved in the runs/train/exp folder, as weights/best.pt.

Now, let’s test it out. detect.py runs inference on a variety of sources.

    $ python detect.py --weights runs/train/exp/weights/best.pt --img 640 --conf 0.5 --source test/images

The resulting images are stored in the runs/detect/exp folder.
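For the end goal of reading each field, the boxes are more useful than the annotated images. detect.py’s --save-txt and --save-conf options (save_txt and save_conf in the Namespace printed in the log below) write one YOLO-format line per detection under the run’s labels folder, with the confidence appended as a sixth value. The sketch below turns such lines into pixel boxes and keeps only the most confident box per field. It assumes each license has exactly one of each field, and the class order is hypothetical.

```python
CLASS_NAMES = ["address", "date_of_birth", "license_number", "name", "sex"]  # hypothetical order


def parse_detections(lines, img_w, img_h):
    """Turn YOLO txt lines (cls xc yc w h [conf]) into pixel boxes.

    Returns a list of (class_name, conf, (x_min, y_min, x_max, y_max)).
    Lines without a confidence column are given conf = 1.0.
    """
    dets = []
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        cls = int(parts[0])
        xc, yc, w, h = (float(v) for v in parts[1:5])
        conf = float(parts[5]) if len(parts) > 5 else 1.0
        box = (
            round((xc - w / 2) * img_w),
            round((yc - h / 2) * img_h),
            round((xc + w / 2) * img_w),
            round((yc + h / 2) * img_h),
        )
        dets.append((CLASS_NAMES[cls], conf, box))
    return dets


def best_per_field(dets):
    """Keep only the highest-confidence detection for each class name."""
    best = {}
    for name, conf, box in dets:
        if name not in best or conf > best[name][0]:
            best[name] = (conf, box)
    return {name: box for name, (conf, box) in best.items()}


if __name__ == "__main__":
    # Made-up detections for a 640x400 image: two "name" boxes and one "address" box.
    lines = ["3 0.5 0.3 0.375 0.1 0.91", "3 0.5 0.8 0.375 0.1 0.62", "0 0.5 0.5 0.5 0.2 0.88"]
    print(best_per_field(parse_detections(lines, 640, 400)))
```

Each resulting pixel box can then be cropped from the original image for text extraction.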

    (YOLO-v5) C:\Users\luckie\Projects\DreamDL data extract\YOLO-v5\yolov5>python detect.py --weights runs/train/exp/weights/best.pt --img 640 --conf 0.5 --source test/images
    Namespace(agnostic_nms=False, augment=False, classes=None, conf_thres=0.5, device='', exist_ok=False, img_size=640, iou_thres=0.45, name='exp', project='runs/detect', save_conf=False, save_txt=False, source='test/images', update=False, view_img=False, weights=['runs/train/exp/weights/best.pt'])
    YOLOv5 torch 1.7.1+cu101 CUDA:0 (GeForce RTX 2070 SUPER, 8192.0MB)
    Fusing layers...
    Model Summary: 392 layers, 46622106 parameters, 0 gradients, 114.2 GFLOPS
    image 1/2 C:\Users\luckie\Projects\DreamDL data extract\YOLO-v5\yolov5\test\images\test1.jpg: 416x640 1 addresss, 1 date_of_births, 1 license_numbers, 1 names, 1 sexs, Done. (0.050s)
    image 2/2 C:\Users\luckie\Projects\DreamDL data extract\YOLO-v5\yolov5\test\images\Alabama-s_jpg.rf.fa47b8af18b6c8263fa5f4c9b7ae760f.jpg: 448x640 1 addresss, 1 date_of_births, 1 license_numbers, 1 names, 1 sexs, Done. (0.048s)
    Results saved to runs\detect\exp
    Done. (0.136s)

Congratulations if you have successfully completed the above steps. Cheers!