…And Capture Concepts In User Input

A conversational interface is an invitation you extend to the user to input their data in an unstructured and conversational manner.

It is imperative that the digital assistant can take unstructured input from the user and, ideally, structure it correctly on the first pass.

The less ideal situation is one where the chatbot prompts for information the user has already volunteered.

This forces the user to enter data in a structure the chatbot accepts, and that structure is not visible to the user.

The conversation on the left is a classic example: on the first pass, the chatbot detects only the user's basic intent.

The entities are disregarded and then asked for again, one at a time.
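To make the cost of intent-only detection concrete, here is a minimal Python sketch of slot filling for a hypothetical travel-booking intent. The slot names, prompts and the `follow_up_prompts` helper are illustrative assumptions, not any platform's API. If the user says "I want to travel from London to Paris" and only the intent is detected, nothing is extracted and every slot is prompted for; if the cities are captured on the first pass, only the date is still missing.

```python
from typing import Dict, List

# Hypothetical required slots for a travel-booking intent.
REQUIRED_SLOTS = ["from_city", "to_city", "date"]

PROMPTS = {
    "from_city": "Where are you travelling from?",
    "to_city": "Where are you travelling to?",
    "date": "On what date do you want to travel?",
}


def follow_up_prompts(extracted: Dict[str, str]) -> List[str]:
    """Return one prompt for each required slot that is still empty, in order."""
    return [PROMPTS[slot] for slot in REQUIRED_SLOTS if slot not in extracted]


# Utterance: "I want to travel from London to Paris".
# Intent-only detection: the entities are ignored, so every slot is asked for again.
intent_only = follow_up_prompts({})

# First-pass extraction: only the slot the user did not volunteer is requested.
first_pass = follow_up_prompts({"from_city": "London", "to_city": "Paris"})

print(len(intent_only))  # 3
print(first_pass)        # ['On what date do you want to travel?']
```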

The way to approach this is to handle it as we do in human-to-human conversation.

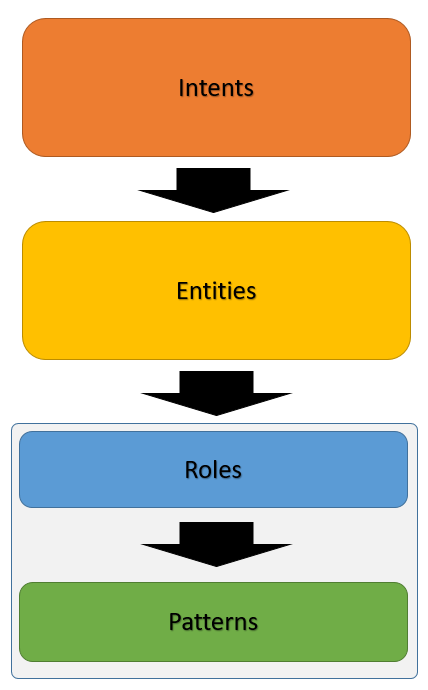

City is an entity, but there are actually two types of city: the from (departure) city and the to (destination) city. Hence the City entity has two roles. These two roles are arranged in a certain pattern that includes the date and possibly the mode of travel.
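A roles-aware result for an utterance such as "Book a train from London to Paris on Friday" might look like the structure below. This is a sketch under assumptions: the `EntityMention` class, the `Date` and `TravelMode` entities and the `From`/`To` role names are hypothetical, and the annotation is written by hand rather than produced by a model. It shows why roles matter: looking up `City` alone returns two values, while looking up a role returns exactly one.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class EntityMention:
    entity: str                 # entity type, e.g. "City"
    text: str                   # the span taken from the utterance
    role: Optional[str] = None  # hypothetical role within the entity, e.g. "From" or "To"


# Hand-written annotation of "Book a train from London to Paris on Friday".
mentions = [
    EntityMention("TravelMode", "train"),
    EntityMention("City", "London", role="From"),
    EntityMention("City", "Paris", role="To"),
    EntityMention("Date", "Friday"),
]


def find(mentions: List[EntityMention], entity: str, role: Optional[str] = None) -> List[str]:
    """Return the text of every mention of `entity`, optionally filtered by role."""
    return [m.text for m in mentions
            if m.entity == entity and (role is None or m.role == role)]


print(find(mentions, "City"))          # ['London', 'Paris'] (which one is the departure city?)
print(find(mentions, "City", "From"))  # ['London']
print(find(mentions, "City", "To"))    # ['Paris']
```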

This is very close to how we as humans extract entities: we detect the patterns and identify the roles.

The basic premise is to create roles for an entity, and then establish patterns in which those roles might be used.

These patterns then serve as a guide for the Conversational AI platform to detect patterns in user utterances and, subsequently, to extract useful conversational data.
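As a rough illustration of how a pattern can guide extraction, the sketch below compiles a template with `{Entity}` and `{Entity:Role}` placeholders into a regular expression and reads the roles out of a matching utterance. The placeholder notation, `compile_pattern` and `match_pattern` are assumptions for illustration; a Conversational AI platform would typically combine patterns with trained models rather than rely on exact regex matching.

```python
import re
from typing import Dict, Optional, Tuple

# Placeholder notation assumed for this sketch: {Entity} or {Entity:Role}.
PLACEHOLDER = re.compile(r"\{(\w+)(?::(\w+))?\}")


def compile_pattern(template: str) -> re.Pattern:
    """Compile a template into a case-insensitive regex with one named group per placeholder."""
    parts = []
    pos = 0
    for m in PLACEHOLDER.finditer(template):
        parts.append(re.escape(template[pos:m.start()]))  # literal text between placeholders
        entity, role = m.group(1), m.group(2)
        group = f"{entity}__{role}" if role else entity   # e.g. "City__From"
        parts.append(f"(?P<{group}>.+?)")                  # non-greedy capture of the entity value
        pos = m.end()
    parts.append(re.escape(template[pos:]))
    return re.compile("^" + "".join(parts) + "$", re.IGNORECASE)


def match_pattern(template: str, utterance: str) -> Optional[Dict[Tuple[str, Optional[str]], str]]:
    """Return {(entity, role): text} if the utterance fits the template, else None."""
    m = compile_pattern(template).match(utterance.strip())
    if m is None:
        return None
    result = {}
    for group, text in m.groupdict().items():
        entity, _, role = group.partition("__")
        result[(entity, role or None)] = text
    return result


template = "book a {TravelMode} from {City:From} to {City:To} on {Date}"
found = match_pattern(template, "Book a train from London to Paris on Friday")
print(found[("City", "From")])  # London
print(found[("City", "To")])    # Paris
print(match_pattern(template, "What is the weather in Paris?"))  # None
```

In this sketch a template such as `from {City} to {City}` fails to compile, because the two role-less placeholders produce the same group name. That collision is a small-scale version of the ambiguity that roles are meant to resolve.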

Let’s have a look at how Roles and Entity Patterns can be implemented by using a simple banking example project.

Different roles can therefore be seen as subsets, or types, of the entity Cities.

Multiple roles can be added to an entity.

In this way, contextual information is maintained for the entity. We are also going to combine the roles defined for the entity with a pattern.
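The sketch below shows one hypothetical way to keep roles and patterns consistent: each entity carries its own set of roles, further roles can be added, and a pattern is checked so that every `{Entity:Role}` placeholder refers to a declared role. The `ENTITIES` dictionary, the `Stopover` role and both helper functions are illustrative assumptions, not the example project's actual configuration.

```python
import re
from typing import Dict, List, Set

# Hypothetical entity definitions: each entity maps to the roles declared for it.
ENTITIES: Dict[str, Set[str]] = {
    "City": {"From", "To"},
    "Date": set(),
    "TravelMode": set(),
}

# Assumed placeholder notation: {Entity} or {Entity:Role}.
PLACEHOLDER = re.compile(r"\{(\w+)(?::(\w+))?\}")


def add_role(entities: Dict[str, Set[str]], entity: str, role: str) -> None:
    """Declare an additional role on an existing entity."""
    entities[entity].add(role)


def undefined_references(template: str, entities: Dict[str, Set[str]]) -> List[str]:
    """List placeholders in a pattern that use an unknown entity or an undeclared role."""
    problems = []
    for entity, role in PLACEHOLDER.findall(template):  # role is "" when absent
        if entity not in entities:
            problems.append(f"unknown entity {entity!r}")
        elif role and role not in entities[entity]:
            problems.append(f"role {role!r} is not declared on {entity!r}")
    return problems


template = "fly from {City:From} to {City:To} via {City:Stopover} on {Date}"
print(undefined_references(template, ENTITIES))  # one problem: 'Stopover' is not declared

add_role(ENTITIES, "City", "Stopover")            # add a third role to City
print(undefined_references(template, ENTITIES))  # []
```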