Suppose you have trained a few classifiers, each one achieving about 80% accuracy. You may have a Logistic Regression classifier, an SVM classifier, a Random Forest classifier, a K-Nearest Neighbors classifier, and perhaps a few more.

A very simple way to create an even better classifier is to aggregate the predictions of each classifier and predict the class that gets the most votes. This majority-vote classifier is called a hard voting classifier.
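To make the voting rule concrete, here is a minimal sketch of hard voting in plain Python. The function name `hard_vote`, the helper `accuracy`, and the toy labels are all hypothetical, chosen only for illustration; the tie-breaking rule (first label seen wins) is an assumption of this sketch, not something the text prescribes.

```python
from collections import Counter


def hard_vote(predictions):
    """Return the majority-vote label for each sample.

    `predictions` holds one inner list per classifier; each inner list
    contains that classifier's predicted label for every sample.
    """
    n_samples = len(predictions[0])
    voted = []
    for i in range(n_samples):
        # Collect the label each classifier assigned to sample i.
        votes = [clf_preds[i] for clf_preds in predictions]
        # Pick the most frequent label; on a tie, the label seen first
        # wins (an arbitrary choice made for this sketch).
        voted.append(Counter(votes).most_common(1)[0][0])
    return voted


def accuracy(y_true, y_pred):
    """Fraction of samples where the prediction matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical toy data: three classifiers, each wrong on a different sample.
y_true = [1, 0, 1, 1, 0]
preds = [
    [1, 0, 1, 1, 1],  # classifier A, wrong on the last sample
    [1, 1, 1, 1, 0],  # classifier B, wrong on the second sample
    [0, 0, 1, 1, 0],  # classifier C, wrong on the first sample
]

for name, p in zip("ABC", preds):
    print(name, accuracy(y_true, p))
print("vote", accuracy(y_true, hard_vote(preds)))
```

In this hand-picked example each classifier scores 80% on its own, while the vote gets every sample right because no two classifiers err on the same sample. Real classifiers rarely have errors that are this cleanly separated.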

Somewhat surprisingly, this voting classifier often achieves a higher accuracy than the best classifier in the ensemble.

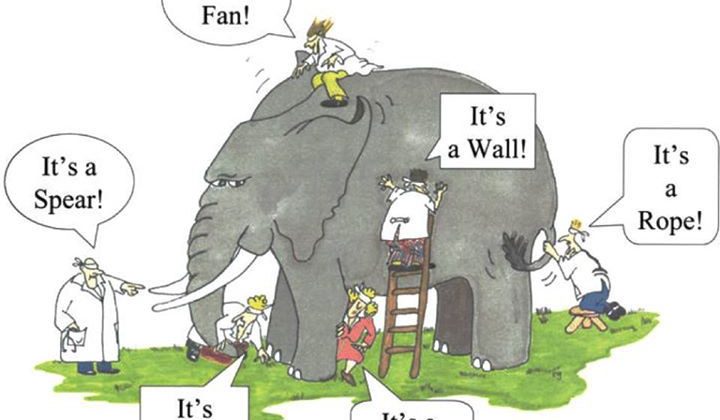

How is this possible? The following analogy can help shed some light on this mystery. Suppose you have a slightly biased coin that has a 51% chance of coming up heads and a 49% chance of coming up tails.

If you toss it 1,000 times, you will generally get roughly 510 heads and 490 tails, and hence a majority of heads. If you do the math, you will find that the probability of obtaining a majority of heads after 1,000 tosses is close to 75%. The more you toss the coin, the higher the probability. This is due to the law of large numbers: as you keep tossing the coin, the ratio of heads gets closer and closer to the probability of heads (51%). In the same way, an ensemble of many classifiers that are each only slightly better than random guessing can still reach high accuracy by majority vote, provided their errors are largely independent.
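The "do the math" step can be checked with a short binomial calculation. The sketch below uses only the standard library; the function name `prob_majority_heads` is made up for this example, and it counts a strict majority (more heads than tails), which is one reasonable reading of the text.

```python
import math


def prob_majority_heads(n_tosses, p_heads):
    """Probability of strictly more heads than tails in n_tosses tosses."""
    log_p = math.log(p_heads)
    log_q = math.log(1.0 - p_heads)
    total = 0.0
    # A strict majority needs more than n_tosses / 2 heads.
    for k in range(n_tosses // 2 + 1, n_tosses + 1):
        # Log of the binomial probability of exactly k heads. Working in
        # log space avoids overflow in the binomial coefficient.
        log_pmf = (
            math.lgamma(n_tosses + 1)
            - math.lgamma(k + 1)
            - math.lgamma(n_tosses - k + 1)
            + k * log_p
            + (n_tosses - k) * log_q
        )
        total += math.exp(log_pmf)
    return total


# Probability of a heads majority for a 51% coin at increasing toss counts.
for n in (10, 1000, 10000):
    print(n, round(prob_majority_heads(n, 0.51), 3))
```

For 1,000 tosses the strict-majority probability comes out at roughly 0.73, and counting an exact 500/500 tie as a success adds about two percentage points, which brings it close to the 75% quoted above. At 10,000 tosses the probability climbs above 0.95, illustrating that more tosses make a heads majority more likely.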

Ensemble methods work best when the predictors are as independent from one another as possible. One way to get diverse classifiers is to train them using very different algorithms. This increases the chance that they will make very different types of errors, improving the ensemble’s accuracy.
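The effect of independence can be illustrated with a small simulation. This is a sketch under simplifying assumptions, not a model of real classifiers: the task is binary, every classifier is right with the same probability, and errors are either fully independent or fully shared. The function name `majority_vote_accuracy` and the parameter `shared_fraction` are invented for this example.

```python
import random


def majority_vote_accuracy(n_classifiers, p_correct, shared_fraction,
                           n_samples=20000, seed=42):
    """Estimate majority-vote accuracy on a binary task by simulation.

    For each sample, with probability `shared_fraction` all classifiers
    share one outcome (perfectly correlated errors); otherwise each
    classifier is right or wrong independently of the others.
    """
    rng = random.Random(seed)
    n_right = 0
    for _ in range(n_samples):
        if rng.random() < shared_fraction:
            # Correlated case: one draw decides every classifier's outcome.
            outcome = rng.random() < p_correct
            correct_votes = n_classifiers if outcome else 0
        else:
            # Independent case: each classifier gets its own draw.
            correct_votes = sum(
                rng.random() < p_correct for _ in range(n_classifiers)
            )
        # On a binary task the vote is right when more than half of the
        # classifiers are right.
        if correct_votes > n_classifiers / 2:
            n_right += 1
    return n_right / n_samples


independent = majority_vote_accuracy(5, 0.8, shared_fraction=0.0)
correlated = majority_vote_accuracy(5, 0.8, shared_fraction=1.0)
print(f"independent errors:      {independent:.3f}")
print(f"fully correlated errors: {correlated:.3f}")
```

With five classifiers that are each right 80% of the time, independent errors push the vote to roughly 94% accuracy, while fully correlated errors leave it at about 80%, no better than a single classifier. Classifiers trained on the same data fall somewhere between these two extremes.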

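The following code creates and trains a hard voting classifier in Scikit-Learn from three diverse classifiers. It assumes that `X_train`, `y_train`, `X_test`, and `y_test` already hold a train/test split of a labeled dataset:

    from sklearn.ensemble import RandomForestClassifier
    from sklearn.ensemble import VotingClassifier
    from sklearn.linear_model import LogisticRegression
    from sklearn.svm import SVC

    # Three classifiers built on very different algorithms
    log_clf = LogisticRegression()
    rnd_clf = RandomForestClassifier()
    svm_clf = SVC()

    # Hard voting: predict the class that receives the most votes
    voting_clf = VotingClassifier(
        estimators=[('lr', log_clf), ('rf', rnd_clf), ('svc', svm_clf)],
        voting='hard')
    voting_clf.fit(X_train, y_train)

Now compare each classifier's accuracy on the test set:

    >>> from sklearn.metrics import accuracy_score
    >>> for clf in (log_clf, rnd_clf, svm_clf, voting_clf):
    ...     clf.fit(X_train, y_train)
    ...     y_pred = clf.predict(X_test)
    ...     print(clf.__class__.__name__, accuracy_score(y_test, y_pred))
    ...
    LogisticRegression 0.864
    RandomForestClassifier 0.896
    SVC 0.888
    VotingClassifier 0.904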

There you have it! The voting classifier slightly outperforms all the individual classifiers.