Nowadays almost everyone can “do” ML …

A Machine Learning model step by step:

- Download your favorite popular open-source language; either Python or R will do the trick (no programming skills required whatsoever apart from data importing, sweeeeeeeeet)

- Google and install some ML package (e.g. import torch or library(neuralnet))

- Import your favorite data set (cool right? You don’t even need to specify some real world problem to solve!)

- Run your model.fit() & model.predict() (Don’t know how your neural network works? Chill dude, Adam Paszke, Sam Gross, Soumith Chintala and Gregory Chanan from PyTorch and Stefan Fritsch and Frauke Guenther from neuralnet have already defined a minimal interface for you to have fun)

- Report your findings to your supervisor and update your LinkedIn skills

Sadly, this [not-so-satirical] five-point list is alarmingly close to reality: close to how a great many Machine Learning and Data Science newcomers work, close to what a great many ML/DS boot camps teach, and close to what employers actually expect from you as a professional.

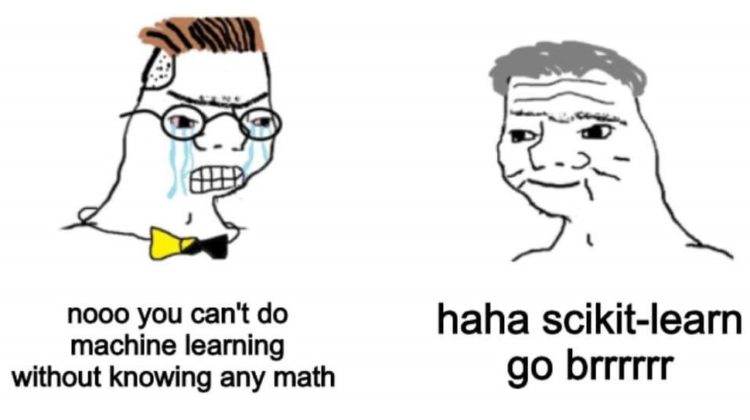

As of today, what we call Machine Learning and Data Science makes me cringe a little. Don’t get me wrong: Machine Learning and Data Science, as disciplines, are of great importance. They are incredibly useful in every field of knowledge. Then what in god’s name is my problem, you may ask? My problem is almost precisely depicted in the meme beneath this article’s title, although I do not particularly identify with the first speaker in it. I’m not some sort of orthodox statistician or anything, so I still need to elaborate on my point…

One of the greatest upsides [and ultimately the biggest downside] of the democratization of science in statistics and computer science (driven in particular by the rise in available computing power and open-source tools) is how easy it is for anybody to fit some ridiculously complex model. Both the Comprehensive R Archive Network (CRAN) and the Python Package Index (PyPI) have done some undeniably neat work establishing some of the strictest documentation and maintenance requirements for package development in the industry (like, seriously, there are banks and governments with more lenient requirements). Even though these are all open-source projects, usage of these tools is growing rapidly because those strict requirements inspire trust. Developers have also lived up to that standard by sticking to those rules. However, this story of successful [ethical] responsibility is quite different for most of the users of these tools.

Take these two [real life] situations:

- The Data Analytics lead at XYZ Inc., the biggest advertising services company in the world, deletes outliers to improve her/his linear model’s R-squared from ~.7 to ~.9, and the analysis is sold as insights to a third party. The same lead fits a neural network to XYZ’s stock price using a model.fit() workflow, presumably without understanding the mechanics of the model or the numerical method behind it.

- Bank ABC implements some selector function that picks, from a pool of distributions, the one used to model returns and measure risk. This function does not specify which criterion is used for model selection, nor does it detail the algorithm it uses; however, the manager instructs Jr. Analysts to use it even though it was not developed internally.
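To see why the first situation matters, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the synthetic data, the seed, the 20% trimming fraction and the helper names (`fit_line`, `r_squared`, `drop_largest_residuals`). It is not XYZ’s actual workflow. It fits a least-squares line, throws away the observations the line fits worst, and refits.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(xs, ys):
    """R-squared of the least-squares line fitted to (xs, ys)."""
    slope, intercept = fit_line(xs, ys)
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


def drop_largest_residuals(xs, ys, fraction):
    """Remove the given fraction of points that the fitted line explains worst."""
    slope, intercept = fit_line(xs, ys)
    ranked = sorted(
        zip(xs, ys),
        key=lambda p: abs(p[1] - (slope * p[0] + intercept)),
    )
    n_keep = len(ranked) - round(len(ranked) * fraction)
    kept = ranked[:n_keep]
    return [p[0] for p in kept], [p[1] for p in kept]


# Synthetic data: a true linear signal plus plenty of honest noise.
random.seed(0)
xs = [random.uniform(0, 10) for _ in range(200)]
ys = [2.0 * x + random.gauss(0, 3.8) for x in xs]

r2_full = r_squared(xs, ys)
xs_trimmed, ys_trimmed = drop_largest_residuals(xs, ys, fraction=0.2)
r2_trimmed = r_squared(xs_trimmed, ys_trimmed)

print(f"R-squared, all data:       {r2_full:.2f}")
print(f"R-squared, 'outliers' cut: {r2_trimmed:.2f}")
```

The data-generating process never changed; only the evidence against the model was removed. The higher R-squared describes the analyst’s filtering, not the world the client is paying to understand.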

Do you guys see what 1 and 2 have in common? You see, nowadays being a “Data Scientist/Machine Learning Engineer” has become some sort of title of nobility, a status item on many people’s résumés, a marketing stunt backed up only by this model.fit() workflow. However, it seems to me that very few really grasp what is at stake in all these analytics positions out there. 1 & 2 are not laboratory examples from your ML class, and they are not some final project for your course. The first one has real economic value, as XYZ is charging for these analytics, and the second one directly influences the wealth of the bank’s clients through risk management policy. A model’s existence is not restricted to a piece of silicon inside your PC (paraphrasing Richard McElreath in his book Statistical Rethinking); models have tangible effects in the real world.

As analysts we can’t forget that, ultimately, our output is going to be used for decision making. What do I mean by that? I mean that your model.fit() object is going to be used for credit scoring, from which loan interest rates and amounts are going to be computed; it is going to be delivered as insights to a client (and recognized as revenue by your firm) or to senior management (who will base capital budgeting on it); it may even be used to design public policy. Models are real: they affect real people through the decisions we make using them. Because of this, we as machine learning engineers, data analysts, data scientists or whatever your fancy designation is, are liable for any shortfall our model implementation/design may result in.

Some of you may argue that it is not that simple to deploy a model at a corporate level, and I can agree with that. There are tests to be passed, several checks and balances to be cleared, some managerial oversight (depending on the case) and obviously the compliance department (e.g. in financial institutions, where compliance is directly enforced by government authorities). Nevertheless, which manager is actually verifying how his/her analysts treat outliers? Is management’s comprehension of ML/AI enough to thoroughly assess ML/AI models? And finally take, for instance, example number 2, in which the bad practice comes directly from the manager.
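For contrast with example number 2, here is a rough sketch of what a selector that does state its criterion could look like. The candidate pool (normal vs. Laplace), the choice of AIC, the function names and the simulated returns are all assumptions made for illustration; nothing here is Bank ABC’s actual function.

```python
import math
import random
import statistics


def normal_loglik(data):
    """Log-likelihood of the data under a normal fitted by maximum likelihood."""
    n = len(data)
    mu = statistics.fmean(data)
    var = sum((x - mu) ** 2 for x in data) / n  # MLE variance (divides by n)
    return -0.5 * n * (math.log(2 * math.pi * var) + 1.0)


def laplace_loglik(data):
    """Log-likelihood of the data under a Laplace fitted by maximum likelihood."""
    n = len(data)
    loc = statistics.median(data)                 # MLE location
    scale = sum(abs(x - loc) for x in data) / n   # MLE scale
    return -n * (math.log(2 * scale) + 1.0)


def select_distribution(data):
    """Pick the candidate with the lowest AIC and report how it was chosen."""
    n_params = 2  # both candidates have a location and a scale parameter
    aic = {
        "normal": 2 * n_params - 2 * normal_loglik(data),
        "laplace": 2 * n_params - 2 * laplace_loglik(data),
    }
    return {
        "criterion": "AIC",
        "scores": aic,
        "selected": min(aic, key=aic.get),
    }


# Simulated "returns": one heavy-tailed sample, one Gaussian sample.
random.seed(0)
heavy_tailed = [random.choice([-1, 1]) * random.expovariate(1.0) for _ in range(2000)]
gaussian = [random.gauss(0.0, 1.0) for _ in range(2000)]

report_heavy = select_distribution(heavy_tailed)
report_gauss = select_distribution(gaussian)
print(report_heavy["criterion"], report_heavy["selected"])
print(report_gauss["criterion"], report_gauss["selected"])
```

The point is not that AIC is the right criterion for risk models. The point is that the function returns the criterion and the scores along with the answer, so a Jr. Analyst (or a regulator) can question the choice instead of trusting a black box.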

One of the features of ML models is their complexity, both mathematical and numerical; however, they are only as smart as the analyst behind them. They are valid as long as their mathematical assumptions are fulfilled and the algorithm’s output is coherent with reality; they are as useful as they are readily available to the end user; and, in the end, they are constrained by the limits of the numerical method that gave birth to them. So, if you believe doing ML/DS is just running a fancy scikit-learn one-liner, I honestly believe that you’re kind of doing it all wrong. Machine Learning and Data Science are all about the infrastructure and deployment needed to have model insights at hand in a timely manner.
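Checking an assumption does not have to be fancy. The sketch below, on made-up data, fits a straight line to a relationship that is actually quadratic. The data, the seed, the noise level and the split into thirds are all assumptions chosen for illustration. The R-squared looks great, yet the residuals show the linearity assumption is violated.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean(values):
    return sum(values) / len(values)


# The true relationship is quadratic, but we fit a straight line anyway.
random.seed(1)
xs = [i / 10 for i in range(101)]  # 0.0, 0.1, ..., 10.0
ys = [x ** 2 + random.gauss(0, 2.0) for x in xs]

slope, intercept = fit_line(xs, ys)
residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]

mean_y = mean(ys)
r2 = 1.0 - sum(r ** 2 for r in residuals) / sum((y - mean_y) ** 2 for y in ys)

# If the linear assumption held, residuals would hover around zero everywhere.
low = mean([r for x, r in zip(xs, residuals) if x < 10 / 3])
middle = mean([r for x, r in zip(xs, residuals) if 10 / 3 <= x <= 20 / 3])
high = mean([r for x, r in zip(xs, residuals) if x > 20 / 3])

print(f"R-squared: {r2:.2f}")
print(f"mean residual (low / middle / high x): {low:.2f} / {middle:.2f} / {high:.2f}")
```

The line systematically underpredicts at both ends and overpredicts in the middle. A single summary metric hides that; a thirty-second look at the residuals does not.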

All of this doesn’t seem to matter much as long as things go smoothly, but what if something goes the other way? We ought to, at least, know what the hell we are doing and how the actual heck we are doing it, right? Machine Learning is becoming increasingly important, the number of ML/AI models being deployed into production is growing and there is a shortage of qualified professionals to fill those analytics positions. Data science and machine learning engineering are now among the major players in the field, and for that reason we can’t afford to be a mediocre profession.

In the end, it all comes down to professional ethics. If you can’t name your model’s main assumptions and you can’t at least loosely describe how the algorithm works, you may not be as qualified as you think. There is a saying in finance that reads “Don’t invest in things you don’t understand”; I believe we could say something similar in DS/ML: “Don’t $%&#?! fit some model you don’t understand!”. Looking at the big picture, impressing your manager is just not worth it.

However, if we really want to improve things, this article (as noted by Arturo Gonzalez) cannot be just about complaining. So, in the spirit of contributing (not just criticizing), I’ll try to answer the question that remains at this point: where do I start?

The following list, in my personal opinion, contains useful resources from which one can acquire quality information. However, there is nobody as resourceful as the ML/DS community, so any addition to this list will be appreciated. This list is not meant to be definitive but just some place to start.

(1/4) Theoretical Resources

- Machine Learning: A Probabilistic Perspective by Kevin P. Murphy. In my opinion, one of the best books ever written. Here you can find everything from an intro to probability, through Bayesian and Frequentist statistics, to the precise mathematical formulation of almost every ML model.

- Package Documentation by Every Package Developer. Read the package documentation! It usually gives a detailed first look at what the models do, along with the primary theoretical references.

- Introduction to Numerical Methods by Steven G. Johnson. Through MIT OpenCourseWare you can access courses designed at MIT; this one in particular (available here) introduces how calculations are done from the computer’s perspective.

(2/4) Programming Resources

*As a good friend (Carlos Alberto Haro) once said, “Choose your venom”. I would only add: once you’ve chosen, drink it well.

- R4DS by Hadley Wickham. I dare to claim that nowadays R is all about the Tidyverse (& Tidymodels) workflow and syntax. This book is an absolute must if you are an R user, and it’s a completely open-source initiative (available here).

- Python for Data Analysis by Wes McKinney. Just as R is all about the Tidyverse, Python is all about pandas and scikit-learn. Like the previous book, this one is an absolute must for Python users.

- Python Data Science Handbook by Jake VanderPlas. Another excellent open-source resource (available here) for Python users (suggested by Carlos Alberto Haro).

- SQLZoo (available here), SQLBolt (available here) or w3schools. Although SQL programming is part of the broader topic of databases, what you need in order to manipulate them can be learned on these platforms, which are all really neat.

(3/4) Infrastructure Resources

*As we mentioned before, DS/ML is all about end-to-end solutions. This section is an attempt to show some basic tools for model deployment.

- Shiny tutorial by RStudio, Flask Quickstart by the Pallets project or Streamlit (again suggested by Carlos Alberto Haro). Although they may not be suited for high-traffic or compute-intensive models, Shiny (for R users) and Flask/Streamlit (for Python users) provide a first approach to deploying models as apps or dashboards.

- Database Design and Relational Theory: Normal Forms and All That Jazz by Chris J. Date. Excel and CSV files are not databases. Real projects are usually not static, an attribute inherited from the data itself. Databases are about data storage and availability; however, database structure, design and maintenance are not as straightforward as you may think.

- Cloud Computing with AWS/Azure. Although certifications have a cost, the learning materials are actually free. Migration towards cloud-based infrastructure is a clear trend. Resources are available even for Database Administrator certifications.

- Version control with Git. Maybe one of the most difficult skills to develop, and one mostly learned on the go. However, GitHub has great documentation available for learning the version control workflow.

(4/4) Communicating Resources

*Transparency should be a primary characteristic of every data product; however, the complexity of the analysis may pose a real challenge here.

- ggplot2: Elegant Graphics for Data Analysis by Hadley Wickham. Images are one of our most powerful tools. Although ggplot2 is an R package, this book covers the philosophy behind the package’s construction, which can be applied generally to good and effective visualization practices.

- Interpretable Machine Learning: A Guide for Making Black Box Models Explainable by Christoph Molnar. This book (another suggestion by Arturo Gonzalez) specifically addresses how to add transparency to Machine Learning models and introduces several tools to accomplish this goal.

…end of thread

As analysts we should not do things just because we can. Thorough comprehension of the theoretical framework behind the model is a must, and at the very least we need to feel comfortable with the basic mechanics of the numerical procedure and have effective communication skills. As managers, all of the above still applies, plus having clear ethical principles readily available and explained to all the analysts who depend on us. As professionals, knowing the scope of our work and clearly identifying the stakeholders in the big picture is critical.

Like Uncle Ben once said… “With great power comes great responsibility”. We should really bear that in mind.