Face recognition has long been one of the most prominent topics in computer vision and image analysis. If you are an aspiring computer or data scientist, or you have been working in or learning about this domain for some time, you probably have some idea of how facial recognition systems work, and you may be familiar with facial recognition attendance systems. Many of those simpler systems have limited capabilities, though, and most of them match whole images rather than facial features.

Face recognition has been around for quite some time, and over the last ten years it has progressed quickly and matured to the point where many sophisticated systems exist. You might remember the ‘Ten Year Challenge’ trend on Facebook, in which users posted side-by-side pictures of themselves from ten years ago and now. Some observers speculated that such a trend could serve as data collection for training models to identify faces more reliably and to improve the tagging of people in photos, although this was never confirmed and Facebook said it did not start the trend. Many other companies, such as Google, IBM, and Apple, have also been working actively in this domain.

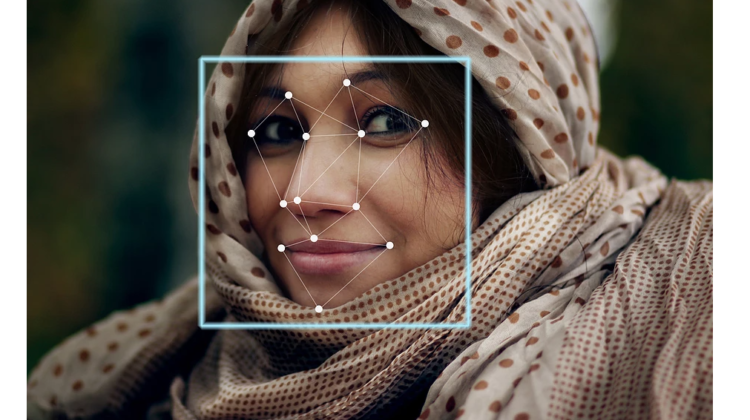

We can say that face recognition comes from the idea of converting analog features of the face, such as the eyes, nose, and chin, into a digital, computer-readable format, in other words into numbers. We see it everywhere in the current era of technology. Apple has integrated biometric facial recognition into its mobile devices to unlock the phone. Many mobile applications use it for personal verification. It also became a widely adopted method for attendance systems during the COVID pandemic, because it works without physically touching the device.

Facial recognition has two main applications. The first is verification, where the system checks the person currently in front of it against a previously stored record. Unlocking a mobile phone is an example of this mechanism. The second is recognition, which covers many use cases: surveillance, traffic management, road safety, law enforcement, criminal identification, and so on. Most of these serve security purposes.

In simple terms, a facial recognition system takes an input image, creates a facial embedding from it (that is, converts the facial features into numbers), and then matches that embedding against the embeddings it already knows.
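To make the matching step concrete, here is a minimal sketch in plain Python. It assumes the embeddings have already been produced by some model. The three-number vectors, the names `alice` and `bob`, the cosine-similarity measure, and the `0.8` threshold are all illustrative assumptions and are not taken from any particular system. Real embeddings are much longer, and the threshold would have to be tuned on real data.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two embedding vectors; values near 1 mean 'very alike'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, enrolled, threshold=0.8):
    """Verification (1:1): does the probe match one stored embedding?"""
    return cosine_similarity(probe, enrolled) >= threshold

def identify(probe, gallery, threshold=0.8):
    """Recognition (1:N): return the best-matching name, or None if unknown."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None

# Hypothetical, hand-made embeddings standing in for a model's output.
gallery = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}
probe = [0.85, 0.15, 0.35]

print(verify(probe, gallery["alice"]))
print(identify(probe, gallery))
```

The same comparison serves both applications: verification compares the probe against a single stored record, while recognition searches the whole gallery and rejects the probe when even the best match falls below the threshold.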

Looking at face recognition in more detail opens up many more possibilities. To train a face recognition system, we need a good number of facial images, which we then prepare and feed to a model, typically a deep neural network (DNN) such as a convolutional neural network (CNN). I would suggest learning deep learning at this point if you want to reach an advanced level in this domain.

Face recognition is used in many domains, such as health, automobiles, mobile phones, surveillance, and policing, and with so many applications comes more work. It is not only about identifying a face: the human face has a range of expressions that must also be considered. We cannot just train a neural network on simple images and expect it to identify the same person with a different facial expression. It might manage, but the probability is low. This is where facial emotion recognition systems play an important role. For such a system, we provide four sample images for each person in the training data, one for each of four basic emotions: happy, sad, neutral, and angry. A system trained this way may be able to identify emotions as well, which has helped many teachers see how their students were feeling during online classes in the pandemic.

There are many other use cases as well, since a lot of work is being done on face recognition in live feeds and in videos. China, for example, has deployed such systems for public surveillance, monitoring people on sidewalks and in other public places. In 2015, Google researchers published a system called FaceNet, which achieved a then record-breaking accuracy of 99.63% on the Labeled Faces in the Wild (LFW) benchmark. Google Photos uses face recognition to sort photos automatically, and an unofficial open-source implementation based on FaceNet, known as OpenFace, is available. Do check that out.
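An accuracy figure on a benchmark like Labeled Faces in the Wild comes from face pairs labeled as "same person" or "different person". The sketch below shows the arithmetic only. The similarity scores, the labels, and the `0.8` threshold are made-up values for illustration, not results from FaceNet or any other model.

```python
def pair_accuracy(pairs, threshold):
    """Fraction of pairs classified correctly.

    pairs: list of (similarity_score, same_person) tuples, where
    same_person is the ground-truth label for that pair of images.
    """
    correct = 0
    for score, same_person in pairs:
        predicted_same = score >= threshold
        if predicted_same == same_person:
            correct += 1
    return correct / len(pairs)

# Hypothetical similarity scores with ground-truth labels.
pairs = [
    (0.93, True),
    (0.88, True),
    (0.41, False),
    (0.35, False),
    (0.72, True),  # same person, but scored below the 0.8 threshold: a miss
]

print(pair_accuracy(pairs, threshold=0.8))
```

With these made-up numbers, four of the five pairs are classified correctly. The choice of threshold matters: lowering it to 0.7 would classify all five of these pairs correctly, but on real data a lower threshold also lets more impostor pairs through.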

If you want to learn and practice more, I suggest picking problems from Kaggle and trying to solve them. Here are some resources you might find useful, including research papers and courses:

The original story was written on computervizion.com