As a Kaggler-for-fun, one of my favorite topics in the machine learning process is feature engineering. The process of generating, altering, and pruning data amalgamates the practical purpose of increasing model predictability and the exciting sensation of investigation. While the implementation of models is often similar in nature with the help of libraries, feature engineering is always iterative. It is an ever-exciting exploration to the depths of data where patterns and important relationships lay waiting to be discovered. Put simply, feature generation is important and fun!

So what exactly is feature engineering? And how do we use it for machine learning? To answer these questions, we need to become explorers ourselves. We’ll begin by uncovering the basics of machine learning. Next, we’ll examine the fundamentals of feature engineering. Finally, we’ll discover practical implementations that will take our models to the next level. I love feature engineering, and I hope you will too!

To understand what feature engineering is, we must start by understanding the nature of machine learning. In essence, a machine learning model takes in a group of data points, each with their respective features (columns of data), and either returns a prediction for a target value or finds relations between variables in the absence of a target value (e.g. clustering). The former is called supervised machine learning and the latter is called unsupervised machine learning (not to be confused with AI robots taking over the world due to a lack of oversight). For our purposes, we will be using feature engineering to maximize prediction power and thus will be focusing on supervised learning. If this description felt abstract, let’s take a more concrete look at an actual data set involving supervised learning.

One of the most common supervised machine learning tasks is to predict the survival of individuals from the unfortunate Titanic disaster given data for most passengers (Sex, Age, Class, etc). The Titanic Data Set Competition is also one of the most popular novice Kaggle competitions, and it is especially helpful to learn how to implement feature engineering.

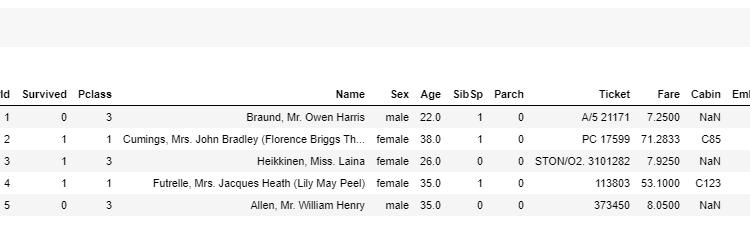

Using Pandas’ .head() function in Python, we can examine the first five data points and gain a better understanding of the data:

We can consider each column of data (“PassengerId”, “Pclass”, etc.) to be a feature. Our goal is to create a machine learning model that can predict the “Survived” value when presented with an individual who the model has not seen before using that individual’s features. These potential machine learning models (logistic regression, decision trees, neural networks, etc.) are too numerous to fully list. However, all of them benefit from feature engineering.

Feature engineering is the process of extracting additional data from our current data or simply altering our current data to make it easier for our model to find relationships that help with predicting a target value (like “Survived” in the case of the Titanic example). Not every model will be able to find these relationships immediately, so feature engineering makes them explicit.

Here’s a simple analogy: a student named Timmy, analogous to a supervised machine learning model, has spent the last few weeks studying for a math test so that he can answer questions correctly. Feature engineering, in this case, might involve changing his studying environment such that he can learn the material more effectively. This might involve translating his study materials to his native language, replacing any missing notes, or making him focus only on important theorems.

In the case of the Titanic data set, we can make it easier for our model(s) to learn by making numerous changes to our initial data. In this post, we’ll be covering language processing, data combination, binning, imputation, and dimensionality reduction.

Language Processing / String Extraction

As a Speech and Debater and a youth advocate, one of my favorite types of feature engineering involves utilizing words, sentences, or any strings of text. Words have power, so let’s extend this power to machine learning. Language processing involves extracting details in text strings that provide our model with extremely helpful information.

If you look back at the Titanic Data Set, one thing you will immediately notice is that every name has some sort of title, and every string of cabin information includes a cabin-letter at its start. These are all details that we can feed into our model.

For the first feature generation, we can find the titles of each individual by applying Python’s regular expression extraction to both our train and test data sets. Note: the only difference between train and test is that instances in the test data set lack the “Survived” column since the goal is to predict on the test data set.

After examining the types of possible titles, we can group each type of title together and map it to a number. On an unrelated note, the act of mapping categories to numbers is called label encoding — we do it because most models can only deal with numbers.

As you can observe, we now have a new feature for “Titles” that we extracted from the “Name” feature.

Combining Data

Yet another way to perform feature engineering is to combine features. This will not only provide our model with a more centralized outlook at previously disparate features, but it will also help reduce the number of features to look at (we will discuss the importance of this later).

For instance, we can add the “SibSp” (siblings + spouse count) and “Parch” (parents and children count) to create a new “family_size” feature.

Binning Data

One of the more popular ways to extract data is to place numerical data points into bins. For instance, if we know that someone is taller than 6 feet, we can place them within the “tall” bin. While some algorithms might already be able to draw similar distinctions, making these distinctions explicit will improve model performance.

On the Titanic data set, we can look for important age ranges that have correlations to survival rates, for instance. To implement, we can graphically locate which ranges exist such that the number of deaths or survivals do not cross each other.

For example, in the range from 0–16, we now know that generally more people survived than died. Given the ranges for where to draw our bins, we can create another feature with numbers to represent their respective bins.

Imputation

Not to be confused with amputation, imputation is the process of replacing missing values. Missing data is one of the most common problems in data sets, and there are millions of ways to impute data.

One way is to find the mean or median value from other data points that share similar feature values. Using Pandas’ groupby function, we can do the following to replace missing age values:

Another way is to train a completely new model on the existing data and set the target value to be the missing data. The implementation for this is a bit beyond the scope of this blog, but you can read more about KNN imputation or Random Forest imputation online.

Dimensionality Reduction:

Right now, we just discussed numerous ways to create features to generate more information. However, not all information is useful — oftentimes, features will be redundant or do not provide any ground for models to make decisions on. Moreover, having too many features can cause issues (the curse of dimensionality), so we often want to get rid of unnecessary features.

This can involve simple steps like looking at distributions between data points belonging to different classes (if the distributions are the same, then the feature likely doesn’t introduce much new information). Other simple steps involve looking at the correlations, like the Pearson’s Correlation Coefficient, between the target value and each respective feature. When training the actual models, it is also a good idea to manually try dropping a few features to see if they make an impact on the model predictability.

Another popular, and more automated, way to reduce dimensionality is the perform Principal Component Analysis. While the process of calculating Eigenvectors and Principal Components requires a bit of mathematical background, singular value decomposition PCA is relatively simple to implement with scikit-learn (https://scikit-learn.org/stable/modules/generated/sklearn.decomposition.PCA.html).

Now that we’ve performed the feature engineering, we will be ready to start training our models. Model training is another topic on its own, and each respective model has its own weaknesses and strengths. In broad terms, if it is not immediately clear which model to choose, try a bunch out and see what works the best. Also, try to experiment a bit more with additional feature engineering that might improve a specific model’s performance. This includes finding new ways to deal with categorical data, creating additional bins, or simply getting rid of certain features.

In essence, feature engineering is a fun, investigative, and important part of supervised machine learning. Common implementations of feature generation include language processing, data combination, binning, imputation, and dimensionality reduction.

The creation of just a few features can improve a model’s predictability tremendously. For instance, a LightGBM gradient boosted decision tree model that I trained on the Titanic Data Set improved accuracy from roughly 75% to 78% (on the test set) even without any hyperparameter tuning by using some of the techniques discussed above. However, there are countless additional ways to conduct feature engineering. It is an iterative, investigative, ever-expanding process.