Note:

This article is also available in Japanese here:

https://zenn.dev/wok/articles/0007_u2net-portrait-drawing

I want to be able to run machine learning models easily in the browser, so I’m working on a library that runs various models in TensorFlow.js (see this repository). In this article, I try running a model that generates a portrait drawing from a photo in the browser.

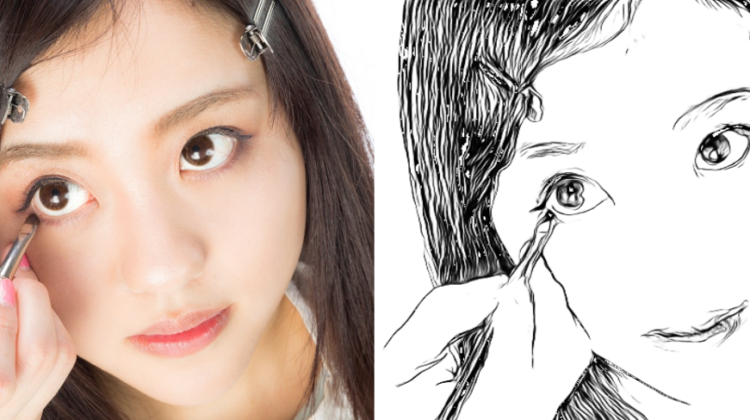

For example, it can generate the drawing on the right from the photo on the left. (As usual, the photo on the left is borrowed from Pakutaso. This is Kawamura-san. She’s always cute.)

This time, we will use U²-Net to generate the portrait. It’s the model that created a buzz a while ago.

Several methods have been proposed for generating portraits with machine learning, including APDrawingGAN, which U²-Net refers to. Since portrait generation is a form of image generation, I tend to think of it as an extension of GANs. That is, as APDrawingGAN does, a portrait is created by fusing an image reconstructed from global facial features, such as where the eyes and nose are, with images reconstructed from the local features of the individual eyes, nose, and so on. U²-Net, however, takes a completely different approach. It actually comes out of research on Salient Object Detection, the task of separating the object of interest in an image from the background, so the main body of the paper does not mention portraits at all.

According to the U²-Net repository, the authors found this new use for their model just after submitting the paper. The idea is roughly: “If we use portraits drawn by tracing photos as the teacher data, we can build a portrait generator with a segmentation model.” My reaction was a mix of “Really?” and “Well, maybe that’s true.” But it actually works, so all I can say is “Wow!” That said, I think this is only possible because the U²-Net network can capture multi-scale and multi-layer features, and it probably would not work well with segmentation models built on other backbones. (If you want to know more about the architecture and other details of U²-Net, please read the paper.)

In this article, I try running portrait generation in the browser with this interesting model and its unexpected origins.

U²-Net is currently available only as a PyTorch implementation, so to run it in TensorFlow.js we need to convert the model. The method is described in detail in PINTO’s article on converting PyTorch models to TensorFlow.js. I followed that article for the conversion, so I won’t explain much here; please refer to it. In the previous article, I explained how to run the conversion process in Docker, so please refer to that article as well. Once converted, load the model in TensorFlow.js and you are done.

In this section, I assume you have already built the Docker environment described in the previous article.

(1) Clone the U²-Net repository from GitHub and download the trained model.

$ git clone https://github.com/NathanUA/U-2-Net.git

$ cd U-2-Net/

$ mkdir ./saved_models/u2net_portrait/

$ curl -sc /tmp/cookie "https://drive.google.com/uc?export=download&id=1IG3HdpcRiDoWNookbncQjeaPN28t90yW" > /dev/null

$ CODE="$(awk '/_warning_/ {print $NF}' /tmp/cookie)"

$ curl -Lb /tmp/cookie "https://drive.google.com/uc?export=download&confirm=${CODE}&id=1IG3HdpcRiDoWNookbncQjeaPN28t90yW" -o saved_models/u2net_portrait/u2net_portrait.pth
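The awk line pulls a confirmation token out of the cookie jar written by the first curl call: as this trick assumes, Google Drive sets a cookie whose name contains _warning_ for files too large to scan, and awk’s $NF is the last whitespace-separated field of that line, i.e. the cookie value. As a rough illustration of what the one-liner does, here is a TypeScript sketch that performs the same extraction on the text of a Netscape-format cookie file. The function name extractConfirmCode is hypothetical, and the cookie line in the example is made up.

```typescript
// Sketch of what `awk '/_warning_/ {print $NF}' /tmp/cookie` does:
// scan a Netscape-format cookie jar (as written by `curl -c`) and return the
// last field of a line that mentions "_warning_" (the cookie value).
// awk would print one value per matching line; this sketch assumes a single
// match and returns the first one. extractConfirmCode is a hypothetical name.
function extractConfirmCode(cookieJar: string): string | undefined {
  for (const line of cookieJar.split("\n")) {
    if (!line.includes("_warning_")) continue;
    // awk splits on runs of whitespace by default; the value is the last field.
    const fields = line.trim().split(/\s+/);
    return fields[fields.length - 1];
  }
  return undefined;
}

// Made-up example: domain, flag, path, secure, expiry, name, value (tab-separated).
const exampleJar = [
  "# Netscape HTTP Cookie File",
  ".drive.google.com\tTRUE\t/uc\tTRUE\t0\tdownload_warning_123_abc\tXyZ9",
].join("\n");
console.log(extractConfirmCode(exampleJar)); // XyZ9
```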

(2) Next, convert the model. The model name (--model-name U2NET) and the trained weights file (--weights saved_models/u2net_portrait/u2net_portrait.pth) differ, but otherwise the process is the same as for U²-Net in the previous article. The output file name of pytorch_to_onnx.py is also the same as in the previous article, so please follow that article for the remaining steps. However, you will probably get an error when running openvino2tensorflow, and the SavedModel will not be created. This is probably because a SavedModel must be smaller than 2GB (see this issue). A frozen model is still generated, so convert that frozen model to TensorFlow.js instead. The total size of the converted files is over 150MB.

$ export PYTHONPATH=/U-2-Net

$ SIZE=512

$ python3 /opt/intel/openvino_2021/deployment_tools/tools/model_downloader/pytorch_to_onnx.py \
    --import-module model.u2net \
    --model-name U2NET \
    --input-shape 1,3,${SIZE},${SIZE} \
    --weights saved_models/u2net_portrait/u2net_portrait.pth \
    --output-file u2netp_${SIZE}x${SIZE}.onnx \
    --input-names "x" \
    --output-names "a/F.sigmoid(d0)"

(3) Once the conversion is complete, place the model files on your website and load them with TensorFlow.js. Basic usage is the same as for other models. Note that to generate good-quality images, you need to normalize the input and apply thresholds to the output. For the thresholds I use, see the source code in the repository linked below.
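Below is a minimal sketch of that pre- and post-processing in plain TypeScript, working on RGBA bytes such as the data field of an ImageData. Several things here are my assumptions rather than details from this article or the repository: the input is divided by its maximum value and then normalized with the ImageNet mean and standard deviation, which as far as I can tell is what the original PyTorch U²-Net code does; the converted model takes NHWC input, since openvino2tensorflow converts NCHW models to NHWC; and the output is a single-channel map in [0, 1] that is inverted, min-max normalized, and thresholded. The helper names and the 0.9 threshold are placeholders; the actual thresholds are in the repository.

```typescript
// ImageNet mean/std, assumed to match the PyTorch U²-Net normalization.
const MEAN = [0.485, 0.456, 0.406];
const STD = [0.229, 0.224, 0.225];

// Hypothetical helper: RGBA bytes of a size×size image (e.g. ImageData.data
// after resizing on a canvas) -> Float32Array in NHWC order (1×size×size×3).
function preprocessRgba(rgba: Uint8ClampedArray, size: number): Float32Array {
  const pixels = size * size;
  if (rgba.length !== pixels * 4) throw new Error("unexpected RGBA buffer length");
  // Divide by the largest RGB value in the image (alpha is ignored).
  let max = 0;
  for (let i = 0; i < rgba.length; i++) {
    if (i % 4 !== 3 && rgba[i] > max) max = rgba[i];
  }
  const scale = max > 0 ? max : 1; // avoid dividing by zero on an all-black image
  const out = new Float32Array(pixels * 3);
  for (let p = 0; p < pixels; p++) {
    for (let c = 0; c < 3; c++) {
      out[p * 3 + c] = (rgba[p * 4 + c] / scale - MEAN[c]) / STD[c];
    }
  }
  return out;
}

// Hypothetical helper: model output (one value in [0, 1] per pixel) -> RGBA.
// Inverts so strokes are dark on white, min-max normalizes, then pushes every
// pixel brighter than `threshold` to pure white; darker pixels keep their gray.
// The 0.9 default is a placeholder, not the value used in the repository.
function postprocessToRgba(pred: Float32Array, threshold = 0.9): Uint8ClampedArray {
  const inverted = new Float32Array(pred.length);
  let min = Infinity;
  let max = -Infinity;
  for (let i = 0; i < pred.length; i++) {
    inverted[i] = 1 - pred[i];
    min = Math.min(min, inverted[i]);
    max = Math.max(max, inverted[i]);
  }
  const range = max > min ? max - min : 1;
  const out = new Uint8ClampedArray(pred.length * 4);
  for (let i = 0; i < pred.length; i++) {
    const v = (inverted[i] - min) / range;
    const gray = v > threshold ? 255 : Math.round(v * 255);
    out[i * 4] = gray;
    out[i * 4 + 1] = gray;
    out[i * 4 + 2] = gray;
    out[i * 4 + 3] = 255; // opaque
  }
  return out;
}
```

In the browser, the Float32Array from preprocessRgba could be wrapped with tf.tensor4d(input, [1, size, size, 3]) before being passed to the model, and the output tensor’s values read back with its data() method before post-processing.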

Now let’s use it to generate a portrait. As usual, I will vary the size of the input image to see how processing time and quality change. The experiments were run in Chrome on a Linux PC with a GeForce GTX 1660.
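To compare processing times across resolutions, a helper like the following could be used. It is a sketch, not the code behind the measurements in this article: it runs a few warm-up iterations first (on the WebGL backend, the first run typically includes shader compilation, which would inflate the numbers) and then averages the wall-clock time of the remaining runs. The callback is expected to await the output data, for example via a tensor’s data() method, so that asynchronous GPU work is included. The name measureAverageMs is hypothetical.

```typescript
// Average wall-clock time of an async inference callback, in milliseconds.
// measureAverageMs is a hypothetical helper; `run` should await the output
// data (e.g. `await output.data()` in TensorFlow.js) so GPU work is counted.
async function measureAverageMs(
  run: () => Promise<unknown>,
  runs = 5,
  warmup = 1,
): Promise<number> {
  if (runs < 1) throw new Error("runs must be at least 1");
  // Warm-up iterations are executed but excluded from the measurement.
  for (let i = 0; i < warmup; i++) await run();
  const start = performance.now();
  for (let i = 0; i < runs; i++) await run();
  return (performance.now() - start) / runs;
}
```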

This is what it looks like at 320×320. It’s a pretty solid portrait. Processing time is about 1.5 seconds per frame on the GPU. It’s heavy.

Next, 256×256. The portrait is quite broken. Processing time is about 1.1 seconds.

At 192×192, the result is not really usable. Processing time was about 0.4 seconds per frame.

Next, 512×512. The original training appears to have been done at 512×512, and indeed the result is very good. The eyes look especially appealing. Unfortunately, my GPU did not have enough memory for this size, so I had to run it on the CPU, which took about 4 minutes per frame.

I also tried 1024×1024 for fun. This works very well too, though 512×512 was also good, so opinions may differ on which is better. This one was also run on the CPU. I took a nap while it ran and it was finished when I woke up; I think it took about an hour.
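For a sense of scale, the number of input pixels grows with the square of the side length. Using 320×320 (the largest size that ran on my GPU) as the baseline, the relative pixel counts are shown below. For a fully convolutional network like U²-Net, compute and activation memory can be expected to grow roughly in proportion to this; that is a rough rule of thumb, not a measurement, but it may help explain why 512×512 no longer fit in GPU memory.

$$
\left(\tfrac{192}{320}\right)^2 = 0.36,\quad
\left(\tfrac{256}{320}\right)^2 = 0.64,\quad
\left(\tfrac{512}{320}\right)^2 = 2.56,\quad
\left(\tfrac{1024}{320}\right)^2 = 10.24
$$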

That’s all. The quality of the portraits is quite high, but I think the processing time is too long.

The demo is available in the repository below, and you can also run it on the following site.

- Repository

https://github.com/w-okada/image-analyze-workers

- Demo

https://flect-lab-web.s3-us-west-2.amazonaws.com/P01_wokers/t09_u2net-portrait/index.html

* Notes on the demo

(1) It is very slow. Please wait about a minute for the first image to appear.

(2) By default, it runs at 192×192 on the CPU. The controller in the upper-right corner lets you switch to a different resolution and to the GPU.

(3) Set useTFWasmBackend to Off and press the reload model button to enter GPU mode.

(4) Select a resolution from the modelPath pull-down menu and click the reload model button to process the image at that resolution.

(5) You can change the input image with the input control. You can also use the camera.

(6) At 512×512 or higher, it is better not to use GPU mode, because the GPU may run out of memory.
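Notes (2), (3), and (6) boil down to a simple rule, which can be sketched as a small decision function. This is hypothetical: the DemoSettings shape and the chooseSettings name are mine, not the demo’s actual configuration object, and the 512 cutoff just encodes note (6) for a GPU similar to the GTX 1660 used here.

```typescript
// Hypothetical settings shape; the real demo's controller object may differ.
interface DemoSettings {
  size: number; // input resolution, size × size
  useTFWasmBackend: boolean; // true: WASM (CPU), false: WebGL (GPU)
}

// Use the GPU for speed when available, but stay on the WASM (CPU) backend
// from `cpuOnlyFrom` upward, where the GPU may run out of memory.
// The default of 512 is an assumption based on a GTX 1660-class GPU.
function chooseSettings(size: number, gpuAvailable: boolean, cpuOnlyFrom = 512): DemoSettings {
  const useGpu = gpuAvailable && size < cpuOnlyFrom;
  return { size, useTFWasmBackend: !useGpu };
}
```

Actually applying the choice in TensorFlow.js would go through await tf.setBackend(settings.useTFWasmBackend ? "wasm" : "webgl") followed by await tf.ready(), with the @tensorflow/tfjs-backend-wasm package loaded for the WASM case.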

I tried running portrait generation in the browser, and it seems difficult to run in real time even on a machine with a GPU. With a load this heavy, it might be better to run it on a server instead of in the browser.