Artificial Intelligence (AI) and Machine Learning (ML) have achieved impressive success, and Deep Learning (DL) approaches in particular hold great promise. Unfortunately, the best-performing methods turn out to be non-transparent, so-called "black boxes". Such models have no explicit declarative knowledge representation and therefore have difficulty producing explanatory and contextual structures. This significantly limits their full potential in certain application domains. In safety-critical systems and domains (e.g. the medical domain), we may therefore ask questions such as "Can we trust these results?" or "Can we explain how and why a result was achieved?". This is not only crucial for user acceptance, since in medicine, for example, the ultimate responsibility remains with the human; it has also been mandatory since 25 May 2018 under the European GDPR, which includes a "right to explanation" (Human Centered AI, 2019).

AI-infused systems are systems whose features harness AI capabilities and are directly exposed to the end user. Such systems may exhibit inconsistent behaviours that can be disruptive, confusing, offensive, and even dangerous. For example, they may respond differently depending on lighting or noise conditions that users do not recognise as distinct. Inconsistent and unpredictable behaviours can confuse users, erode their confidence, and lead them to abandon AI technology. Human-AI interaction design guidelines are needed to address these issues.

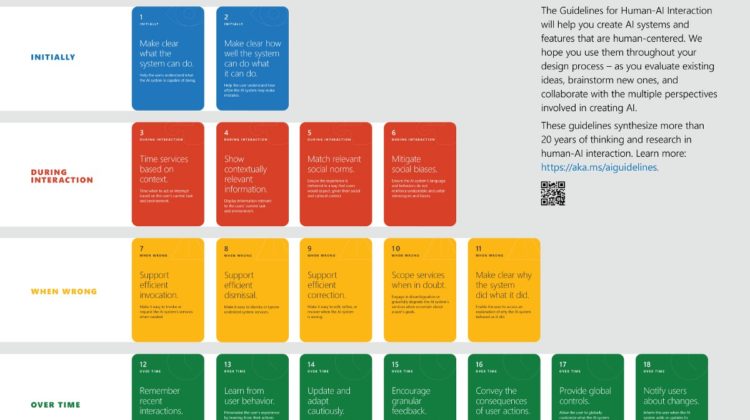

The human-AI interaction design guidelines are grouped into four categories according to when they apply during the user's interaction with the system: upon initial engagement, during the interaction, when the AI service is wrong, and over time. Initially, the system must make clear what it can do and how well it can do it. During the interaction, the system should time its actions and interruptions based on the user's current task and environment, display information relevant to that task and environment, match relevant social norms, and mitigate social biases by avoiding undesirable and unfair stereotypes. Overall, this subset of guidelines considers the user's context. When the system is wrong, the guidelines recommend supporting efficient invocation, dismissal, and correction of the AI system's services when needed. The system should also scope its services when in doubt and make clear why it did what it did. Finally, the over-time guidelines recommend that the system remember the user's recent interactions, learn from the user's behaviour, update and adapt its behaviours cautiously, encourage granular feedback, convey the consequences of user actions, provide global controls, and notify users when the AI system adds or updates its features (Amershi et al. 2019).
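As an illustration only, the four groups described above could be captured as a small checklist that a design review walks through. The following is a minimal Python sketch under stated assumptions: the names `Phase`, `Guideline`, `GUIDELINES`, `by_phase`, and `unmet` are hypothetical and not part of Amershi et al. (2019), and the guideline wording is paraphrased from the summary above.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    """The four points in the interaction at which guidelines apply."""
    INITIALLY = "initially"
    DURING_INTERACTION = "during interaction"
    WHEN_WRONG = "when wrong"
    OVER_TIME = "over time"


@dataclass(frozen=True)
class Guideline:
    phase: Phase
    text: str


# Paraphrased from the paragraph above; wording is illustrative, not official.
GUIDELINES = [
    Guideline(Phase.INITIALLY, "Make clear what the system can do"),
    Guideline(Phase.INITIALLY, "Make clear how well the system can do it"),
    Guideline(Phase.DURING_INTERACTION, "Time services based on context"),
    Guideline(Phase.DURING_INTERACTION, "Show contextually relevant information"),
    Guideline(Phase.DURING_INTERACTION, "Match relevant social norms"),
    Guideline(Phase.DURING_INTERACTION, "Mitigate social biases"),
    Guideline(Phase.WHEN_WRONG, "Support efficient invocation"),
    Guideline(Phase.WHEN_WRONG, "Support efficient dismissal"),
    Guideline(Phase.WHEN_WRONG, "Support efficient correction"),
    Guideline(Phase.WHEN_WRONG, "Scope services when in doubt"),
    Guideline(Phase.WHEN_WRONG, "Make clear why the system did what it did"),
    Guideline(Phase.OVER_TIME, "Remember recent interactions"),
    Guideline(Phase.OVER_TIME, "Learn from user behaviour"),
    Guideline(Phase.OVER_TIME, "Update and adapt cautiously"),
    Guideline(Phase.OVER_TIME, "Encourage granular feedback"),
    Guideline(Phase.OVER_TIME, "Convey the consequences of user actions"),
    Guideline(Phase.OVER_TIME, "Provide global controls"),
    Guideline(Phase.OVER_TIME, "Notify users about changes"),
]


def by_phase(phase):
    """Return the guidelines that apply at the given phase."""
    return [g for g in GUIDELINES if g.phase is phase]


def unmet(satisfied):
    """Return guidelines whose text is not in the reviewer's `satisfied` set."""
    return [g for g in GUIDELINES if g.text not in satisfied]
```

A reviewer could call `by_phase(Phase.WHEN_WRONG)` to list the items to check for error handling, or pass the set of guideline texts already satisfied to `unmet` to see what remains open.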

The development and use of such shared guidelines can help with the design and evaluation of AI-infused systems that people can understand, trust, and engage with effectively. Updated guidance is needed on designing intuitive interactions with AI services that provide meaningful experiences while keeping the user in control and respecting users' values, goals, and attention. There is a trade-off between generality and specialisation, so these guidelines may not address every type of AI-infused system. Future research could address this limitation by developing domain-specific guidelines.

More information about the human-AI interaction guidelines can be found at https://docs.microsoft.com/en-us/ai/guidelines-human-ai-interaction/.

Amershi, S., Weld, D., Vorvoreanu, M., Fourney, A., Nushi, B., Collisson, P., Suh, J., Iqbal, S., Bennett, P.N., Inkpen, K. and Teevan, J., 2019, May. Guidelines for human-AI interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1–13).

Human Centered AI, 2019. Human-AI Interaction. [Online] Available at: https://human-centered.ai/intelligent-user-interfaces-2019/ [Accessed 10 September 2020].