Different software could be hacked, even machine learning models. It is possible through several techniques of adversarial machine learning, the main goal of which is to fool standard models. Adversarial examples could be applied to a variety of machine learning algorithms, but mostly use for speech or image recognition.

According to Ian Goodfellow, that’s possible because modern machine learning, especially deep learning models, have too much linearity inside (linear functions like ReLU, Sigmoid and a lot of others inside the network)[1]. And adding even a couple of pixels to an input image could cause massive changes at the end of the model. For example, а few pixels could broken cars’ autopilot.

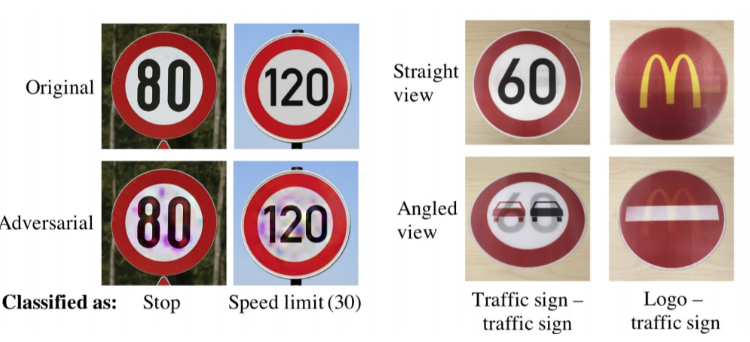

According to the Chawin Sitawarin and Arjun Bhagoji experiment[2], the autopilot system recognized the speed limit sign with simple distortions like a stop sign. We can imagine the same situation on the real road when signs attacked with graffiti. It’s horrible to think about what this could lead to.

However, the adversarial machine learning is not evil, it is a great feature to realize how models see the world[3]. Human eyes can’t distinguish those noisy pixels, but the camera can. It can be used to make models more robust and ready for real-life situations according to different weather and road conditions. As this way model learns not just the sequence of pixels, but more common patterns.

Improving models with adversarial robustness can be easily implemented with the different open-source libraries like AdvBox, DeepSec, IBM ART or many others[4].

Resources: