We live in a world of uncertainty and imperfect information

I often like to play chess and minesweeper in my spare time (yes, don’t laugh).

Of the two, I have always found minesweeper harder to understand; its rules of play have always seemed rather opaque to me.

However, minesweeper much more closely resembles how situations often unfold in the real world. Here is why that is relevant to data science.

Compare that to chess, where, regardless of playing ability, both players have perfect information at all times.

One can always see every piece on the board, and neither opponent possesses any informational advantage over the other (except the knowledge that may come from experience of playing the game).

For this reason, AI has been used extensively to build computers that win at chess. This proved successful as far back as 1997, when IBM’s Deep Blue supercomputer defeated world champion Garry Kasparov.

It is tempting to extrapolate from this example that AI models can be trained to solve any problem the world presents to us. However, this is not the case, because in practice we are almost always working with imperfect information.

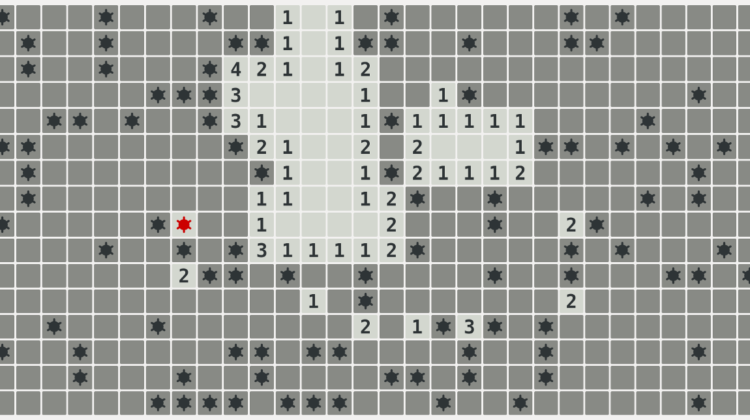

In minesweeper, by contrast, imperfect information surrounds the player at all times. Mines are hidden under certain squares across the board, and clicking on a mine loses the game immediately. At the start of the game, there is no way of knowing which squares are dangerous and which are safe; one must simply click on a square and hope for the best.

As the game progresses, revealed squares give an indication of where mines might be. For instance, a square showing the number 1 indicates that exactly one of the (up to eight) squares surrounding it contains a mine. However, one has no way of knowing which square this is.
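To make the clue mechanism concrete, here is a minimal Python sketch that computes the number each square would show, given a hidden mine layout. The 4x4 board and the function name `adjacent_mine_counts` are purely illustrative assumptions; a real game also keeps these numbers hidden until a square is revealed, and never shows a number on a mine.

```python
from typing import List

Board = List[List[bool]]


def adjacent_mine_counts(mines: Board) -> List[List[int]]:
    """For every square, count how many of its (up to 8) adjacent squares hold a mine."""
    rows, cols = len(mines), len(mines[0])
    counts = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    if dr == 0 and dc == 0:
                        continue  # the square itself is not one of its neighbours
                    nr, nc = r + dr, c + dc
                    # Stay inside the board: edge and corner squares have fewer neighbours.
                    if 0 <= nr < rows and 0 <= nc < cols and mines[nr][nc]:
                        total += 1
            counts[r][c] = total
    return counts


# Hypothetical layout: True marks a hidden mine at (1, 1) and (3, 3).
board = [
    [False, False, False, False],
    [False, True, False, False],
    [False, False, False, False],
    [False, False, False, True],
]

for row in adjacent_mine_counts(board):
    print(row)
```

The corner square at (0, 0) shows 1, yet any of its three adjacent squares could be the mine; the number alone does not say which.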

In this regard, minesweeper is a game that is much more reflective of real-world scenarios compared to a game such as chess.

No matter how much data is collected, one will never be able to capture all of the information that explains the variation in an outcome variable.

Even if this were possible, the parameters that explain such variation would invariably change in the future. In this regard, a model that worked very well yesterday may not work as well tomorrow.

So, when faced with imperfect information, every decision comes down to probability. We cannot know for certain what a particular decision will lead to, but we can assign a probability to each possible outcome.

For instance, squares surrounding a number 1 are less risky than squares surrounding a number 3, since three of the squares adjacent to the latter contain mines rather than just one. It’s probably best to avoid clicking on those squares!
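A rough way to quantify the difference is to assume that the unflagged mines around a numbered square are equally likely to be under any of its unrevealed neighbours. The sketch below uses that simplifying assumption, and the function name is hypothetical; a proper solver would combine the constraints from every visible number (and the board’s total mine count), so these figures are only a first approximation.

```python
from fractions import Fraction


def naive_mine_probability(number: int, flagged: int, unrevealed: int) -> Fraction:
    """Chance that a given unrevealed neighbour of a numbered square is a mine.

    Naive assumption: the mines not yet flagged are equally likely to sit under
    any of the unrevealed neighbours. Constraints from other numbered squares
    and from the board's total mine count are ignored.
    """
    remaining = number - flagged
    if unrevealed <= 0 or not 0 <= remaining <= unrevealed:
        raise ValueError("inconsistent clue: check number, flagged and unrevealed")
    return Fraction(remaining, unrevealed)


# A revealed square in the middle of the board can have 8 unrevealed neighbours.
print(naive_mine_probability(1, flagged=0, unrevealed=8))  # 1/8
print(naive_mine_probability(3, flagged=0, unrevealed=8))  # 3/8
```

Under this assumption, each neighbour of a 3 is three times as likely to hide a mine as each neighbour of a 1.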

Interestingly, reinforcement learning has been used in the past in an attempt to train AI to win at minesweeper. An example by sdlee94 is available here.

While I am not overly familiar with the specifics of how the algorithm works, the author does note that any move classed as a guess receives a negative reward, as the objective is to train the computer to reveal safe squares through logic and probability.

That said, according to the results, it still took over 60,000 games of training for the neural network to achieve a greater than 10% win rate.

When uncertainty is involved, even deep neural networks seem limited in their ability to make correct predictions. Even if a negative outcome (in this case, landing on a mine) has a low probability of occurrence, it will still happen given enough trials.
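The arithmetic behind “it will still happen given enough trials” is short: if each trial independently carries probability p of the bad outcome, the chance of avoiding it n times in a row is (1 - p)^n, so the chance of hitting it at least once is 1 - (1 - p)^n. The sketch below assumes independent trials with a fixed p, which real minesweeper clicks only approximate; the 1% figure is hypothetical.

```python
import math


def prob_at_least_once(p: float, n: int) -> float:
    """P(event occurs at least once in n independent trials with per-trial probability p)."""
    return 1 - (1 - p) ** n


def trials_until_likely(p: float, target: float = 0.5) -> int:
    """Smallest n for which the event has at least `target` probability of having occurred."""
    # Solve 1 - (1 - p)**n >= target for n, i.e. n >= log(1 - target) / log(1 - p).
    return math.ceil(math.log(1 - target) / math.log(1 - p))


# A hypothetical 1% chance of hitting a mine on each risky click:
for clicks in (10, 100, 500):
    print(clicks, round(prob_at_least_once(0.01, clicks), 3))
print(trials_until_likely(0.01))  # 69: after this many clicks, a mine is more likely than not
```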

In this regard, I suspect that data science will gradually evolve to rely less on accuracy and more on probabilities.

For instance, the classic method of training a model involves the train-test split, whereby the model is first trained and validated on training data, and its accuracy is then measured on a held-out test set.
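As a minimal illustration of that workflow, the sketch below shuffles a synthetic dataset into training, validation, and test portions, fits a straight line by ordinary least squares on the training portion, and reports mean absolute error on the other two. The data-generating rule (y = 2x + 1 plus noise), the 60/20/20 proportions, and all function names are assumptions chosen for illustration; libraries such as scikit-learn provide ready-made equivalents.

```python
import random


def train_val_test_split(data, train=0.6, val=0.2, seed=42):
    """Shuffle a copy of `data` and cut it into train / validation / test lists."""
    rows = list(data)
    random.Random(seed).shuffle(rows)
    n_train = round(len(rows) * train)
    n_val = round(len(rows) * val)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]


def fit_line(points):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    b = sxy / sxx
    return my - b * mx, b


def mae(points, a, b):
    """Mean absolute error of the line y = a + b*x on the given points."""
    return sum(abs(y - (a + b * x)) for x, y in points) / len(points)


rng = random.Random(0)
# Synthetic data: y = 2x + 1 plus Gaussian noise (an illustrative assumption).
data = [(x, 2 * x + 1 + rng.gauss(0, 1)) for x in range(100)]
train, val, test = train_val_test_split(data)
a, b = fit_line(train)
print(f"validation MAE: {mae(val, a, b):.2f}, test MAE: {mae(test, a, b):.2f}")
```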

However, high validation and test scores only indicate that the model predicts accurately on those two datasets. Even if cross-validation is used, the scores still say little about how well the model would perform on genuinely new information.
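The limitation shows up even in a toy example. The sketch below computes a 5-fold cross-validated error for a deliberately simple model (one that always predicts the training mean) on a stable, simulated history, then scores the same model on data drawn after a hypothetical shift in the mean. The distributions, the size of the shift, and the helper names are all assumptions for illustration.

```python
import random
import statistics


def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds whose sizes differ by at most one."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cv_mae_of_mean_model(values, k=5):
    """k-fold cross-validated MAE of a model that always predicts the training mean."""
    errors = []
    for fold in k_fold_indices(len(values), k):
        held_out = set(fold)
        train = [v for i, v in enumerate(values) if i not in held_out]
        prediction = statistics.fmean(train)
        errors.extend(abs(values[i] - prediction) for i in fold)
    return statistics.fmean(errors)


rng = random.Random(1)
history = [rng.gauss(100, 5) for _ in range(200)]  # stable regime (assumed)
shifted = [rng.gauss(120, 5) for _ in range(200)]  # new conditions (assumed)

cv_error = cv_mae_of_mean_model(history)
final_prediction = statistics.fmean(history)  # model refit on all of the history
new_error = statistics.fmean([abs(v - final_prediction) for v in shifted])
print(f"cross-validated MAE: {cv_error:.1f}, MAE after the shift: {new_error:.1f}")
```

The cross-validated error looks reassuring because every fold comes from the same regime; it carries no warning about the shifted data, where the error is several times larger.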

For this reason, I foresee that more emphasis will be placed on simulation analysis. For instance, suppose one is trying to forecast a company’s revenue over time, and the time series in question has a given mean and standard deviation.

How about we produce 10,000 simulations of this time series while artificially increasing the standard deviation? How accurate will the original model be under this scenario?
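A minimal version of that stress test might look like the following. It assumes, purely for illustration, that each simulated revenue series consists of 12 independent normal draws with a baseline mean of 100 and standard deviation of 10, and that the model being tested simply forecasts the baseline mean. It runs 10,000 simulations at the baseline volatility and at inflated volatilities, and reports both the average error and the 95th-percentile error across simulations.

```python
import random
import statistics


def simulate_errors(forecast, mean, std, n_sims=10_000, length=12, seed=0):
    """Mean absolute error of a constant forecast on each of n_sims simulated series.

    Assumed process: every period is an independent draw from Normal(mean, std).
    Reusing the same seed across calls gives common random numbers, so differences
    between scenarios come from the change in std rather than from sampling noise.
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(n_sims):
        series = [rng.gauss(mean, std) for _ in range(length)]
        errors.append(statistics.fmean([abs(value - forecast) for value in series]))
    return errors


BASE_MEAN, BASE_STD = 100.0, 10.0  # hypothetical baseline parameters
model_forecast = BASE_MEAN  # the "model" fitted under baseline conditions

results = {}
for multiplier in (1.0, 1.5, 2.0):
    errors = simulate_errors(model_forecast, BASE_MEAN, BASE_STD * multiplier)
    average = statistics.fmean(errors)
    p95 = statistics.quantiles(errors, n=20)[-1]  # 95th percentile of per-simulation MAE
    results[multiplier] = (average, p95)
    print(f"std x{multiplier}: mean MAE {average:.1f}, 95th percentile MAE {p95:.1f}")
```

In this toy setting the error simply grows in proportion to the volatility; with a real model and a richer simulated process, the useful output is the point at which performance degrades beyond what the business can tolerate.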

From this standpoint, one would have a much better idea of the situations under which a model could be expected to perform well, and the situations where it will not.

In fact, one could take this idea further and train several models across simulated data with different parameters. Depending on the scenarios that a business might face, these models can then be weighted by the probability of each scenario to make forecasts across different environments.
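One way to picture this is as a probability-weighted blend of scenario-specific models. In the sketch below, the three scenarios, their probabilities, and their growth parameters are invented for illustration, and each “model” is simply the average growth rate learned from data simulated under its own scenario. The per-scenario forecasts are kept alongside the blended figure, since the spread between them is often as informative as the average.

```python
import random
import statistics
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    probability: float  # assumed likelihood of this environment
    growth: float  # assumed mean period-on-period growth rate
    volatility: float  # assumed standard deviation of that growth rate


def fit_growth_model(scenario: Scenario, periods: int, rng: random.Random) -> float:
    """Train a (deliberately trivial) model on data simulated under one scenario."""
    simulated = [rng.gauss(scenario.growth, scenario.volatility) for _ in range(periods)]
    return statistics.fmean(simulated)


scenarios = [
    Scenario("steady", 0.60, 0.02, 0.01),
    Scenario("downturn", 0.25, -0.03, 0.03),
    Scenario("boom", 0.15, 0.06, 0.04),
]
assert abs(sum(s.probability for s in scenarios) - 1.0) < 1e-9

rng = random.Random(7)
current_revenue = 1_000.0  # hypothetical latest revenue figure
forecasts = {
    s.name: current_revenue * (1 + fit_growth_model(s, 5_000, rng)) for s in scenarios
}
blended = sum(s.probability * forecasts[s.name] for s in scenarios)

for name, value in forecasts.items():
    print(f"{name:>8}: {value:,.1f}")
print(f" blended: {blended:,.1f}")
```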

Indeed, reasoning in terms of probabilities across scenarios is the very underpinning of Bayesian analysis, and an area I suspect is going to grow in popularity going forward.
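In Bayesian terms, scenario probabilities are priors that can be revised as evidence arrives: the posterior is proportional to the prior multiplied by the likelihood of the observed data. The sketch below assumes each scenario implies normally distributed growth rates with known parameters (all values, including the observations, are hypothetical) and updates the scenario probabilities after a few growth figures come in. Working in log space avoids numerical underflow when many likelihoods are multiplied together.

```python
import math


def normal_logpdf(x: float, mu: float, sigma: float) -> float:
    """Log density of Normal(mu, sigma) evaluated at x."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def posterior(priors, params, observations):
    """Bayes' rule over discrete scenarios: posterior is prior x likelihood, normalised."""
    log_post = {}
    for name, prior in priors.items():
        mu, sigma = params[name]
        log_likelihood = sum(normal_logpdf(x, mu, sigma) for x in observations)
        log_post[name] = math.log(prior) + log_likelihood
    peak = max(log_post.values())  # subtract the max before exp() for numerical stability
    unnormalised = {k: math.exp(v - peak) for k, v in log_post.items()}
    total = sum(unnormalised.values())
    return {k: v / total for k, v in unnormalised.items()}


priors = {"steady": 0.60, "downturn": 0.25, "boom": 0.15}
params = {"steady": (0.02, 0.01), "downturn": (-0.03, 0.03), "boom": (0.06, 0.04)}
observed = [-0.02, -0.035, -0.01]  # hypothetical recent growth rates

for name, prob in posterior(priors, params, observed).items():
    print(f"{name:>8}: prior {priors[name]:.2f} -> posterior {prob:.3f}")
```

Three weak periods are enough to move most of the probability mass onto the downturn scenario, and such updated weights could then replace fixed ones in a scenario-weighted forecast.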

Despite having access to more data than ever before, it is important to remember that the real world is uncertain, volatile, and imperfect.

When faced with imperfect information, the only thing we can do is use the little information that we do have to assign probabilities to different outcomes.

That is true not only in the game of minesweeper, but also across virtually every complex real-world scenario that we face.