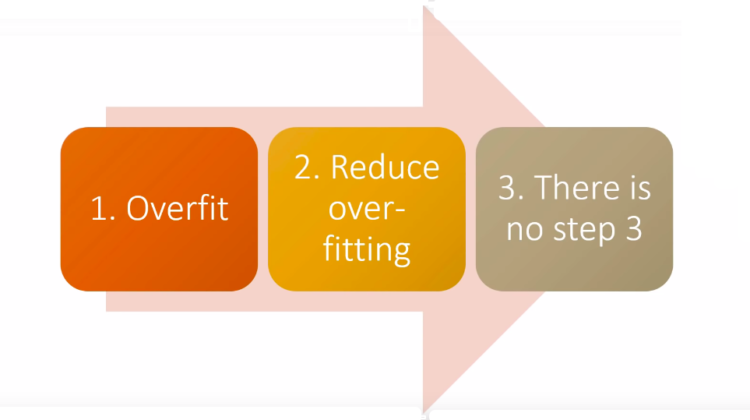

I will give an overview of how to build a good Artificial Intelligence model. I won't go into much detail on each process, but you will certainly get the idea behind building an excellent model.

Overfit your model: Now, I know you might be wondering how overfitting can lead to a good model. Hold on! Let us first understand:

What is Overfitting?

A more traditional definition states that if your training loss is lower than your validation loss, you are overfitting your model. But that is not accurate. Almost every good model has a lower training loss than validation loss, so the traditional definition of overfitting is not entirely correct. To get a good model, instead of gathering a smaller amount of data to avoid overfitting, which is what happens most of the time, I would suggest you pick the right amount of data and start by overfitting your model.

According to the more recent definition, overfitting happens when your validation loss starts to increase because the model has been overtrained: the model crams (memorizes) the inputs rather than learning their underlying behaviour. But that's OK.
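
To make that definition concrete, here is a minimal sketch that looks for the epoch where the validation loss stopped improving. The function name `overfitting_onset`, the `patience` parameter, and the loss values are all made up for illustration; they are assumptions, not something from a particular library.

```python
def overfitting_onset(val_losses, patience=2):
    """Return the epoch with the lowest validation loss, provided the loss
    then failed to improve for `patience` epochs in a row. Return None if
    the validation loss is still improving (no sign of overfitting yet)."""
    best_epoch = 0
    for epoch, loss in enumerate(val_losses):
        if loss < val_losses[best_epoch]:
            best_epoch = epoch  # validation loss is still going down
        elif epoch - best_epoch >= patience:
            return best_epoch   # no improvement for `patience` epochs
    return None


# Hypothetical loss curves: training loss keeps falling,
# validation loss bottoms out at epoch 3 and then rises.
train_losses = [0.90, 0.60, 0.45, 0.35, 0.28, 0.22]
val_losses = [0.95, 0.70, 0.58, 0.55, 0.60, 0.66]

print(overfitting_onset(val_losses))  # prints 3
```

Note that the training loss is below the validation loss at every epoch here, yet the model only starts to overfit after epoch 3, when the validation loss turns upward.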

Reduce overfitting: This may sound unprofessional, but it is not. I told you to overfit the model as opposed to training it on less data, which is what people used to do. So what I want to say is simple:

- Gather a large amount of data and train your model.

- Let it overfit.

- If your model overfits, start reducing the overfitting using the methods below.

Methods to reduce overfitting (in sequential order)

- Reduce the number of epochs: In some situations, when we cannot gather more data, it is better to train the model for fewer epochs. Fewer epochs mean less training time, so the model has less opportunity to memorize the inputs and therefore overfits less.

- More data: This is always the first and foremost approach to reducing overfitting. Try to gather more relevant data.

- Data augmentation: For vision classification problems, apply data augmentation to the images so as to reduce overfitting. Data augmentation is a regularization technique in a broader sense.

- Regularization: There are many regularization techniques, which I have already covered elsewhere. Take a look at them.

- Reduce the architectural complexity: This option should be your last preference, for when you are not able to reduce overfitting using the methods above.
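
One common way to apply the "reduce the number of epochs" idea from the list above is early stopping: stop training once the validation loss has not improved for a few epochs. The sketch below is framework-agnostic and only illustrative. `train_one_epoch` and `validate` are hypothetical callbacks you would supply yourself, and the validation losses are simulated rather than measured.

```python
def train_with_early_stopping(train_one_epoch, validate,
                              max_epochs=50, patience=3):
    """Train for up to `max_epochs`, stopping once the validation loss
    has not improved for `patience` consecutive epochs.
    Returns (best_epoch, best_validation_loss)."""
    best_loss = float("inf")
    best_epoch = -1
    for epoch in range(max_epochs):
        train_one_epoch(epoch)      # hypothetical: one pass over the data
        val_loss = validate(epoch)  # hypothetical: loss on held-out data
        if val_loss < best_loss:
            best_loss, best_epoch = val_loss, epoch
        elif epoch - best_epoch >= patience:
            break                   # stop before overfitting gets worse
    return best_epoch, best_loss


# Simulated run: made-up validation losses stand in for a real model.
fake_val_losses = [0.90, 0.70, 0.60, 0.58, 0.61, 0.65, 0.70, 0.80]
epochs_run = []

best_epoch, best_loss = train_with_early_stopping(
    train_one_epoch=epochs_run.append,          # just records the epoch
    validate=lambda epoch: fake_val_losses[epoch],
    max_epochs=len(fake_val_losses),
    patience=3,
)
print(best_epoch, best_loss, len(epochs_run))  # prints 3 0.58 7
```

In a real training loop you would also save the model weights at the best epoch, so that you can restore them after stopping.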

What usually happens is that whenever a model overfits, we start by reducing the architectural complexity, cutting the number of layers in the model. Unless the model is too complex to handle, that should be the last approach you try. First, start with more data, followed by data augmentation, then regularization, and only at the end reduce the architectural complexity.
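
To give a feel for the data augmentation step mentioned above, here is a small sketch using plain Python lists as stand-ins for grayscale images. The helper names (`horizontal_flip`, `random_crop`, `augment`) are made up for this example. In practice you would normally use the augmentation utilities of your deep learning framework.

```python
import random


def horizontal_flip(image):
    """Mirror a 2-D image (a list of rows) left to right."""
    return [row[::-1] for row in image]


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window from a random position in the image."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def augment(image, rng, crop_h, crop_w):
    """Flip the image with 50% probability, then take a random crop."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    return random_crop(image, crop_h, crop_w, rng)


# A tiny 4x4 "image" with made-up pixel values.
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]

rng = random.Random(0)  # seeded so that runs are repeatable
# Each call produces a slightly different 3x3 view of the same image.
variants = [augment(image, rng, crop_h=3, crop_w=3) for _ in range(4)]
```

Because the model sees a slightly different version of each image every time, it becomes harder to memorize individual training examples.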

Keep training your models following the steps above. Building a good model comes largely from practice and from the intuition that develops as you train.

I will keep adding more methods for training a useful model over time. Until then, start loving and exploring Artificial Intelligence.