Writing a simple feed-forward neural network is probably the first step in your journey towards mastering deep learning. Today, there are countless libraries and frameworks for developing machine learning models. The two most popular, go-to frameworks are TensorFlow and PyTorch. Keras has been integrated into TensorFlow 2.0, which undoubtedly makes it easier to write machine learning code with TensorFlow as the backend. However, if you are a beginner in the field, you probably want to write your code from scratch in order to understand the ins and outs of a model. I searched the internet for sources that would help me write basic code for a simple feed-forward neural network in TensorFlow but couldn’t find any. So I decided to write one myself. Here is how to do it!

1. Difference in learning between a Keras model and a basic model

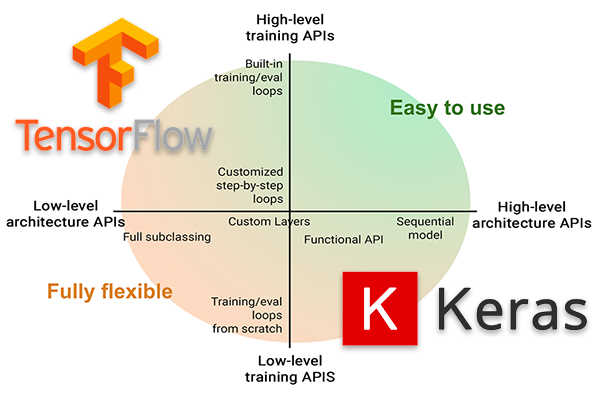

Keras is a very simple library that lets you write machine learning code in Python without any hassle. It is quick, easy, and clean! Under the hood, however, Keras does a lot of work to give you this seamless experience. If you look at the source code of functions like model.fit() or model.train_on_batch() in TensorFlow’s GitHub repository, you will find numerous optimizations, such as warm starts and hardware-level optimized implementations of functions. These tricks certainly make your code run faster, but that does not always mean your model is learning the way it should. For comparison, if you write a simple two- or three-layer neural network both with and without Keras, you will likely see a major difference between the loss curves of the two programs. The loss of the basic code written without Keras falls from a high value to a low one, whereas the loss of the Keras code starts at a low value and, unlike the former, does not fall much further. Part of this gap may come from how the numbers are produced: model.fit() reports the loss averaged over all batches of an epoch, so the first logged value already reflects some learning, and Keras layers use Glorot-uniform weight initialization by default rather than whatever initialization you pick by hand. This does not mean that the Keras model does not learn; it just means that the drop in loss you can observe is larger in the basic code than in the Keras code. Optimizing what Keras does internally is as fiddly as peeling a strand of hair.
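For reference, the Keras version of the network discussed below might look like the following minimal sketch. The layer sizes (784 inputs, hidden layers of 128 and 64 units, 10 classes), the ReLU activations, and the SGD learning rate are illustrative assumptions, not values from the original post. Everything the rest of this post builds by hand (weights, forward pass, loss, backpropagation) is hidden behind these few calls.

```python
import tensorflow as tf

# Hypothetical layer sizes: 784 inputs, hidden layers of 128 and 64 units, 10 classes
model = tf.keras.Sequential([
    tf.keras.Input(shape=(784,)),
    tf.keras.layers.Dense(128, activation="relu"),  # kernel_initializer defaults to "glorot_uniform"
    tf.keras.layers.Dense(64, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1),
              loss="categorical_crossentropy",
              metrics=["accuracy"])

print(model.count_params())  # 109386
# Training would then be a single call, for example:
# model.fit(X_train, Y_train, batch_size=32, epochs=5)
```

Note that the loss model.fit() prints for each epoch is an average over that epoch's batches, which is worth keeping in mind when comparing its curve with one logged per batch from hand-written code.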

2. Better control over how the architecture is executed

Sometimes your architecture may not be a simple sequential neural network. It may contain residual (skip) connections or multiple sub-networks. In such cases you need more control over how your architecture is executed, and writing custom code proves handy.
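As an illustration of that extra control, here is a minimal sketch of a forward pass with a skip connection written directly with tensors. The layer sizes, the ReLU activation, and the helper name init_layer are hypothetical choices for this example. The only requirement of the skip connection is that the block's input and output have the same width, so they can be added element-wise.

```python
import tensorflow as tf

def init_layer(n_in, n_out):
    """Hypothetical helper: one weight matrix and one bias vector as tf.Variables."""
    W = tf.Variable(tf.random.normal((n_in, n_out), stddev=(2.0 / n_in) ** 0.5))
    b = tf.Variable(tf.zeros((n_out,)))
    return [W, b]

def residual_forward(params, X):
    """Two hidden layers of equal width with a skip connection around the second."""
    W1, b1, W2, b2, W3, b3 = params
    H1 = tf.nn.relu(tf.matmul(X, W1) + b1)
    # Skip connection: add the block's input H1 to its output (widths must match)
    H2 = tf.nn.relu(tf.matmul(H1, W2) + b2) + H1
    return tf.matmul(H2, W3) + b3  # logits

params = init_layer(784, 64) + init_layer(64, 64) + init_layer(64, 10)
logits = residual_forward(params, tf.random.uniform((8, 784)))
print(logits.shape)  # (8, 10)
```

With Keras you would express the same idea through the functional API; written by hand, the addition is just one line you can move, gate, or remove freely.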

Other sources on this comparison:

1. https://www.javatpoint.com/tensorflow-vs-keras

2. https://www.pyimagesearch.com/2019/10/21/keras-vs-tf-keras-whats-the-difference-in-tensorflow-2-0/

What follows walks through a simple feed-forward neural network with two hidden layers that learns to classify MNIST digits using gradient descent.

I assume that you have already downloaded the MNIST dataset.
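Before it reaches the network, the data usually needs a little preprocessing. The sketch below assumes the arrays have the shapes returned by tf.keras.datasets.mnist.load_data() (images of shape (N, 28, 28) with uint8 pixels, integer labels of shape (N,)); the function name preprocess is hypothetical. It flattens each image into a 784-dimensional vector, scales pixels to [0, 1], and one-hot encodes the labels, which the loss functions used later expect.

```python
import numpy as np
import tensorflow as tf

def preprocess(x, y, n_classes=10):
    """Flatten images, scale pixels to [0, 1], and one-hot encode labels.

    Assumes x has shape (N, 28, 28) with uint8 pixels and y has shape (N,)
    with integer class labels.
    """
    x = tf.reshape(tf.cast(x, tf.float32) / 255.0, (-1, 28 * 28))
    y = tf.one_hot(y, depth=n_classes)  # float32 one-hot labels
    return x, y

# With the real data (downloads MNIST on the first call):
# (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
# X_train, Y_train = preprocess(x_train, y_train)

# Tiny stand-in arrays so the sketch runs without a download
x_fake = np.random.randint(0, 256, size=(5, 28, 28), dtype=np.uint8)
y_fake = np.array([0, 3, 9, 1, 7])
X, Y = preprocess(x_fake, y_fake)
print(X.shape, Y.shape)  # (5, 784) (5, 10)
```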

First, I create a class for the network and initialize it. n_layers contains the number of nodes in each layer of the network.

Each weight matrix and bias vector is created with tf.Variable(), which makes it a trainable parameter.

All the parameters are stored in self.params so that they can be updated during training.
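A minimal sketch of such a class is shown below; it is a reconstruction under assumptions, not the author's original code. The class name FeedForwardNet, the example layer sizes, and the He-style initialization (random normal weights scaled by sqrt(2 / n_in), zero biases) are assumptions chosen because they work well with ReLU layers.

```python
import tensorflow as tf

class FeedForwardNet:
    """Hypothetical sketch of the network class described above."""

    def __init__(self, n_layers):
        # n_layers: number of nodes per layer, e.g. [784, 128, 64, 10]
        self.n_layers = n_layers
        self.params = []  # [W1, b1, W2, b2, ...], kept together for training
        for i, (n_in, n_out) in enumerate(zip(n_layers[:-1], n_layers[1:]), start=1):
            # He-style scaling (an assumption) keeps ReLU activations in a sensible range
            W = tf.Variable(tf.random.normal((n_in, n_out), stddev=(2.0 / n_in) ** 0.5),
                            name=f"W{i}")
            b = tf.Variable(tf.zeros((n_out,)), name=f"b{i}")
            self.params.extend([W, b])

net = FeedForwardNet([784, 128, 64, 10])
print([tuple(p.shape) for p in net.params])
# [(784, 128), (128,), (128, 64), (64,), (64, 10), (10,)]
```

Keeping all variables in a single flat list makes the training step simple: the same list can be handed to tf.GradientTape.gradient() and zipped with the resulting gradients.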

In the forward pass, each layer multiplies its input by a weight matrix, adds a bias vector, and (except for the output layer) applies an activation function.

P.S.: Because the loss functions we will use later apply the activation themselves, it is good practice to return only the logits from the forward-pass function and to apply the activation function in a separate predict function. In my code, Z3 holds the logits and Y the final predicted output.
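The split between forward and predict can be sketched as follows. This assumes ReLU hidden activations, a softmax output for the ten digit classes, and hypothetical layer sizes [784, 128, 64, 10]; the names Z3 and Y follow the text, while the rest is illustrative. The parameters are passed as a flat list [W1, b1, W2, b2, W3, b3].

```python
import tensorflow as tf

def forward(params, X):
    """Return the logits Z3; no output activation is applied here."""
    W1, b1, W2, b2, W3, b3 = params
    A1 = tf.nn.relu(tf.matmul(X, W1) + b1)   # hidden layer 1
    A2 = tf.nn.relu(tf.matmul(A1, W2) + b2)  # hidden layer 2
    Z3 = tf.matmul(A2, W3) + b3              # logits
    return Z3

def predict(params, X):
    """Apply softmax to the logits to get class probabilities Y."""
    Y = tf.nn.softmax(forward(params, X))
    return Y

# Hypothetical layer sizes with randomly initialized parameters
sizes = [784, 128, 64, 10]
params = []
for n_in, n_out in zip(sizes[:-1], sizes[1:]):
    params += [tf.Variable(tf.random.normal((n_in, n_out), stddev=(2.0 / n_in) ** 0.5)),
               tf.Variable(tf.zeros((n_out,)))]

X = tf.random.uniform((4, 784))
Z3 = forward(params, X)  # what the loss function receives
Y = predict(params, X)   # what you use to read off predictions
print(Z3.shape, Y.shape)  # (4, 10) (4, 10)
```

Each row of Y sums to 1, and tf.argmax(Y, axis=1) gives the predicted digit.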

For the loss, you can use either tf.compat.v1.losses.sigmoid_cross_entropy() or tf.compat.v1.losses.softmax_cross_entropy(). Both take the logits, apply a sigmoid or softmax activation to them, and then compute the cross-entropy loss; for mutually exclusive classes such as the ten MNIST digits, the softmax version is the natural choice. The labels must be one-hot encoded for these functions. For more information, you may refer to the following links:

1. https://mmuratarat.github.io/2018-12-21/cross-entropy

2. https://gombru.github.io/2018/05/23/cross_entropy_loss/
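The sketch below swaps in tf.nn.softmax_cross_entropy_with_logits, the TF2-native counterpart of tf.compat.v1.losses.softmax_cross_entropy(); with default arguments the compat.v1 function should give the same mean loss, but treat that equivalence as an assumption to check in your TensorFlow version. The function name loss_fn is hypothetical. The second computation spells out the formula, mean over examples of -sum(y * log(softmax(z))), to show what the library call does.

```python
import tensorflow as tf

def loss_fn(logits, labels_one_hot):
    """Mean softmax cross-entropy; expects raw logits, not softmax outputs."""
    per_example = tf.nn.softmax_cross_entropy_with_logits(labels=labels_one_hot,
                                                          logits=logits)
    return tf.reduce_mean(per_example)

logits = tf.constant([[2.0, 0.5, -1.0], [0.0, 0.0, 0.0]])
labels = tf.one_hot([0, 2], depth=3)

loss = loss_fn(logits, labels)
# The same quantity computed by hand
manual = tf.reduce_mean(-tf.reduce_sum(labels * tf.math.log(tf.nn.softmax(logits)), axis=1))
print(float(loss), float(manual))
```

For a sigmoid-based loss, tf.nn.sigmoid_cross_entropy_with_logits takes the same labels and logits keyword arguments. In both cases, passing probabilities instead of logits would apply the activation twice and give a wrong loss.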

Next comes the training step, which computes the gradients through backpropagation and updates the parameters. This is perhaps the most important part of the entire program. Called in a loop over batches and epochs, such a function can be used as an alternative to model.fit() in TensorFlow.
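One way to write such a training step in TensorFlow 2 is with tf.GradientTape, sketched below. This is a hedged reconstruction, not necessarily how the original post did it: it assumes eager execution, plain gradient descent implemented with assign_sub (tf.keras.optimizers.SGD would be a drop-in alternative), and a hypothetical toy dataset in place of MNIST so that it runs quickly.

```python
import tensorflow as tf

tf.random.set_seed(0)

# Hypothetical toy setup: 20 input features, 3 classes, 64 examples
sizes = [20, 16, 16, 3]
params = []
for n_in, n_out in zip(sizes[:-1], sizes[1:]):
    params += [tf.Variable(tf.random.normal((n_in, n_out), stddev=(2.0 / n_in) ** 0.5)),
               tf.Variable(tf.zeros((n_out,)))]

def forward(params, X):
    W1, b1, W2, b2, W3, b3 = params
    A1 = tf.nn.relu(tf.matmul(X, W1) + b1)
    A2 = tf.nn.relu(tf.matmul(A1, W2) + b2)
    return tf.matmul(A2, W3) + b3  # logits

def train_step(params, X, Y, learning_rate=0.1):
    """One step of plain gradient descent; returns the loss before the update."""
    with tf.GradientTape() as tape:
        logits = forward(params, X)
        loss = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(labels=Y, logits=logits))
    # Backpropagation: gradients of the loss with respect to every parameter
    grads = tape.gradient(loss, params)
    for p, g in zip(params, grads):
        p.assign_sub(learning_rate * g)  # p <- p - learning_rate * dL/dp
    return loss

X = tf.random.normal((64, 20))
Y = tf.one_hot(tf.argmax(X[:, :3], axis=1), depth=3)  # learnable toy labels

losses = [float(train_step(params, X, Y)) for _ in range(100)]
print(losses[0], losses[-1])
```

Because every parameter is a tf.Variable in one list, the tape tracks all of them automatically. For MNIST, you would call train_step on each mini-batch inside an epoch loop.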

And there you go! That’s how you can build a very basic feed-forward neural network in TensorFlow without a high-level library like Keras. The remaining pieces, such as a batch loader and an evaluation metric, are easy to develop, so I skipped them.
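If it helps, here is one possible sketch of those two pieces. It assumes the data is already preprocessed into tensors with one-hot labels. The function names make_batches and accuracy are hypothetical; batching uses the tf.data API, and accuracy compares the argmax of the predictions with the argmax of the labels.

```python
import tensorflow as tf

def make_batches(X, Y, batch_size=32, shuffle=True, seed=0):
    """Return a tf.data.Dataset of (X_batch, Y_batch) pairs."""
    ds = tf.data.Dataset.from_tensor_slices((X, Y))
    if shuffle:
        ds = ds.shuffle(buffer_size=int(X.shape[0]), seed=seed)
    return ds.batch(batch_size)

def accuracy(probs, labels_one_hot):
    """Fraction of rows where the predicted class matches the true class."""
    correct = tf.equal(tf.argmax(probs, axis=1), tf.argmax(labels_one_hot, axis=1))
    return tf.reduce_mean(tf.cast(correct, tf.float32))

X = tf.random.normal((10, 784))
Y = tf.one_hot(tf.range(10), depth=10)
print([int(xb.shape[0]) for xb, _ in make_batches(X, Y, batch_size=4)])  # [4, 4, 2]

probs = tf.constant([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]])
labels = tf.constant([[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]])
print(float(accuracy(probs, labels)))  # 2 of 3 rows correct
```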

Peace out ✌️