I have previously written about learning math for Data Science and Machine Learning (see the article), where I covered the list of math topics needed, provided some resources for learning them and shared some insights I acquired along the way.

On top of linear algebra, multivariable calculus and probability theory, statistics is one of the crucial math requirements for data scientists; indeed, it is the core one. Data Science as a field lies at the intersection of Statistics and Computer Science, and, as a result, most bachelor's and graduate programs in Data Science are offered by either Statistics or Computer Science departments. There is even a long-running debate on the web, and a popular meme (see below), about whether Data Science is just glorified statistics. Without going into too much detail, one thing is clear: knowledge of Statistics is a must for Data Scientists.

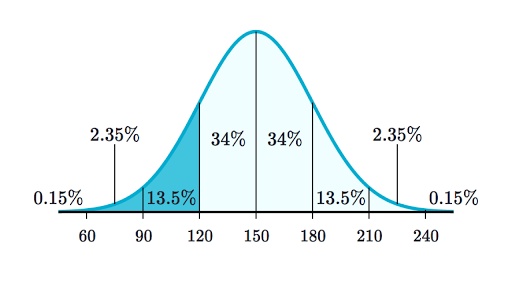

According to Wikipedia, Statistics is the discipline that concerns the collection, organization, analysis, interpretation and presentation of data. In other words, it covers all aspects of working with data, from its collection to the presentation of results. Broadly, two main kinds of statistical methods are used: descriptive and inferential statistics. Descriptive statistics summarizes the data by computing measures of central tendency (mean, median, mode) or measures of dispersion (variance, standard deviation), whereas inferential statistics helps to infer population parameters from sample data.
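As a minimal illustration of the descriptive measures above, Python's standard-library `statistics` module can compute each of them directly. The heights below are made-up numbers used only for illustration, and `variance`/`stdev` are the sample versions (dividing by n - 1).

```python
import statistics

# Hypothetical sample of heights in meters (made-up numbers for illustration)
heights = [1.62, 1.70, 1.58, 1.75, 1.66, 1.70, 1.81, 1.64]

# Measures of central tendency
mean = statistics.mean(heights)
median = statistics.median(heights)  # average of the two middle values here
mode = statistics.mode(heights)      # most frequent value

# Measures of dispersion (sample versions, dividing by n - 1)
variance = statistics.variance(heights)
std_dev = statistics.stdev(heights)

print(f"mean={mean:.4f} median={median:.2f} mode={mode:.2f}")
print(f"variance={variance:.5f} std_dev={std_dev:.4f}")
```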

Core topics in inferential statistics are parameter estimation, with its associated confidence intervals, and hypothesis testing, with its associated p-values and the notion of statistical significance. To give you a basic idea of parameter estimation and hypothesis testing, let’s look at the following problem:

Suppose we want to answer the following two questions: 1) What is the average height of citizens of some country? 2) Is the average height of citizens of that country higher than, say, 1.65 meters? The first question asks for the mean height of the citizens, so it is a parameter estimation problem; the parameter of interest is the population mean. The second question involves a hypothesis to evaluate: concretely, we want to find out whether the average height of citizens is above 1.65 meters. Therefore, it is a hypothesis testing problem.

Ideally, to answer those two questions, we would measure the heights of all citizens, average them to find the mean, and compare it to 1.65 meters. In this scenario, there is no need for inferential statistics at all. In the real world, however, measuring the whole population is usually too costly or outright impossible, so we take a sample from the population, that is, some fraction of it (e.g. we measure the heights of 100 000 people in a country with a population of 10 000 000), and compute an approximate value of the parameter of interest, in our case the mean.

It is precisely in such situations that inferential statistics comes into play. Since we measured only a fraction of the population, there is some uncertainty in our estimate of the parameter. Inferential statistics helps to quantify this uncertainty by providing a confidence interval around the estimate (e.g. a range between 1.60 and 1.70 m for the mean height). Having calculated this interval, we can then use it for hypothesis testing and see whether there is statistically significant evidence that the true mean height differs from 1.65 meters. We do this by checking whether the computed confidence interval includes 1.65 meters (our hypothesized value). If it does, as with the 1.60 to 1.70 m interval above, the sample does not provide significant evidence against 1.65 meters.
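The height example can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the sample is synthetic (drawn from a normal distribution with a made-up mean of 1.68 m and standard deviation of 0.07 m, not real measurements), and the interval uses the normal (z) approximation, which is reasonable for a sample this large. It also computes a one-sided z-test p-value for the second question, whether the mean exceeds 1.65 m.

```python
import math
import random
import statistics
from statistics import NormalDist

random.seed(0)  # make the synthetic data reproducible

# Synthetic stand-in for 100 000 measured heights (meters). The "true" mean of
# 1.68 m and SD of 0.07 m are made-up assumptions, not real data.
sample = [random.gauss(1.68, 0.07) for _ in range(100_000)]
hypothesized = 1.65  # H0 value from the question

# Parameter estimation: sample mean and its standard error
n = len(sample)
x_bar = statistics.fmean(sample)
std_err = statistics.stdev(sample) / math.sqrt(n)

# 95% confidence interval via the normal (z) approximation
z_crit = NormalDist().inv_cdf(0.975)  # about 1.96
low, high = x_bar - z_crit * std_err, x_bar + z_crit * std_err
print(f"mean = {x_bar:.4f} m, 95% CI = ({low:.4f}, {high:.4f}) m")

# Two-sided check: 1.65 outside the CI means rejecting H0 "mean == 1.65" at the 5% level
print("1.65 m inside the CI:", low <= hypothesized <= high)

# One-sided z-test for H1 "mean > 1.65": p-value = P(Z >= z_stat) under H0
z_stat = (x_bar - hypothesized) / std_err
p_value = NormalDist().cdf(-z_stat)
print(f"z = {z_stat:.1f}, one-sided p-value = {p_value:.3g}")
```

Because the synthetic sample is large and its assumed true mean is 1.68 m, the interval here is narrow and excludes 1.65 m; with real data the outcome depends entirely on the measurements. For small samples, a t-based interval would be the usual choice instead of the z approximation.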

This was a very brief and informal example, meant to give you a glimpse of the types of problems addressed by inferential statistics. I hope it has whetted your appetite to learn more about it.

Having got some idea of inferential statistics, I wanted to dive deeper into it, and that is when I came across the Fundamentals of Statistics course offered by MIT on edX (see the course details on edX). This course is part of the 5-course Statistics and Data Science MicroMasters program.

The course is quite expensive for verified learners ($300); however, you can get a 90% discount by applying for financial aid. Be warned that this is a challenging course, requiring prerequisite knowledge (Linear Algebra, Multivariable Calculus and Probability theory) and a major time commitment (15–20 hours weekly) for 16 weeks. Below is the syllabus of the course:

* Unit 0: Linear Algebra and probability review

* Unit 1: Introduction to statistics and probability

* Unit 2: Foundations of Inference

* Unit 3: Methods of estimation

* Unit 4: Hypothesis testing

* Unit 5: Bayesian statistics

* Unit 6: Linear Regression

* Unit 7: Generalized linear models

* Unit 8: Principal Component Analysis

As is apparent from the syllabus, the course is very ambitious, surveying many topics in statistics in a short time span. After reviewing the linear algebra and probability needed for the course, it dives into inferential statistics in Units 1 to 4. The next four units have little connection with each other; however, all of them are fundamental and regularly used in Data Science. Unit 5 is about Bayesian statistics, an entirely different approach to statistics in which probabilities express not the expected frequency of events but the degree of belief in them. Unit 6 covers linear regression, one of the most fundamental and widely used algorithms. Rather than just introducing its basic concepts, the course dives into its theoretical aspects, such as the theoretical and empirical solutions and hypothesis testing for linear regression. Unit 7 is about generalized linear models. After learning this topic, one sees that ordinary linear regression is just a special case of a generalized linear model, in which the error distribution is normal and the link function is the identity. Unit 8 is about principal component analysis (PCA), one of the most widely used dimensionality reduction algorithms. Here again, having reviewed the motivation behind the topic, the course dives deeper into the mathematical details of the algorithm.
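To give a flavor of the mathematical side of Units 6 and 8, here is a minimal NumPy sketch (not taken from the course) of the closed-form least-squares estimate for linear regression and of PCA via eigen-decomposition of the sample covariance matrix. The data are synthetic, and the chosen coefficients and stretch direction are arbitrary assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)

# --- Linear regression (Unit 6): least-squares estimate ---
# Synthetic data y = 2 + 3x + noise; the coefficients are made-up assumptions.
n = 500
x = rng.uniform(0, 10, size=n)
y = 2.0 + 3.0 * x + rng.normal(0, 1.0, size=n)

X = np.column_stack([np.ones(n), x])  # design matrix with an intercept column
# Solve the normal equations (X^T X) beta = X^T y without forming the inverse.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)
print("estimated intercept and slope:", beta_hat)

# --- PCA (Unit 8): eigen-decomposition of the sample covariance matrix ---
# Synthetic 2-D data stretched along the direction (1, 1)
z = rng.normal(size=(n, 2)) * np.array([3.0, 0.5])
rotation = np.array([[1, -1], [1, 1]]) / np.sqrt(2)
data = z @ rotation.T

centered = data - data.mean(axis=0)
cov = np.cov(centered, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns eigenvalues in ascending order
order = np.argsort(eigvals)[::-1]       # re-sort in descending order
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

explained = eigvals / eigvals.sum()     # share of variance per component
scores = centered @ eigvecs[:, :1]      # project onto the first principal component
print("explained variance ratio:", explained)
print("first principal direction:", eigvecs[:, 0])
```

Solving the normal equations with `np.linalg.solve` is fine for a small, well-conditioned example like this; `np.linalg.lstsq` is the more numerically robust choice in practice.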

It is worth noting that the course contains lots of exercises embedded in the weekly material to help solidify the concepts. There are also two midterms and a final exam to evaluate your knowledge. Overall, I would definitely recommend this course if you aim to dive deeper into statistics and the mathematical details of algorithms, and want to practice your knowledge of probability theory and linear algebra extensively.

Happy learning!