In this blog, we will discuss how Logistic Regression can solve multiclass classification problems.

As we all know, Logistic Regression is a machine learning classification algorithm used to predict binary outcomes for a given set of independent variables.

OneVsRest (OvR for short, also referred to as One-vs-All or OvA) is a heuristic method for using binary classification algorithms for multi-class classification.

Let’s discuss how OneVsRest (OneVsAll) works!

Suppose we want to solve a binary classification problem using logistic regression. When the data is linearly separable, logistic regression learns a straight line (a decision boundary) between the two classes and uses it to classify them. What if we have more than 2 classes? Can we still solve the problem using logistic regression? The answer is yes!
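For reference, here is the standard form of binary logistic regression. The symbols w (weight vector), b (bias) and σ (the sigmoid function) are notation introduced for this illustration and do not come from the original post. The model outputs the probability of the positive class, and the decision boundary is the line (more generally, the hyperplane) where that probability equals 0.5:

$$
P(y = 1 \mid \mathbf{x}) = \sigma(\mathbf{w}^\top \mathbf{x} + b) = \frac{1}{1 + e^{-(\mathbf{w}^\top \mathbf{x} + b)}},
\qquad
\text{decision boundary: } \mathbf{w}^\top \mathbf{x} + b = 0
$$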

So how does it work?

Let’s consider F1, F2 and F3 as our independent features and Output as the dependent feature. The output has 3 classes: O1, O2 and O3. Records I1, I2 and I3 belong to class O1, records I4, I5 and I6 belong to class O2, and similarly records I7, I8 and I9 belong to class O3, as shown in the table below.

How are the categories split?

Now, let’s discuss how the 3 classes are classified.

The output has 3 classes, so for every record we create 3 new target columns, one per class, as shown in the figure. In each column, a record is assigned +1 if it belongs to that class and -1 otherwise.
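Here is a minimal pure-Python sketch of that relabeling. It uses the class memberships from the example: I1 to I3 are in O1, I4 to I6 are in O2, and I7 to I9 are in O3. The function name `one_vs_rest_targets` is hypothetical and was chosen only for this illustration. The feature values F1 to F3 are left out because the original table is not reproduced here.

```python
# Class memberships from the example: I1-I3 -> O1, I4-I6 -> O2, I7-I9 -> O3.
records = [f"I{i}" for i in range(1, 10)]
labels = ["O1"] * 3 + ["O2"] * 3 + ["O3"] * 3
classes = ["O1", "O2", "O3"]


def one_vs_rest_targets(labels, classes):
    """Build one +1/-1 target column per class (hypothetical helper)."""
    return {c: [1 if y == c else -1 for y in labels] for c in classes}


targets = one_vs_rest_targets(labels, classes)

# Print each record with its three target values (O1, O2, O3 columns).
for record, *cols in zip(records, *(targets[c] for c in classes)):
    print(record, cols)
```

Every record gets exactly one +1 across the three columns. This is what lets each column be treated as a separate binary problem.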

With this in mind, when we implement logistic regression using One-vs-Rest, the model creates as many sub-models as there are classes. Here we have 3 classes, so we get 3 sub-models.

Using all the input features together with the output column O1, logistic regression trains a model M1. M1 learns to decide whether a data point belongs to O1 (positive) or not (negative). If it predicts positive, the new data point belongs to O1; otherwise it belongs to one of the other 2 classes. Similarly, models M2 and M3 are trained on the same independent features with the output columns O2 and O3, respectively.
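To make the training step concrete, here is a minimal from-scratch sketch in NumPy. It makes a few assumptions:

- Each sub-model is trained with plain batch gradient descent on the log-loss.
- The data is a small hypothetical 2-D set (three clusters, one per class), because the values from the post's table are not available.
- `fit_binary_logreg` and `fit_one_vs_rest` are hypothetical helper names.
- "Belongs to the class" is encoded as 1 and "does not" as 0, instead of +1/-1. This is the same split, written in the form the log-loss expects.

Library implementations such as scikit-learn use other solvers and add regularization, so treat this as an illustration of the idea only.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_binary_logreg(X, y, lr=0.1, n_iter=5000):
    """Fit one binary logistic regression (y in {0, 1}) by batch gradient descent."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(n_iter):
        p = sigmoid(X @ w + b)           # predicted P(y = 1 | x)
        grad_w = X.T @ (p - y) / len(y)  # gradient of the mean log-loss w.r.t. w
        grad_b = np.mean(p - y)          # gradient w.r.t. the bias
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fit_one_vs_rest(X, labels, classes):
    """Train one binary model per class: class c -> 1, every other class -> 0."""
    models = {}
    for c in classes:
        y = (labels == c).astype(float)
        models[c] = fit_binary_logreg(X, y)
    return models


# Hypothetical toy data: three well-separated clusters, three points per class.
rng = np.random.default_rng(0)
centers = {"O1": (0.0, 0.0), "O2": (6.0, 0.0), "O3": (0.0, 6.0)}
X = np.vstack([rng.normal(loc=center, scale=0.3, size=(3, 2)) for center in centers.values()])
labels = np.array(["O1"] * 3 + ["O2"] * 3 + ["O3"] * 3)

models = fit_one_vs_rest(X, labels, list(centers))
print(len(models))  # 3: one sub-model (M1, M2, M3) per class
```

Each entry of `models` plays the role of M1, M2 or M3. Each one only answers "this class or not?" and knows nothing about the other two models.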

Now the biggest question: how does it classify new data points?

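Whenever we give the model a new test data point, all of its input features go to model M1, which returns a probability value; let’s call it P1. Models M2 and M3 similarly return P2 and P3. Because the three models are trained independently, these raw probabilities do not necessarily sum to 1. They are therefore normalized by dividing each one by their total, which is what scikit-learn’s one-vs-rest implementation does. After normalization, the three values sum to 1: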
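Here is a small sketch of the normalization step, assuming hypothetical raw scores (w·x + b) from the three models. Each model's sigmoid output is its own independent estimate, so the raw values need not sum to 1. Dividing each value by their total rescales them so they do, without changing which class has the highest value.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical raw scores (w·x + b) from M1, M2 and M3 for one test point.
scores = [-0.5, 0.2, 1.5]

raw = [sigmoid(s) for s in scores]     # each model's own P(class | x)
total = sum(raw)
normalized = [p / total for p in raw]  # rescaled so the three values sum to 1

print([round(p, 3) for p in raw])
print([round(p, 3) for p in normalized])
```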

P1 + P2 + P3 = 1

For example, suppose model M1 gives P1 = 0.20, model M2 gives P2 = 0.25 and model M3 gives P3 = 0.55, so the probabilities are [0.20, 0.25, 0.55]. These three values sum to 1.

Once we have the probability values, we check which one is the highest. In our case, model M3 gives the highest probability, 0.55, which means the new test data point belongs to class O3. This is how logistic regression handles a multiclass problem. Let’s move on to the implementation.
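Picking the class is an argmax over the probability list. Here is a minimal sketch using the numbers from the example:

```python
probabilities = [0.20, 0.25, 0.55]  # P1, P2, P3 from the example
classes = ["O1", "O2", "O3"]

# Index of the largest probability: the winning class.
best = max(range(len(probabilities)), key=lambda i: probabilities[i])
print(classes[best], probabilities[best])  # prints: O3 0.55
```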

Let’s try this on the famous Iris dataset; we will go straight to building the model.

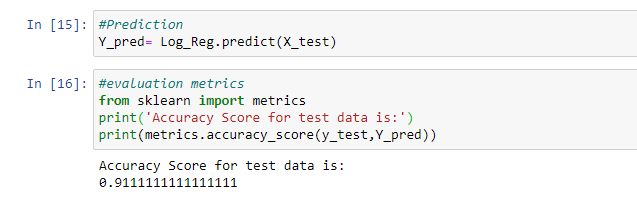
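The model-building code above appears only as a screenshot. As a stand-in, here is a minimal sketch of what such a scikit-learn pipeline typically looks like. Some settings are assumptions made for this sketch:

- a stratified 70/30 train/test split;
- `random_state=42`;
- `max_iter=1000`.

Because of these choices, the accuracy it prints will not necessarily match the 91% reported in this post. The sketch wraps `LogisticRegression` in `OneVsRestClassifier` rather than passing `multi_class='ovr'`, since that parameter is deprecated in recent scikit-learn releases.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# One binary LogisticRegression per class (3 sub-models for the 3 Iris species).
model = OneVsRestClassifier(LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.2f}")
print(len(model.estimators_))  # number of fitted binary sub-models
```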

Once the model is trained, we predict on the test data and compute the accuracy. The model classifies the test data with an accuracy of 91%, which means that out of every 100 records it predicts about 91 correctly. This is pretty good!
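Accuracy is simply the fraction of predictions that match the true labels. This tiny sketch uses hypothetical labels that reproduce the 91-out-of-100 arithmetic:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical example: 100 records, of which the model gets 91 right.
y_true = ["O1"] * 100
y_pred = ["O1"] * 91 + ["O2"] * 9
print(accuracy(y_true, y_pred))  # 0.91
```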

Let’s check the classification report for our model.
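scikit-learn's `classification_report` lists precision, recall, F1 score and support for each class. This self-contained sketch assumes a stratified 70/30 split with `random_state=42`, which may differ from the original screenshot-only code, so the numbers will not necessarily match the post.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=42, stratify=iris.target
)
model = OneVsRestClassifier(LogisticRegression(max_iter=1000)).fit(X_train, y_train)

# Per-class precision, recall, F1 and support, labelled with the species names.
report = classification_report(
    y_test, model.predict(X_test), target_names=iris.target_names
)
print(report)
```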

Now let’s check the model on new, unseen data by passing it a list of feature values for prediction.

We get 3 probability values because we have 3 classes.
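Here is a sketch of predicting a single new flower with `predict_proba`. The four measurement values are hypothetical. To keep the example short, the model is fit on the full Iris data rather than on a training split (an assumption made for brevity). `predict_proba` returns one row per sample and one column per class, so a single sample yields 3 probabilities that sum to 1.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

iris = load_iris()
model = OneVsRestClassifier(LogisticRegression(max_iter=1000)).fit(iris.data, iris.target)

# Hypothetical new flower: sepal length, sepal width, petal length, petal width (cm).
new_sample = [[5.0, 3.4, 1.5, 0.2]]

proba = model.predict_proba(new_sample)  # shape (1, 3): one probability per class
predicted = iris.target_names[model.predict(new_sample)[0]]

print(proba.round(3))
print(predicted)
```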

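So, we can conclude that logistic regression can be used for multi-class classification problems with good accuracy by setting the parameter multi_class to ‘ovr’. In scikit-learn 1.5 and later this parameter is deprecated; wrapping the model as OneVsRestClassifier(LogisticRegression()) gives the same one-vs-rest setup.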

This is a simple implementation; the full code is available on my GitHub.

Thank you for reading. Please let me know if you have any feedback.

For more details, here are the links: