Introduction:

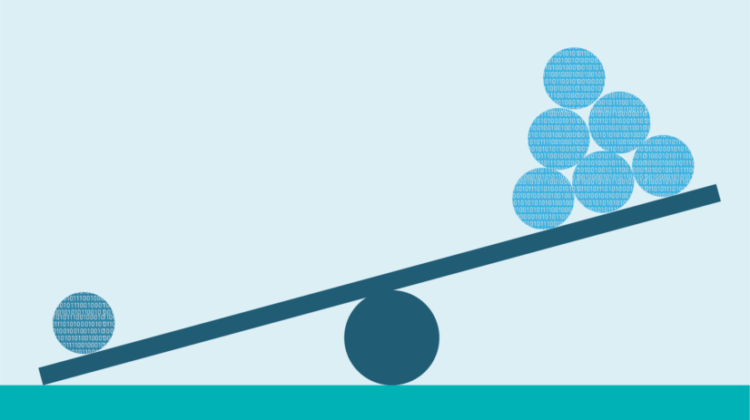

When building a classification model, you may get great results (a high accuracy score) only to realize that the model is assigning every observation to a single class. A common cause is class imbalance, a problem in machine learning where one class of data significantly outnumbers another. To illustrate, say that you have a two-class dataset with 50 diabetes patients and 5,000 non-diabetes patients. A classification model trained on this data will tend to label patients as non-diabetes because it sees too few diabetes cases to learn the patterns that distinguish them. By classifying every patient as non-diabetes, the model would reach an accuracy of about 99% (5,000 correct out of 5,050), yet it would fail to identify a single diabetes patient.
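The arithmetic behind that example can be checked with a few lines of Python. This is only a sketch: the "model" here is a stand-in that always predicts the majority class, and the 0/1 label encoding is an assumption made for illustration.

```python
# Labels for the hypothetical dataset from the text: 1 = diabetes, 0 = non-diabetes.
y_true = [1] * 50 + [0] * 5000

# A stand-in "model" that ignores its input and always predicts the majority class.
y_pred = [0] * len(y_true)

# Accuracy: share of all predictions that match the true label.
correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Recall on the diabetes class: share of the 50 real cases that were found.
found = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
diabetes_recall = found / y_true.count(1)

print(f"accuracy: {accuracy:.4f}")                # accuracy: 0.9901
print(f"diabetes recall: {diabetes_recall:.4f}")  # diabetes recall: 0.0000
```

The accuracy looks excellent, while the recall on the class we actually care about is zero.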

Many datasets will have an uneven number of instances in each class, but a small difference is usually acceptable. As a rule of thumb, if a two-class dataset is split more unevenly than 65% to 35%, then it should be treated as a dataset with class imbalance. If you are using a dataset with more than two classes and are unsure whether it is imbalanced, you can run your models without making any adjustments and check whether they predict all classes sensibly or only ever predict certain classes.
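A quick way to apply this rule of thumb is to look at each class's share of the labels before training. The helper names below (`class_shares`, `looks_imbalanced`) are hypothetical, and the 0.65 default simply mirrors the 65/35 heuristic for two classes; for more than two classes you would likely want to inspect the shares directly instead of relying on one threshold.

```python
from collections import Counter

def class_shares(labels):
    """Return each class's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: n / total for cls, n in counts.items()}

def looks_imbalanced(labels, threshold=0.65):
    """Flag the dataset if its largest class holds more than `threshold` of the rows.

    The default mirrors the 65/35 rule of thumb; it is a heuristic, not a hard rule.
    """
    return max(class_shares(labels).values()) > threshold

labels = ["non-diabetes"] * 5000 + ["diabetes"] * 50
shares = class_shares(labels)

for cls, share in shares.items():
    print(f"{cls}: {share:.1%}")
print("imbalanced:", looks_imbalanced(labels))
```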

Ways to Handle Class Imbalance:

I) Use a Different Performance Metric

As discussed earlier, Accuracy Score is not a good metric to use when there is class imbalance in your data. Some metrics that can be more helpful when handling class imbalance are:

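- Precision: of all the instances the model predicted as positive, the fraction that are actually positive, TP / (TP + FP)

- Recall: of all the instances that are actually positive, the fraction the model predicted as positive, TP / (TP + FN)

- F1 Score: the harmonic mean of Precision and Recall, 2 × Precision × Recall / (Precision + Recall)

- Confusion Matrix: a table of actual versus predicted classes that shows the counts of correct and incorrect predictions (TP, FP, and FN above are its true positive, false positive, and false negative counts)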
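To make these definitions concrete, here is a minimal pure-Python sketch that computes all four for a two-class problem. The function names and the example predictions are made up for illustration; in practice you would more likely reach for the equivalents in `sklearn.metrics` (`precision_score`, `recall_score`, `f1_score`, `confusion_matrix`).

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, false negatives, true negatives."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def precision_recall_f1(y_true, y_pred, positive=1):
    """Return (precision, recall, f1), using 0.0 where a ratio is undefined."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

# Hypothetical predictions: 30 of 50 diabetes cases found, 10 false alarms.
y_true = [1] * 50 + [0] * 5000
y_pred = [1] * 30 + [0] * 20 + [1] * 10 + [0] * 4990

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
precision, recall, f1 = precision_recall_f1(y_true, y_pred)

print(f"confusion matrix: TP={tp} FP={fp} FN={fn} TN={tn}")
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
# precision=0.75 recall=0.60 f1=0.67
```

Accuracy for these predictions is still above 99%, but precision and recall show that the model misses 20 of the 50 diabetes patients.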

II) Collect More Data

This might be self-explanatory, but collecting more data may let you create a more balanced dataset. If additional data is available, particularly for the minority class, this can be a simple way to combat class imbalance.

III) Resample Your Dataset

You can resample your dataset to make it more balanced, either by adding copies of instances from the minority class (over-sampling) or by deleting instances from the majority class (under-sampling). Both approaches are simple to implement. As a rule of thumb, consider under-sampling when you have a very large dataset, since you can afford to discard data, and over-sampling when your dataset is small. Regardless, it doesn’t hurt to try both methods and see how your results are affected.
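Both kinds of random resampling can be sketched with the standard library alone. The function names, the `(feature, label)` row layout, and the fixed seed below are assumptions for illustration, not part of any particular library.

```python
import random

def split_by_class(rows, label_of):
    """Group rows into a dict keyed by class label."""
    groups = {}
    for row in rows:
        groups.setdefault(label_of(row), []).append(row)
    return groups

def random_oversample(rows, label_of, seed=0):
    """Add random copies of smaller classes until every class matches the largest."""
    rng = random.Random(seed)
    groups = split_by_class(rows, label_of)
    target = max(len(group) for group in groups.values())
    balanced = []
    for group in groups.values():
        balanced.extend(group)
        # Draw the missing rows with replacement from the same class.
        balanced.extend(rng.choices(group, k=target - len(group)))
    rng.shuffle(balanced)
    return balanced

def random_undersample(rows, label_of, seed=0):
    """Keep a random subset of larger classes so every class matches the smallest."""
    rng = random.Random(seed)
    groups = split_by_class(rows, label_of)
    target = min(len(group) for group in groups.values())
    balanced = []
    for group in groups.values():
        # Sample without replacement so no row is kept twice.
        balanced.extend(rng.sample(group, target))
    rng.shuffle(balanced)
    return balanced

def label_of(row):
    """Each example row is a (feature, label) pair; the label is the second item."""
    return row[1]

# 50 minority rows (label 1) and 5,000 majority rows (label 0).
rows = [(i, 1) for i in range(50)] + [(i, 0) for i in range(50, 5050)]

over = random_oversample(rows, label_of)
under = random_undersample(rows, label_of)
print(len(over), len(under))  # 10000 100
```

One common precaution is to resample only the training split, so that the evaluation data keeps the class distribution the model will face in practice.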

IV) Generate Synthetic Samples

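Instead of copying existing instances, you can use an algorithm such as SMOTE (Synthetic Minority Over-sampling Technique) to generate synthetic samples. SMOTE is often paired with Tomek links, an under-sampling technique that removes instances rather than creating them.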

SMOTE is an over-sampling method that creates synthetic samples from the minority class. It works by selecting a minority observation, picking one of its nearest minority-class neighbors, and creating a new synthetic sample at a random point on the line between the two.
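The interpolation idea can be sketched in a few lines. This is a simplified illustration, not the reference algorithm: `smote_sketch`, its parameters, and the toy points are hypothetical, the neighbor search is brute force, and it assumes purely numeric features and at least two minority points. For real work, the `SMOTE` class in imbalanced-learn's `imblearn.over_sampling` module is the usual choice.

```python
import math
import random

def smote_sketch(minority, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours.

    `minority` is a list of equal-length numeric tuples from the minority class.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Brute-force search for the k nearest minority neighbours of `base`.
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        # Pick a random position on the segment from `base` to `neighbour`.
        gap = rng.random()
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic

# Toy minority class with two numeric features.
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote_sketch(minority, n_new=10, k=2)
print(len(new_points))  # 10
```

Because every synthetic point lies between two real minority points, the new samples stay inside the region the minority class already occupies instead of being exact duplicates.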

Tomek links are pairs of observations from opposite classes that are each other’s nearest neighbors. The method removes the majority instance of each pair. The goal is to clarify the border between the minority and majority classes so that the minority region is more distinct to the model.
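A brute-force sketch of that procedure for a two-class dataset follows. The function names and toy points are hypothetical, and the quadratic neighbor search is only suitable for small examples; imbalanced-learn provides `TomekLinks` in `imblearn.under_sampling`, and `SMOTETomek` in `imblearn.combine` for pairing the two techniques.

```python
import math

def nearest_index(points, i):
    """Index of the point closest to points[i], excluding i itself."""
    candidates = (j for j in range(len(points)) if j != i)
    return min(candidates, key=lambda j: math.dist(points[i], points[j]))

def tomek_link_majority_indices(points, labels, majority):
    """Indices of majority-class points that take part in a Tomek link."""
    to_remove = set()
    for i in range(len(points)):
        j = nearest_index(points, i)
        # A Tomek link: opposite classes, and each is the other's nearest neighbour.
        if labels[i] != labels[j] and nearest_index(points, j) == i:
            to_remove.add(i if labels[i] == majority else j)
    return to_remove

# Toy data on a line: class 0 is the majority, class 1 the minority.
points = [(0.0, 0.0), (0.1, 0.0), (1.0, 0.0), (1.05, 0.0), (3.0, 0.0)]
labels = [0, 0, 0, 1, 1]

remove = tomek_link_majority_indices(points, labels, majority=0)
cleaned = [(p, l) for i, (p, l) in enumerate(zip(points, labels)) if i not in remove]
print(sorted(remove))  # [2]
```

Only the majority point at `(1.0, 0.0)` is dropped, because it and the minority point at `(1.05, 0.0)` are each other's nearest neighbors; the other points are left untouched.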

References:

- Team, Towards AI. “Dealing with Class Imbalance — Dummy Classifiers.” Towards AI — The Best of Tech, Science, and Engineering, 2 Aug. 2020,

- Brownlee, Jason. “8 Tactics to Combat Imbalanced Classes in Your Machine Learning Dataset.” Machine Learning Mastery, 15 Aug. 2020, machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/.

- Brownlee, Jason. “A Gentle Introduction to Imbalanced Classification.” Machine Learning Mastery, 14 Jan. 2020, machinelearningmastery.com/what-is-imbalanced-classification/.

- Brownlee, Jason. “How to Combine Oversampling and Undersampling for Imbalanced Classification.” Machine Learning Mastery, 4 Jan. 2021, machinelearningmastery.com/combine-oversampling-and-undersampling-for-imbalanced-classification/.

- “Learning from Imbalanced Classes.” KDnuggets, www.kdnuggets.com/2016/08/learning-from-imbalanced-classes.html/2.