Machine Learning Stack for Enterprise ML

Starting my journey with Machine Learning models, I quickly realized the need to deploy, monitor, enhance, and extend these models in an Enterprise setting.

Drawing a parallel with the API phenomenon, this felt natural but confusing at the same time. Basic questions like the ones below are bound to crop up in any Enterprise ML strategy discussion:

Q. What does a model artifact look like?

Q. How do we version models and store them as artifacts?

Q. How can we integrate existing monitoring tools like Elasticsearch with ML models?

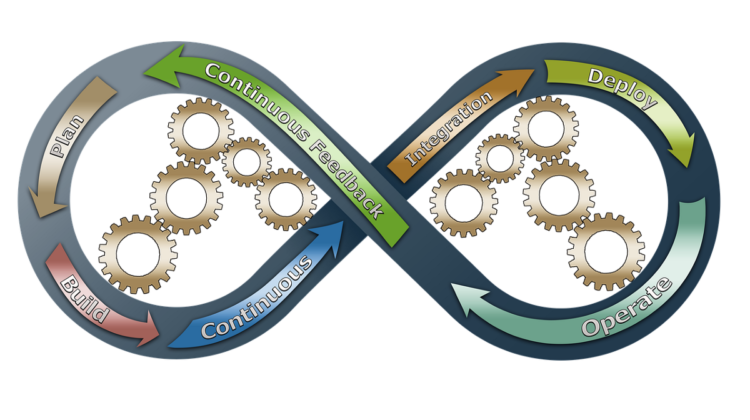

Q. Do models follow pipelines, whether CI/CD DevOps or MLOps processes?

In addition, the Explainability and Fairness of models are Governance aspects that need to be incorporated into Enterprise Machine Learning architectures.

My initial efforts to identify each of these aspects, and appropriate tools to address them, have led me to the curated list of products below.

Note: I have limited my search to open-source tools so that I can test them without licensing overheads and evaluate them for Enterprise readiness.

[1] Programming Models

: languages to analyze and fit (train) ML models

[2] Analytics Engine(s)

: data-processing tools

[3] MLOps - Orchestration

: Orchestration frameworks for Machine Learning

MLRun / Kubeflow Pipelines / Apache Airflow
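To make the idea of orchestration concrete, here is a minimal sketch of what these frameworks do at their core: run pipeline steps in dependency order. It uses only the Python standard library (`graphlib`, Python 3.9+) and is not the API of MLRun, Kubeflow Pipelines, or Airflow. The step names and the `run_pipeline` helper are hypothetical, chosen for illustration.

```python
from graphlib import TopologicalSorter


def run_pipeline(steps, dependencies):
    """Run every step after the steps it depends on.

    steps:        maps a step name to a callable that takes the shared context
    dependencies: maps a step name to the set of step names it depends on
    Returns the execution order and the per-step results.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    context = {}
    for name in order:
        context[name] = steps[name](context)
    return order, context


# Hypothetical three-step pipeline; the "model" is just the mean of the data.
steps = {
    "ingest": lambda ctx: [1.0, 2.0, 3.0, 4.0],
    "train": lambda ctx: sum(ctx["ingest"]) / len(ctx["ingest"]),
    "evaluate": lambda ctx: max(abs(x - ctx["train"]) for x in ctx["ingest"]),
}
dependencies = {"ingest": set(), "train": {"ingest"}, "evaluate": {"train"}}

order, results = run_pipeline(steps, dependencies)
print(order)
print(results["train"], results["evaluate"])
```

Real orchestrators add scheduling, retries, containerized execution, and tracking on top of this dependency graph, which is where the tools listed above differ from each other.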

[4] Containerization and Model Serving

: Distributed delivery, serving system

Docker / TF Serving / BentoML / MLflow Models / KFServing

[5] Model Language Format(s)

: Model definition language enabling interoperability

[6] Model Explainability

: explaining the predictions of a model

SHAP / LIME / InterpretML / Alibi / ELI5
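As a rough illustration of what "explaining a model" means, the sketch below computes permutation importance: shuffle one feature at a time and measure how much the error grows. This is a simpler, model-agnostic technique than SHAP or LIME, and it is not the API of any library listed above. The `predict` function and the data are made up for the example.

```python
import random


def permutation_importance(predict, X, y, seed=0):
    """Return, per feature, the increase in mean squared error after shuffling it."""
    rng = random.Random(seed)

    def mse(rows):
        return sum((predict(r) - t) ** 2 for r, t in zip(rows, y)) / len(y)

    baseline = mse(X)
    importances = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)
        # Rebuild the rows with only feature j shuffled.
        permuted = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
        importances.append(mse(permuted) - baseline)
    return importances


def predict(row):
    # Hypothetical model: uses feature 0 and ignores feature 1.
    return 3.0 * row[0]


X = [[float(i), float(i % 3)] for i in range(8)]
y = [3.0 * row[0] for row in X]

importances = permutation_importance(predict, X, y)
print(importances)
```

A feature the model ignores gets an importance of zero, while a feature it relies on gets a positive score. Libraries such as SHAP go further and attribute each individual prediction to its features.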

[7] Fairness Indicators

: mitigate bias, evaluate and improve models

TensorFlow Fairness Indicators / Fairlearn
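One of the simplest fairness checks is to compare how often each group receives a positive prediction. The sketch below hand-computes this gap (often called the demographic parity difference) in plain Python. It is an illustration of the metric only, with made-up predictions and group labels, and does not use the APIs of the tools above.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (== 1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up model outputs for two hypothetical groups, "a" and "b".
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

A large gap does not prove a model is unfair on its own, but it is the kind of signal these tools surface so that it can be investigated and mitigated.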

[8] Versioning and Artifact Store

: Data Lineage, artifact storage, data versioning

Data Version Control (DVC) / MLRun
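To tie this back to the earlier questions about what a model artifact looks like and how to version it, here is a minimal sketch of a content-addressed artifact store: the version is derived from a hash of the serialized model. It uses only the standard library and is not how DVC or MLRun work internally. The directory layout, file names, and the `save_artifact` / `load_artifact` helpers are assumptions made for illustration.

```python
import hashlib
import json
import pickle
import tempfile
from pathlib import Path


def save_artifact(model, store_dir, metadata=None):
    """Serialize the model and store it under a version derived from its content."""
    payload = pickle.dumps(model)
    version = hashlib.sha256(payload).hexdigest()[:12]
    target = Path(store_dir) / version
    target.mkdir(parents=True, exist_ok=True)
    (target / "model.pkl").write_bytes(payload)
    (target / "metadata.json").write_text(json.dumps(metadata or {}, sort_keys=True))
    return version


def load_artifact(store_dir, version):
    """Load a stored model. Only unpickle artifacts from a trusted store."""
    return pickle.loads((Path(store_dir) / version / "model.pkl").read_bytes())


# A stand-in "model": in practice this would be a fitted estimator.
store = tempfile.mkdtemp()
model = {"weights": [0.5, -1.2], "bias": 0.1}

version = save_artifact(model, store, {"framework": "none"})
restored = load_artifact(store, version)
print(version, restored == model)
```

Because the version comes from the content, saving the same model twice yields the same version, and any change to the model produces a new one. Dedicated tools add remote storage, data lineage, and links back to the code and data that produced the artifact.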

Hopefully, the tools above provide some guidance on implementing an Enterprise ML stack.