Why is Model Compression important?

A significant problem in the arms race to produce more accurate models is complexity, which in turn inflates model size. Such models are usually huge and resource-intensive: they take up more space in memory and are slower at prediction than smaller models.

The Problem of Model Size

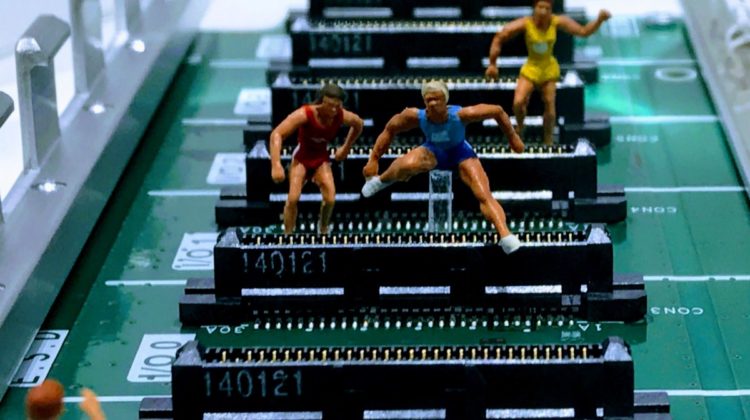

A large model is a common byproduct of pushing the limits of accuracy on unseen data in deep learning applications. For example, with more nodes, we are able to detect subtler features in the dataset. However, for projects such as AI in embedded systems that depend on fast predictions, we are limited by the available computational resources. Furthermore, many edge devices have no networking capability, so we cannot fall back on cloud computing. As a result, we cannot use massive models, which would take too long to produce meaningful predictions.

As such, we need to optimize the ratio of performance to size when designing our model.

To oversimplify for the sake of intuition, a neural network is a set of nodes joined by connections, each carrying a weight (W). You can think of the weights as a set of instructions that we optimize to increase our likelihood of generating our desired class. The more specific this set of instructions is, the greater our model size, which depends on the number of parameters (our configuration variables, such as weights).
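To make the link between nodes, weights and size concrete, here is a minimal sketch that counts the parameters of a fully connected network and estimates their storage at 32 bits each. The layer sizes are hypothetical and chosen only for illustration; they are not the models discussed in this article.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of nodes per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between the two layers
        total += n_out         # one bias per node in the receiving layer
    return total


BYTES_PER_FLOAT32 = 4

# Hypothetical layer sizes: 16 inputs, 3 output classes.
small = dense_param_count([16, 32, 3])
large = dense_param_count([16, 256, 256, 3])

print(small, "parameters,", small * BYTES_PER_FLOAT32, "bytes")
print(large, "parameters,", large * BYTES_PER_FLOAT32, "bytes")
```

Widening the hidden layers multiplies the number of connections, which is why more expressive models grow so quickly in size.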

Our task is to classify between 3 different classes from a given dataset on a Raspberry Pi (RPi). The RPi is not traditionally considered an embedded device; in our case, however, it was a step towards embedded devices.

A bit hard to read? Here is a simpler view.

Here we have the average accuracy of prediction for all classes.

On average, the Transformer model seems to be the winner, as it has the higher average accuracy. However, if we compare performance to model size, we see that the Transformer has a higher total parameter count than the Encoder-Decoder LSTM.

For the Transformer, a marginal increase in accuracy of 0.1% required an additional 1.39 MB of parameters, so it had a lower accuracy-to-size ratio.
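The trade-off can be put into numbers with a few lines of arithmetic. This sketch assumes the 1.39 MB increase is measured relative to the 3.16 MB Encoder-Decoder LSTM, so the Transformer size it derives is an inference rather than a reported figure.

```python
# Figures quoted in the text. The Transformer size is derived, not reported.
lstm_size_mb = 3.16         # Encoder-Decoder LSTM
extra_size_mb = 1.39        # additional size of the Transformer
extra_accuracy_pct = 0.1    # additional average accuracy of the Transformer

# Assumption: the extra 1.39 MB sits on top of the LSTM's 3.16 MB.
transformer_size_mb = lstm_size_mb + extra_size_mb
size_increase_pct = 100 * extra_size_mb / lstm_size_mb
accuracy_gain_per_mb = extra_accuracy_pct / extra_size_mb

print(f"Transformer size: {transformer_size_mb:.2f} MB")
print(f"Size increase: {size_increase_pct:.1f}%")
print(f"Accuracy gained per extra MB: {accuracy_gain_per_mb:.3f}%")
```

Under that assumption, a model that is roughly 44% larger buys well under a tenth of a percent of accuracy per additional megabyte.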

As such, the Encoder-Decoder model is the clear winner. However, at 3.16 MB it is still too large to run effectively on an embedded device.

This revisits my previous article on installing TensorFlow 2.3.0 on the Raspberry Pi 3+/4.

With our autoencoder, a prediction takes 1760 ms on average on our embedded device. As our sensors take a reading every second, this won’t do.

Great, now what?

These are the model compression methods, listed in increasing order of implementation difficulty. An excellent and comprehensive survey of these techniques has been done here.

- Quantization

- Pruning

- Low-Rank Approximation

- Knowledge Distillation

TensorFlow Lite deals with the first two methods and does a great job in abstracting the hard parts of model compression.

TensorFlow Lite covers:

1. Post-Training Quantization

   - Reduce Float16

   - Hybrid Quantization

   - Integer Quantization

2. During-Training Quantization

3. Post-Training Pruning

4. Post-Training Clustering

The most common and easiest method to implement is post-training quantization. Quantization limits the number of bits of precision used for our model parameters, which reduces the amount of data that needs to be stored.

Features of each Quantization tool

I will only go through post-training hybrid (dynamic range) quantization because it is the easiest to implement and gives a large reduction in size with minimal loss.

Referring back to our earlier neural network diagram, our model parameters (weights) are the lines connecting the nodes. Each one can be seen as a literal weight (significance): the importance of the node in predicting our desired outcome.

Originally, each weight is given 32 bits, stored as tf.float32 (32-bit single-precision floating point). To reduce the size of our model, we shave each weight down from 32 bits to 16 bits (tf.float16) or 8 bits (tf.int8), depending on the type of quantization used.
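To give a feel for what storing a weight in 8 bits means, here is a simplified sketch of symmetric quantization in plain Python. It is an illustration of the idea only, not TensorFlow Lite's actual implementation, and the weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # float value of one integer step
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the stored integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.823, -0.416, 0.054, -1.27, 0.338]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding to the nearest step means each weight moves by at most half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(q)
print(max_error <= scale / 2 + 1e-12)
```

Each weight now fits in a single byte plus one shared scale, at the cost of a small rounding error per weight.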

We can intuitively see that the size reduction grows with the model: the bigger and more complex the model, the greater the number of nodes and, subsequently, the greater the number of weights to shrink. The saving per weight is fixed (a factor of 2 or 4), so the absolute reduction scales with the number of weights. This is especially significant for fully connected neural networks, in which every node in a layer is connected to every node in the next layer.
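As a rough check on how the saving scales, the sketch below estimates weight storage at each precision. The weight count is hypothetical, picked so that it comes to about 3.16 MB at 32 bits; it assumes the whole file is weights and ignores graph metadata, so real file sizes will differ.

```python
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def weight_storage_bytes(num_weights, dtype):
    """Bytes needed to store num_weights values at the given precision."""
    return num_weights * BYTES_PER_WEIGHT[dtype]


# Hypothetical count: 790,000 weights is about 3.16 MB at float32 (1 MB = 10**6 bytes).
num_weights = 790_000

for dtype in BYTES_PER_WEIGHT:
    size_mb = weight_storage_bytes(num_weights, dtype) / 1e6
    print(f"{dtype}: {size_mb:.2f} MB")
```

Halving or quartering the bytes per weight halves or quarters the weight storage, whatever the size of the model.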

You might be quick to think that reducing the amount of information we store for each weight would always be detrimental to our model. However, quantization can promote generalization, which is a huge plus in preventing overfitting, a common problem with complex models. According to Jerome Friedman, the father of gradient boosting, empirical evidence shows that lots of small steps in the right direction result in better predictions on test data. With quantization, it is possible to get improved accuracy due to the decreased sensitivity of the weights.

Imagine that we get lucky with our dataset: every time we try to detect a cookie, it shows us a chocolate chip cookie. Our cookie detector would reach a high training accuracy, but if in real life we only have raisin cookies, its test accuracy would not be as high. Generalization is like blurring the chocolate chips so that our model learns that as long as there is a blob, it is a cookie.

I will be using TensorFlow 2.3.0.

To convert your model post-training to the quantized model:

First, ensure that you have saved your tf.keras model in the recommended SavedModel format (tf.lite.TFLiteConverter.from_keras_model() works too, but be warned that I was met with many errors):

import tensorflow as tf

tf.keras.models.save_model(model, str(saved_model_dir))

As of 06/09/20, the SELU activation function is not supported by TensorFlow Lite (TFLite). I found that out the hard way. In my experience, ReLU is a good substitute with minimal loss.

Next, we will convert the file.

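import pathlib

converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)  # load the SavedModel from the previous step

tflite_models_dir = pathlib.Path.cwd() / "tflite_model_dir"  # directory for the converted model

tflite_models_dir.mkdir(exist_ok=True, parents=True)

converter.allow_custom_ops = True  # let ops without a built-in TFLite kernel through as custom ops

converter.optimizations = [tf.lite.Optimize.DEFAULT]  # with no representative dataset, this gives dynamic range quantization

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS]

tflite_model_quant = converter.convert()  # returns the serialized model as bytes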

Note: pathlib is a standard-library module, used here optionally to simplify path handling on Windows.
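The conversion snippet creates tflite_models_dir but leaves the converted model in memory. Since converter.convert() returns a bytes object, one way to save it is sketched below. The helper save_tflite_model and the file name model_quant.tflite are hypothetical, and stand-in bytes are used so the sketch runs without TensorFlow.

```python
import pathlib
import tempfile


def save_tflite_model(model_bytes, out_dir, file_name):
    """Write a serialized TFLite model to out_dir and return its path."""
    out_dir = pathlib.Path(out_dir)
    out_dir.mkdir(exist_ok=True, parents=True)
    model_path = out_dir / file_name
    model_path.write_bytes(model_bytes)
    return model_path


# Stand-in bytes so the sketch is self-contained. In practice this would be
# the tflite_model_quant object returned by converter.convert().
fake_model_bytes = b"\x00" * 1024

with tempfile.TemporaryDirectory() as tmp:
    path = save_tflite_model(fake_model_bytes, tmp, "model_quant.tflite")
    saved_size = path.stat().st_size  # file size in bytes, handy for comparing models
    print(path.name, saved_size)
```

The returned path is what you would then hand to the interpreter, and comparing st_size before and after quantization is a quick way to confirm the reduction.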

Rather than calling predict on our quantized model, we will run an inference.

The term inference refers to the process of executing a TensorFlow Lite model on-device in order to make predictions based on input data.

To perform an inference with a TensorFlow Lite model, we must run it through an interpreter. The TensorFlow Lite interpreter is designed to be lean and fast. The interpreter uses a static graph ordering and a custom (less-dynamic) memory allocator to ensure minimal load, initialization, and execution latency.

import numpy as np

# Load the TFLite model and allocate tensors.

interpreter = tf.lite.Interpreter(model_path=path_to_tflite_model)

interpreter.allocate_tensors()

# Get input and output tensors.

input_details = interpreter.get_input_details()

output_details = interpreter.get_output_details()

# Predict with the processed data.

input_shape = input_details[0]['shape']

input_data = np.array(x_3d_pca_processed, dtype=np.float32)

interpreter.set_tensor(input_details[0]['index'], input_data)

interpreter.invoke()

# Average the scores over axis 1, then take the index of the highest score.

prediction = interpreter.get_tensor(output_details[0]['index'])

outcome = np.argmax(prediction.mean(axis=1), axis=1)
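Since the goal is to beat the one-second sensor interval, it helps to time the inference call. Here is a minimal sketch of how that could be measured. fake_invoke is a hypothetical stand-in for interpreter.invoke(), and the helper names are my own.

```python
import time


def mean_latency_ms(run_once, repeats=20):
    """Average wall-clock time of run_once() in milliseconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        run_once()
    elapsed = time.perf_counter() - start
    return elapsed * 1000 / repeats


def fits_budget(latency_ms, budget_ms):
    """True when one prediction finishes within the sampling interval."""
    return latency_ms <= budget_ms


def fake_invoke():
    # Hypothetical stand-in for interpreter.invoke(); does trivial work.
    sum(range(1000))


measured = mean_latency_ms(fake_invoke)
print(f"{measured:.3f} ms per call")

# The 1760 ms figure quoted earlier, checked against a 1000 ms interval.
print(fits_budget(1760, 1000))
```

On the device, you would pass a function that sets the input tensor and calls interpreter.invoke(), then compare the result against the sensor interval.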

That’s it!

For a more in-depth explanation of these TFLite tools, click here.

For more on TFLite tools, click here.

With our original model as the baseline, we compared the following on our computer before implementing our model in our “embedded” device:

- Original Model

- Post-Quantized model

- Post-Pruned model

- Post-Quantized and Post-Pruned model