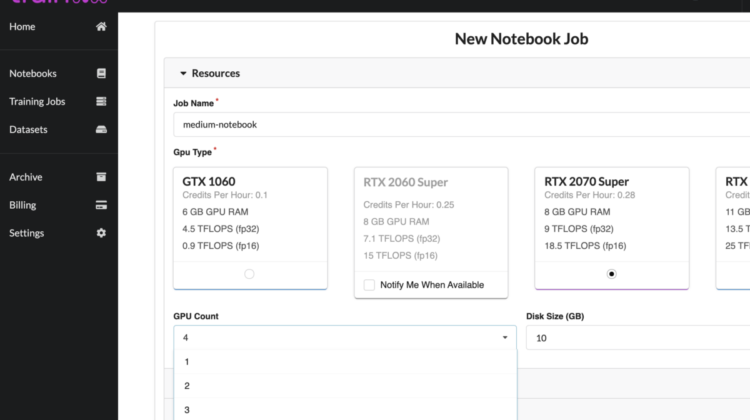

Access to up to 4 GPU’s in just one instance of a trainML notebook enables users to run iterative machine learning experiments, such as hyperparameter tuning jobs, in parallel with ease. This post demonstrates the steps I took to do so using my hyperopt notebook from the end of Part 3 of this series.

I introduced trainML in Part 2 of this series, but in summary, trainML is a cloud GPU service providing access to multiple GPUs for notebook ML model training. trainML uses JupyterLab for their multi-GPU notebook environment, and boasts a superior pricing model as well as access to more powerful GPUs (GeForce RTX 2080 Ti with 11GB memory as of writing this post) than competitors.

Motivation: hyperparameter tuning

As documented in Part 2 of this series, I used hyperopt for the Kaggle competition to build an optimal model architecture for the competition without needing to choose hyperparameters myself. The traditional ML engineer is required to pay close attention to these pretraining steps, but I managed to offload them by supplying hyperopt with a search space containing various ranges of hyperparameter values to try out. After running a series of trials, hyperopt returns the best values according to a specified cost function. I ran hyperopt exclusively on trainML GPUs in order to do all my hyperparameter tuning jobs external to the Kaggle environment.

The first time I ran hyperopt in a trainML notebook, I did not produce the best model architecture: it turned out that the hyperparameter search space that I specified was too narrow. Through trial and error, I expanded the value ranges for certain hyperparameters. I also added more hyperparameters to the search space such as # hidden layers for the neural net. These adjustments or “experiments” eventually gave me a model architecture that performed much better in the Kaggle competition.

trainML multi-GPU notebooks

Instead of sequentially running these experiments and waiting hours for each hyperopt run to complete before starting the next, I managed to run up to four experiments in parallel using trainML. This was made possible due to access to up to four GPUs in just one notebook instance within the trainML environment.

Here are the steps I took to prepare my code for parallel experiments on the four GPUs:

- Made 3 copies of my hyperopt notebook, as shown in the above screenshot.

- Made minor adjustments to the hyperopt config options in each notebook copy, such as changing search space definitions, exposing more/less model hyperparameters, and increasing/decreasing the number of trials per run.

- Allocated the PyTorch device in each notebook to a different GPU. The four GPU indices

cuda:0,cuda:1,cuda:2andcuda:3should each be reserved for only one notebook run. The following screenshots highlight the specific changes I made to each notebook copy:

Following this procedure enabled me to successfully run for copies of my code in parallel, significantly reducing the time (and cost) required for ML experimentation on GPUs. To confirm that all four GPUs were indeed being used, I called nvidia-smi in a Terminal window: