Therefore, we propose a cost modeling method that captures domain knowledge about how costly each feature is to manipulate. For Twitter spam detection, we used a high-dimensional box to capture how much an attacker can increase and decrease each feature, using four cost categories: negligible, low, medium, and high. This can be generalized to arbitrary constraint-based threat models, as described in our paper. Our cost model has the same expressiveness as the rule-based attack action model in TREANT. However, our approach directly maps each feature value to a perturbation range, which is easier to integrate into robust training.
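To make the box idea concrete, here is a minimal sketch of how cost categories could be turned into per-feature perturbation ranges. The feature names, the budget values, and the assumption that features are normalized to [0, 1] are all hypothetical and chosen only for illustration; they are not the settings from our paper.

```python
from typing import Dict, Tuple

# Hypothetical budgets: how far a feature (normalized to [0, 1]) may move
# for each cost category. Cheaper changes get a larger range.
BUDGET: Dict[str, float] = {
    "negligible": 1.0,
    "low": 0.2,
    "medium": 0.1,
    "high": 0.05,
}


def perturbation_box(
    x: Dict[str, float],
    inc_cost: Dict[str, str],
    dec_cost: Dict[str, str],
    budget: Dict[str, float] = BUDGET,
) -> Dict[str, Tuple[float, float]]:
    """Map each feature value to the [low, high] range the attacker can reach."""
    box = {}
    for name, value in x.items():
        # Increase and decrease are modeled separately, so the box can be asymmetric.
        lo = max(0.0, value - budget[dec_cost[name]])
        hi = min(1.0, value + budget[inc_cost[name]])
        box[name] = (lo, hi)
    return box


# Hypothetical data point with two made-up features.
x = {"num_followers": 0.5, "tweet_length": 0.3}
inc_cost = {"num_followers": "high", "tweet_length": "negligible"}
dec_cost = {"num_followers": "low", "tweet_length": "negligible"}
box = perturbation_box(x, inc_cost, dec_cost)
```

Here the made-up `num_followers` feature is expensive to increase but cheap to decrease, so its range is asymmetric around the original value, while `tweet_length` can move anywhere in [0, 1].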

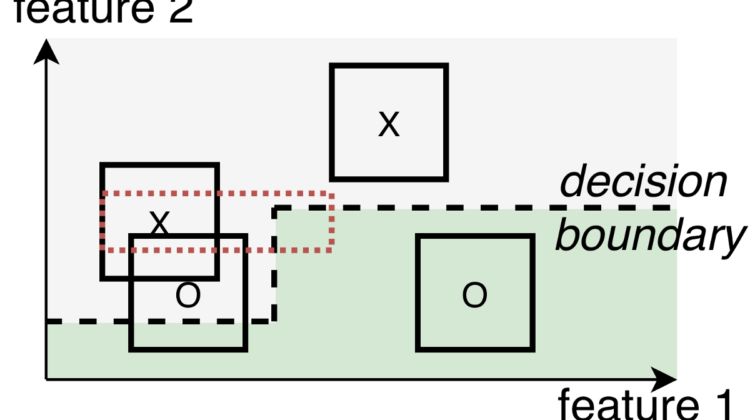

We designed a new training algorithm that is more effective at finding robust splits in trees than the state of the art. Briefly, when growing the trees, we evaluate the quality of each split xʲ < η using a gain metric such as information gain, Gini impurity, or loss reduction.
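As a reference point, the following sketch scores a split xʲ < η with Gini impurity reduction, ignoring the attacker entirely. It assumes binary 0/1 labels and plain Python lists; the function names are ours for illustration and do not come from scikit-learn or XGBoost.

```python
from typing import List, Sequence


def gini(labels: Sequence[int]) -> float:
    """Gini impurity of a set of binary (0/1) labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def split_gain(X: List[List[float]], y: List[int], j: int, eta: float) -> float:
    """Impurity reduction when splitting on feature j at threshold eta."""
    left = [label for row, label in zip(X, y) if row[j] < eta]
    right = [label for row, label in zip(X, y) if row[j] >= eta]
    n = len(y)
    weighted_children = len(left) / n * gini(left) + len(right) / n * gini(right)
    return gini(y) - weighted_children


# Toy data: one feature, two well-separated classes.
X = [[0.1], [0.2], [0.8], [0.9]]
y = [0, 0, 1, 1]
perfect = split_gain(X, y, j=0, eta=0.5)   # separates the classes
skewed = split_gain(X, y, j=0, eta=0.15)   # isolates a single point
```

On this toy data the threshold 0.5 separates the two classes completely, so it receives a higher gain than the threshold 0.15.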

but has an 83.3% accuracy.

The cost model tells us which data points can be perturbed to cross the split, which decreases the gain. We then need to find the best robust split, the one with the highest gain under attack. This is formulated as a max-min problem: we maximize, over candidate splits, the minimum gain the attacker can force within the allowed perturbations.
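The max-min idea can be illustrated by brute force on a tiny one-dimensional example. This sketch assumes a symmetric perturbation range `eps` for every point (a simplification of the cost model), binary labels, and Gini impurity reduction as the gain; it enumerates every way the attacker can place the uncertain points, which is only feasible for toy inputs and is not our training algorithm.

```python
from itertools import product
from typing import List, Sequence


def gini(labels: Sequence[int]) -> float:
    """Gini impurity of a set of binary (0/1) labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def gain(left: List[int], right: List[int]) -> float:
    """Impurity reduction for a given left/right partition of the labels."""
    n = len(left) + len(right)
    return gini(left + right) - len(left) / n * gini(left) - len(right) / n * gini(right)


def worst_case_gain(xs: List[float], ys: List[int], eta: float, eps: float) -> float:
    """Inner min: lowest gain the attacker can force for the split x < eta."""
    left = [y for x, y in zip(xs, ys) if x < eta - eps]
    right = [y for x, y in zip(xs, ys) if x >= eta + eps]
    # Points within eps of the threshold can be pushed to either side.
    uncertain = [y for x, y in zip(xs, ys) if eta - eps <= x < eta + eps]
    worst = float("inf")
    for sides in product((0, 1), repeat=len(uncertain)):
        l = left + [y for y, s in zip(uncertain, sides) if s == 0]
        r = right + [y for y, s in zip(uncertain, sides) if s == 1]
        worst = min(worst, gain(l, r))
    return worst


def best_robust_split(xs: List[float], ys: List[int],
                      candidates: List[float], eps: float) -> float:
    """Outer max: candidate threshold with the highest worst-case gain."""
    return max(candidates, key=lambda eta: worst_case_gain(xs, ys, eta, eps))


xs = [0.1, 0.25, 0.45, 0.55, 0.8, 0.9]
ys = [0, 0, 0, 1, 1, 1]
natural_best = best_robust_split(xs, ys, [0.3, 0.5], eps=0.0)
robust_best = best_robust_split(xs, ys, [0.3, 0.5], eps=0.1)
```

Without an attacker, the threshold 0.5 wins because it separates the classes. With `eps=0.1`, the two points next to 0.5 can be swapped across the split, so the threshold 0.3 has the better worst-case gain.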

Since it is intractable to enumerate all possible robust splits, we designed and implemented a greedy algorithm to find robust splits, which can be applied to different types of tree ensembles and different gain metrics. Our algorithm works with random forests in scikit-learn and GBDT in XGBoost. We have open-sourced our implementation here.

What if the attacker knows the cost model we use to train the robust Twitter spam classifier? Can we still improve the cost-aware robustness against adaptive attackers?

We proposed a new adaptive attack objective for the strongest whitebox attack (MILP) that directly targets the cost model used in training. The adaptive attacker minimizes the weighted sum of feature value differences. Each weight represents the unit cost (e.g., some dollar amount) for the attacker to increase or decrease the feature. If, during training, we allow a low-cost feature to be increased by twice as much as a medium-cost feature, we set the adaptive attacker's weights so that changing one unit of a medium-cost feature costs the same as changing two units of a low-cost feature.
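The weighted objective can be sketched as a small function. This only evaluates the cost of a given adversarial example; the actual attack minimizes this quantity inside a MILP solver, which is not shown. The weights below are illustrative assumptions that follow the two-to-one example above.

```python
from typing import Sequence


def attack_cost(
    x: Sequence[float],
    x_adv: Sequence[float],
    w_inc: Sequence[float],
    w_dec: Sequence[float],
) -> float:
    """Weighted sum of feature changes, with separate unit costs per direction."""
    total = 0.0
    for xi, xai, wi, wd in zip(x, x_adv, w_inc, w_dec):
        delta = xai - xi
        if delta > 0:
            total += wi * delta      # cost of increasing the feature
        else:
            total += wd * (-delta)   # cost of decreasing the feature
    return total


# Feature 0 is low cost, feature 1 is medium cost (illustrative weights).
w_inc = [1.0, 2.0]
w_dec = [1.0, 2.0]
x = [0.0, 0.0]
cost_low = attack_cost(x, [2.0, 0.0], w_inc, w_dec)     # two units of the low-cost feature
cost_medium = attack_cost(x, [0.0, 1.0], w_inc, w_dec)  # one unit of the medium-cost feature
```

With these weights, moving the low-cost feature by two units and moving the medium-cost feature by one unit cost the attacker the same amount.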

We extensively evaluated our cost-aware robust training algorithm by training 19 different configurations of the cost model and studying how much they increase the adaptive attack cost compared to baseline models. Our results show that, compared to regularly trained models, our robust models increase the adaptive attack cost needed to evade them by 10.6×.

Our paper “Cost-Aware Robust Tree Ensembles for Security Applications” will appear at USENIX Security 2021, and our code is available on GitHub.