Complete Article contain 3 Parts:

- Introduction to NLP

- Implementation of RNN and LSTM

- Sentiment Prediction RNN

Topics Covered:

NLP and Pipelines, Text Processing, Feature Extraction, Bag of Words, TF-IDF,one-Hot Encoding, Word Embeddings, Word2Vec, GloVE, Embedding for Deep Learning

NLP stands for Natural Language Processing, It is interactions between computers and human language, in particular, how to program computers to process and analyze large amounts of natural language data.

Common NLP Pipeline:

Text Processing → Feature Extraction → Modeling

Each stage transforms the text in some way and produces a result that the next stage needs.

Text Processing:

Sources of text: web, pdf, doc, voice, book scan using OCR, and many more.

Our goal is to extract the plain text that is free of any source-specific markers or constructs that is not relevant to our task.

Once we obtain plain text we can do some further processing that may be necessary, for instance, Capitalization doesn’t usually change the meaning of the word so we can convert all the words to the same Case. Punctuations can also be removed, some common words that often helps in building the structure but do not add to meaning like:” a, and, the, of, are …” can also be removed.

Types of text preprocessing techniques:

- Lowercasing:

example 1: India, INDIA, IndiA → india

example 2: Result, RESULT, resUlt →result - Stemming:

example 1:Connect,connected,connection → connect

example 2:trouble, troubled, troubles → troubl - Lemmatization:

example 1: trouble, troubled, troubles → trouble

example 2: goose, geese → goose - Normalization:

example 1: 2moro,2mrrw,2morrow → tomorrow

example 2:b4 →before

example 3: 🙂 →smile

Feature Extraction:

Feature Representation:

- Bag Of Words:

The bag of word model treats each document as an un-ordered collection or bag of words

To obtain a bag of words from the source text we need to apply appropriate text processing Steps like “Cleaning, normalizing, stemming, lemmatization, etc”.(some of them are discussed above in Text Processing).

example:

“The Court is in Session” → {“court”, “session”}

“Marry had a Little Lamb” →{“mari”, “littl”, “lamb”}

“Twinkle Twinkle Little Star” →{“twinkl”, “littl”, “star”}

A better way to represent is to turn each document into a vector of numbers representing the number of times a word occurs in a document.

A set of documents is known as Corpus. From corpus, we collect all unique words to form our Vocabulary and then form Document-Term Matrix.

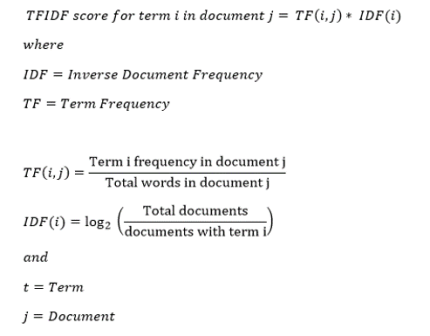

2. TF-IDF:

TF-IDF is a statistical measure that evaluates how relevant a word is to a document in a collection of documents.

This is done by multiplying two metrics: how many times a word appears in a document, and the inverse document frequency of the word across a set of documents.

3. One-Hot Encoding:

One hot encoding is a process by which categorical variables are converted into a form that could be provided to ML algorithms to do a better job in prediction.

4.Word Embedding:

One-hot encoding does not work well when we have a large vocabulary to deal with because the size representation grows with the number of words.

we need a way to control the size of our word representation by limiting it to a fixed size vector.

we want to find an embedding for each word in some vector space and we wanted to exhibit some desired properties.

for example: if two words are similar In meaning they should be closer to each other compared to words that are not. If two pairs of the word have a similar difference in their meaning they are approximately equally separated in embedded space.

This type of representation helps in finding synonyms and analogies, identifying concepts around which words are clustered classifying words as positive negative neutral, etc.