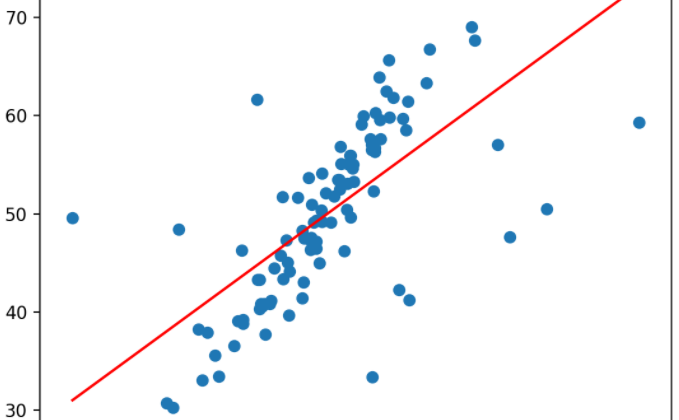

One of the simplest algorithms out there is linear regression, and it comes in handy when modeling a linear relationship between features and targets.

When implementing a learning algorithm, it is quite reasonable to define a cost (or risk) function. This turns the problem of finding the right coefficients to fit the regression hyperplane into an optimization problem.

A cost function that captures how far the predicted values deviate from the actual values can be defined as follows:
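A minimal sketch of this cost, assuming a linear hypothesis $h_\theta(x) = \theta^T x$ and $m$ training pairs $(x^{(i)}, y^{(i)})$ (notation assumed here):

$$J(\theta) = \frac{1}{2}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2$$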

If you are wondering why this cost function squares the error and divides by 2, the answer is simple: “math convenience”.

Let me clarify why that is:

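When we correct the error, it is desirable to penalize values that are far from the actual value more heavily than values that are close to it, and squaring does exactly that. Another good reason is that squaring never produces negative losses, so errors above and below the actual value cannot cancel each other out. Dividing by 2, in turn, cancels the factor of 2 that appears when we differentiate the square.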
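A worked derivative makes the convenience concrete. For a single example, assuming a linear hypothesis $h_\theta(x) = \theta^T x$, the 2 brought down by the chain rule cancels the $\frac{1}{2}$:

$$\frac{\partial}{\partial \theta_j}\,\frac{1}{2}\left(h_\theta(x) - y\right)^2 = \left(h_\theta(x) - y\right)\frac{\partial}{\partial \theta_j}\left(\theta^T x - y\right) = \left(h_\theta(x) - y\right)x_j$$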

Now, if we are interested in a closed-form solution, we can find the minimum of the cost function directly by setting its gradient to zero:
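A minimal NumPy sketch of that closed-form solution, assuming the standard normal equation $\theta = (X^T X)^{-1} X^T y$ for a design matrix $X$ whose rows are the training inputs (the function name `fit_normal_equation` is hypothetical). It calls `np.linalg.solve` rather than forming the inverse explicitly, which is cheaper and numerically more stable:

```python
import numpy as np

def fit_normal_equation(X, y):
    """Closed-form least squares: solve (X^T X) theta = X^T y.

    X is an (m, n) design matrix (add a column of ones for an intercept),
    y is an (m,) target vector.
    """
    return np.linalg.solve(X.T @ X, X.T @ y)

# Usage: noiseless data generated by y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0])
X = np.column_stack([np.ones_like(x), x])  # intercept column + feature
y = 1.0 + 2.0 * x
theta = fit_normal_equation(X, y)
print(theta)
```

On this noiseless toy data the fitted theta should come out very close to the generating coefficients [1, 2].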

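But… we can often do better computationally if, instead of a closed-form solution, we implement a learning algorithm: the closed form requires solving a linear system whose size equals the number of features, which gets expensive (roughly cubic cost) as the number of features grows.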

The algorithm is quite simple:
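In symbols, a sketch of the standard gradient descent step, assuming the cost $J(\theta)$ from above and a step size $\alpha$, repeated until convergence and applied to every $\theta_j$ simultaneously:

$$\theta_j := \theta_j - \alpha\,\frac{\partial}{\partial \theta_j}J(\theta)$$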

Here, the coefficient that scales each step (usually written α) is the learning rate.

And finally:
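Assuming a linear hypothesis $h_\theta(x) = \theta^T x$ and processing one training example $(x^{(i)}, y^{(i)})$ at a time (the stochastic variant, often called the LMS rule), substituting the single-example derivative into the gradient step gives one common form of the update:

$$\theta_j := \theta_j + \alpha\left(y^{(i)} - h_\theta(x^{(i)})\right)x_j^{(i)}$$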

So, with every iteration of the algorithm, each training point nudges the parameters toward the minimum of the cost function. Wonderful, right? Well… not quite.
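The per-point update can be sketched in NumPy as follows. This is an illustration under assumptions, not the author's code: `sgd_linear_regression` is a hypothetical name, the points are visited in a shuffled order each epoch, and the learning rate and epoch count are arbitrary values that work for this small noiseless example.

```python
import numpy as np

def sgd_linear_regression(X, y, alpha=0.05, epochs=200, seed=0):
    """Stochastic gradient descent with the per-example update
    theta_j := theta_j + alpha * (y_i - h(x_i)) * x_ij.

    X: (m, n) design matrix (include a column of ones for an intercept).
    alpha, epochs and seed are illustrative defaults, not tuned values.
    """
    rng = np.random.default_rng(seed)
    m, n = X.shape
    theta = np.zeros(n)
    for _ in range(epochs):
        for i in rng.permutation(m):       # visit points in a random order
            error = y[i] - X[i] @ theta    # y_i - h_theta(x_i)
            theta += alpha * error * X[i]  # every theta_j nudged at once
    return theta

# Usage: 20 noiseless points on the line y = 1 + 2x.
x = np.linspace(0.0, 1.0, 20)
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x
theta = sgd_linear_regression(X, y)
print(theta)
```

With a small enough learning rate, the estimate settles close to [1, 2] here; on noisy data a constant learning rate leaves it fluctuating slightly around the minimum instead.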

If you have been in the machine learning world for some time, chances are you have encountered the term “overfitting”, which, together with “underfitting”, plagues the whole field. The problem is simple: if our model fits the training data too well, it loses the ability to generalize, and chances are it will do poorly on the test data. That is overfitting.

To avoid this, we can add a regularization term to the cost function, so the modified version becomes:
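One common way to write the modified cost, assuming a linear hypothesis $h_\theta$, $m$ training examples, a regularization strength $\lambda \ge 0$ that scales a squared penalty on the coefficients, and an intercept $\theta_0$ left unpenalized (a frequent convention, not the only one):

$$J(\theta) = \frac{1}{2}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \frac{\lambda}{2}\sum_{j=1}^{n}\theta_j^2$$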

This type of regularization is called ridge. Its objective is to shrink the thetas toward 0, so a theta only grows large when the data provide strong evidence for it.
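To see the shrinkage numerically, here is a small sketch using the closed-form ridge solution $\theta = (X^T X + \lambda I)^{-1} X^T y$, which minimizes the squared-error cost plus a $\frac{\lambda}{2}\sum_j \theta_j^2$ penalty. Assumptions: synthetic data from a linear model with no intercept (so there is no intercept column and every coefficient is penalized), and a hypothetical helper `fit_ridge`. As $\lambda$ grows, the norm of theta should shrink toward 0.

```python
import numpy as np

def fit_ridge(X, y, lam):
    """Solve (X^T X + lam * I) theta = X^T y, the minimiser of
    1/2 * ||X theta - y||^2 + lam/2 * ||theta||^2 (no intercept term)."""
    n = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n), X.T @ y)

# Synthetic data: 50 samples, 3 standard-normal features, small Gaussian noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
true_theta = np.array([3.0, -2.0, 0.5])
y = X @ true_theta + 0.1 * rng.normal(size=50)

norms = []
for lam in [0.0, 10.0, 100.0, 1000.0]:
    theta = fit_ridge(X, y, lam)
    norms.append(float(np.linalg.norm(theta)))
    print(f"lambda={lam:>6g}  theta={np.round(theta, 3)}  ||theta||={norms[-1]:.3f}")
```

With `lam=0.0` this reduces to ordinary least squares, so the first row should sit close to `true_theta`.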

If we apply the same reasoning to find the new update rule for theta, we differentiate the regularized cost with respect to each coefficient and plug the result into the gradient descent step.
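Assuming a linear hypothesis $h_\theta$ and, as in the unregularized case, a step that uses one training example's squared error plus the penalty (the per-example form commonly used for stochastic updates), the partial derivative for a penalized coefficient $\theta_j$ is:

$$\frac{\partial}{\partial \theta_j}\left[\frac{1}{2}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \frac{\lambda}{2}\sum_{k=1}^{n}\theta_k^2\right] = \left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)} + \lambda\,\theta_j$$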

So we arrive at the update rule for theta:
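Plugging the regularized per-example derivative $\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)} + \lambda\theta_j$ into the gradient step with learning rate $\alpha$ and collecting the $\theta_j$ terms gives one common form of the update (notation assumed; it applies to penalized coefficients):

$$\theta_j := \theta_j - \alpha\left[\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)} + \lambda\,\theta_j\right] = (1 - \alpha\lambda)\,\theta_j + \alpha\left(y^{(i)} - h_\theta(x^{(i)})\right)x_j^{(i)}$$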

Note that the second part of the expression is exactly the same as before, but now there is an extra term that shrinks theta at every step. Great!
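A NumPy sketch of this update, assuming no intercept column (so every coefficient is multiplied by $1 - \alpha\lambda$ at each step) and using a hypothetical `sgd_ridge` helper with illustrative hyperparameters. Because the penalty is applied on every per-example step, its effect corresponds to a penalty of roughly $m\lambda$ on the full cost, so even a modest `lam` visibly shrinks the coefficients:

```python
import numpy as np

def sgd_ridge(X, y, alpha=0.01, lam=0.1, epochs=200, seed=0):
    """Per-example ridge update:
    theta_j := (1 - alpha * lam) * theta_j + alpha * (y_i - h(x_i)) * x_ij
    Assumes no intercept column; defaults are illustrative, not tuned."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    theta = np.zeros(n)
    for _ in range(epochs):
        for i in rng.permutation(m):
            error = y[i] - X[i] @ theta
            # Decay term shrinks theta toward 0; second term is the plain LMS step.
            theta = (1 - alpha * lam) * theta + alpha * error * X[i]
    return theta

# Synthetic data from a known linear model with small noise.
rng = np.random.default_rng(1)
X = rng.normal(size=(50, 3))
y = X @ np.array([3.0, -2.0, 0.5]) + 0.1 * rng.normal(size=50)

theta_plain = sgd_ridge(X, y, lam=0.0)  # reduces to the unregularised rule
theta_ridge = sgd_ridge(X, y, lam=1.0)
print(np.round(theta_plain, 2), np.round(theta_ridge, 2))
print(np.linalg.norm(theta_ridge), np.linalg.norm(theta_plain))
```

Setting `lam=0.0` recovers the unregularized per-point rule, which makes the comparison a direct view of what the decay factor does.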

Conclusion: adding a regularization term is a good idea when we want to maximize our model's ability to generalize!

But be careful! Too large a regularization term forces the coefficients toward 0, so the model underfits and performs poorly on both the training and test sets.
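A small sketch of this effect, assuming synthetic data with a known linear relationship, a hypothetical `fit_ridge` helper based on the closed-form ridge solution $(X^T X + \lambda I)^{-1} X^T y$ (no intercept), and arbitrary $\lambda$ values. With a huge $\lambda$ the coefficients are pushed toward 0, and both errors grow:

```python
import numpy as np

def fit_ridge(X, y, lam):
    """Closed-form ridge fit without an intercept term."""
    n = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n), X.T @ y)

def mse(X, y, theta):
    """Mean squared error of the linear model X @ theta."""
    return float(np.mean((X @ theta - y) ** 2))

# Synthetic train/test sets drawn from the same linear model plus noise.
rng = np.random.default_rng(0)
true_theta = np.array([3.0, -2.0, 0.5])
X_train, X_test = rng.normal(size=(50, 3)), rng.normal(size=(50, 3))
y_train = X_train @ true_theta + 0.1 * rng.normal(size=50)
y_test = X_test @ true_theta + 0.1 * rng.normal(size=50)

errors = {}
for lam in [0.1, 100.0, 10000.0]:
    theta = fit_ridge(X_train, y_train, lam)
    errors[lam] = (mse(X_train, y_train, theta), mse(X_test, y_test, theta))
    print(f"lambda={lam:g}  train MSE={errors[lam][0]:.3f}  test MSE={errors[lam][1]:.3f}")
```

The training error can only grow as $\lambda$ increases, since the penalty pulls the fit away from the least-squares optimum; here the underlying relationship is genuinely linear, so heavy shrinkage hurts the test error too.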

I hope this article helps you get a deeper understanding of linear regression and regularization.

Note: there are other types of regularization, such as Lasso.
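For comparison, one common way to write the Lasso cost, assuming a linear hypothesis $h_\theta$, $m$ training examples and a strength $\lambda \ge 0$, replaces the squared penalty with an absolute-value (L1) penalty, which, unlike ridge, can drive some $\theta_j$ exactly to 0:

$$J(\theta) = \frac{1}{2}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \lambda\sum_{j=1}^{n}\lvert\theta_j\rvert$$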

Note: these ideas are very powerful and are used in many other algorithms.

Please comment if you have any topic for us to debate =)